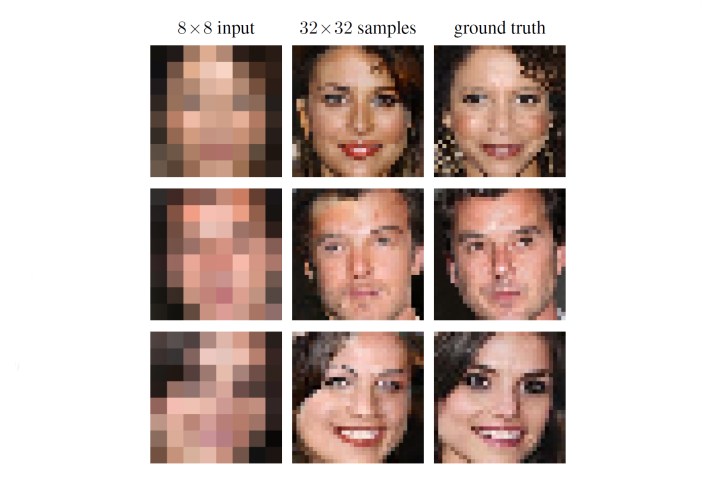

The new technology essentially uses a pair of neural networks that are fed an 8 x 8-pixel image and then generate an approximation of what they think the original image looked like. The results? Well, they aren’t perfect, but they are pretty close.

To be clear, the neural networks don’t magically enhance the original image. Rather, they use machine learning to figure out what the original could have looked like. So, using the example of a face, the generated image may not look exactly like the real person; instead, it may show a fictional character that represents the computer’s best guess. In other words, law enforcement may not yet be able to use this technology to produce an image of a suspect from a blurry reflection in a photo of a number plate, but it may help the police get a pretty good guess at what a suspect looks like.

As mentioned, two neural networks are involved in the process. The first, called the “conditioning network,” maps the pixels of the 8 x 8-pixel image onto a similar-looking but higher-resolution image. That image serves as a rough skeleton for the second neural network, the “prior network,” which adds detail by drawing on existing images that have similar pixel maps. The two networks’ outputs are then combined into one final image, and the results are pretty impressive.
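For readers who want a feel for the two-step idea, here is a toy sketch in plain Python. It is not the researchers’ code. The function names are made up for illustration, nearest-neighbour upscaling merely stands in for the conditioning network, and the combining step assumes that each network produces a score for every candidate pixel value and that the scores are added before being turned into probabilities, a detail the description above does not spell out.

```python
import math


def upscale_nearest(image, factor):
    """Stand-in for the conditioning step: repeat every pixel
    factor x factor times to get a blocky, higher-resolution skeleton."""
    return [
        [row[x // factor] for x in range(len(row) * factor)]
        for row in image
        for _ in range(factor)
    ]


def softmax(scores):
    """Turn raw scores into probabilities that sum to 1."""
    peak = max(scores)  # subtract the peak for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def combine(cond_scores, prior_scores):
    """Assumed combining rule: add the two networks' scores for each
    candidate pixel value, then normalise with softmax."""
    return softmax([c + p for c, p in zip(cond_scores, prior_scores)])


# A 2 x 2 "low-resolution" image, upscaled to a 4 x 4 skeleton.
tiny = [[0, 255], [255, 0]]
skeleton = upscale_nearest(tiny, 2)

# Hypothetical scores for one output pixel over three candidate values.
candidates = [0, 128, 255]
cond_scores = [0.0, 2.0, 1.0]   # what the low-res input suggests
prior_scores = [0.0, 0.5, 2.0]  # what typical images suggest
probs = combine(cond_scores, prior_scores)
best_value = candidates[probs.index(max(probs))]
print(skeleton, best_value)
```

In this toy case the low-res input alone would favor the middle value, but the prior’s preference tips the final guess toward the brightest candidate, which loosely mirrors how the second network fills in plausible detail.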

It is likely we will see more and more tech related to image processing in the future. In fact, artificial intelligence is getting pretty good at generating images, and Google and Twitter have both put a lot of research into image enhancement. At this rate, maybe crime-show tech will one day become reality.