In recent months, Nvidia has pushed itself as a company at the forefront of ‘edge’ AI development, which concerns local algorithmic processing and analytics. Nvidia considers itself uniquely positioned to be a pioneer of that burgeoning technology space, and the provider for much of its future hardware, thanks to its consumer graphics developments.

To find out more about Nvidia’s vision for the future, we spoke with the company’s vice president of sales and marketing in Europe, Serge Palaric. He talked us through the green team’s plan for a future that ranges from traditional gaming hardware to cutting-edge AI development.

Bringing AI to the user

Despite the added pressure from AMD’s upcoming Vega graphics cards, Nvidia is not looking to narrow its approach. “All technology is linked to deep learning and AI,” Palaric said.

Nvidia made its commitment to AI clear with the recent announcement of its second-generation Jetson TX hardware, a localized AI processing platform that Nvidia thinks will popularize the idea of what it calls “inference at the edge.” The goal is to provide the parallel computing ability needed to make complicated algorithmic choices on the fly, without cloud connectivity.

Many contemporary technologies, from autonomous cars to in-home audio assistants like Amazon’s Echo, use AI to provide information or make complicated decisions. However, they require a connection to the cloud, and that means added latency, as well as reliance on mobile data. Nvidia wants to bring equivalent processing power to the local level, using the parallel processing capabilities of its powerful graphics chips.

“You have to have a powerful system with adequate software to handle the learning part of the equation,” Palaric said. “[That’s why] we launched the TX1. It was the first device that could handle that sort of learning [locally].”

Combining a powerful graphics processor with multiple processor cores and as much as 8GB of memory, the Jetson TX1 and its sequel, the TX2, are Nvidia’s front-line assault on the local AI supercomputing market. They offer powerful computing on a single PCB, meaning that no data needs to be sent remotely to be processed.

The TX2 was only recently announced, but represents a massive improvement over the TX1. Built to the same physical dimensions as its predecessor, it offers twice the performance and efficiency, thanks to the introduction of a secondary processor core, a new graphics chip, and double the memory.

More importantly, each Jetson board measures just five inches in length and width, so it can fit in most smart hardware.

“Say you are building a smart camera and using facial recognition software, and you want to have an answer right away,” Palaric said. “Having a part of the deep learning inference inside the camera is the solution. You can download the dataset inside your TX1 or now TX2, and you don’t have to wait to get an answer from the datacenter. We are talking a few milliseconds, but having the AI at the edge helps make things happen immediately.”

Nvidia’s embedded ecosystem and PR manager for the EMEA region, Pierre-Antoine Beaudoin, expanded on Palaric’s point. “In something like voice recognition for searching on YouTube, it makes sense to send the voice through the cloud, because the action of pushing the video through search will happen on the cloud,” he said. “When it comes to a drone or self-driving cars though, it’s really better to have the action taken by the AI locally because of the reduction in latency.”

Graphics-powered vehicles

Autonomous driving is a major avenue of interest for Nvidia, and several of its new hardware packages are designed specifically with it in mind. While many of the world’s auto manufacturers and technology companies are developing their own driverless car solutions, Nvidia wants to be the hardware provider of choice, and Palaric believes it’s in position to fill that role.

Although a different platform than Jetson, Nvidia’s autonomous car-focused Drive PX 2 is still built on the foundation of a Tegra processor, the same kind found in Jetson hardware and many consumer devices, like the Nintendo Switch and Nvidia’s own Shield tablet. The use of Tegra in all these devices demonstrates how deeply the green team’s consumer, enterprise, and research interests are linked.

“With the Drive PX 2, we have a platform that we can provide to tier one OEMs. If you look at what we showed off at CES this year, we demonstrated autonomous driving with only a single camera,” Palaric said.

While it seems likely that multiple cameras and sensors will be used by the first generation of truly autonomous vehicles, masses of computing power will be required to process all that information, and Nvidia wants to provide the hardware to make it happen locally.

The Drive PX 2 is designed, Palaric said, to show off the capabilities of its planned Xavier platform. That system will have all the power of the PX 2 in one chip. By giving an idea of what the hardware capabilities will be so far in advance, Nvidia hopes to make it easier for car manufacturers to adjust their designs accordingly. Nvidia is doing the same with its Jetson TX2, which has exactly the same physical dimensions as the TX1. That makes it much easier for hardware manufacturers to plan their designs around known capabilities and form factors.

As Palaric highlighted, the auto industry tends to have long development times of four years or more for new vehicles. Having an evolving but predictable platform like Drive PX 2, and eventually Xavier, means that manufacturers can plan for advances, rather than build old technology into the vehicles.

“This is where we are different,” Palaric said. “If you look at most modern cars, they have many different devices that do one task. We are trying to bring one platform that will be able to handle all of the new deep learning tasks, as well as replace all black box and infotainment hardware inside the car.”

“We are also providing a ton of software to help the car manufacturers to develop their own software based on our hardware platform.”

Once again, Beaudoin had a concise way to encapsulate the problem Nvidia is trying to solve with its hardware. “For the past 25 years we have been focusing our efforts on simulating the real world in a computer. Now, what we’re trying to do is make computers understand the real world without simulations.”

Consumer graphics ‘drives’ it all

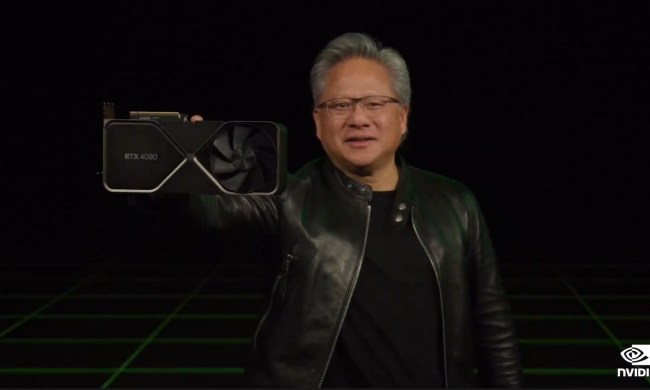

If this conversation has left you concerned that Nvidia may be doing all the above at the expense of its graphics card developments, don’t worry. Palaric made it very clear these advances don’t come at the expense of future graphics improvements. While the desktop PC industry has been contracting every year, gaming has continued to grow, and remains the foundation of the company.

“Our consumer business is growing a lot,” Palaric said. Further, it’s Nvidia’s GPU advancements that drive everything else. “We use the same GPU architecture in consumer, in automotive, and other industries.”

“When we launched Pascal in July 2016, seven months later we could include Pascal architecture in TX2, so the difference between what we are doing at the consumer level and at the extreme in other markets, is lessening all the time.”

We did press Palaric on when we might expect to see the much-promised HBM memory show up in Nvidia graphics cards. Unfortunately, he wouldn’t say much about the next generation of consumer graphics hardware, but did tell us that we would “know soon.”

When asked about the difficulties Intel has faced developing 10nm parts, and whether Nvidia may run into similar problems as it approaches that die size, he didn’t seem fazed.

“Intel has a challenge with 10nm,” he said, and suggested it would likely be one faced by most typical manufacturers. However, it’s not something he’s too concerned with. While he wouldn’t go into any detail about Nvidia’s plans for future GPU generations, he did say that few people were concerned about the size of Nvidia’s next die.

“Nobody is asking me what size our next GPU will be. They’re asking how they can use our technology to solve their problem,” he said.

Today’s graphics will be tomorrow’s AI

“We are at the start of deep learning and AI,” Palaric said. “It’s moving from universities and research centers into the enterprise world, and that is why we are putting a lot of energy into figuring out what technology people need to achieve their goals.”

In a move that seems likely to benefit us all, whether hardware enthusiast or future autonomous technology user, Nvidia plans to continue driving commercial graphics development to improve performance and efficiency, leading to more advanced local algorithmic processing in the future.

“The more powerful GPU we have, the more powerful things we can do in the consumer space, and that technology leads us to new innovations in deep learning and AI,” Palaric said.

Just as gaming is helping pave the way for the future of virtual reality, it may provide the hardware needed to make local AI possible, too. The next time you’re fretting over an insatiable love of graphics cards, remember that seeking maximum framerates in Fallout 4 also means seeking more capable automated cars, and smarter AI. It’s the same technology under the hood, after all.