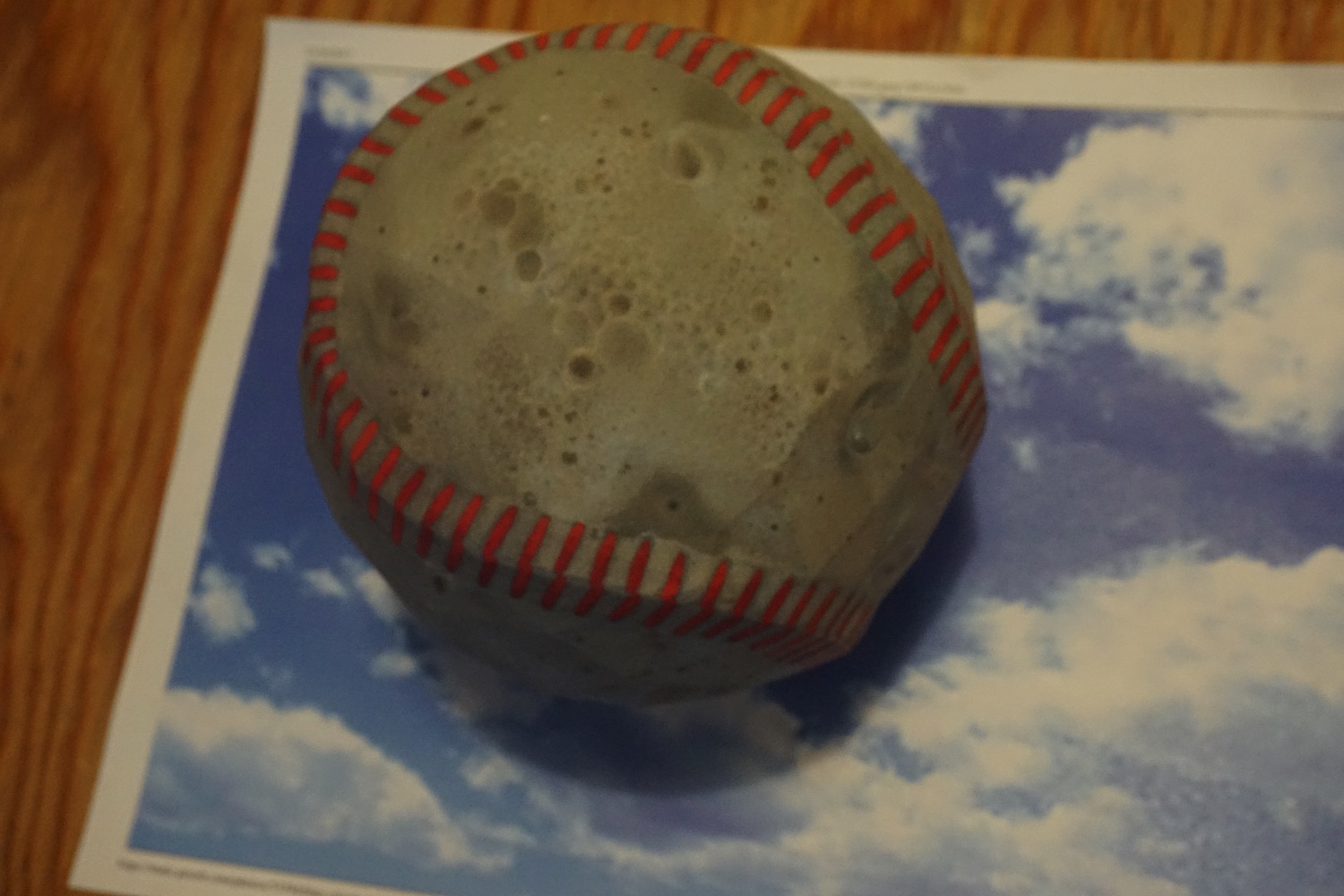

In their paper, the team of MIT researchers describes an algorithm that changes the texture of an object just enough to fool image classification algorithms. The resulting objects, which the team calls “adversarial examples,” baffle image recognition systems regardless of the angle from which they are viewed. A 3D-printed turtle, for example, is consistently identified as a rifle. That’s bad news for security systems that use AI to spot potential threats.

“It’s actually not just that they’re avoiding correct categorization — they’re classified as a chosen adversarial class, so we could have turned them into anything else if we had wanted to,” researcher Anish Athalye told Digital Trends. “The rifle and espresso classes were chosen uniformly at random. The adversarial examples were produced using an algorithm called Expectation Over Transformation (EOT), which is presented in our research paper. The algorithm takes in any textured 3D model, such as a turtle, and finds a way to subtly change the texture such that it confuses a given neural network into thinking the turtle is any chosen target class.”
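For the technically curious, the core idea behind EOT can be sketched in a few lines of Python. This is a toy illustration built on loudly simplifying assumptions, not the researchers’ code. A random linear softmax classifier stands in for the neural network, and a flat vector of 100 values stands in for the turtle’s texture. Random brightness scaling plus Gaussian noise stands in for changes in viewpoint and lighting, and a simple per-value bound (`eps`) stands in for “subtly.” The step that makes it EOT is that every update averages the gradient over many randomly sampled transformations, so the perturbation targets the chosen class across the whole family of views rather than a single one. The names `eot_attack`, `random_transform` and `target_rate` are hypothetical.

```python
import numpy as np


def softmax(z):
    """Numerically stable softmax over a 1-D array of logits."""
    e = np.exp(z - z.max())
    return e / e.sum()


def random_transform(x, rng):
    """Assumed transformation family: random brightness scale plus Gaussian noise.

    Returns the transformed input and the scale factor (needed for the gradient).
    """
    scale = rng.uniform(0.8, 1.2)
    noise = rng.normal(0.0, 0.1, size=x.shape)
    return scale * x + noise, scale


def eot_attack(x, W, target, eps=0.4, step=0.01, iters=200, batch=16, seed=0):
    """Toy EOT: maximise the average of log p(target | t(x_adv)) over random t.

    The classifier is linear softmax with logits W @ t(x); x_adv stays within
    eps of x in every coordinate and within the [0, 1] value range.
    """
    rng = np.random.default_rng(seed)
    onehot = np.eye(W.shape[0])[target]
    x_adv = x.copy()
    for _ in range(iters):
        grad = np.zeros_like(x)
        for _ in range(batch):
            tx, scale = random_transform(x_adv, rng)
            p = softmax(W @ tx)
            # For t(x) = scale * x + noise: d log p_target / dx = scale * W^T (onehot - p)
            grad += scale * (W.T @ (onehot - p))
        # Signed gradient ascent on the Monte Carlo estimate of the expectation
        x_adv = x_adv + step * np.sign(grad)
        x_adv = np.clip(x_adv, x - eps, x + eps)  # keep the change "subtle"
        x_adv = np.clip(x_adv, 0.0, 1.0)          # keep values in a valid range
    return x_adv


def target_rate(x, W, target, n=500, seed=1):
    """Fraction of sampled transformations under which x is classified as target."""
    rng = np.random.default_rng(seed)
    hits = sum(int(np.argmax(W @ random_transform(x, rng)[0]) == target) for _ in range(n))
    return hits / n


rng = np.random.default_rng(42)
W = rng.normal(size=(5, 100))    # stand-in classifier: 5 classes, 100 inputs
x = np.full(100, 0.5)            # stand-in texture
original = int(np.argmax(W @ x))
target = (original + 1) % 5      # any class other than the clean prediction
x_adv = eot_attack(x, W, target)
before = target_rate(x, W, target)
after = target_rate(x_adv, W, target)
print(f"target rate before: {before:.2f}, after: {after:.2f}")
```

In this toy setting, dropping the inner averaging loop and optimizing against one fixed transformation would give an ordinary single-view adversarial example. Averaging over sampled transformations is what pushes the perturbation to hold up as the simulated viewing conditions change.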

While it might be funny to have a 3D-printed turtle recognized as a rifle, the researchers point out that the implications are pretty darn terrifying. Imagine, for instance, a security system that uses AI to flag guns or bombs but can be tricked into seeing them as tomatoes or cups of coffee, or into missing them entirely. It also underlines the fragility of the kind of image recognition systems that self-driving cars will rely on, at high speed, to make sense of the world around them.

“Our work demonstrates that adversarial examples are a bigger problem than many people previously thought, and it shows that adversarial examples for neural networks are a real concern in the physical world,” Athalye continued. “This problem is not just an intellectual curiosity: It is a problem that needs to be solved in order for practical systems that use deep learning to be safe from attack.”