Replacing the background on a video typically requires advanced desktop software and plenty of free time, or a full-fledged studio with a green screen — but Google is developing an artificial intelligence alternative that works in real time, from a smartphone camera. On Thursday, March 1, Google shared the tech behind a new feature that’s now being beta tested on the YouTube Stories app.

Google’s video segmentation technique started out like most A.I.-based imaging programs: with people marking the background by hand on more than 10,000 images. With that data set, the group trained the program to separate the background from the foreground. (Adobe has a similar background removal tool now inside Photoshop for still images.)

That data set, however, consisted of single images, so how did Google go from a single image to replacing the background on every frame of video? Once the software masks out the background on the first frame, the program uses that same mask to predict the background in the next frame. When that next frame differs only slightly from the first, such as slight movement from the person talking on camera, the program makes small adjustments to the mask. When the next frame is much different from the last, for example if another person joins the video, the software discards that mask prediction entirely and creates a new mask.
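The frame-to-frame logic described above can be sketched in a few lines of Python. This is only an illustration, not Google's code: the `segment` callable stands in for the trained model, and `mean_abs_diff` and the `reset_threshold` value are hypothetical choices made for the example.

```python
from typing import Callable, List, Optional

Frame = List[List[float]]  # grayscale pixels in [0, 1]
Mask = List[List[float]]   # 1.0 = foreground, 0.0 = background


def mean_abs_diff(a: Frame, b: Frame) -> float:
    """Average per-pixel change between two equally sized frames."""
    total = sum(abs(x - y) for row_a, row_b in zip(a, b) for x, y in zip(row_a, row_b))
    count = sum(len(row) for row in a)
    return total / count


def segment_video(
    frames: List[Frame],
    segment: Callable[[Frame, Optional[Mask]], Mask],
    reset_threshold: float = 0.25,  # assumed value, not a figure from Google
) -> List[Mask]:
    """Segment each frame, reusing the previous mask as a hint unless the scene changed a lot."""
    masks: List[Mask] = []
    prev_frame: Optional[Frame] = None
    prev_mask: Optional[Mask] = None
    for frame in frames:
        if prev_frame is not None and mean_abs_diff(prev_frame, frame) > reset_threshold:
            prev_mask = None  # large change: discard the earlier mask and start fresh
        mask = segment(frame, prev_mask)  # hypothetical model call
        masks.append(mask)
        prev_frame, prev_mask = frame, mask
    return masks
```

In a real system the model itself would likely learn when to trust the earlier mask; the explicit threshold here just makes the keep-or-discard decision visible.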

While the ability to separate the background is impressive by itself, Google wanted to go one step further and make that program run on the more limited hardware of a smartphone rather than a desktop computer. The programmers behind the video segmentation then made several adjustments to the program to improve speed, including segmentation, downsampling, and reducing the number of channels. The team then worked to enhance quality by adding layers to create smoother edges between the foreground and background.
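To see why downsampling and trimming channels help on a phone, consider a rough cost estimate for one convolution layer. The sketch below is a back-of-the-envelope illustration: the layer sizes are made up, and `conv_macs` and `downsample` are hypothetical helpers rather than anything from Google's network.

```python
def conv_macs(height, width, in_channels, out_channels, kernel=3):
    """Multiply-accumulate operations for one standard convolution layer."""
    return height * width * in_channels * out_channels * kernel * kernel


def downsample(image, factor):
    """Shrink a 2D image by averaging each factor-by-factor block of pixels."""
    height, width = len(image), len(image[0])
    out = []
    for r in range(0, height - height % factor, factor):
        row = []
        for c in range(0, width - width % factor, factor):
            block = [image[r + dr][c + dc] for dr in range(factor) for dc in range(factor)]
            row.append(sum(block) / len(block))
        out.append(row)
    return out


# Illustrative sizes only: halving the resolution and halving the channels
# each cut the work by a factor of four, sixteen in total.
full = conv_macs(256, 256, 32, 32)
slim = conv_macs(128, 128, 16, 16)
speedup = full // slim
```

The trade-off is lost detail along edges, which is consistent with the team adding layers afterward to smooth the boundary between foreground and background.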

Google says those changes allow the app to replace the background in real time, applying changes at over 100 fps on an iPhone 7 and over 40 fps on the Google Pixel 2. The trained model, Google says, had a 94.8 percent accuracy rating. All of the examples Google shared are videos of a person; the company didn’t say if the feature works on objects.
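Segmentation accuracy is commonly scored with intersection-over-union (IoU): the overlap between the predicted mask and the hand-marked one, divided by their combined area. Assuming that is the kind of metric behind the accuracy figure, a minimal version looks like this (the `iou` helper and the tiny masks are illustrative only):

```python
def iou(predicted, truth):
    """Intersection-over-union of two binary masks given as lists of rows."""
    intersection = 0
    union = 0
    for pred_row, truth_row in zip(predicted, truth):
        for p, t in zip(pred_row, truth_row):
            if p and t:
                intersection += 1
            if p or t:
                union += 1
    # Two empty masks agree perfectly, so treat that case as a score of 1.0.
    return intersection / union if union else 1.0


predicted = [[1, 1], [0, 0]]
truth = [[1, 0], [0, 0]]
score = iou(predicted, truth)  # one shared pixel out of two marked pixels
```

A score of 1.0 means the two masks match pixel for pixel, while 0.0 means they do not overlap at all.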

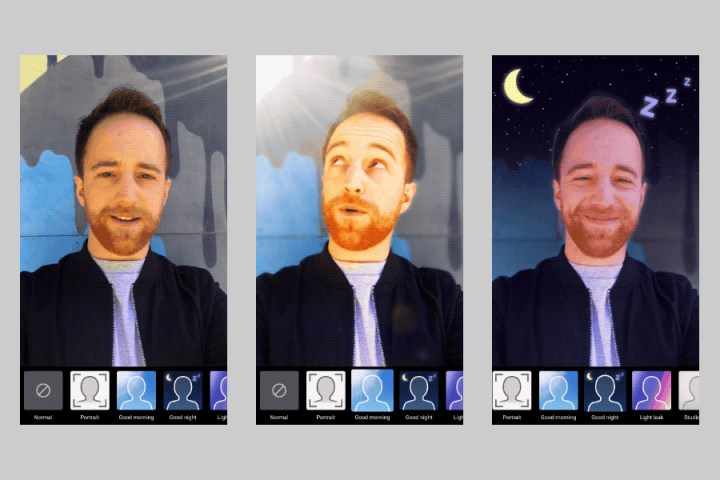

In the beta test, YouTubers can change the video's background by choosing among different effects, from a night scene to a blank background in black and white. Some of the sample effects even added lighting touches, like a lens flare in one corner.
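Once a mask exists, swapping in a new background is a per-pixel blend: keep the original pixel where the mask says foreground, and use the replacement image elsewhere. The sketch below assumes grayscale frames and a soft mask with values between 0 and 1; the `composite` function is a hypothetical helper, not part of YouTube's app.

```python
def composite(frame, mask, background):
    """Blend frame over background, weighting each pixel by the foreground mask."""
    result = []
    for frame_row, mask_row, bg_row in zip(frame, mask, background):
        row = []
        for pixel, alpha, bg_pixel in zip(frame_row, mask_row, bg_row):
            # alpha = 1.0 keeps the person, alpha = 0.0 shows the new background
            row.append(alpha * pixel + (1.0 - alpha) * bg_pixel)
        result.append(row)
    return result


frame = [[0.2, 0.4]]
mask = [[1.0, 0.0]]         # left pixel is foreground, right pixel is background
night_scene = [[0.9, 0.8]]  # stand-in for a chosen effect
output = composite(frame, mask, night_scene)
```

Mask values between 0 and 1 along the outline of the person are what give a soft edge rather than a hard cutout.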

The video segmentation tool is already rolling out, but only as a beta test, so the feature isn’t yet widely available. After reviewing the results of that test, though, Google says it plans to expand the segmentation effects and add the feature to other programs, including augmented reality options.