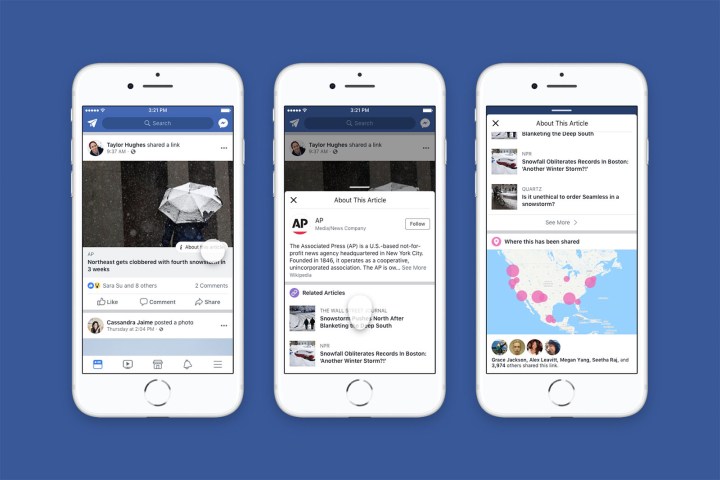

Facebook is launching new tools to help users better assess news sources by drawing on Wikipedia, the crowdsourced encyclopedia. On Tuesday, April 3, Facebook began rolling out new “About This Article” tools in the U.S. after launching tests last year.

While those features were part of the tool’s early tests, Facebook is also expanding the data in this first widespread rollout. The About This Article tool can now display more articles from the same publication, and another section indicates which friends shared the same content.

Along with the U.S. rollout, Facebook is testing additional features that look at not just the publication but the author as well. Users who are part of this test will also see the author’s Wikipedia information, other articles from the same writer, and a button to follow that author. The author features only work with publications that use Facebook’s author tags to display the byline with the link preview and, for now, are just a test.

Facebook says that the tool was developed with the Facebook Journalism Project and that the platform is continually looking for ways to add more context to news sources.

Facebook isn’t the first social platform to turn to Wikipedia to provide more details on a source. YouTube is adding Wikipedia links to videos related to a known list of conspiracy theories, a list that is itself drawn from Wikipedia. YouTube CEO Susan Wojcicki said that the feature is designed to help users determine which videos are trustworthy.

Social media platforms are facing backlash over the effects of fake news, but at the same time they are facing scrutiny over regulations that could inhibit free speech or favor one side of the political spectrum. By using a crowdsourced article, Facebook could be looking to avoid the responsibility of labeling whether or not a source is trustworthy by handing that task to others. Wikipedia’s crowdsourced information can potentially help prevent one-sided presentations, but academics often label the platform as unreliable for the same reason.