From the Grand Theft Auto franchise to the plethora of available Spider-Man titles, plenty of video games allow you to explore a three-dimensional representation of a real (or thinly fictionalized) city. Creating these cityscapes isn’t easy, however. The task requires thousands of hours of computer modeling and careful reference studies before players have the chance to walk, drive or fly through the completed virtual world.

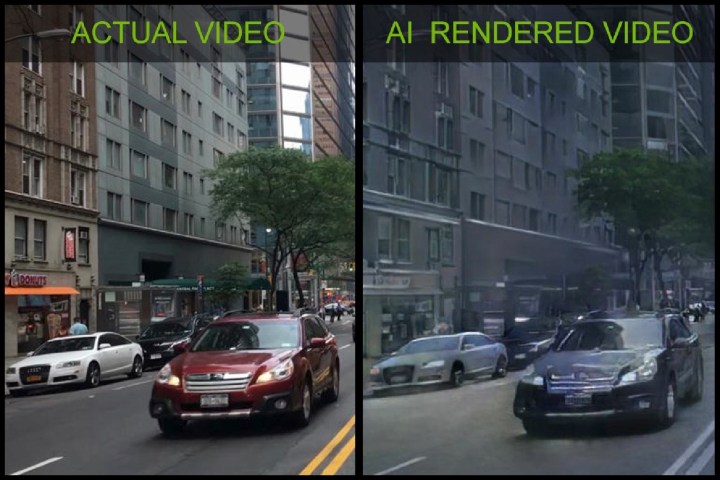

An impressive new tech demo from Nvidia shows that there is another way. At the NeurIPS artificial intelligence conference in Montreal, the company showed how machine learning technology can generate a convincing virtual city simply by training on dash cam videos. The footage was gathered by self-driving cars during a one-week trial in which they drove around cities. Training the neural network took around one week on Nvidia's Tesla V100 GPUs in a DGX-1 supercomputer system. Once the A.I. had learned what it was looking at and how to segment each scene into color-coded objects, the virtual cities were generated using Unreal Engine 4.

“One of the main obstacles developers face is that creating content for virtual worlds is expensive,” Bryan Catanzaro, vice president of applied deep learning research at Nvidia, told Digital Trends. “It can take dozens of artists months to create an interactive world for games or VR applications. We’ve created a new way to render content using deep learning — using A.I. that learns from the real world — which could help artists and developers create virtual environments at a much lower cost.”

Catanzaro said that there are myriad potential real-world applications for this technology. For instance, it could let users customize their avatars in games by shooting a short video on their cell phone and uploading it. It could also be used to create amusing videos in which one person's features are mapped onto another person's movements. (In its demo video, Nvidia had some fun making one of its developers perform the Gangnam Style dance.)

“Architects could [additionally] use it to render virtual designs for their clients,” Catanzaro continued. “You could use this technique to train robots or self-driving cars in virtual environments. In all of these cases, it would lower the cost and time it takes to create virtual worlds.”

He added that this is still early research that will take "a few years" to mature and roll out in commercial applications. "But I'm excited that it could fundamentally change the way computer graphics are created," Catanzaro said.