Nvidia wants to supply the brains that help the next generation of autonomous robots do the heavy lifting. The newly announced Jetson AGX Xavier module aims to do just that.

As a system-on-a-chip, Jetson Xavier is part of Nvidia’s bet to overcome the computational limits of Moore’s Law by relying on graphics and deep learning architectures, rather than the processor. That’s according to Deepu Talla, Nvidia’s vice president and general manager of autonomous machines, who spoke at a media briefing at the company’s new Endeavor headquarters in Santa Clara, California, on Wednesday evening. The company has lined up a number of partners and envisions the Xavier module powering delivery drones, autonomous vehicles, medical imaging, and other applications that require deep learning and artificial intelligence capabilities.

Nvidia claims that the latest Xavier module is capable of delivering up to 32 trillion operations per second (TOPS). Combined with the latest artificial intelligence capabilities of the Tensor Cores found within Nvidia’s Volta architecture, Xavier is capable of 20 times the performance of the older TX2 with 10 times better energy efficiency. This gives Xavier the power of a workstation-class server in a module that fits in the palm of your hand, Talla said.

In a DeepStream demonstration, Talla showed that while the older Jetson TX2 can process two 1080p videos, each with four deep neural networks, the company’s high-performance computing Tesla chip increases that number to 24 videos, each at 720p resolution. Xavier takes that even further: Talla showed that the chipset was capable of processing 30 videos, each at 1080p resolution.
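
To put those stream counts on a common footing, here is a rough back-of-the-envelope sketch in Python that converts each figure into total pixels per set of frames. It assumes the standard 1920×1080 and 1280×720 frame sizes, and it assumes every stream runs at the same frame rate with a comparable neural network load, neither of which was stated in the demo. The names `RESOLUTIONS`, `DEMO`, and `pixels_per_frame_set` are illustrative only.

```python
# Pixel dimensions for the two resolutions named in the demo
# (standard frame sizes, assumed rather than quoted by Nvidia).
RESOLUTIONS = {"1080p": (1920, 1080), "720p": (1280, 720)}

# (stream count, resolution) as reported for each platform in the demo.
DEMO = {
    "Jetson TX2": (2, "1080p"),
    "Tesla": (24, "720p"),
    "Jetson AGX Xavier": (30, "1080p"),
}


def pixels_per_frame_set(streams, resolution):
    """Total pixels in one frame from every concurrent stream."""
    width, height = RESOLUTIONS[resolution]
    return streams * width * height


totals = {name: pixels_per_frame_set(*cfg) for name, cfg in DEMO.items()}
baseline = totals["Jetson TX2"]

for name, total in totals.items():
    # Ratio is relative to the TX2 and assumes equal frame rates.
    print(f"{name}: {total:,} pixels, {total / baseline:.1f}x TX2")
```

By this rough measure, the Xavier demo handles about 15 times the pixel volume of the TX2 demo. That is in the same range as the claimed 20-times performance gain, though the two figures measure different things.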

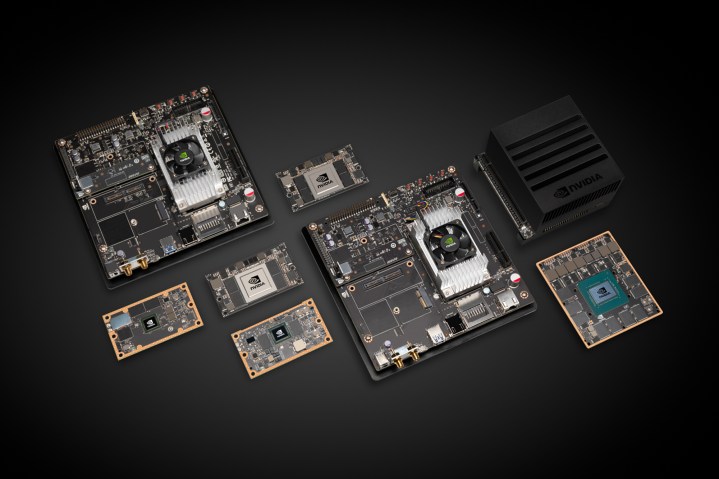

The Xavier module consists of an eight-core Carmel ARM64 processor, a 512-core Volta GPU with 64 Tensor Cores, two NVDLA deep-learning accelerators, and multiple engines for video processing to help autonomous robots process images and videos locally. In a presentation, Talla claimed that the new Xavier module beats both the prior Jetson TX2 platform and an Intel Core i7 computer coupled with an Nvidia GeForce GTX 1070 graphics card in AI inference performance and AI inference efficiency.

Some of Nvidia’s developer partners are still building autonomous machines based on older Nvidia solutions like the TX2 platform or a GTX GPU. Their products include self-driving delivery carts, industrial drones, and smart city solutions. However, many claim that these robots can easily be upgraded to the new Xavier platform to take advantage of its benefits.

While native on-board processing of images and videos will help autonomous machines learn faster and accelerate how AI can be used to detect diseases in medical imaging applications, it can also be used in the virtual reality space. Live Planet VR, which makes an end-to-end platform and a 16-lens camera for live-streaming VR video, uses Nvidia’s hardware to process the graphics and stitch the clips together entirely inside the camera, without requiring any file exports.

“Unlike other solutions, all the processing is done on the camera,” Live Planet community manager Jason Garcia said. Currently, the company uses Nvidia’s GTX card to stitch the video clips from the different lenses together and reduce image distortion from the wide-angle lenses.

Talla said that videoconferencing solutions can also use AI to improve collaboration by tracking the speaker and switching cameras to highlight either the person talking or the whiteboard. Partner Slightech demonstrated one version of this, showing how face recognition and tracking can be implemented on a telepresence robot. Slightech used its Mynt 3D camera sensor, AI technology, and Nvidia’s Jetson technology to power this robot. Nvidia is working with more than 200,000 developers, five times the number from spring 2017, to help get Jetson into more applications ranging from healthcare to manufacturing.

The Jetson AGX module is now shipping with a starting price of $1,099 per unit when purchased in 1,000-unit batches.

“Developers can use Jetson AGX Xavier to build the autonomous machines that will solve some of the world’s toughest problems, and help transform a broad range of industries,” Nvidia said in a prepared statement. “Millions are expected to come onto the market in the years ahead.”

Updated December 20: This article originally mentioned that Live Planet VR has an 18-lens system. We’ve updated our reporting to reflect that Live Planet VR uses a 16-lens configuration.