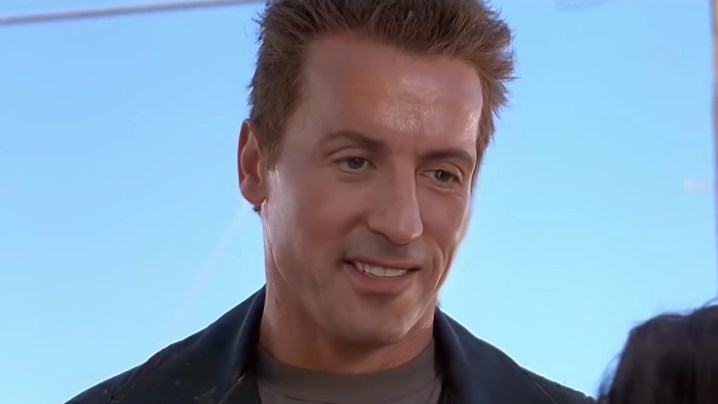

Of all the A.I. tools to have emerged in recent years, very few have generated as much concern as deepfakes. A combination of “deep learning” and “fake,” deepfake technology allows anyone to create images or videos in which photographic elements are convincingly superimposed onto other pictures. While some showcases of the tech have been purely for entertainment (think superimposing Sylvester Stallone’s face onto Arnie’s body in Terminator 2), other use cases have been more alarming. Deepfakes make possible everything from traumatizing, reputation-ruining “revenge porn” to misleading fake news.

As a result, while a growing number of researchers have been working to make deepfake technology more realistic, others have been searching for ways to help us better distinguish between images and videos that are real and those that have been algorithmically doctored.

At Drexel University, a team of researchers in the Multimedia and Information Security Lab has developed a deep neural network that can spot manipulated images with a high degree of accuracy. Its creators hope it can provide a means of fighting back against the dangers of deepfakes. It’s not the first time researchers have attempted to solve this problem, but it is potentially one of the most promising efforts to materialize so far in this ongoing cat-and-mouse game.

“Many [previous] deepfake detectors rely on visual quirks in the faked video, like inconsistent lip movement or weird head pose,” Brian Hosler, a researcher on the project, told Digital Trends. “However, researchers are getting better and better at ironing out these visual cues when creating deepfakes. Our system uses statistical correlations in the pixels of a video to identify the camera that captured it. A deepfake video is unlikely to have the same statistical correlations in the fake part of the video as in the real part, and this inconsistency could be used to detect fake content.”

The project started out as an experiment to see whether it was possible to create an A.I. algorithm able to spot the difference between videos captured by different cameras. Every camera captures and compresses video slightly differently, leaving traces that act a little like a watermark. Most of us can’t see these differences, but an algorithm trained to detect them can recognize the unique visual fingerprints associated with different cameras and use them to identify which camera model captured a particular video. The system could also be used for other purposes, such as developing algorithms that detect videos with deleted frames or determine whether a video was uploaded to social media.

How they did it

The Drexel team curated a database of around 20 hours of video from 46 different cameras. They then trained a neural network to distinguish between footage from those cameras. However convincing a deepfake video may look to the average person, the A.I. examines it pixel by pixel in search of elements that have been altered. The resulting A.I. can not only recognize which images have been changed but also pinpoint the specific part of the image that has been doctored.
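
The Drexel system learns its camera fingerprints with a trained neural network, and its internals aren’t described here. As a rough, hypothetical illustration of the underlying idea only (a pasted-in region shouldn’t share the noise statistics of the rest of the frame), the Python sketch below swaps in a simple hand-crafted statistic: the variance of a high-pass noise residual in each patch, with a patch flagged when that value is a robust outlier compared with the others. The function names, patch size, and threshold are illustrative assumptions, not the researchers’ code.

```python
import random
from statistics import median
from typing import Dict, List, Tuple

Frame = List[List[float]]  # grayscale frame as rows of pixel intensities


def patch_residual_variance(frame: Frame, top: int, left: int, p: int) -> float:
    """Variance of a simple high-pass noise residual inside one p x p patch.

    The residual at each pixel is its value minus the mean of its 4 neighbours.
    Only pixels whose neighbours also lie inside the patch are used, so each
    patch is scored independently of its surroundings.
    """
    residuals = []
    for y in range(top + 1, top + p - 1):
        for x in range(left + 1, left + p - 1):
            neighbours = frame[y - 1][x] + frame[y + 1][x] + frame[y][x - 1] + frame[y][x + 1]
            residuals.append(frame[y][x] - neighbours / 4.0)
    mean = sum(residuals) / len(residuals)
    return sum((r - mean) ** 2 for r in residuals) / len(residuals)


def flag_inconsistent_patches(frame: Frame, p: int = 32, threshold: float = 3.5) -> List[Tuple[int, int]]:
    """Return (top, left) corners of patches whose noise statistic is an outlier.

    Hypothetical stand-in for a learned camera fingerprint: one number per patch.
    """
    h, w = len(frame), len(frame[0])
    scores: Dict[Tuple[int, int], float] = {
        (top, left): patch_residual_variance(frame, top, left, p)
        for top in range(0, h - p + 1, p)
        for left in range(0, w - p + 1, p)
    }
    med = median(scores.values())
    # Median absolute deviation: a spread estimate that a few tampered patches barely skew.
    mad = median(abs(s - med) for s in scores.values()) or 1e-12
    # 0.6745 * |s - med| / MAD is the standard "modified z-score" for robust outliers.
    return sorted(k for k, s in scores.items() if 0.6745 * abs(s - med) / mad > threshold)


if __name__ == "__main__":
    rng = random.Random(0)
    # Simulated sensor noise across the whole frame...
    frame = [[128 + rng.gauss(0, 5) for _ in range(128)] for _ in range(128)]
    # ...except one pasted-in 32 x 32 region that is perfectly smooth.
    for y in range(32, 64):
        for x in range(64, 96):
            frame[y][x] = 128.0
    print(flag_inconsistent_patches(frame))
```

On this synthetic frame the flagged list should include the tampered patch at (32, 64). A single statistic like this is easy to fool; the point of training a neural network, as the Drexel team did, is to learn far subtler camera-specific traces.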

Owen Mayer, a member of the research group, previously created a system that analyzes some of these statistical correlations to determine whether two parts of an image have been edited in different ways. A demo of this system is available online. This latest work, however, marks the first time such an approach has been applied to video footage. Video is a bigger problem, and one that is crucial to get right as deepfakes become more prevalent.

“We plan to release a version of our code, or even an application, to the public so that anyone can take a video, and try to identify the camera model of origin,” Hosler continued. “The tools we make, and that researchers in our field make, are often open-source and freely distributed.”

There’s still more work to be done, though. Deepfakes are only getting better, which means that researchers on the other side of the fence must not rest on their laurels. Detection tools will need to keep evolving so they can still spot faked images and video as deepfakes shed the more noticeable visual traits that currently mark them out as, well, fakes. And as audio deepfake tools capable of mimicking voices continue to develop, tools for detecting faked audio will be needed too.

For now, perhaps the biggest challenge is to raise awareness of the issue. As with anything we read online, the wealth of information on the internet only works to our advantage if we know enough to second-guess it. Until now, finding video proof that something happened was enough to convince many of us that it actually took place. That mindset is going to have to change.

“I think one of the largest hurdles to getting everyone to use forensic tools like these is the knowledge gap,” Hosler said. “We as researchers should make not only the tools, but the underlying ideas, more palatable to the public if we truly [want to] have an impact.”

Whatever form these tools take, whether a web browser plug-in or an A.I. that internet giants run automatically to flag content before it’s shown to users, we sure hope they’re made as accessible as possible.

Hey, it’s only the future of truth as we know it that’s at stake…