During the GTC 2022 conference, Nvidia talked about using artificial intelligence and machine learning to make future graphics cards better than ever.

As the company chooses to prioritize AI and machine learning (ML), some of these advancements will already find their way into the upcoming next-gen Ada Lovelace GPUs.

Nvidia’s big plans for AI and ML in next-gen graphics cards were shared by Bill Dally, the company’s chief scientist and senior vice president of research. He talked about Nvidia’s research and development teams, how they use AI and ML, and what this means for next-gen GPUs.

In short, using these technologies can only mean good things for Nvidia graphics cards. Dally discussed four major sections of GPU design, as well as the ways in which using AI and ML can drastically speed up GPU performance.

The goal is an increase in both speed and efficiency. In Dally’s example, using AI and ML cuts a standard GPU design task from three hours to just three seconds, a 3,600-fold speedup.

These gains reportedly come from optimizing four processes that are normally time-consuming and highly detailed.

The four processes are monitoring and mapping voltage drops, anticipating errors through parasitic prediction, automating standard cell migration, and addressing various routing challenges. Using artificial intelligence and machine learning can help optimize all of them, resulting in major gains in the end product.

Mapping potential drops in voltage helps Nvidia track the power flow of next-gen graphics cards. According to Dally, switching from using standard tools to specialized AI tools can speed this task up drastically, seeing as the new tech can perform such tasks in mere seconds.

Dally said that using AI and ML for mapping voltage drops can reach an accuracy of as much as 94% while also tremendously increasing the speed at which these tasks are performed.

Data flow in new chips is an important factor in how well a new graphics card performs. Therefore, Nvidia uses graph neural networks (GNN) to identify possible issues in data flow and address them quickly.

Parasitic prediction through the use of AI is another area in which Nvidia sees improvements, noting increased accuracy, with simulation error rates dropping below 10%.

Nvidia has also managed to automate the process of migrating the chip’s standard cells, cutting back on a lot of downtime and speeding up the whole task. According to Nvidia, the automated tool migrated 92% of the cell library with no errors.

The company is planning to focus on AI and machine learning going forward, dedicating five of its laboratories to researching and designing new solutions in those segments. Dally hinted that we may see the first results of these new developments in Nvidia’s new 7nm and 5nm designs, which include the upcoming Ada Lovelace GPUs. This was first reported by Wccftech.

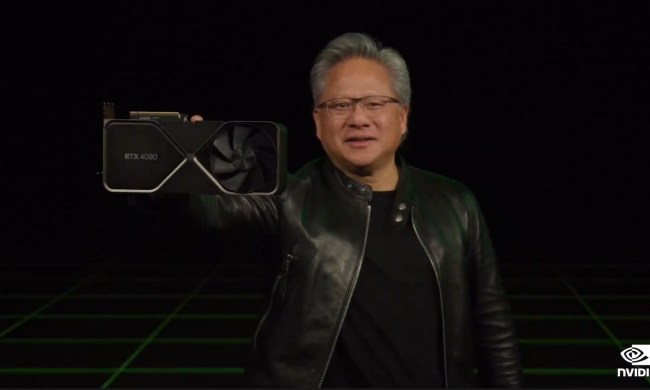

It’s no secret that the next generation of graphics cards, often referred to as RTX 4000, will be intensely powerful (with power requirements to match). Using AI and machine learning to further the development of these GPUs implies that we may soon have a real powerhouse on our hands.