Nvidia’s RTX 40-series graphics cards are arriving in a few short weeks, but among all the hardware improvements lies what could be Nvidia’s golden egg: DLSS 3. It’s much more than just an update to Nvidia’s popular DLSS (Deep Learning Super Sampling) feature, and it could end up defining Nvidia’s next generation much more than the graphics cards themselves.

AMD has been working hard to get its FidelityFX Super Resolution (FSR) on par with DLSS, and for the past several months, it’s been successful. DLSS 3 looks like it will change that dynamic — and this time, FSR may not be able to catch up anytime soon.

How DLSS 3 works (and how it doesn’t)

You’d be forgiven for thinking that DLSS 3 is a completely new version of DLSS, but it’s not. Or at least, it’s not entirely new. The backbone of DLSS 3 is the same super-resolution technology that’s available in DLSS titles today, and Nvidia will presumably continue improving it with new versions. Nvidia says you’ll see the super-resolution portion of DLSS 3 as a separate option in the graphics settings now.

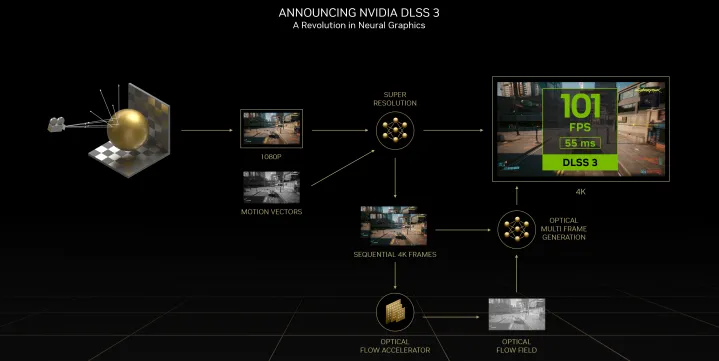

The new part is frame generation. DLSS 3 will generate an entirely unique frame every other frame, essentially generating seven out of every eight pixels you see. You can see an illustration of that in the flow chart below. In the case of 4K, your GPU only renders the pixels for 1080p and uses that information for not only the current frame but also the next frame.
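To see where the "seven out of every eight" figure comes from, here is a minimal sketch of the arithmetic. The function name and parameters are made up for illustration. It assumes a 4K output upscaled from a 1080p render, with one generated frame for every rendered frame, as described above.

```python
def rendered_pixel_fraction(output_res, render_res, generated_per_rendered=1):
    """Fraction of displayed pixels that the GPU renders the traditional way."""
    out_w, out_h = output_res
    in_w, in_h = render_res
    # Share of each upscaled frame that comes from real shading work.
    spatial = (in_w * in_h) / (out_w * out_h)
    # Share of displayed frames that are rendered rather than generated.
    temporal = 1 / (1 + generated_per_rendered)
    return spatial * temporal

fraction = rendered_pixel_fraction((3840, 2160), (1920, 1080))
print(fraction)      # 0.125, one pixel in eight is rendered
print(1 - fraction)  # 0.875, seven in eight are produced by DLSS
```

A 1080p render covers a quarter of the pixels in a 4K frame, and only half of the displayed frames are rendered at all, so a quarter times a half leaves one eighth.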

Frame generation, according to Nvidia, will be a separate toggle from super resolution. That’s because frame generation only works on RTX 40-series GPUs for now, while super resolution will continue to work on all RTX graphics cards, even in games that have updated to DLSS 3. It should go without saying, but if half of your frames are completely generated, that’s going to boost your performance by a lot.

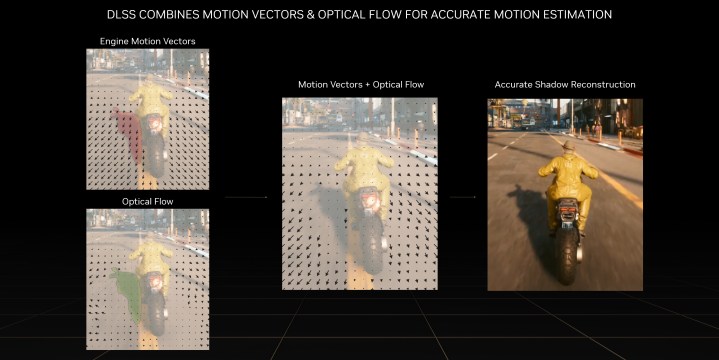

Frame generation isn’t just some AI secret sauce, though. In DLSS 2 and tools like FSR, motion vectors are a key input for the upscaling. They describe where objects are moving from one frame to the next, but motion vectors only apply to geometry in a scene. Elements that don’t have 3D geometry, like shadows, reflections, and particles, have traditionally been masked out of the upscaling process to avoid visual artifacts.

Masking isn’t an option when an AI is generating an entirely unique frame, which is where the Optical Flow Accelerator in RTX 40-series GPUs comes into play. It’s like a motion vector, except the graphics card is tracking the movement of individual pixels from one frame to the next. This optical flow field, along with motion vectors, depth, and color, contributes to the AI-generated frame.
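To make the idea of a per-pixel flow field concrete, here is a toy sketch in plain Python. It is not how DLSS 3 builds its frames (Nvidia uses a trained model and dedicated hardware). The function `midpoint_frame` and the tiny test image are hypothetical, and the approach simply pushes each pixel halfway along its flow vector.

```python
def midpoint_frame(frame, flow, background=0):
    """Naively build an in-between frame by moving each pixel half of its flow.

    frame: 2D list of pixel values (the previous rendered frame).
    flow:  2D list of (dx, dy) per pixel, the motion toward the next frame.
    """
    height, width = len(frame), len(frame[0])
    out = [[background] * width for _ in range(height)]
    for y in range(height):
        for x in range(width):
            if frame[y][x] == background:
                continue  # nothing to move; avoids overwriting moved pixels
            dx, dy = flow[y][x]
            # Move the pixel halfway toward where it lands in the next frame.
            nx, ny = x + round(dx / 2), y + round(dy / 2)
            if 0 <= nx < width and 0 <= ny < height:
                out[ny][nx] = frame[y][x]
    return out

# One bright pixel that moves two columns to the right between rendered frames.
prev_frame = [[0, 0, 0, 0, 0],
              [9, 0, 0, 0, 0]]
flow = [[(0, 0)] * 5,
        [(2, 0), (0, 0), (0, 0), (0, 0), (0, 0)]]
print(midpoint_frame(prev_frame, flow))  # [[0, 0, 0, 0, 0], [0, 9, 0, 0, 0]]
```

Even this toy has to dodge collisions between moved and stationary pixels, and it does nothing about holes or occlusion, which hints at why a real implementation leans on extra inputs like depth and motion vectors.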

It sounds like all upsides, but there’s a big problem with frames generated by the AI: they increase latency. The AI-generated frame never passes through the game’s usual CPU-driven rendering path. It’s a “fake” frame, so you won’t see it on traditional fps readouts in games or tools like FRAPS. Latency therefore doesn’t go down despite all the extra frames, and because of the computational overhead of optical flow, it actually goes up. That’s why DLSS 3 requires Nvidia Reflex to offset the higher latency.

Normally, your CPU stores up a render queue for your graphics card to make sure your GPU is never waiting for work to do (that would cause stutters and frame rate drops). Reflex removes the render queue and syncs your GPU and CPU so that as soon as your CPU can send instructions, the GPU starts processing them. When applied over the top of DLSS 3, Nvidia says Reflex can sometimes even result in a latency reduction.
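A rough way to picture that trade-off is a toy latency model. Everything here is an assumption for illustration: the function name, the idea that each queued frame adds one frame time of delay, and the 5 ms frame generation overhead are not figures from Nvidia.

```python
def input_latency_ms(rendered_fps, queued_frames, generation_overhead_ms=0.0):
    """Toy estimate of input-to-display latency in milliseconds."""
    frame_time_ms = 1000.0 / rendered_fps
    # One frame time to render, plus one for each frame waiting in the queue.
    return frame_time_ms * (1 + queued_frames) + generation_overhead_ms

# Hypothetical numbers: 50 rendered fps, a two-frame render queue.
baseline = input_latency_ms(50, queued_frames=2)
with_frame_gen = input_latency_ms(50, queued_frames=2, generation_overhead_ms=5.0)
with_reflex = input_latency_ms(50, queued_frames=0, generation_overhead_ms=5.0)
print(baseline, with_frame_gen, with_reflex)  # 60.0 65.0 25.0
```

In this simplified picture, frame generation adds a little latency on its own, while removing the queue saves more than the overhead costs. That is consistent with Nvidia’s claim that Reflex can sometimes produce a net reduction.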

Where AI makes a difference

AMD’s FSR 2.0 doesn’t use AI, and as I wrote about a while back, it proves that you can get the same quality as DLSS with algorithms instead of machine learning. DLSS 3 changes that with its unique frame generation capabilities, as well as the introduction of optical flow.

Optical flow isn’t a new idea. It’s been around for decades and is used in everything from video editing to self-driving cars. However, calculating optical flow with machine learning is relatively new, thanks to the growing number of datasets available to train AI models on. The reason why you’d want to use AI is simple: it produces fewer visual errors given enough training and it doesn’t have as much overhead at runtime.

DLSS is executing at runtime. It’s possible to develop an algorithm, free of machine learning, to estimate how each pixel moves from one frame to the next, but it’s computationally expensive, which runs counter to the whole point of supersampling in the first place. With an AI model that doesn’t require a lot of horsepower and enough training data — and rest assured, Nvidia has plenty of training data to work with — you can achieve optical flow that is high quality and can execute at runtime.

That leads to an improvement in frame rate even in games that are CPU limited. Supersampling only applies to your resolution, which is almost exclusively dependent on your GPU. With a new frame that bypasses CPU processing, DLSS 3 can double frame rates in games even if you have a complete CPU bottleneck. That’s impressive and currently only possible with AI.
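The difference is easy to see in a toy bottleneck model. The function names and the frame rates below are hypothetical, and the model ignores the GPU cost of generating frames. It only assumes that the slower of the CPU and GPU sets the rendered frame rate.

```python
def displayed_fps(cpu_fps, gpu_fps, frame_generation=False):
    """Toy model: the slower of CPU and GPU sets the rendered frame rate."""
    rendered = min(cpu_fps, gpu_fps)
    # Generated frames skip the CPU, so one is added per rendered frame.
    return rendered * 2 if frame_generation else rendered

def with_upscaling(gpu_fps, speedup):
    """Upscaling only raises the GPU's ceiling; the CPU limit is untouched."""
    return gpu_fps * speedup

cpu_fps, gpu_fps = 60, 90  # a CPU-limited game
print(displayed_fps(cpu_fps, with_upscaling(gpu_fps, 1.5)))    # 60
print(displayed_fps(cpu_fps, gpu_fps, frame_generation=True))  # 120
```

Upscaling alone leaves this game stuck at the CPU’s 60 fps, while frame generation doubles what reaches the display.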

Why FSR 2.0 can’t catch up (for now)

AMD has truly done the impossible with FSR 2.0. It looks fantastic, and the fact that it’s brand-agnostic is even better. I’ve been ready to ditch DLSS for FSR 2.0 since I first saw it in Deathloop. But as much as I enjoy FSR 2.0 and think it’s a great piece of kit from AMD, it’s not going to catch up to DLSS 3 any time soon.

For starters, developing an algorithm that can track each pixel between frames free of artifacts is tough enough, especially in a 3D environment with dense fine detail (Cyberpunk 2077 is a prime example). It’s possible, but tough. The bigger issue, however, is how bloated that algorithm would need to be. Tracking each pixel through 3D space, doing the optical flow calculation, generating a frame, and cleaning up any mishaps that happen along the way — it’s a lot to ask.

Getting that to run while a game is executing, and still deliver a frame rate improvement on the level of FSR 2.0 or DLSS, is even more to ask. Nvidia, even with dedicated processors and a trained model, still has to use Reflex to offset the higher latency imposed by optical flow. Without that hardware or software, FSR would likely trade too much latency to generate frames.

I have no doubt that AMD and other developers will get there eventually — or find another way around the problem — but that could be a few years down the road. It’s hard to say right now.

What’s easy to say is that DLSS 3 looks very exciting. Of course, we’ll have to wait until it’s here to validate Nvidia’s performance claims and see how image quality holds up. So far, we just have a short video from Digital Foundry showing off DLSS 3 footage, which I’d highly recommend watching until we see further third-party testing. From our current vantage point, though, DLSS 3 certainly looks promising.

This article is part of ReSpec – an ongoing biweekly column that includes discussions, advice, and in-depth reporting on the tech behind PC gaming.