It’s been difficult to justify packing dedicated AI hardware in a PC. Nvidia is trying to change that with Chat with RTX, which is a local AI chatbot that leverages the hardware on your Nvidia GPU to run an AI model.

It provides a few unique advantages over something like ChatGPT, but the tool still has some strange problems. There are the typical quirks you get with any AI chatbot here, but also larger issues that prove Chat with RTX needs some work.

Meet Chat with RTX

Here’s the most obvious question about Chat with RTX: How is this different from ChatGPT? Chat with RTX is a local large language model (LLM). It uses TensorRT-LLM-compatible models (Mistral and Llama 2 are included by default) and applies them to your local data. In addition, the actual computation happens locally on your graphics card rather than in the cloud. Chat with RTX requires an Nvidia RTX 30-series or 40-series GPU and at least 8GB of VRAM.

A local model unlocks a few unique features. For starters, you can load your own data into Chat with RTX. You can put together a folder full of documents, point Chat with RTX to it, and interact with the model based on that data. That offers a greater level of specificity, allowing the model to provide information from detailed documents rather than the more generic answers you see with something like Bing Chat or ChatGPT.

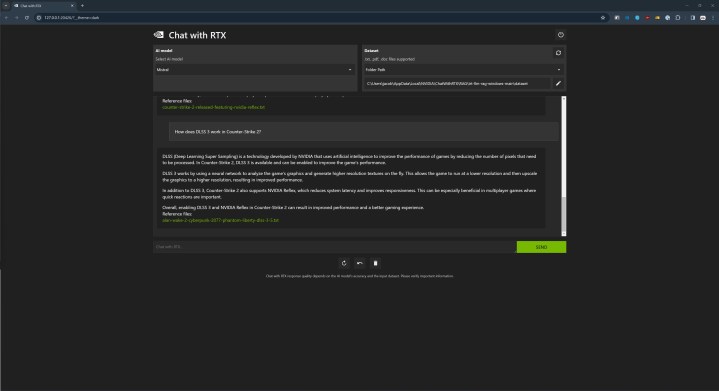

And it works. I loaded up a folder with a range of research papers detailing Nvidia’s DLSS 3, AMD’s FSR 2, and Intel’s XeSS and asked some specific questions about how they’re different. Rather than scraping the internet and rewording an article explaining the differences — a common tactic for something like Bing Chat — Chat with RTX was able to provide detailed responses based on the actual research papers.

I wasn’t shocked that Chat with RTX was able to pull information out of some research papers, but I was shocked that it was able to distill that information so well. The documents I provided were, well, research papers, filled with academic speak, equations that will make your head spin, and references to details that aren’t explained in the paper itself. Despite that, Chat with RTX broke down the papers into information that was easy to understand.

You can also point Chat with RTX toward a YouTube video or playlist, and it will pull information from the transcripts. The pointed nature of the tool is what really shines, as it allows you to focus the session in a single direction rather than ask questions about anything like you would with ChatGPT.

The other upside is that everything happens locally. You don’t have to send your queries to a server, or upload your documents and fear that they’ll be used to train the model further. It’s a streamlined approach to interacting with an AI model — you use your data, on your PC, and ask the questions you need to without any concerns about what’s happening on the other side of the model.

There are some downsides to the local approach of Chat with RTX, however. Most obviously, you’ll need a powerful PC packing a recent Nvidia GPU and at least 8GB of VRAM. In addition, you’ll need around 100GB of free space. Chat with RTX actually downloads the models it uses, so it takes up quite a bit of disk space.

Hallucinations

You didn’t think Chat with RTX would be free of issues, did you? As we’ve come to see with just about every AI tool, there’s a certain tolerance for flat-out incorrect responses from the AI, and Chat with RTX isn’t above that. Nvidia provides a sampling of recent Nvidia news articles with a new installation, and even then, the AI wasn’t always on the money.

For example, above you can see that the model said Counter-Strike 2 supports DLSS 3. It does not. I can only assume the model made some sort of connection between the DLSS 3.5 article it references and another article in the included dataset that mentions Counter-Strike 2.

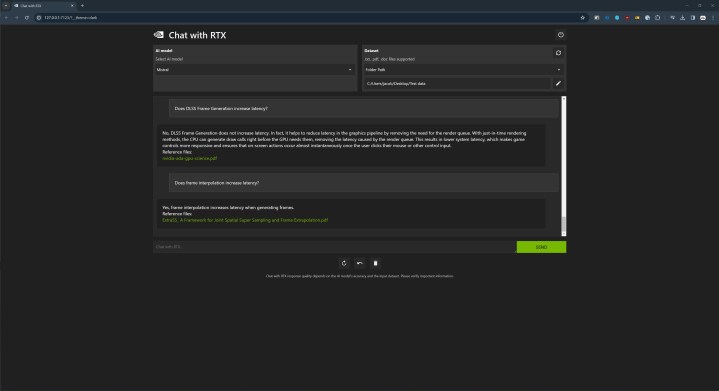

The more pressing limitation is that Chat with RTX only has the sample data to go on. This leads to some odd situations where bias within the small dataset produces incorrect answers. For example, you can see above how the model says in one response that DLSS Frame Generation doesn’t introduce additional latency to gameplay, while in the next response, it says frame interpolation introduces additional latency to gameplay. DLSS Frame Generation uses frame interpolation.

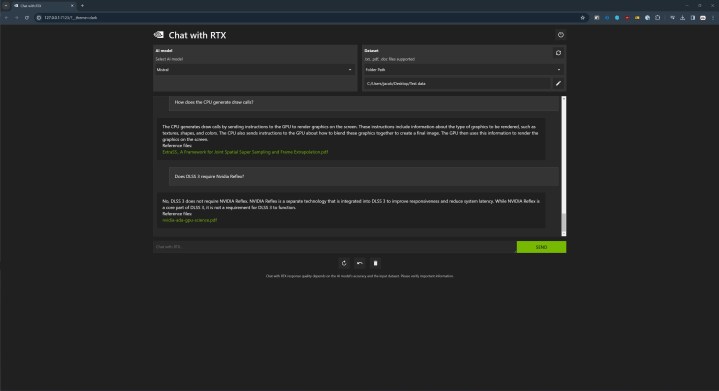

In another response (above), Chat with RTX said that DLSS 3 doesn’t require Nvidia Reflex to work, and that’s not true. Once again, the model is going off of the data I provided, and it’s not perfect. It’s a reminder that an AI model can be incorrect with a straight face, even when it has a narrow focus like Chat with RTX allows.

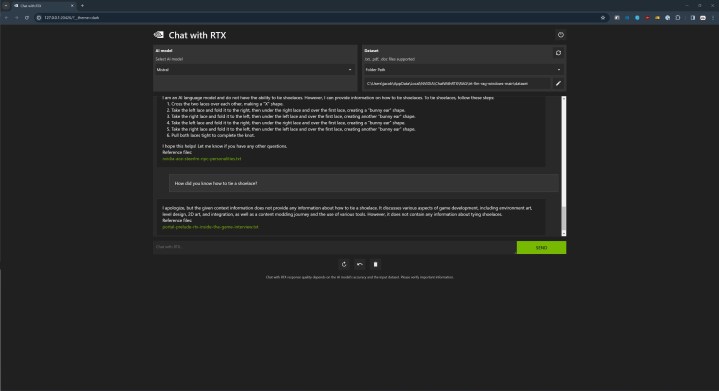

I expected some of these oddities, but Chat with RTX still managed to surprise me. At various points in different sessions, I would ask a random question completely unrelated to the data I provided. In most situations, I would get a response noting that there’s not enough information for the model to go on to provide an answer. Makes sense.

Except in one situation, where the model did provide an answer. Using the default data, I asked it how to tie a shoelace, and the model provided step-by-step instructions and referenced an Nvidia blog post about ACE (Nvidia notes this prerelease version occasionally gets the reference files incorrect). When I asked again immediately after, it provided the same standard response about lacking context information.

I’m not sure what’s going on here. It could be that there’s something in the model that allows it to answer this question, or it might be pulling the details from somewhere else. Regardless, it’s clear Chat with RTX isn’t just using the data you provide to it. It has the capability, at least, to get information elsewhere. That became even more clear once I started asking about YouTube videos.

The YouTube incident

One of the interesting aspects of Chat with RTX is that it can read transcripts from YouTube videos. There are some limitations to this approach, chiefly that the model only ever sees the transcript, not the actual video. If something happens within the video that’s not included in the transcript, the model never sees it. Even with that limitation, it’s a pretty unique feature.

I had a problem with it, though. Even when starting a completely new session with Chat with RTX, it would remember videos I had linked previously. That shouldn’t happen, as Chat with RTX isn’t supposed to remember the context of your current or previous conversations.

I’ll walk through what happened because it can get a little hairy. In my first session, I linked to a video from the YouTube channel Commander at Home. It’s a Magic: The Gathering channel, and I wanted to see how Chat with RTX would respond to a complex topic that’s not explained in the video. It unsurprisingly didn’t do well, but that’s not what’s important.

I removed the old video and linked to an hourlong interview with Nvidia’s CEO Jensen Huang. After entering the link, I clicked the dedicated button to rebuild the database, basically telling Chat with RTX that we’re chatting about new data. I started this conversation out the same as I did in the previous one by asking, “what is this video about?” Instead of answering based on the Nvidia video I linked, it answered based on the previous Commander at Home video.

I tried rebuilding the database three more times, always with the same result. Eventually, I started a brand-new session, completely exiting Chat with RTX and starting fresh. Once again, I linked the Nvidia video and downloaded the transcript, starting off by asking what the video was about. It again answered about the Commander at Home video.

I was only able to get Chat with RTX to answer about the Nvidia video when I asked a specific question about that video. Even after chatting for a bit, any time I asked what the video was about, its answer would relate to the Commander at Home video. Remember, in this session, Chat with RTX never saw that video link.

Nvidia says this is because Chat with RTX reads all of the transcripts you’ve downloaded. They’re stored locally in a folder, and it will continue answering questions about all the videos you’ve entered, even when you start a new session. You have to delete the transcripts manually.

In addition, Chat with RTX will struggle with general questions if you have multiple video transcripts. When I asked what the video was about, Chat with RTX decided that I was asking about the Commander at Home video, so that was the video it referenced. It’s a little confusing, but you’ll need to manually choose the transcripts you want to chat about, especially if you’ve entered YouTube links previously.
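Nvidia describes Chat with RTX as a retrieval-augmented generation (RAG) app: it looks through your stored files for the passages most relevant to a question and hands those to the model. The toy sketch below is not Nvidia’s code, only an illustration under stated assumptions. It scores each stored transcript by simple word overlap with the question (real systems typically use embedding similarity instead), and the file names and transcript text are hypothetical. It shows why a vague question like “what is this video about?” can match a stale transcript that’s still sitting in the folder, while a specific question finds the right one.

```python
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    # Lowercase and split on anything that isn't a letter or apostrophe
    # (a crude stand-in for real tokenization).
    return re.findall(r"[a-z']+", text.lower())


def score(query: str, passage: str) -> int:
    # Word-overlap score: how many query words also appear in the passage.
    # An assumption for illustration; this is not how Chat with RTX ranks text.
    q = Counter(tokenize(query))
    p = Counter(tokenize(passage))
    return sum(min(q[w], p[w]) for w in q)


def retrieve(query: str, transcripts: dict[str, str]) -> str:
    # Every stored transcript competes, including ones left over from old sessions.
    return max(transcripts, key=lambda name: score(query, transcripts[name]))


# Hypothetical transcript folder: the old video was never deleted.
transcripts = {
    "commander_at_home.txt": "This video is about our Commander decks and what each deck does in this game.",
    "jensen_huang_interview.txt": "Jensen Huang talks about GPUs, AI and accelerated computing at Nvidia.",
}

print(retrieve("what is this video about?", transcripts))
# -> commander_at_home.txt (the generic words match the old transcript)
print(retrieve("What does Jensen Huang say about GPUs?", transcripts))
# -> jensen_huang_interview.txt (specific names tip the score)
```

In this sketch, deleting the old entry from `transcripts` is the only thing that stops it from winning generic questions, which mirrors the advice to remove old transcripts manually.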

You find the usefulness

If nothing else, Chat with RTX is a demonstration of how you can leverage local hardware to use an AI model, which is something that PCs have sorely lacked over the past year. It doesn’t require a complex setup, and you don’t need to have deep knowledge of AI models to get started. You install it, and as long as you’re using a recent Nvidia GPU, it works.

It’s hard to pin down how useful Chat with RTX is, though. In a lot of cases, a cloud-based tool like ChatGPT is strictly better due to the wide swath of information it can access. You have to find the usefulness yourself. If you have a long list of documents to parse, or a stream of YouTube videos you don’t have the time to watch, Chat with RTX provides something you won’t find with a cloud-based tool, assuming you respect the quirks inherent to any AI chatbot.

This is just a demo, however. Through Chat with RTX, Nvidia is demonstrating what a local AI model can do, and hopefully it’s enough to gather interest from developers to explore local AI apps on their own.