Through this technology, data centers may in the future be able to offer higher data rates and greater bandwidth. In turn, this could improve the performance of cloud computing and Big Data applications.

Silicon photonics technology lets silicon chips transmit data using pulses of light rather than electrical signals. This enables very fast data transfer, even over long distances.

“Making silicon photonics technology ready for widespread commercial use will help the semiconductor industry keep pace with ever-growing demands in computing power driven by Big Data and cloud services,” said Arvind Krishna, senior vice president and director of IBM Research.

IT systems and cloud platforms routinely process large volumes of data, and that processing is not always fast. To run efficiently, these systems need to move data quickly between all of their components. Silicon photonics can shorten response times and deliver data faster.

“Just as fiber optics revolutionized the telecommunications industry by speeding up the flow of data — bringing enormous benefits to consumers — we’re excited about the potential of replacing electric signals with pulses of light,” Krishna continued.

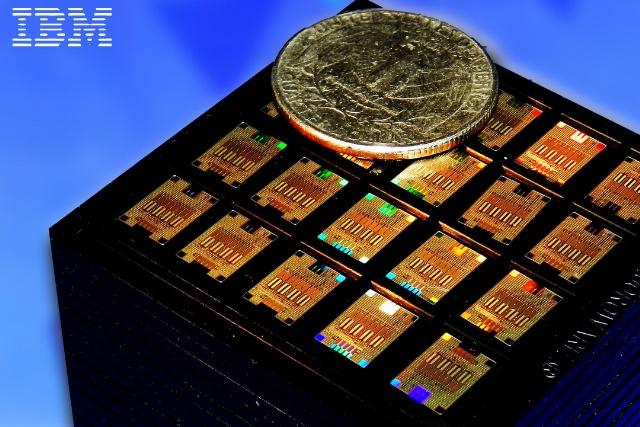

IBM’s silicon photonics chip uses four different colors of light traveling within a single optical fiber. In one second, it can transmit the equivalent of six million images, and it can download an entire high-definition movie in two seconds. The technology also allows various optical components to be integrated side by side on a single chip.

In the future, this technology may allow computer hardware to communicate faster and more efficiently. Data centers might also be able to cut space and energy costs while delivering better performance to customers. For now, though, it is only a first step toward light-speed computing.