Some of the most complex frames in Finding Dory took over 600 hours to render.

The team at Pixar doesn’t just color and animate, though, and its technical staff is constantly searching for new ways to improve everyone else’s work. That search has led to Presto, a program built for Pixar in cooperation with Maya, as well as a library of real-time rendering and modeling tools.

At Nvidia’s GPU Technology Conference (GTC), three Pixar employees (graphics software engineer Pol Jeremias, lead software engineer Jeremy Cowles, and software engineer Dirk Van Gelder) explained how moviemaking led to software creation, with some appearances from favorite Pixar characters thrown in for good measure.

A unique challenge

As you might imagine, Pixar’s cutting-edge 3D animation demands impressive hardware. Part of Pixar’s particular challenge is that most machines are built for speed, not beauty. That’s why the company builds its own systems specifically for moviemaking.

The standard machine at Pixar is powered by a 2.3GHz, 16-core Intel processor with 64GB of RAM, and a 12GB Nvidia Quadro M6000. If the team needs a little more oomph, there’s a dual-CPU configuration with two of the 16-core chips, a pair of M6000s, and 128GB of RAM.

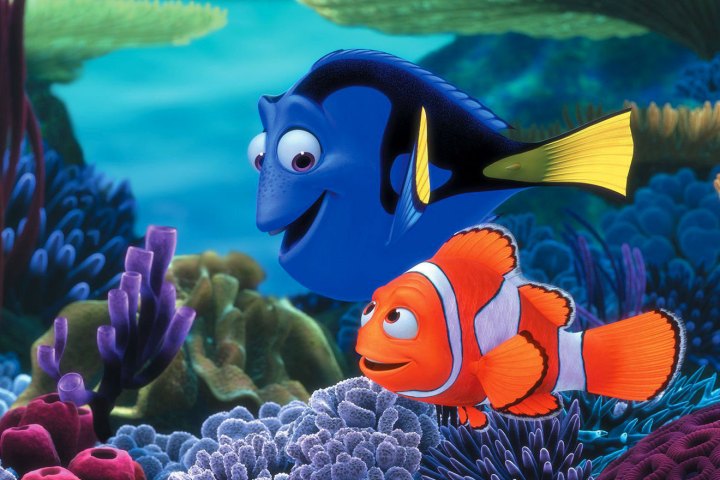

And even those machines are pushed to their limit during an active work day. There are over 100 billion triangles in a small shot, more than even the fastest gaming desktop could handle. Mater, from Cars, is made up of over 800 meshes, and almost all of them are deformed in some way. Add to that the schools of fish in Finding Nemo, or the swarms of robots in Wall-E, and the need to develop software in-house only becomes more pressing.
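
A rough calculation helps explain why a shot like that overwhelms a single GPU. The sketch below is a back-of-envelope estimate under our own assumptions, not Pixar’s figures. It counts only vertex positions (three 32-bit floats) and triangle indices (three 32-bit integers). It also uses the rule of thumb that a large closed triangle mesh has about half as many vertices as triangles. Normals, UVs, and textures would only push the total higher.

```python
# Back-of-envelope GPU memory estimate for a triangle mesh.
# Assumptions (ours, not Pixar's): positions only, float32 xyz,
# uint32 indices, and vertices ~= triangles / 2 for a large closed mesh.

BYTES_PER_POSITION = 3 * 4           # x, y, z as 32-bit floats
BYTES_PER_TRIANGLE_INDICES = 3 * 4   # three 32-bit vertex indices


def mesh_bytes(triangles: int) -> int:
    """Approximate bytes needed to store an indexed triangle mesh."""
    vertices = triangles // 2  # Euler's formula gives F ~= 2V for closed triangle meshes
    return vertices * BYTES_PER_POSITION + triangles * BYTES_PER_TRIANGLE_INDICES


shot_triangles = 100_000_000_000   # "over 100 billion triangles in a small shot"
gpu_bytes = 12 * 10**9             # one 12GB Quadro M6000, in decimal gigabytes

needed = mesh_bytes(shot_triangles)
print(needed)              # 1800000000000 bytes, roughly 1.8TB
print(needed / gpu_bytes)  # 150.0 times the card's memory
```

Even under these generous assumptions, the raw geometry would need about 150 times the memory of the workstation’s graphics card. It cannot simply be loaded onto the card in one go.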

Presto

At the heart of Pixar’s software suite is Presto, a proprietary tool the company rarely shows in public. The modeling software, built in cooperation with Maya, is responsible for everything from scene layout to animation, rigging, and even simulating physics and environments. Fortunately, during the presentation at GTC, we were treated to a live demo.

Presto’s interface might look familiar to anyone who has spent time in 3D modeling applications like Maya or 3ds Max. It also has workflow innovations that help artists in different parts of the process stay focused on their work without wading through unnecessary information.

At the same time, animators and riggers can find an extensive amount of data relevant to their particular role, and multiple methods of articulating parts of the mesh. The models for characters aren’t just individual pieces. Grabbing Woody’s foot and moving it up and down also articulates his other joints, and the fabric in surrounding areas.

As a long-time Pixar fan, I couldn’t immediately point out any artifacts or graphical oddities in the live demo. It helps that it was just Woody and Buzz on a gray background, but textures were sharp, animation was clean, and reflections were accurate and realistic. Even a close-up focused on Woody’s badge looked spot-on. And it all happened in real time.

Harnessing collaborative power

One of Presto’s early limitations was its inability to handle collaborative work, so Pixar set out to bring that functionality into its workflow. The result is Universal Scene Description, or USD. This collaborative interface allows many Pixar artists to work on the same scene or model, but on different layers, without stepping on each other’s toes.

Because USD manages each aspect of the scene individually (the background, the rigging, the shading, and more), an animator can work on a scene while an artist touches up the characters’ look. Both sets of changes will be reflected in renders across the board. Instead of frames, scenes are described in terms of layers and references, a much more modular approach than traditional 3D modeling.
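
A toy model makes the layering idea concrete. The sketch below is not USD’s actual API. Real USD stages are built with Pixar’s `pxr` libraries and follow a richer set of composition rules. This sketch simply assumes that each department writes its opinions into its own layer, and that for any attribute the strongest layer with an opinion wins. The prim paths, attribute names, and values are hypothetical.

```python
from typing import Any, Dict, List, Optional, Tuple

# Toy model of a layer stack, loosely inspired by USD (NOT the pxr API).
# A layer maps (prim_path, attribute_name) -> value.
Layer = Dict[Tuple[str, str], Any]


def resolve(layers: List[Layer], prim: str, attr: str) -> Optional[Any]:
    """Return the value from the strongest layer with an opinion, else None.

    `layers` is ordered strongest first.
    """
    for layer in layers:
        if (prim, attr) in layer:
            return layer[(prim, attr)]
    return None


# Hypothetical department layers for one shot.
animation: Layer = {("/Shot/Dory", "translate"): (0.0, 1.5, -3.0)}
shading: Layer = {("/Shot/Dory", "baseColor"): (0.2, 0.4, 0.9)}
model: Layer = {
    ("/Shot/Dory", "translate"): (0.0, 0.0, 0.0),
    ("/Shot/Dory", "baseColor"): (0.5, 0.5, 0.5),
}

stack = [animation, shading, model]  # strongest to weakest

print(resolve(stack, "/Shot/Dory", "translate"))  # (0.0, 1.5, -3.0), from animation
print(resolve(stack, "/Shot/Dory", "baseColor"))  # (0.2, 0.4, 0.9), from shading
```

The animator and the shading artist never touch the same layer, yet the composed result carries both of their changes. The model layer’s defaults show through wherever no stronger layer has an opinion.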

USD was first deployed at Pixar in the production of the upcoming film Finding Dory, and quickly became an integral part of the workflow. Its success hasn’t been limited to Pixar, and programs like Maya and Katana are already integrating USD. Assets in these programs can be moved and copied freely, but that’s not all there is to the story.

Van Gelder showed how Pixar is taking USD a step further with a new program called USDView. It’s meant for quick debugging and general staging, but it’s becoming increasingly sophisticated. In a demo, USDView opened a short scene with 52 million polygons from Finding Dory in just seconds on a mobile workstation.

In fact, Van Gelder did it several times just to stress how snappy the software is. It’s not just a quick preview, either. There’s a limited set of controls for playback and camera movement, but it’s a great way for artists to get an idea of the blocking or staging of a scene without needing to launch it in Presto.

USD, with USDView built in, will launch as open-source software this summer. It will initially be available for Linux, but Pixar hopes to release it for Windows and Mac OS X later on.

Multiplying polygons

One of the main methods of refining 3D models is subdivision. Each round of subdivision breaks polygons down into smaller ones and redefines their shape. That increases the complexity of the render, but also its accuracy and level of detail. In video games, there’s a limit to how far subdivision can go before it hurts performance. In Pixar’s movies, though, the sky’s the limit.

To show how far subdivision can go, Jeremias started with a simple 48-polygon mesh. After one round of subdivision, the mesh looked much cleaner and sported 384 polygons. After another round, the shape had smoothed out completely, but at the cost of over 1.5 million polygons. Jeremias noted that subdivision is most noticeable at contact points between two models, and especially on a character’s fingertips.
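
A common scheme for this kind of smoothing is Catmull-Clark subdivision. The sketch below implements one level of it in plain Python. It is a textbook version, not Pixar’s code, and it assumes a closed, manifold mesh with no creases or boundaries, cases that production subdivision libraries must also handle. Each step adds new points and then relaxes the original vertices toward them. That combination is what makes the surface smoother while the polygon count climbs.

```python
from collections import defaultdict


def _avg(points):
    """Component-wise average of a list of 3D points."""
    n = len(points)
    return tuple(sum(p[i] for p in points) / n for i in range(3))


def catmull_clark(vertices, faces):
    """One level of Catmull-Clark subdivision.

    Assumes a closed, manifold polygon mesh (every edge shared by exactly two
    faces); boundaries and creases are not handled.
    vertices: list of (x, y, z); faces: list of tuples of vertex indices.
    Returns (new_vertices, new_faces); every new face is a quad.
    """
    # Face points: the centroid of each face.
    face_points = [_avg([vertices[i] for i in f]) for f in faces]

    # Record which faces touch each undirected edge and each vertex.
    edge_faces = defaultdict(list)
    vert_faces = defaultdict(list)
    for fi, f in enumerate(faces):
        for a, b in zip(f, f[1:] + f[:1]):
            edge_faces[frozenset((a, b))].append(fi)
        for v in f:
            vert_faces[v].append(fi)

    # Edge points: average of the edge's endpoints and its two adjacent face points.
    edge_points = {
        e: _avg([vertices[v] for v in e] + [face_points[fi] for fi in fis])
        for e, fis in edge_faces.items()
    }

    # Edges touching each vertex, used by the vertex-update rule below.
    vert_edges = defaultdict(list)
    for e in edge_faces:
        for v in e:
            vert_edges[v].append(e)

    # Move each original vertex to (F + 2R + (n - 3)P) / n, where F averages the
    # adjacent face points, R averages the adjacent edge midpoints, n is the valence.
    new_vertices = []
    for vi, p in enumerate(vertices):
        n = len(vert_faces[vi])
        F = _avg([face_points[fi] for fi in vert_faces[vi]])
        R = _avg([_avg([vertices[v] for v in e]) for e in vert_edges[vi]])
        new_vertices.append(tuple((F[k] + 2 * R[k] + (n - 3) * p[k]) / n for k in range(3)))

    # Append face points and edge points, remembering their new indices.
    fp_index = {}
    for fi, fp in enumerate(face_points):
        fp_index[fi] = len(new_vertices)
        new_vertices.append(fp)
    ep_index = {}
    for e, ep in edge_points.items():
        ep_index[e] = len(new_vertices)
        new_vertices.append(ep)

    # Each n-sided face becomes n quads: corner, next edge point, face point, previous edge point.
    new_faces = []
    for fi, f in enumerate(faces):
        k = len(f)
        for j in range(k):
            prev_v, v, next_v = f[j - 1], f[j], f[(j + 1) % k]
            new_faces.append((v, ep_index[frozenset((v, next_v))],
                              fp_index[fi], ep_index[frozenset((prev_v, v))]))
    return new_vertices, new_faces


# A cube centered at the origin: 8 corners and 6 outward-facing quads.
CUBE_VERTS = [(x, y, z) for x in (-1, 1) for y in (-1, 1) for z in (-1, 1)]
CUBE_FACES = [(0, 1, 3, 2), (4, 6, 7, 5), (0, 4, 5, 1),
              (2, 3, 7, 6), (0, 2, 6, 4), (1, 5, 7, 3)]

verts, quads = catmull_clark(CUBE_VERTS, CUBE_FACES)
print(len(verts), len(quads))  # 26 24
verts, quads = catmull_clark(verts, quads)
print(len(verts), len(quads))  # 98 96
```

On the cube, the corners pull inward from ±1 to ±5/9 after one level, and every n-sided face turns into n quads. For an all-quad mesh, that means the face count quadruples with each level (6, 24, 96, and so on). Exact counts in practice depend on the scheme and the starting mesh.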

Pixar relies on subdivision so heavily that the company built its own subdivision engine, OpenSubdiv. It’s based on Pixar’s original RenderMan libraries, but features a much broader API. It was also designed with USD in mind, for easy integration into the workflow.

Summoning the Hydra

If you want to see how those elements add up without having to render a final scene, Hydra is the answer. It’s Pixar’s real-time rendering engine, built on top of OpenGL 4.4 specifically for feature-length film production, and designed for speed.

It isn’t a replacement for final rendering, but it can bring together many effects and details to give a more accurate picture of what a scene will look like than USDView can provide. It also supports features like hardware-tessellated curves, highlighting, and hardware instance management.
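
Instancing is what keeps crowds, such as the schools of fish in Finding Nemo, manageable. The prototype mesh is stored once, and each copy keeps only its own transform. The comparison below is a hypothetical illustration, not a description of Hydra’s internal data layout. The mesh size, the school size, and the 18-bytes-per-triangle figure are all assumptions.

```python
# Hypothetical comparison of duplicated geometry versus instancing.
# None of these sizes come from Pixar; they are illustrative assumptions.

MATRIX_BYTES = 16 * 4  # one 4x4 transform of 32-bit floats per instance


def copied_bytes(mesh_bytes: int, count: int) -> int:
    """Every instance stores its own full copy of the mesh."""
    return mesh_bytes * count


def instanced_bytes(mesh_bytes: int, count: int) -> int:
    """One shared prototype mesh plus a transform for each instance."""
    return mesh_bytes + MATRIX_BYTES * count


fish_mesh = 50_000 * 18  # hypothetical 50,000-triangle fish at ~18 bytes per triangle
school = 10_000          # hypothetical number of fish in the school

print(copied_bytes(fish_mesh, school))     # 9000000000
print(instanced_bytes(fish_mesh, school))  # 1540000
```

The copied version grows by the full mesh size for every fish. The instanced version grows by only 64 bytes per fish, which is why large crowds become practical to display interactively.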

Other effects and media companies have even been working with Pixar to integrate Hydra into their workflows. Industrial Light and Magic, the special effects company behind the Star Wars films, has built a hybrid version of its software around Pixar’s technology. In the case of the Millennium Falcon, that means 14,500 meshes and 140 textures at 8K each. That’s no small feat, even for extreme workstations.
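
The texture count alone gives a sense of the scale. The sketch below is an estimate under our own assumptions, since the formats ILM actually uses weren’t described. It treats “8K” as 8,192 by 8,192 texels with four 8-bit channels and no compression. On that basis, each texture needs 256MiB, and 140 of them come to 35GiB before mipmaps, more than three times the 12GB on a Quadro M6000.

```python
# Uncompressed texture memory estimate.
# Assumptions (ours): square textures, RGBA at 1 byte per channel, no compression.


def texture_bytes(size: int, channels: int = 4, bytes_per_channel: int = 1,
                  mipmapped: bool = False) -> int:
    """Bytes for one size-by-size texture, optionally including a full mip chain."""
    if size < 1:
        raise ValueError("size must be at least 1")
    total = 0
    level = size
    while True:
        total += level * level * channels * bytes_per_channel
        if not mipmapped or level == 1:
            break
        level //= 2  # each mip level halves the resolution
    return total


GIB = 2**30
per_texture = texture_bytes(8192)
print(per_texture // 2**20)     # 256 (MiB per texture)
print(140 * per_texture / GIB)  # 35.0 (GiB for all 140)
print(140 * texture_bytes(8192, mipmapped=True) / GIB)  # about 46.7 with mipmaps
```

A full mip chain adds roughly a third on top of the base level, so real-time display of assets like this depends on compression, streaming, or both.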

It’s not just about creating the models and animating them, however. A huge part of setting the mood and polishing a film involves post-processing effects. The artists and developers at Pixar wanted an equally intuitive and streamlined process for adding and managing effects.

And there are quite a few to manage. Cowles showed off a list of post-processing effects that wouldn’t look out of place in Crysis’ graphics settings, including ambient occlusion, depth of field, soft shadows, motion blur, a handful of lighting effects, and masks and filters in a variety of flavors. In a close look at a rendering of an underwater scene with Dory and Nemo, the cumulative impact of these extras adds up quickly.
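
Depth of field is a good example of how one of these effects works. A common approach computes how large a blur disc, or circle of confusion, each point should get from its distance to the plane of focus, using the thin-lens model. This is generic optics, not a description of Hydra’s implementation, and the camera settings below are hypothetical.

```python
# Thin-lens circle of confusion: how large a blur disc a point at a given
# distance produces on the sensor. Generic optics, not Hydra's code.


def circle_of_confusion_mm(focal_mm: float, f_number: float,
                           focus_mm: float, subject_mm: float) -> float:
    """Blur-disc diameter in millimetres for a thin lens.

    focal_mm:   focal length of the lens
    f_number:   aperture setting; aperture diameter = focal_mm / f_number
    focus_mm:   distance at which the lens is focused (must exceed focal_mm)
    subject_mm: distance to the point being rendered
    """
    aperture_mm = focal_mm / f_number
    return (aperture_mm * abs(subject_mm - focus_mm) / subject_mm
            * focal_mm / (focus_mm - focal_mm))


# Hypothetical camera: 50mm lens at f/2, focused 2m away.
for distance in (1000, 2000, 4000, 8000):
    print(distance, round(circle_of_confusion_mm(50, 2, 2000, distance), 4))
```

Points exactly at the focus distance get a blur disc of zero, and the disc grows as points move away from that plane. A post-process pass can then, for example, blur each pixel by an amount scaled to this value.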

Real time, a recent development

Today, a lot of Pixar’s articulation, animation, effects, and subdivision happen in real time. That wasn’t always the case. Van Gelder demonstrated this by turning off the features that can now be previewed instantly with the modern tool set. Shadows were gone, major details like pupils and markings disappeared, and all but the most basic color blocking vanished.

That example drove home the massive scale of each scene in these movies. The complexity of even a small scene far outstrips that of the most advanced video games, and the payoff is immense.

Even with all of that impressive hardware and purpose-built software, some of the most complex frames in Finding Dory took over 600 hours to finish. It’s a cost that companies like Pixar have to consider in the budget for a film, but in-house, purpose-built software helps streamline the important areas.