When we think of the best graphics cards in this day and age, GPUs like the RTX 4090 or the RX 7900 XTX come to mind. However, for these beastly cards to be able to run, some much older GPUs had to crawl, and then walk, to get us to where we are today.

Looking back, the history of graphics cards shows us a few landmark GPUs that redefined graphics technology. Let’s take a trip back to 1996 and subsequent years to explore the graphics cards that made a difference, had an impact, and were smash hits with enthusiasts all around the globe.

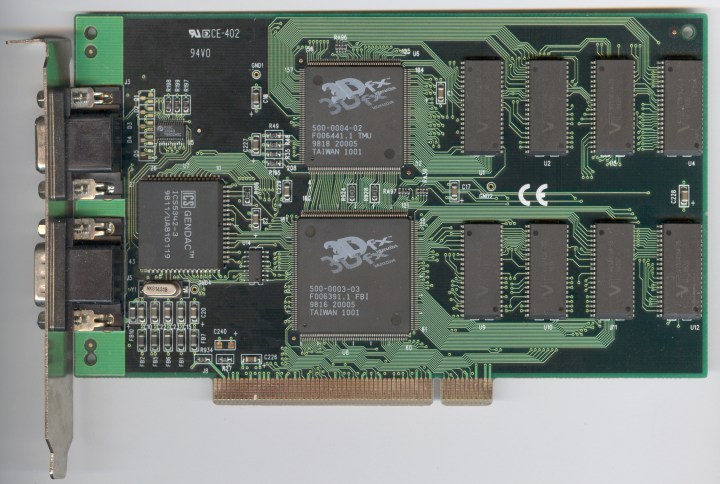

3dfx Voodoo

The 3dfx Voodoo marked the dawn of consumer 3D graphics acceleration. With a modest 50MHz clock speed, 4MB or 6MB of memory, and a 500nm process node, this graphics accelerator may not sound like much. However, it opened up gaming to a whole new market when it launched in 1996.

The card was primarily designed for gamers. Its ability to render complex 3D environments was groundbreaking at the time, even if the specs sound laughable by today’s standards. Titles that supported Voodoo’s Glide API delivered an unmatched 3D visual experience at resolutions of up to 800 x 600 on the 6MB model, or 640 x 480 on the 4MB model.

However, the 3dfx Voodoo (later often called the Voodoo 1) was not without faults. Because it was a 3D-only accelerator, buyers still needed a separate 2D card. Without one, the Voodoo couldn’t display anything at all, which made it more of an enthusiast solution. Like most graphics hardware of the era, it also struggled with heat.

Thanks to this accelerator, 3dfx became a market leader for several years, although the company later released one of the worst GPUs of all time, the 3dfx Voodoo Rush. Ultimately, while its innovations were monumental and shaped the graphics landscape, 3dfx Interactive didn’t survive the volatile GPU market of the late 1990s and early 2000s. Nvidia bought most of its assets in late 2000, mainly to secure its intellectual property.

ATI Radeon 9700 Pro

Just a few years later, in 2002, ATI’s Radeon 9700 Pro was the king of graphics for a while — and it certainly had much better specs than the 3dfx Voodoo. Based on a 150nm process node, it had a core clock of 325MHz and 128MB of DDR memory across a 256-bit interface. It could support resolutions of up to 2,048 x 1,536.

More importantly, it was the first graphics card to support DirectX 9.0, but that’s not all. This is the GPU that popularized anti-aliasing (AA) and anisotropic filtering (AF). Before this card, those features weren’t really mainstream; the 9700 Pro made them practical to turn on. It also outperformed competitors such as Nvidia’s GeForce4 Ti 4600 by up to 100% with AA and AF enabled.

ATI’s early adoption of DirectX 9 allowed it to stand out compared to Nvidia’s GeForce4 Ti series, which didn’t offer hardware support for DX9. This was a period of time when ATI, later AMD, dominated Nvidia like never before, releasing a generation of graphics cards that defeated Nvidia with ease. The Radeon 9700 Pro was followed by the Radeon 9800 Pro, which was also a massive success among gamers and reviewers alike.

ATI went on to trade blows with Nvidia for a few more years, until it was acquired by AMD in 2006. The ATI brand name was killed off in 2010, but Radeon still remains in the names of AMD’s graphics cards.

Nvidia GeForce 8800 GTX

We’re moving on to 2006, when the battle between Nvidia and ATI (later AMD) was at its peak. This is when Nvidia launched the GeForce 8800 GTX, a massive (for the time) graphics card that introduced a GPU design approach still in use today. Launching as one of two flagships of the GeForce 8 series, the 8800 GTX was an enthusiast-level GPU with some fierce gaming capabilities.

The entire lineup was exceptional for its time, but the 8800 GTX is remembered as a monster flagship with some intense specs. It was clocked at 575MHz and featured 768MB of GDDR3 VRAM across a 384-bit memory bus, and it could handle resolutions of up to 2,560 x 1,600. More importantly, it introduced a unified shader architecture, propelling the GPU into a new era of graphics capabilities with unparalleled performance for its time.

Before the GeForce 8 series, graphics cards used separate, dedicated units for different types of shading work, such as vertex and pixel shaders. The unified architecture, which modern GPUs still use, replaced them with a single pool of general-purpose shader processors that can run any of these tasks. Because no units sit idle waiting for one specific kind of work, the GPU can spread the load more evenly and process it more efficiently.

Moreover, the 8800 GTX was the first graphics card to support DirectX 10, a huge upgrade over DirectX 9. All in all, it was a hit upon release, and it stayed relevant for years even after newer GPUs hit the market.

AMD Radeon R9 290

When AMD launched the Radeon R9 290 in 2013, the graphics landscape was already vastly different from what we saw in the late 1990s and the early 2000s. It featured a clock speed of up to 947MHz and 4GB of GDDR5 memory across a 512-bit bus. For comparison, Nvidia has a version of the RTX 3050 that also sports 4GB of VRAM (and a 128-bit interface), although that’s GDDR6. The R9 290 could also handle resolutions of up to 4K, so it’s a lot closer to the top GPUs we’re seeing these days than any of the previous cards on this list.

Thanks to its wide 512-bit memory interface, the R9 290 offered high memory bandwidth, which helped it handle high resolutions and demanding settings. It also supported AMD’s Mantle API, an early low-level graphics API that later influenced the development of Vulkan; some also claim that Mantle influenced DirectX 12. The Radeon R9 290 was also one of the first GPUs to include dedicated audio processing hardware, through AMD’s TrueAudio technology.
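To put the memory interface in numbers: theoretical peak bandwidth is the bus width in bytes multiplied by the memory’s effective per-pin data rate. The short Python sketch below applies that formula. The data rates it uses (5 Gbps GDDR5 for the R9 290 and 6 Gbps GDDR5 for Nvidia’s rival GeForce GTX 780) are the cards’ reference memory specs rather than figures from this article, so treat them as assumptions; factory-overclocked models will differ.

```python
def peak_bandwidth_gbs(bus_width_bits: int, data_rate_gbps: float) -> float:
    """Theoretical peak memory bandwidth in GB/s.

    bus_width_bits / 8 converts the interface width into bytes per transfer;
    multiplying by the effective per-pin data rate (Gb/s) yields GB/s.
    """
    return bus_width_bits / 8 * data_rate_gbps


# Assumed reference memory specs (not from the article):
# (bus width in bits, effective data rate in Gb/s per pin)
CARDS = {
    "Radeon R9 290": (512, 5.0),
    "GeForce GTX 780": (384, 6.0),
}

for name, (bus_bits, rate) in CARDS.items():
    print(f"{name}: {peak_bandwidth_gbs(bus_bits, rate):.0f} GB/s")
# Radeon R9 290: 320 GB/s
# GeForce GTX 780: 288 GB/s
```

Even though the GTX 780’s memory ran faster per pin, the R9 290’s wider bus gave it more total bandwidth on paper.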

The legacy of the AMD R9 290 lies not just in new features, but in the birth of AMD’s performance-per-dollar approach. It delivered high-end performance at a much more affordable price ($400) than enthusiasts were used to at the time. According to AnandTech’s review, the R9 290 delivered 106% of the performance of the Nvidia GeForce GTX 780 for $100 less. Let’s not forget that it was almost as powerful as the R9 290X while being $150 cheaper.
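The value argument is easy to sanity-check with back-of-the-envelope arithmetic: divide each card’s relative performance by its price. This sketch treats the GTX 780 as the 1.0 baseline and uses only the figures quoted above (106% of its performance at $400, with the GTX 780 costing $100 more, so $500). It ignores street prices, bundles, and per-game variance, so read it as a rough illustration rather than a benchmark.

```python
def perf_per_dollar(relative_perf: float, price_usd: float) -> float:
    """Relative performance delivered per US dollar of launch price."""
    return relative_perf / price_usd


# Figures from the AnandTech comparison cited above; GTX 780 is the 1.0 baseline.
r9_290 = perf_per_dollar(1.06, 400)   # 106% of a GTX 780 for $400
gtx_780 = perf_per_dollar(1.00, 500)  # baseline card, $100 more expensive

advantage = r9_290 / gtx_780
print(f"R9 290 offers {advantage:.3f}x the GTX 780's performance per dollar")
# R9 290 offers 1.325x the GTX 780's performance per dollar
```

By that rough measure, the R9 290 offered about 32% more performance per dollar than its Nvidia rival.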

The high-end gaming GPU market was never cheap, and these days it’s pricier than ever. But with the R9 290, AMD showed the masses that it’s entirely possible to make a premium GPU and price it aggressively enough to send Nvidia scrambling to offer discounts. Let’s have more of that in 2024, please.

Nvidia GeForce GTX 1080 Ti

Ah, the GTX 1080 Ti, the cherry on top of Nvidia’s excellent Pascal architecture. That entire generation of GPUs aged so well that its cards still rank high in the monthly Steam Hardware Survey, and I only got rid of my own GTX 1060 a few months ago. And yes, I do play AAA games.

Nvidia’s flagship, launched in 2017, came with a boost clock of 1,582MHz and a massive (at the time, and even today) 11GB of GDDR5X memory across a 352-bit bus. With 3,584 CUDA cores and support for up to 8K resolution, this GPU still holds up well and outperforms plenty of budget graphics cards.

To this day, I think the GTX 1080 Ti was one of Nvidia’s greatest achievements. At 250 watts, the same power rating as the Titan X (Pascal), the GPU matched or beat that far pricier card. That’s not all: when Nvidia launched the Titan Xp shortly afterward, it was only a few percent faster than the GTX 1080 Ti. At $1,200 for the Titan and $700 for the 1080 Ti, the choice was obvious.

At its peak, the GTX 1080 Ti was every gamer’s goal, and using two of them via Nvidia’s SLI was the dream that some enthusiasts splurged on. While SLI is pretty much dead now, and using two 1080 Ti GPUs was never worth it, the card was a powerhouse that redefined the idea of high-end gaming. If only we could get that kind of relative performance for just $700 these days. Of course, the $700 stung more in 2017, when GPUs were generally cheaper.

Nvidia GeForce RTX 3060 Ti

Nvidia’s RTX 3060 Ti is no longer one of the best graphics cards. It’s largely been replaced by the RTX 40-series, or, on the budget side of things, by AMD’s RX 7000 series. However, it’s such a good GPU that I’d still recommend it over many current-gen offerings — provided you can find a good deal on it.

The RTX 3060 Ti was one of the first cards to offer reasonable ray tracing performance at a mainstream price. Priced at $400 at launch, it has a boost clock of 1,665MHz, 8GB of GDDR6 memory on a 256-bit bus, and 4,864 CUDA cores. It’s sometimes faster than its disappointing successor, the RTX 4060 Ti, and even when it isn’t, it keeps up. Considering that the RTX 4060 Ti arrived two and a half years later, that speaks volumes about the RTX 3060 Ti’s capabilities.

This was also one of the first GPU releases where upgrading from the GTX 1080 Ti even made any sense. It’s a testament to the power of Pascal that the RTX 3060 Ti is only about 15% faster than the 1080 Ti — but it offers access to all of Nvidia’s RTX software stack, including the ever-impressive DLSS.

The impact of the RTX 3060 Ti may seem smaller now that Nvidia has introduced DLSS 3, whose frame generation, available only on RTX 40-series cards, goes well beyond DLSS 2’s upscaling. However, even if the RTX 3060 Ti didn’t introduce any standout features of its own, it was a landmark GPU for its generation, offering access to AAA gaming on a budget while the GPU shortage ravaged the entire market. It’s still the fifth-most popular graphics card in the Steam Hardware Survey, and it remains a sensible option for a budget PC build. That’s saying a lot for a GPU that launched at the end of 2020.