People were in awe when ChatGPT came out, impressed by the natural language abilities of an AI chatbot originally powered by the GPT-3.5 large language model. But when the highly anticipated GPT-4 large language model arrived, it blew the lid off what we thought was possible with AI, with some calling it an early glimpse of AGI (artificial general intelligence).

What is GPT-4?

GPT-4 is the latest generation of OpenAI’s language models, with GPT-4o being the most recent version. It advances the technology used by ChatGPT, which was originally based on GPT-3.5 but has since been updated. GPT stands for Generative Pre-trained Transformer, a deep learning technology that uses artificial neural networks to write like a human.

According to OpenAI, this next-generation language model is more advanced than its predecessor, GPT-3.5, in three key areas: creativity, visual input, and longer context. In terms of creativity, OpenAI says GPT-4 is much better at both creating and collaborating with users on creative projects. Examples include music, screenplays, technical writing, and even “learning a user’s writing style.”

The longer context plays into this as well. GPT-4 can now process up to 128k tokens of text from the user. You can even just send GPT-4 a web link and ask it to interact with the text from that page. OpenAI says this can be helpful for the creation of long-form content, as well as “extended conversations.”
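To get a feel for what a 128k-token limit means in practice, here is a minimal sketch that estimates whether a piece of text would fit. It assumes roughly four characters per token, which is only a rule of thumb for English text and not the model's real tokenizer; the function names and the reserved reply budget are hypothetical, chosen for illustration.

```python
# Rough check of whether a text fits in a 128k-token context window.
# ASSUMPTION: ~4 characters per token is only a rule of thumb for English
# text; the model's real tokenizer will produce different counts.

CONTEXT_WINDOW_TOKENS = 128_000  # limit quoted in the article
CHARS_PER_TOKEN = 4              # assumed average, not an exact figure


def estimate_tokens(text: str) -> int:
    """Return an approximate token count for text, rounded up."""
    return -(-len(text) // CHARS_PER_TOKEN)


def fits_in_context(text: str, reserved_for_reply: int = 4_000) -> bool:
    """True if the estimate still leaves reserved_for_reply tokens for the answer."""
    return estimate_tokens(text) + reserved_for_reply <= CONTEXT_WINDOW_TOKENS


if __name__ == "__main__":
    draft = "word " * 50_000  # 250,000 characters of filler text
    print(estimate_tokens(draft), fits_in_context(draft))
```

For an exact count you would need the tokenizer that matches the model; the estimate above is only useful as a quick sanity check before pasting in a long document.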

GPT-4 can also view and analyze uploaded images. In the example provided on the GPT-4 website, the chatbot is given an image of a few baking ingredients and is asked what can be made with them. ChatGPT cannot, however, analyze video clips in the same way.

OpenAI also says GPT-4 is significantly safer to use than the previous generation. In OpenAI’s own internal testing, it was reportedly 40 percent more likely to produce factual responses, while also being 82 percent less likely to “respond to requests for disallowed content.”

OpenAI says it’s been trained with human feedback to make these strides, claiming to have worked with “over 50 experts for early feedback in domains including AI safety and security.”

In the initial weeks after it first launched, users posted some of the amazing things they’ve done with it, including inventing new languages, detailing how to escape into the real world, and making complex animations for apps from scratch. One user apparently made GPT-4 create a working version of Pong in just sixty seconds, using a mix of HTML and JavaScript.

GPT-4’s inference capabilities have enabled OpenAI to roll out a host of new features and capabilities to its ChatGPT platform in recent months. In September, for example, the company released its long-awaited Advanced Voice Mode, which enables users to converse with the AI without the need for text-based prompts. The feature arrived on PC and Mac desktops in late October. The company is currently working to integrate video feeds from the device’s camera into AVM’s interface as well.

The company also recently rolled out two new search capabilities. Chat History Search allows users to reference and recall details from previous conversations with the AI. ChatGPT Search, by contrast, scours the web to provide conversational, up-to-date answers directly to a user’s query, rather than a list of potential websites as Google Search does. Early results from ChatGPT Search have been rather disappointing, as the system struggles to return accurate answers.

OpenAI also plans to roll out its new AI agent feature in January. The feature will empower the chatbot to take independent action, such as summarizing meetings and generating follow-up action lists, or booking flights, restaurants, and hotels. That release might coincide with the launch of OpenAI’s next-generation model, code named “Orion.”

How to use GPT-4

GPT-4 is available to all users at every subscription tier OpenAI offers. Free tier users have limited access to the full GPT-4 model (~80 chats within a 3-hour period) before being switched to the smaller and less capable GPT-4o mini until the cooldown timer resets. To gain additional access to GPT-4, as well as the ability to generate images with DALL-E, upgrade to ChatGPT Plus. To move up to the $20-per-month paid subscription, just click “Upgrade to Plus” in the ChatGPT sidebar. Once you’ve entered your credit card information, you’ll be able to toggle between GPT-4 and older versions of the LLM.

If you don’t want to pay, there are some other ways to get a taste of how powerful GPT-4 is. First off, you can try it out as part of Microsoft’s Bing Chat. Microsoft revealed that it’s been using GPT-4 in Bing Chat, which is completely free to use. Some GPT-4 features are missing from Bing Chat, however, and it’s clearly been combined with some of Microsoft’s own proprietary technology. But you’ll still have access to that expanded LLM and the advanced intelligence that comes with it. It should be noted that while Bing Chat is free, it is limited to 15 chats per session and 150 sessions per day.

There are lots of other applications that are currently using GPT-4, too, such as the question-answering site, Quora.

When was GPT-4 released?

GPT-4 was officially announced on March 14, 2023, as Microsoft had confirmed ahead of time, and first became available to users through a ChatGPT Plus subscription and Microsoft Copilot. GPT-4 has also been made available as an API “for developers to build applications and services.” Some of the companies that have already integrated GPT-4 include Duolingo, Be My Eyes, Stripe, and Khan Academy. The first public demonstration of GPT-4 was livestreamed on YouTube, showing off its new capabilities.

What is GPT-4o mini?

GPT-4o mini is the newest iteration of OpenAI’s GPT-4 model line. It’s a streamlined version of the larger GPT-4o model that is better suited for simple but high-volume tasks that benefit more from a quick inference speed than they do from leveraging the power of the entire model.

GPT-4o mini was released in July 2024 and has replaced GPT-3.5 as the default model users interact with in ChatGPT once they hit their three-hour limit of queries with GPT-4o. Per data from Artificial Analysis, 4o mini significantly outperforms similarly sized small models like Google’s Gemini 1.5 Flash and Anthropic’s Claude 3 Haiku in the MMLU reasoning benchmark.

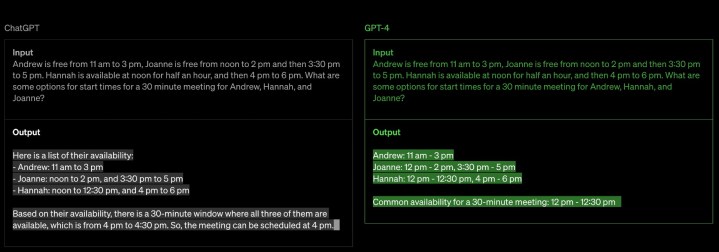

Is GPT-4 better than GPT-3.5?

The free version of ChatGPT was originally based on the GPT-3.5 model; however, as of July 2024, ChatGPT runs on GPT-4o mini. This streamlined version of the larger GPT-4o model is much better than even GPT-3.5 Turbo. It can understand and respond to more inputs, has more safeguards in place, provides more concise answers, and is 60% less expensive to operate.

The GPT-4 API

GPT-4 is available as an API to developers who have made at least one successful payment to OpenAI in the past. The company offers several versions of GPT-4 for developers to use through its API, along with legacy GPT-3.5 models. Upon releasing GPT-4o mini, OpenAI noted that GPT-3.5 will remain available for use by developers, though it will eventually be taken offline. The company did not set a timeline for when that might actually happen.

The API is mostly aimed at developers making new apps, but it has caused some confusion for consumers, too. Plex, for example, allows you to integrate ChatGPT into the service’s Plexamp music player, which calls for a ChatGPT API key. API access is a separate purchase from ChatGPT Plus, so you’ll need to sign up for a developer account if you want it.
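For readers curious what using an API key actually looks like, here is a minimal sketch of a request to OpenAI’s Chat Completions endpoint, built with Python’s standard library only. The helper names, the prompt, and the choice of `gpt-4o-mini` as the default model are assumptions made for illustration; the code only sends a request if you call `send_chat_request` yourself with a valid key from a developer account.

```python
import json
import urllib.request

# Chat Completions endpoint of the OpenAI API.
API_URL = "https://api.openai.com/v1/chat/completions"


def build_chat_request(prompt: str, api_key: str,
                       model: str = "gpt-4o-mini") -> urllib.request.Request:
    """Build (but do not send) a chat request. The default model is an assumption."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        API_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            # The API key, not a ChatGPT Plus login, authenticates the call.
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def send_chat_request(request: urllib.request.Request) -> str:
    """Send the request and return the text of the first reply (needs a real key)."""
    with urllib.request.urlopen(request) as response:
        body = json.load(response)
    return body["choices"][0]["message"]["content"]


if __name__ == "__main__":
    # Placeholder key: this only prints the JSON body and makes no network call.
    demo = build_chat_request("Say hello in one sentence.", "sk-your-key-here")
    print(demo.data.decode("utf-8"))
```

Keeping the request builder separate from the sender makes it easy to inspect exactly what would be sent before any usage is billed to the developer account.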

Is GPT-4 getting worse?

As much as GPT-4 impressed people when it first launched, some users noticed a degradation in its answers over the following months. The issue was raised by prominent figures in the developer community and was even posted directly to OpenAI’s forums. It was all anecdotal, though, and an OpenAI executive even took to X to dispute the premise. According to OpenAI, it’s all in our heads.

No, we haven't made GPT-4 dumber. Quite the opposite: we make each new version smarter than the previous one.

Current hypothesis: When you use it more heavily, you start noticing issues you didn't see before.

— Peter Welinder (@npew) July 13, 2023

Then, a subsequently published study suggested that the quality of answers had indeed worsened with later updates of the model. By comparing GPT-4’s behavior in March and June 2023, the researchers found that its accuracy on one task, identifying prime numbers, went from 97.6% down to 2.4%.

In November 2024, GPT-4o’s capabilities were again called into question. “We have completed running our independent evals on OpenAI’s GPT-4o release yesterday and are consistently measuring materially lower eval scores than the August release of GPT-4o,” Artificial Analysis announced via an X post at the time, noting that the model’s Artificial Analysis Quality Index score had fallen to roughly the level of the company’s smaller GPT-4o mini model. GPT-4o’s score on the GPQA Diamond benchmark similarly dropped roughly 12 points, from 51% to 39%, while its MATH benchmark score decreased from 78% to 69%. On the other hand, the researchers found that GPT-4o’s response speed to user queries nearly doubled over the same time period.

Where is the visual input in GPT-4?

One of the most anticipated features in GPT-4 is visual input, which allows ChatGPT Plus to interact with images, not just text, making the model truly multimodal. Uploading images for GPT-4 to analyze and manipulate is just as easy as uploading documents: simply click the paperclip icon to the left of the context window, select the image source, and attach the image to your prompt.
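Developers can attach images through the API as well. The sketch below shows one way to package a question and an image into a single user message, assuming the Chat Completions message format in which an image is passed as an `image_url` content part containing a base64 data URL. The function name and the sample question are hypothetical, and the code only builds the message; it does not contact OpenAI.

```python
import base64


def build_image_message(question: str, image_bytes: bytes,
                        mime_type: str = "image/png") -> dict:
    """Return a user message that pairs a text question with an inline image."""
    encoded = base64.b64encode(image_bytes).decode("ascii")
    return {
        "role": "user",
        "content": [
            {"type": "text", "text": question},
            {
                "type": "image_url",
                # The image travels inline as a base64-encoded data URL.
                "image_url": {"url": f"data:{mime_type};base64,{encoded}"},
            },
        ],
    }


if __name__ == "__main__":
    # Stand-in bytes; in practice you would read a real image file from disk.
    fake_image = b"\x89PNG placeholder"
    message = build_image_message("What can I make with these ingredients?", fake_image)
    print(message["content"][1]["image_url"]["url"][:40])
```

The resulting dictionary would go into the `messages` list of a chat request to a model that accepts image input.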

What are GPT-4’s limitations?

While discussing the new capabilities of GPT-4, OpenAI also notes some of the limitations of the new language model. Like previous versions of GPT, OpenAI says the latest model still has problems with “social biases, hallucinations, and adversarial prompts.”

In other words, it’s not perfect. It’ll still get answers wrong, and there have been plenty of examples shown online that demonstrate its limitations. But OpenAI says these are all issues the company is working to address, and in general, GPT-4 is “less creative” with answers and therefore less likely to make up facts.

The other primary limitation is that the GPT-4 model was trained on internet data up until December 2023 (GPT-4o and 4o mini cut off at October of that year). However, since GPT-4 is capable of conducting web searches and not simply relying on its pretrained data set, it can easily search for and track down more recent facts from the internet.

GPT-4o is the latest release of the GPT-4 family (not counting the new o1) and GPT-5 is still incoming.