We all know that ChatGPT is great at speeding up mundane tasks. What could be drier than explaining the rules of a complicated game at board game night?

There’s no substitute for just knowing the game, but being able to reach for AI instead of the rulebook could make things a whole lot easier. Nothing derails a great game of Twilight Imperium like breaking out the Living Rules and endlessly scrolling. So, if I were to bring ChatGPT to board game night, I could definitely see it coming in handy. But before I subjected my friends to a robot reading them the rules, I decided to test it out with some basic questions to see if it was up to snuff.

A defined ruleset sounds ideal

I have no idea why ChatGPT knows the rules of so many games, but it does. Or at least thinks it does. While it might be tricky to find an online manual for some of my collection, ChatGPT seems to have it all — some of those billions of data points it was trained on reportedly included the errata for something as obscure as the third Battlestar Galactica expansion.

That’s great, though, because it should know all the rules I don’t, right? A board game is a limited, constrained, and very particular environment, so ChatGPT, with its limited understanding of anything but extensive knowledge of certain topics, should be great at it.

Unfortunately, as with everything else ChatGPT confidently claims to know, it’s often not quite right, and sometimes it’s outright wrong.

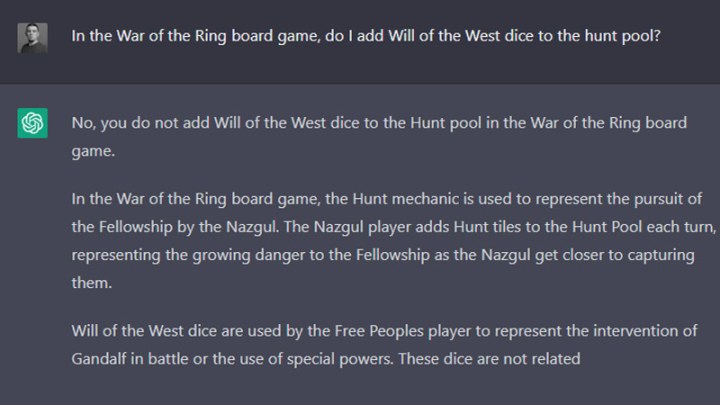

The initial answer is quite correct: you don’t add the dice to the hunt pool. But hunt tiles aren’t added every turn. Maybe it’d be better if I had ChatGPT tell me where in the rulebook I can find this information?

Hmm. Apparently, putting it on the spot makes it correct itself and get the rule more wrong than it did before. It thinks there might be multiple “Gandalfs” in the Fellowship, and that there are special “Will of the West” dice, rather than that being one of the possible results on the game’s action dice.

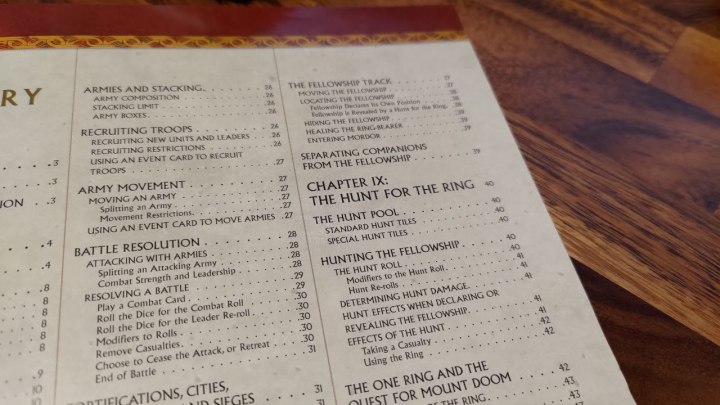

It then goes on to double down on that error by citing a page in the rulebook that doesn’t have anything to do with “The Hunt.” There’s a section called “The Hunt For the Ring,” but it’s not until Page 40.

But maybe this isn’t ChatGPT’s best game. Let’s give it one more chance to help with a game that’s somehow even bigger and more complicated than War of the Ring: Twilight Imperium.

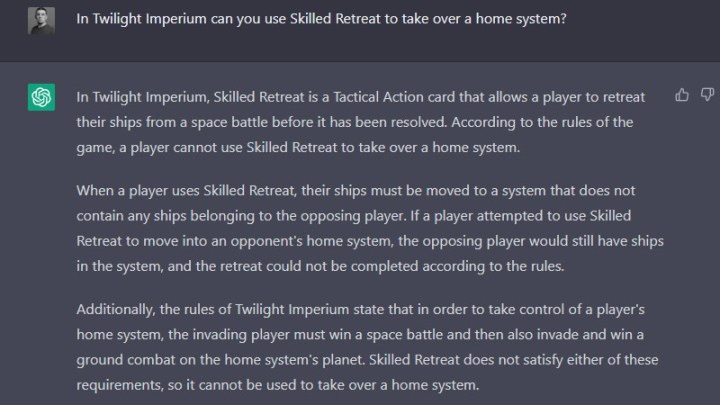

Here, ChatGPT does an admirable job, in that it does get the answer right, but for the wrong reasons. You can’t take a home system because you can’t invade it, not because you can’t move ships there.

If that seems pedantic, I get it. I don’t like telling my friend they can’t do something because they’ve misunderstood the very specifically worded text on the card they’ve played. These details matter in games, and if I’m going to get ChatGPT to do it for me, I need to be able to fully trust it.

It’s back to reading the rulebooks over and over

This was just a snippet of my time quizzing ChatGPT on how to play my favorite games. It knew how to launch ships in Battlestar Galactica, even if it wasn’t clear what part of your turn you do it in. It had a good idea of how to get cave tokens in Quest for El Dorado, but was very wrong on the cost you had to pay for them.

It did know Kingdom Death: Monster quite well, though, accurately reporting the stats of some of the monsters, and even making suggestions on how to modify those stats to my advantage.

It was a fun exercise seeing what ChatGPT knows about games, and it feels like one area where it could be invaluable in the future. It wouldn’t even need to know all games. I can imagine a scenario where game publishers could have their own AI to help teach you their games, and I wouldn’t be surprised if it could act as a stand-in player one day too.

And who knows, maybe GPT-4 in ChatGPT Plus has already solved this problem.

For now though, since I can’t trust it, it’s back to reading rulebooks on the toilet so that when one of the players has a question, I can answer it. Because ChatGPT can’t. Yet.