Native resolution is dead, or so the story goes. A string of PC games released this year, most recently Alan Wake 2, has come under fire for essentially requiring some form of upscaling to achieve decent performance. Understandably, there’s been some backlash from PC gamers, who feel that running games at native resolution is quickly becoming a thing of the past.

There’s some truth to that, but the idea that games will rely on half-baked upscalers to achieve reasonable performance instead of “optimization” is misguided at best — and downright inaccurate at worst. Tools like Nvidia’s Deep Learning Super Sampling (DLSS) and AMD’s FidelityFX Super Resolution (FSR) will continue to be a cornerstone of PC gaming, but here’s why they can’t replace native resolution entirely.

The outcry

Let’s start with why PC gamers have the impression that native resolution is dead. The most recent outcry came over Alan Wake 2 when the system requirements revealed that the game was built around having either DLSS or FSR turned on. That’s not a new scenario, either. The developers of Remnant 2 confirmed the game was designed around upscaling, and you’d be hard-pressed to find a AAA release in the last few years that didn’t pack in upscaling tech.

You can see an example of the reaction to Remnant 2 below that focuses on the “consequences” of tools like DLSS on the gaming industry. Another example, which I won’t post, is when a commenter on Reddit jokingly recommended forming a self-harm cult so “they will see the error of their ways and repent,” referring to developers requiring upscaling. Obviously a joke, but it’s a good illustration of the general reaction from the PC gaming community to games increasingly relying on upscaling to improve performance.

It’s not the upscaling alone that is the problem here. For as many great games as have been released this year, there has also been a string of disastrous PC ports. It’s understandable why PC gamers would look at that, factor in upscaling requirements, and conclude that features like DLSS and FSR are a crutch for poor optimization. It’s understandable, but that doesn’t make it true.

On top of that, the larger PC community has doubled down on this conversation, further reinforcing the idea that upscaling has been a crutch for poor optimization. A clip from The WAN Show podcast, for example, is titled “Native Res Gaming is Dead” and talks about Nvidia’s new DLSS 3.5 feature. And in Tom’s Hardware’s coverage of an interview Digital Foundry conducted with Nvidia, the headline reads: “Nvidia says native resolution gaming is out, DLSS is here to stay.” You can see a reaction to that article from a reader below.

As is usually the case, these conversations carry nuance, but for PC gamers casually browsing headlines with preformed ideas about game optimization, it’s easy to see why backlash against tools like DLSS and FSR has become the default position. And now that we’re seeing games that basically require upscaling to run, a slippery-slope sentiment is looming over the PC gaming community.

Once you look outside of the PC gaming community, the progression of graphics in gaming starts to become clearer, and it’s not a straight line.

The life cycle

PC gaming can be siloed off from the rest of the gaming world. Xbox and PlayStation compete so directly that players on one platform are usually aware of what’s happening on the other. On PC, you can stick your head in the sand and mostly ignore what’s going on with the consoles. They provide a lot of context for this conversation, though.

We’re only now reaching the point where developers have stopped releasing games on last-gen consoles. We’ve seen a handful of next-gen-only releases over the last few years, most of them exclusive to one console or the other. Now, most games are releasing exclusively on next-gen consoles, allowing developers to dial up the quality. Here’s a small sampling of games released on next-gen consoles and on PC this year that didn’t receive a last-gen version:

- Dead Space

- Forspoken

- Star Wars Jedi: Survivor

- Redfall

- Remnant 2

- Baldur’s Gate 3

- Immortals of Aveum

- Starfield

- Payday 3

- Cyberpunk 2077: Phantom Liberty

- Forza Motorsport

- Lords of the Fallen

- Alan Wake 2

With a couple of exceptions, all of the above games have released with some amount of controversy surrounding their PC performance. By contrast, games that also released on last-gen consoles like Lies of P, Atomic Heart, and Armored Core VI: Fires of Rubicon arrived without too many issues. New games releasing on next-gen consoles are able to push visual quality further, putting more strain on PC hardware.

If you wind the clock back to the previous console generation, the situation wasn’t much different. In Hitman (2016), which skipped the Xbox 360 and PlayStation 3, the GTX 970 couldn’t even reach 60 frames per second (fps) at 1080p, and that GPU was less than two years old at the time. The same was true of The Division, which also skipped the PS3 and Xbox 360.

Numbers from a PC Gamer review from around that time show that games also released on the PS3 and Xbox 360, like Middle-earth: Shadow of Mordor and Tomb Raider, clearly let the GTX 970 climb above 60 fps at 1080p. That’s not dissimilar from what we’re seeing now, but back then, you didn’t have tools like DLSS or FSR to improve performance on PC.

This laborious explanation is all to say: consoles are the lowest common denominator, and developers design their games around what the consoles are capable of. Graphical fidelity doesn’t follow a linear path where each year brings the same jump in how demanding games are. As the consoles age, developers will need to keep their visual ambitions in check to hit acceptable performance on them. If a game like Starfield is already releasing locked to 30 fps on consoles, how much more demanding can games released two or three years from now really get?

Console gamers will need to wait for the next Xbox and PlayStation to see a big jump in graphics quality, but PC graphics cards will keep chugging along in the meantime. Two or three years from now, when we’re a couple of GPU generations down the road, new games probably won’t feel as demanding on PC hardware as today’s releases do, and relying on upscaling to reach today’s level of visual quality will matter less.

There will likely be exceptions as games pack in new forms of rendering to justify the need for more powerful graphics cards, but the visual quality we see in most games today will plateau as consoles struggle to keep up, all while graphics cards push ahead. That’s been the cadence for at least the past two decades, and even amid conversations about GPUs getting more expensive and Moore’s Law dying out, that pace doesn’t seem to be slowing down.

Upscaling and AA are two sides of the same coin

I don’t want to leave the impression that features like DLSS and FSR are stopgap solutions as games start to leverage the power of the PS5 and Xbox Series X, though. That’s not the case. I suspect DLSS, FSR, and Intel’s XeSS will continue to be essential features in games, and maybe even requirements. But that doesn’t mean they’ll be on upscaling duty.

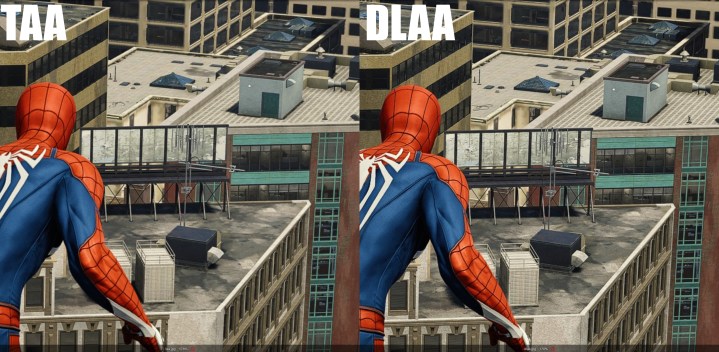

If you’re not familiar with how these tools work, upscaling is only part of what they do. DLSS, FSR, and XeSS also handle anti-aliasing. You might notice that some games, like Alan Wake 2, don’t even offer a separate anti-aliasing option. Instead, the game relies on the Temporal Anti-Aliasing (TAA) built into DLSS and FSR, which is why you can’t turn those features off, even if you’re running the game at native resolution.
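To make the anti-aliasing half of that a little more concrete, here is a minimal Python sketch of temporal accumulation, the basic idea behind TAA: shift the sample position by a sub-pixel amount each frame and blend each new frame into a running history, so a pixel sitting on an edge converges toward how much of it is actually covered instead of snapping between fully lit and fully dark. This is an illustrative toy under stated assumptions, not how DLSS or FSR are implemented. The blend weight `ALPHA`, the edge position, and the helper names are hypothetical, and real TAA also reprojects the history with motion vectors and rejects stale samples, which this single static pixel skips.

```python
# Toy model of temporal anti-aliasing (TAA) for ONE static pixel.
# Assumptions (hypothetical, not from DLSS/FSR): no camera motion, so no
# motion-vector reprojection or history rejection; a fixed blend weight.

ALPHA = 0.1  # weight of the newest frame; the history keeps 1 - ALPHA


def sample_pixel(jitter_x: float, edge_x: float = 0.3) -> float:
    """Shade one sample: white (1.0) left of an edge at edge_x, black (0.0)
    right of it. jitter_x is the sub-pixel sample position in [0, 1)."""
    return 1.0 if jitter_x < edge_x else 0.0


def halton(index: int, base: int = 2) -> float:
    """Halton low-discrepancy sequence, a common source of sub-pixel jitter."""
    result, f = 0.0, 1.0
    while index > 0:
        f /= base
        result += f * (index % base)
        index //= base
    return result


def accumulate(frames: int) -> float:
    """Blend `frames` jittered samples into a running history (an
    exponential moving average) and return the final pixel value."""
    history = sample_pixel(0.5)  # frame 0: a single centre sample, no AA
    for i in range(1, frames + 1):
        current = sample_pixel(halton(i))  # jittered sample for this frame
        # Exponential blend: the new frame contributes ALPHA, history the rest.
        history = ALPHA * current + (1 - ALPHA) * history
    return history


if __name__ == "__main__":
    print("single centre sample:", accumulate(0))
    print("after 64 jittered frames:", round(accumulate(64), 3))
```

With one centre sample, the pixel reads as fully black (0.0) even though 30% of it is covered by the bright side of the edge. After a few dozen jittered frames, the history hovers around 0.3. The trade-off is that the history needs several frames to build up, which is one reason TAA can blur or ghost in motion.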

It’s clear that Nvidia and AMD, at least, recognize that there will be games where you don’t need to use upscaling. That’s likely why we already have features like Deep Learning Anti-Aliasing (DLAA) and AMD’s FSR 3 Native AA mode, both of which ditch upscaling for better anti-aliasing. If there’s any major trend happening here, it’s that more games are using DLSS and FSR for anti-aliasing instead of their own solutions.

We also need to talk about frame generation, a key feature of DLSS 3 and FSR 3. Frame generation has made features like path tracing viable in Cyberpunk 2077 and Alan Wake 2, so the new goalpost for graphics cards will be running these features without any frame generation. Once again, I’m sure developers will find new ways to push frame generation on PC, but it’s not clear yet what those techniques will be.

Never simple

Trying to nail down trends in the world of PC gaming is never simple, with influences reaching far beyond whatever Nvidia and AMD are doing at the time. For anyone worried that we’re barreling toward a future where upscaling and frame generation will completely replace the need for graphics cards, that’s not much of an issue right now. That could change in the future, but not before these features are indistinguishable from a natively rendered game.

My short-term prediction is that the need for upscaling and frame generation will ease as the consoles age, with developers understandably limiting the scope of their games to support the most common platform. Eventually, we’ll be in this situation again when the next generation of consoles arrives. But making predictions about what that will look like right now is a fool’s errand, for better or worse.