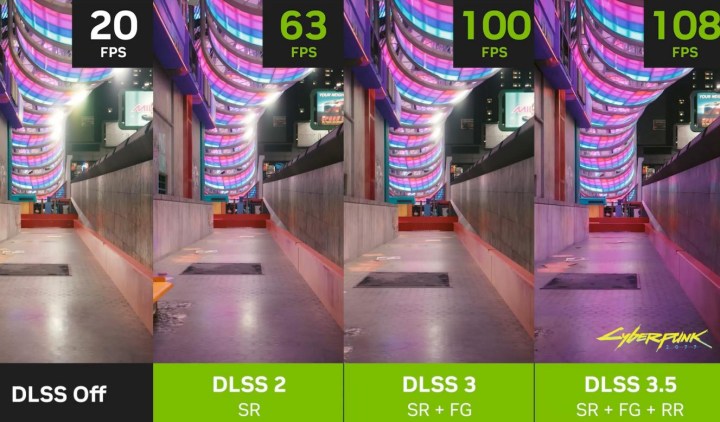

I won’t lie: Nvidia did a good job with Deep Learning Super Sampling (DLSS) 3, and there’s almost no way that this success didn’t contribute to sales. DLSS 3, with its ability to turn a midrange GPU into something much more capable, is pretty groundbreaking, and that’s a strong selling point if there ever was one.

What comes next, though? The RTX 40-series is almost at an end, and soon, there’ll be new GPUs for Nvidia to try and sell — potentially without the added incentive of gen-exclusive upscaling tech. DLSS 3 will be a tough act to follow, and if the rumors about its upcoming graphics cards turn out to be true, Nvidia may really need DLSS 4 to be a smash hit.

When the GPU barely matters

As we are on the cusp of a new GPU generation, it feels safe to look back at the RTX 40 series and judge it for what it was: not without flaws, but still huge.

Following in the footsteps of the RTX 30-series, there wasn’t much Nvidia had to do in order to sell new GPUs. The market had just been through a massive shortage, after all. The bar was set pretty low: consumers wanted GPUs that were affordable, did the job, and were available without too much hassle. Assuming those were the criteria for many gamers, Nvidia managed to deliver on two out of three. The RTX 40-series is easy to come by and some of the GPUs in this generation are truly impressive. That missing point, though, is where it gets trickier.

Nvidia launched the RTX 40 series with two GPUs that cost $1,600 and $1,200, respectively, and strangely enough, the pricier card offered better value for the money. The GPUs that followed weren’t all fantastic, with the performance-per-dollar factor falling short of what you’d expect to see in a new generation. Some cards, like the RTX 4060 Ti, ended up offering almost the same performance as their last-gen counterparts. That’s not what you want to see in a next-gen product.

But Nvidia had a major saving grace in this gen regardless of the specific card: DLSS 3.

We have plenty of examples of how transformative DLSS 3 can be for an entry-level to midrange graphics card. In the titles that support it, DLSS 3 offers performance that’s far above what you’d expect on some cards.

Let’s take the RTX 4070 Super, for example. When we attempted to run Cyberpunk 2077 at 4K with ray tracing enabled, the GPU understandably struggled, pulling a measly 19 frames per second (fps). Toggle DLSS 3 on and it’s suddenly running at a smooth 77 fps. To run that game comfortably at 4K without DLSS, you’d need a much pricier GPU, one that costs twice as much.

Nvidia’s got itself a good piece of tech with DLSS, and it was smart about it. It locked it behind a paywall to end all paywalls by making it available only on a single generation of GPUs. Although the previous iteration of DLSS is available to all owners of an RTX card, DLSS 3 is exclusive to the RTX 40 series. How’s that for an incentive to upgrade?

Given that it’s a far cry from DLSS 2, there’s no way DLSS 3 wasn’t enough to entice some buyers to go for the latest-gen card, or to go for Nvidia at all. Personally, when I weighed the differences between the RTX 4080 and the AMD RX 7900 XTX, DLSS 3 played a major part in my decision to stick with Nvidia.

Some RTX 40-series cards are excellent. Some are just vessels for DLSS 3, and thanks to the power of Nvidia’s frame generation, they still get sold. DLSS 3 made it so that the graphics card itself matters a lot less, and Nvidia might have to repeat that for the RTX 50-series.

Grim speculations

Although the RTX 50-series is rumored to be launching later this year, we still don’t know much about it that’s not based on speculation. Beyond the name of the generation, Blackwell, I’m not sure that Nvidia’s ever actually confirmed anything. So, we turn to leakers to fill us in with information that may or may not be true, and it’s not all great.

The most coveted leaks regarding the RTX 50-series are all about the specs, as it’s a little too early to hope for a glimpse at the pricing. To that end, the most recent leak comes from kopite7kimi, and Moore’s Law Is Dead pitched in with some speculation of his own.

The leaker revealed the rumored streaming multiprocessor (SM) count for each GPU, ranging from the high-end GB202 to the entry-level GB207, by showing the number of graphics processing clusters (GPCs) multiplied by texture processing clusters (TPCs). Doubling that number gives us the total number of SMs. This, in turn, tells us how many CUDA cores each GPU sports, and that’s a good indicator of how it will compare to its predecessors.

Calculations aside, what we’re potentially seeing in the RTX 50-series seems like a repeat of the RTX 40-series. The top GPU, GB202, should offer a massive uplift, with a reported 192 SMs (versus 144 SMs in the full AD102), or a 33% improvement in SMs. Moving down to the GB203, which has reportedly been cut down significantly and may appear in the RTX 5080, there’s only an improvement of 5% (84 SMs versus 80 in AD103).

The GB205 GPU is where it gets truly dicey. It’s not just that there’s no SM boost; there’s actually a downgrade of 17% compared to AD104 (there’s no GB204 in this generation), from 60 SMs down to 50. Next, GB206 is said to sport the exact same SM count as its predecessor, AD106, while GB207 once again features a 17% decrease in SMs compared to AD107: from 24 down to 20.

If this checks out, we’re looking at subtle improvements across the board, apart from the RTX 5090. Even then, it’s unclear how much of the chip the graphics card will actually utilize; the RTX 4090 didn’t harness the full power of the AD102 chip, so the final SM count might be smaller in the finished product.

GB202 12*8 512-bit GDDR7

GB203 7*6 256-bit GDDR7

GB205 5*5 192-bit GDDR7

GB206 3*6 128-bit GDDR7

GB207 2*5 128-bit GDDR6

kopite7kimi (@kopite7kimi), June 11, 2024
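For anyone who wants to check the math, here is a rough Python sketch of the calculation: GPCs times TPCs per GPC, doubled to get SMs, then compared with the previous-gen chip. It is only an illustration built on the leaked figures. The function names are made up for this example, the two-SMs-per-TPC factor is the "doubling" step from the leak, and the last-gen SM counts are full-die figures (144 for AD102 is what the 33% number implies; 80 for AD103 and 36 for AD106 are inferred from the 5% and "exact same" comparisons rather than quoted in the leak).

```python
# Assumption: each TPC holds 2 SMs, matching the "doubling" step in the leak.
SMS_PER_TPC = 2

# chip: (GPCs, TPCs per GPC, previous-gen chip, previous-gen full-die SMs)
# GPC/TPC layouts come from the leaked post; previous-gen counts are the
# full-die figures assumed for the comparisons in this article.
LEAKED = {
    "GB202": (12, 8, "AD102", 144),
    "GB203": (7, 6, "AD103", 80),
    "GB205": (5, 5, "AD104", 60),
    "GB206": (3, 6, "AD106", 36),
    "GB207": (2, 5, "AD107", 24),
}


def sm_count(gpcs, tpcs_per_gpc):
    """Total SMs for a chip: GPCs x TPCs per GPC x SMs per TPC."""
    return gpcs * tpcs_per_gpc * SMS_PER_TPC


def percent_change(new, old):
    """Relative change from old to new, in percent."""
    return (new - old) / old * 100


def compare(leaked=LEAKED):
    """Return (chip, SMs, previous chip, previous SMs, rounded % change) rows."""
    rows = []
    for chip, (gpcs, tpcs, prev_chip, prev_sms) in leaked.items():
        sms = sm_count(gpcs, tpcs)
        delta = round(percent_change(sms, prev_sms))
        rows.append((chip, sms, prev_chip, prev_sms, delta))
    return rows


if __name__ == "__main__":
    for chip, sms, prev_chip, prev_sms, delta in compare():
        print(f"{chip}: {sms} SMs vs {prev_sms} in {prev_chip} ({delta:+d}%)")
```

Keep in mind that these are full-chip numbers. The cards that actually ship may use cut-down versions of each die, so the real gen-on-gen gap could look different.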

Of course, there are more benefits to the new generation than just an increase in compute power. Moore’s Law Is Dead speculates that the GB203 chip (RTX 5080) should offer an up to 10% increase in clock speeds, better instructions per clock (IPC), and a great increase in bandwidth. The latter stems from the fact that Nvidia is said to be switching to faster GDDR7 memory, so that alone should help a lot.

These predictions are more optimistic. The YouTuber estimates a boost of 15-30% in every tier below the RTX 5090, and for the flagship, we might see an increase of as much as 60%. That’s still less than the jump from the RTX 3090 to the RTX 4090, though, and a 15% boost may not be enough to lure in new buyers. It depends on the price, and although Nvidia appears to have learned its lesson with the RTX 40 Super cards, I don’t expect the RTX 50-series to be cheap.

If the predictions come true and we get new GPUs with a not-so-significant upgrade in gaming performance, but with a price hike, Nvidia will need another selling point. It’ll need DLSS 4, and it needs to be outstanding.

What can we expect from DLSS 4?

Much like the RTX 50-series, the next generation of Nvidia’s AI upscaling technology is steeped in mystery. We know that it’s most likely going to happen, but will it be this year? What will it bring? We have to resort to speculation yet again, but this time, it’s fueled by Jensen Huang himself, the CEO of Nvidia.

In a post-Computex Q&A (shared by More Than Moore), Huang spoke about the use of AI in games. We all know that Nvidia loves AI, and with things like G-Assist on the horizon, we’re only going to see more AI in games going forward.

“In the future, we’ll even generate textures and objects, and the objects can be of lower quality and we can make them look better. We’ll also generate characters in the games — think of a group of six people, two may be real, and the others may be long-term use AIs,” Huang said.

The overwhelming use of AI continued throughout his response. He added: “The games will be made with AI, they’ll have AI inside, and you’ll even have the PC become AI using G-Assist. You can use the PC as an AI assistant to help you game.”

Huang’s response doesn’t mention DLSS, but it came as an answer to a question about both DLSS and Nvidia ACE. But will these features end up in DLSS 4? Will they only be fully realized in time for DLSS 5? Will they become something else entirely? It’s too early to say, but it’s clear that Nvidia hopes to make AI the very foundation of your gaming experience.

Generating in-game assets instead of just frames may not sound like something that could boost performance, but it very much can. This will shift some of the work from CUDA cores toward tensor cores, which are made to deal with AI and machine learning workloads. As a result, the GPU should have more resources available to simply focus on performance while tensor cores handle the AI side of things.

Asset generation is yet another step up from the frame generation we know from DLSS 3. It’s not just in-game assets that Nvidia hopes to generate but also NPCs, presumably powered by Nvidia ACE to bring them to life. If even half of those things make it to DLSS 4, Nvidia may have a real gem on its hands, and it’s already drawing closer. DLSS 3 is now, in fact, DLSS 3.7; version 3.5 brought us ray reconstruction, while 3.7 offered more minor upgrades.

Backward compatibility? Probably not

Let’s assume that DLSS 4 will launch soon, within the year (and that’s only based on the assumption that it’ll launch alongside the RTX 50 series, so don’t quote me on this). Let’s also assume that it’ll be outstanding. Will DLSS 4 be backward compatible with the RTX 40-series, though? That’s a stretch that I’m not willing to bet on. All hardware considerations aside, I find it hard to believe that Nvidia would pass up the opportunity to milk DLSS 4 for all of its potential once it makes it to market.

AMD takes a different approach from Nvidia. Its upscaling tech is available on GPUs from all vendors, although FSR 3.0 is suffering from very slow adoption. Meanwhile, DLSS 3 is slowly, but surely, making its way into more and more games. DLSS 4 may reset the counter and start with a blank slate, appearing in select titles before becoming more widespread.

One way or another, in order to impress the masses, Nvidia may need a bold move at this point — a 15% gen-on-gen boost in gaming won’t cut it when there are other options readily available. It should have some pretty tough competition from AMD’s RDNA 4 at the midrange, so cards like the RTX 5070 could use the extra help to justify their prices.

If DLSS 4 arrives on time, I won’t be surprised if it becomes an RTX 50-exclusive, working hard behind the scenes to turn “meh” GPUs into something quite brilliant. We’ll have to wait and see.