A blog post by hardware engineer Norm Jouppi reveals that the TPU began life as a “stealthy project” several years ago. The chips have now been running in Google’s data centers for more than a year, and they appear to offer distinct advantages over standard processors.

Google claims that its TPUs offer “better-optimized performance per watt for machine learning.” Jouppi goes on to state that this gain is “roughly equivalent to fast-forwarding technology about seven years into the future.”

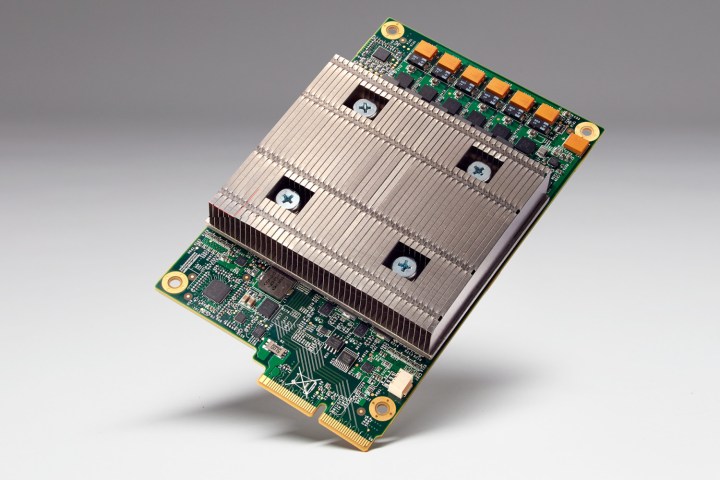

The TPU was built from the ground up with machine learning in mind, using a design that tolerates the reduced computational precision of its intended application. Because of that tolerance, the processor requires fewer transistors per operation, which allows it to perform more operations per second.
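To illustrate what reduced computational precision can look like in practice, here is a minimal Python sketch that rounds floating-point values to small integers before multiplying and accumulating them. The 8-bit width, the symmetric scaling scheme, and the function names `quantize` and `quantized_dot` are assumptions chosen for illustration; nothing here describes how the TPU is actually implemented.

```python
def quantize(values, num_bits=8):
    """Map floats to signed integers using one symmetric scale factor.

    The 8-bit default is an illustrative assumption, not a TPU detail.
    """
    qmax = 2 ** (num_bits - 1) - 1  # 127 when num_bits is 8
    max_abs = max(abs(v) for v in values) or 1.0  # avoid a zero scale
    scale = max_abs / qmax
    return [round(v / scale) for v in values], scale


def quantized_dot(a, b, num_bits=8):
    """Approximate a dot product using integer multiply-accumulate."""
    qa, scale_a = quantize(a, num_bits)
    qb, scale_b = quantize(b, num_bits)
    acc = sum(x * y for x, y in zip(qa, qb))  # integer arithmetic only
    return acc * scale_a * scale_b  # convert back to floating point once


# Hypothetical example values, not taken from any real model.
weights = [0.12, -0.53, 0.88, 0.07]
inputs = [1.5, 0.25, -0.75, 2.0]

exact = sum(w * x for w, x in zip(weights, inputs))
approx = quantized_dot(weights, inputs)
print(f"exact={exact:.4f} approx={approx:.4f} error={abs(exact - approx):.4f}")
```

The integer result stays close to the floating-point one, which is the kind of small accuracy loss many machine learning workloads can absorb in exchange for simpler arithmetic.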

Google claims that it took only 22 days from the first test of TPU hardware to have the processors running applications at speed in its data centers. The technology has since been used to help improve several of the company’s most popular services.

TPUs have apparently helped Street View improve the accuracy of Google’s maps and navigation aids, and have improved the relevance of search results through their use in the RankBrain project. TPUs also gave the AlphaGo computer program the processing power it needed to defeat Go world champion Lee Sedol earlier this year.

Google intends to become an industry leader in machine learning, and wants to share its capabilities with its customers. To that end, the company open-sourced TensorFlow late last year, but it remains to be seen whether the TPU hardware will stay an internal innovation.