That’s the idea being proposed by South African digital security company Thinkst. It wants to add a honeypot to enterprise networks that looks like too valuable a target for hackers to pass up. When they attempt to read its contents or bypass its lax security, network admins, and potentially even the authorities, can be alerted.

Of course, the honeypot is not a brand new technique. The problem with a traditional one, though, is that it requires regular management and a lot of technical know-how to keep it consistently tempting to hackers without looking too good to be true. Thinkst’s contribution is a piece of hardware that can sit on a network and reliably report intrusions without much maintenance.

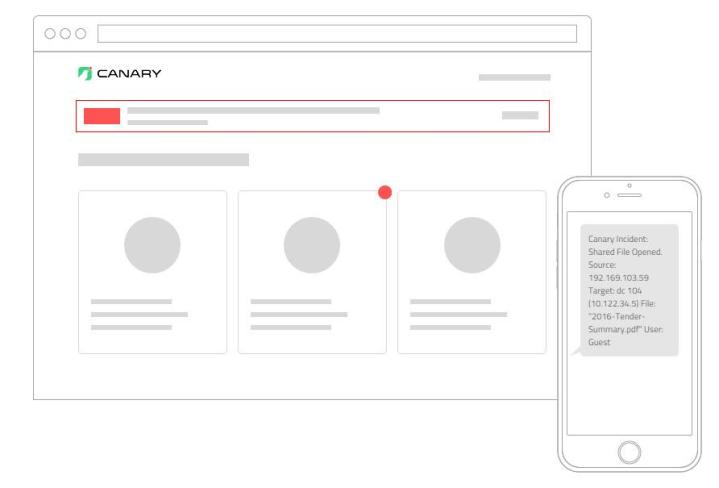

The piece of kit is called Canary, after the poor birds that were once taken into coal mines as an early warning of toxic gas. Setup takes the press of a single button, after which an admin can connect to the device over Bluetooth to adjust how it appears on the network, choosing from several OS options. They can also add tempting-looking files whose names suggest they hold valuable data.

If any are ever accessed, an alert is sent out.
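
To make the principle concrete, here is a minimal Python sketch of a decoy service that treats every connection as suspicious. It is not Thinkst's implementation, and the names `start_decoy` and `alert` are hypothetical, chosen only for illustration. It assumes nothing legitimate on the network ever connects to the decoy's port.

```python
import socket
import threading
from datetime import datetime, timezone


def start_decoy(alert, host="127.0.0.1", port=0):
    """Listen on a port that no legitimate user should ever touch.

    Every incoming connection is reported to `alert` (a hypothetical
    callback) as a dict. Returns the bound port and the listener thread.
    """
    server = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    server.setsockopt(socket.SOL_SOCKET, socket.SO_REUSEADDR, 1)
    server.bind((host, port))  # port 0 lets the OS pick a free port
    server.listen()
    bound_port = server.getsockname()[1]

    def loop():
        with server:
            while True:
                try:
                    conn, (peer_ip, peer_port) = server.accept()
                except OSError:
                    break  # socket was closed, stop listening
                with conn:
                    # No real service lives here, so any visitor is suspect.
                    alert({
                        "time": datetime.now(timezone.utc).isoformat(),
                        "source_ip": peer_ip,
                        "source_port": peer_port,
                    })

    thread = threading.Thread(target=loop, daemon=True)
    thread.start()
    return bound_port, thread
```

Passing `print` as the `alert` callback is enough to try it out. A real deployment would need to notify an admin instead, and would need to imitate a believable service rather than simply closing the connection.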

Installation of two honeypots, along with a year of management from Thinkst, costs $5,000. While unlikely to be perfect, they sound like a solid way to augment other security measures.