There didn’t seem to be anything strange about the new teaching assistant, Jill Watson, who messaged students about assignments and due dates in professor Ashok Goel’s artificial intelligence class at the Georgia Institute of Technology. Her responses were brief but informative, and it wasn’t until the semester ended that the students learned Jill wasn’t actually a “she” at all, let alone a human being. Jill was a chatbot, built by Goel to help lighten the load on his eight other human TAs.

“We thought that if an A.I. TA would automatically answer routine questions that typically have crisp answers, then the (human) teaching staff could engage the students on the more open-ended questions,” Goel told Digital Trends. “It is only later that we became motivated by the goal of building human-like A.I. TAs so that the students cannot easily tell the difference between human and A.I. TAs. Now we are interested in building A.I. TAs that enhance student engagement, retention, performance, and learning.”

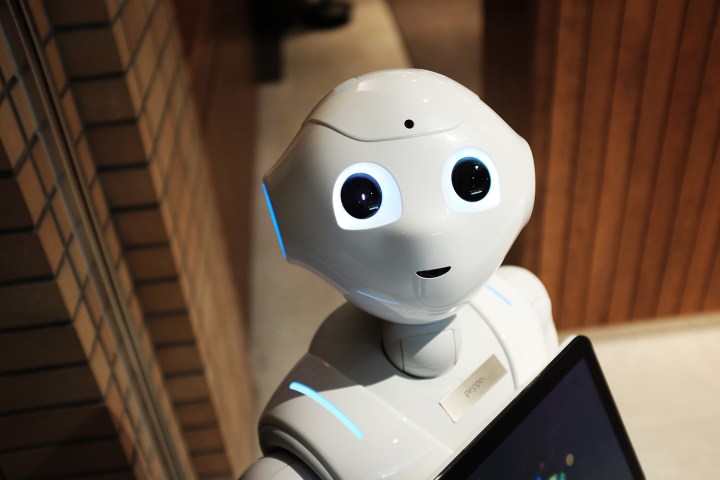


A.I. is quickly integrating into every aspect of our lives and, like the students in Goel’s class, we’re not always aware when we’re engaging with it. But A.I.’s influence on education will be clear in the coming years as these systems ease into classrooms everywhere.

Like computers and the internet, A.I. will alter both the face and function — the what, why, and how — of education. Many students will be taught by bots instead of teachers. Intelligent systems will advise, tutor, and grade assignments. Meanwhile, courses themselves will fundamentally change, as educators prepare students for a job market in which millions of roles have been automated by machines.

A.I.-aided education might sound like something from a far-off future, but it’s already a topic of interest for academics and businesses alike. A.I.-powered educational toys have flooded the market over the past few years, many of them via crowdfunding platforms like Kickstarter and Indiegogo, where they often exceed their financial goals.

Professor Einstein, for example, teaches kids about science via goofy facial expressions and a robotic German lilt. The robot was developed by Hanson Robotics and supported by IBM’s Watson, and its Kickstarter campaign raised nearly $113,000. Meanwhile, the startup Elemental Path offers CogniToys, an array of educational smart dinosaurs designed to play games, hold conversations, and help kids learn to spell. Its Kickstarter campaign raked in a whopping $275,000 from backers in 2015.

“Big things are in store for A.I.-powered educational toys,” Danny Friedman, director of curriculum and experience at Elemental Path, told Digital Trends. “I foresee them in every classroom, as a supplemental learning tool that is not only integrated in a teacher’s curriculum but connected to a student’s personalized data, such as preferred learning methods and areas of interest. I also foresee them in every home, not only to help answer questions, but to help instill pro-social interactions. A.I.-powered toys will be as ubiquitous in households as the cell phone.”


A student’s engagement with A.I. will only increase as they progress through the school system. Educational A.I. toys will give way to A.I. tutors whose job will be to identify a student’s weak subjects and provide additional training in them.

Teachers will be freed from the humdrum task of grading papers in subjects from science to social studies. Systems like Wolfram Alpha can already answer complex math equations and queries in language that’s informative and accessible. Integrating an engine like this into an automated grading system, particularly for quantitative problems, would be relatively straightforward. Educators will rejoice as they’re empowered to focus on the more personal aspects of education.

“When it comes to A.I. in teaching and learning, many of the more routine academic tasks (and least rewarding for lecturers), such as grading assignments, can be automated,” write researchers Mark Dodgson, director of the Technology and Innovation Management Centre, University of Queensland Business School, and David Gann, Imperial College’s vice president, in a report on A.I. and higher education for the World Economic Forum.

Once a student reaches high school, she may well enter freshman year alongside what the A.I. education experts behind a Pearson report call a “lifelong learning companion.” By then, this digital companion would have spent nine-plus years accompanying her in class, helping her with homework, and learning along with her.

The learning partner — which might manifest as a robotic T-Rex or, more likely, something subtler, like a smartphone application — would even occasionally act as a pupil itself, allowing the human student to teach it what she’s learned and help reinforce her knowledge.

“This companion would be accessible to the student throughout [his or her education],” Wayne Holmes, co-author of the Pearson report and lecturer at The Open University’s Institute of Educational Technology, told Digital Trends. “At any one time, it might suggest work they can be doing or support them with work they’re finding difficult. It will also be providing information to the teacher so the teacher can engage … The idea is that over time the learning companion can build this profile of the individual that can be used to support them moving forward.”

These digital learning partners are meant to support teachers rather than replace them, Holmes insisted. Indeed, he expects educators will have A.I. assistants of their own to make their jobs easier and more effective.


“Teachers would have their own companion, their own A.I. teaching assistant,” he said, adding that a student’s companion and a teacher’s A.I. assistant “would be communicating so the teaching assistant would know what’s going on with the individual student’s profile and would be able to interact with that.”

By the time a student enrolls at a university, she’ll be the product of two “minds,” if you will: the one contained in her brain, and the A.I. that she’s developed as a learning partner. And at the university itself, A.I. will be everywhere: as TAs in the classroom, support in the enrollment office, and even as academic counselors. This year, the Technical University of Berlin employed a chatbot named Alex to help students plan their course calendar.

“I think the advantages of the chatbot system are the completeness and availability of the information,” said Thilo Michael, currently a Ph.D. student at TU Berlin who designed the system as part of his master’s studies. “The chatbot tries to translate the questions of students into searchable queries, just like a human counselor would, but it has all the information available at once. Human counselors would need to search in different online systems and would maybe even provide an incomplete set of information.”

Michael emphasized that the system is not designed to replace humans. “The system is able to answer pragmatic questions about the courses and majors available, but is not able to answer questions on a broader level,” he said. “I think the system could very well be used in combination with counseling to have the best of both worlds.”

Outside of conventional learning institutions, A.I. has the potential to make education accessible for more people. In developing regions, where teachers are few and far between, a robust A.I. system may be used to teach students with minimal or no engagement from a human educator.

The XPrize Foundation, which designs moonshot competitions to encourage “radical breakthroughs for the benefit of humanity,” is currently offering $10 million to the team that develops the best basic learning application capable of replacing a teacher for children with access to a tablet but no human educator. In June, XPrize chose eleven semi-finalists from almost 200 teams that entered the Global Learning competition. It’s likely that the winning system will be supported by A.I. in order to provide more personalized and dynamic lessons.

Still, there is no shortage of ethical issues to address before fully implementing A.I. in education, something Holmes and his colleagues are quick to recognize. For one, educators will have to consider the privacy and confidentiality of the data collected, especially when this data pertains to children. Who will own the information, for example? And who will have access to it?

“There isn’t an obvious answer to this problem but it’s a problem that must be taken into account,” Holmes said.

And before raising a generation with A.I. teaching companions, psychologists should have some understanding of their implications for child development. Will students become dependent on the technology? And what happens if the system malfunctions or fails? These are difficult questions, but they are worth answering for the sake of our greatest resource: the minds of the next generation.