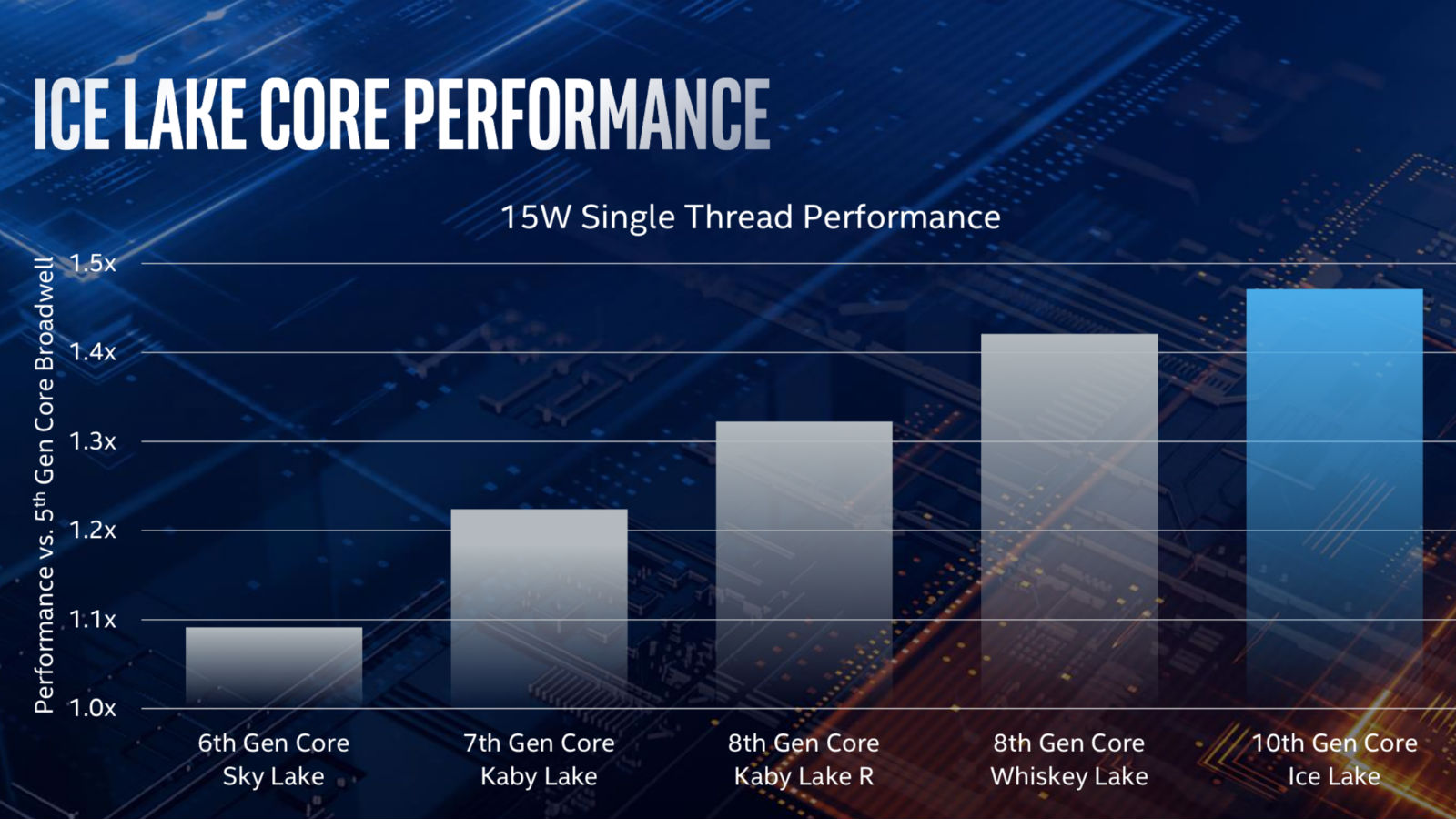

Earlier this year, Intel finally announced its first 10nm (nanometer) architecture Ice Lake chips, a product many, many years in the making. The company revealed very little about the actual processors at the time, especially in terms of what kind of performance we could expect from them.

But at Computex 2019, Intel unveiled the details we’ve all been waiting for: specs on the three new Ice Lake mobile processors, and information on just how the ambitious performance claims were achieved. According to Intel, the use of A.I. in conjunction with Ice Lake’s new Sunny Cove processing core gives its 10th-gen mobile processors a performance improvement of up to 2.5 times over its previous 8th-gen Whiskey Lake processors.

Welcome to Ice Lake

Intel’s 10th-generation processor family includes Core i3, Core i5, and Core i7 models in either 9-watt, 15-watt, or 28-watt configurations. The processors scale up to four cores and eight threads and up to 4.1GHz. If that doesn’t sound super specific, it’s because Intel shied away from the details. Things like individual processor names and clock speeds were left out of the presentation, which instead focused on some of the technology behind other elements of Ice Lake.

What we do know is that the core count hasn’t changed from the previous generation. The Core i3 is still just a dual-core processor, while both the Core i5 and Core i7 are quad-core. We also know that the chips span both fan and fanless designs, meaning we’re getting both Y-series and U-series versions of these chips. Regardless, Intel claims it is making significant jumps in single-thread performance in this generation.

“We’ve really touched on every single IP, included new platform integrations as well as new transistor technologies,” Intel Fellow and Chief Client Architect Becky Loop said. “We integrated and optimized for our 10nm process.”

So, how does this all work? Well, at the heart of Intel’s 10th-gen chip are two major components: a Sunny Cove core built on the 10nm process, and a platform controller hub (PCH) that uses a more mature 14nm design. Sunny Cove represents a big design step for Intel, and the company has made important changes to the main processing core to bring more bandwidth to the system, integrate artificial intelligence, build in support for Wi-Fi 6, add machine learning capabilities, and improve graphics performance on 10th-gen chips. Describing Ice Lake as the next foundational architecture for Intel, Loop said that 10th-gen is the next step forward “in terms of a transistor standpoint, a CPU architecture standpoint, as well as a platform” for the company.

Some of the changes detailed include a new memory controller that not only delivers more bandwidth to Intel’s redesigned space-saving Thunderbolt routing system, but also allows the chip to dynamically adjust workloads by leaning on A.I. capabilities.

“We integrated a 4×32 LPDDR4 3733 dual-rank system that supports up to 32GB,” Loop explained. “That gives us 50GB to 60GB of bandwidth that actually feeds your display, graphics, media, multi-threaded performance for your cores. On top of just providing the LPDDR4, we also have gearing mode to handle autonomously in hardware the ability to dynamically change the frequency of memory on the system. So based on the workload, we can optimize the power and performance on the system to give you better performance and responsiveness.” Intel calls this gearing DL Boost.
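As a rough check on the bandwidth figure Loop quotes, the peak theoretical rate of a memory interface is its total bus width multiplied by its transfer rate. The sketch below assumes that “4×32” means four 32-bit channels and that “3733” means 3,733 mega-transfers per second; neither reading is spelled out in the presentation, and the function name is purely illustrative.

```python
def peak_bandwidth_gb_per_s(channels: int, bits_per_channel: int,
                            mega_transfers_per_s: float) -> float:
    """Peak theoretical memory bandwidth in GB/s (1 GB = 1e9 bytes)."""
    # Total bus width in bytes moved on every transfer.
    bytes_per_transfer = channels * bits_per_channel / 8
    return bytes_per_transfer * mega_transfers_per_s * 1e6 / 1e9


if __name__ == "__main__":
    # Assumed reading of "4x32 LPDDR4 3733": four 32-bit channels at 3733 MT/s.
    print(f"{peak_bandwidth_gb_per_s(4, 32, 3733):.1f} GB/s")
```

Under those assumptions the result lands just under 60GB per second, at the top of the range Loop quotes. Sustained real-world throughput would be lower than this theoretical peak.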

Sunny Cove also doubles the amount of L1 and L2 data cache from the 32KB and 256KB, respectively, found on prior Haswell and Skylake designs. “We have expanded the backend to be wider,” Director of CPU Computing Architecture Ronak Singhal said, pointing to more execution units with smarter algorithms. “We’ve overhauled the core microarchitecture so you get performance out of the box.”

Intel also added new capabilities, including instruction sets that accelerate important algorithms to support crypto and security, like AES, RSA, and more. “We offer new vector capabilities for people looking to do low-level bit manipulation, which we see in a wider and wider range of algorithms,” he said.

The first notebooks with 10th-gen chips will ship as early as next month, and Intel expects more than 30 laptop designs with this upgraded CPU by the holidays. There are, obviously, concerns about Intel’s supply, though the company sounds confident in its ability to deliver these at the volume it needs.

Gen 11 Iris Plus graphics for 1080p gaming

While laptops today are great at handling productivity tasks, they often struggle in the graphics department. This compromise forces people to choose between a thin and light system that can do most things or a heavier workstation or gaming laptop for added power. Intel’s executives didn’t like this tradeoff, and the company wanted to make improvements to allow users to edit videos, make 3D renderings, or even game on the go.

“It really does represent a fundamental inflection point of an improved trajectory of gen-over-gen performance that we’re after going forward,” said Intel VP of Intel Architecture, Graphics, and Software Lisa Pearce. “As we approached Gen 11, it was important for us that it was a major leap forward in the journey to a full scalable GPU architecture. We wanted to make sure we’re absolutely driving power efficiency in all aspects of the implementation. And getting to the point of over a teraflops of compute performance with FP32 and double that for half precision.”

With Gen 11, there are two independent media pipelines. “These pipelines can be used for concurrent usage, transcode and playback, or can be ganged together for higher performance in one stream,” Pearce explained. There are also two fixed-function HEVC encoders, with a 30% improvement gen-over-gen. “With that we added support for 4:2:2 high end video production quality. 4:4:4, you have a productivity app with fine lines or text encoding, and then also the traditional 4:2:0 format.” Creators taking advantage of HEVC encode will experience twice the speeds with higher quality in video software.
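Chroma formats such as 4:4:4 and 4:2:0 differ in how much color information is kept for each pixel, which is why the former suits sharp text and the latter suits ordinary video. The sketch below compares uncompressed frame sizes, including the standard intermediate 4:2:2 format. It assumes 8-bit samples and a 1080p frame, and the names are illustrative rather than anything from Intel’s media stack.

```python
# Chroma samples kept per 2x2 block of pixels, for each of the two
# chroma planes (Cb and Cr).
CHROMA_SAMPLES_PER_2X2 = {
    "4:4:4": 4,  # full-resolution chroma
    "4:2:2": 2,  # chroma halved horizontally
    "4:2:0": 1,  # chroma halved horizontally and vertically
}


def raw_frame_bytes(width: int, height: int, fmt: str, bit_depth: int = 8) -> int:
    """Size of one uncompressed frame: a luma plane plus two chroma planes."""
    luma_samples = width * height
    blocks = luma_samples // 4  # number of 2x2 pixel blocks
    chroma_samples = 2 * blocks * CHROMA_SAMPLES_PER_2X2[fmt]
    return (luma_samples + chroma_samples) * bit_depth // 8


if __name__ == "__main__":
    for fmt in CHROMA_SAMPLES_PER_2X2:
        print(fmt, raw_frame_bytes(1920, 1080, fmt), "bytes")
```

Before any compression is applied, a 4:2:0 frame carries half the data of a 4:4:4 frame, with 4:2:2 sitting in between.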

For displays, Intel focused on high resolution, screen quality, and high refresh rates, qualities that will be appealing to creators and gamers alike. Three simultaneous 4K displays are supported on Gen 11 graphics, or two 5K panels, or one 8K monitor. DP1.4 and HDMI 2.0b are also supported. To make HDR efficient, HDR capability is integrated in the hardware pipeline, and both HDR10 and Dolby Vision are supported, Pearce said. The 3D pipeline has also been tweaked to drive more performance and efficiency.

Intel promises that day-zero graphics drivers will be ready at launch for most AAA games, and the software tools will allow game developers to take advantage of dynamic variable rate shading through the GPU pipeline. In an unfinished game demo, the variable rate shading allowed one game developer to realistically, and naturally, render a forest scene in a game that looked like it could have been pulled straight from a video feed. Hybrid tile-based rendering is also supported on Gen 11.

Compared to Intel’s previous integrated graphics, Gen 11 graphics delivers up to 1.8 times the frames per second (FPS). Intel is also adding support for VESA Adaptive Sync, so gamers will experience no stuttering, ghosting, or screen tearing, she said. Intel claims that popular games such as CS:GO, Rainbow Six Siege, Rocket League, and Fortnite will be playable on mobile at 1080p. On prior generations, many of today’s popular 1080p games were unplayable, as framerates dipped below 30 FPS, but with Gen 11 graphics, these games will see up to 40% improvements in framerates.

For example, compared to an 8th-gen processor with UHD 640 graphics, Gen 11 Iris Plus graphics ran CS:GO on medium settings at approximately 80 FPS versus approximately 50 FPS. Pearce said that Gen 11 delivered up to a 1.8x improvement over Whiskey Lake UHD graphics. The average improvement is 1.5x to 1.6x, depending on the configuration. Even Fortnite performed above 40 FPS, compared to below 30 FPS on UHD 640 graphics.
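To see how the approximate frame rates quoted here relate to the multipliers, the short sketch below converts them into a speedup ratio and a per-frame time. The helper names are illustrative, and the inputs are only the rounded figures from the presentation.

```python
def speedup(new_fps: float, old_fps: float) -> float:
    """How many times faster the new frame rate is than the old one."""
    return new_fps / old_fps


def frame_time_ms(fps: float) -> float:
    """Average time spent on each frame, in milliseconds."""
    return 1000.0 / fps


if __name__ == "__main__":
    # Approximate figures quoted for the 80 FPS versus 50 FPS comparison.
    print(f"speedup: {speedup(80, 50):.1f}x")
    print(f"frame time: {frame_time_ms(50):.1f} ms -> {frame_time_ms(80):.1f} ms")
```

On those rounded numbers, the example works out to about 1.6x, in line with the average improvement Intel cites rather than the 1.8x best case.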

And by leveraging DL Boost, Intel’s Adaptix technology can recognize when you’re playing a game to optimize system performance. Advanced users can create different tuning profiles, and Intel’s Graphics Command Center delivers single-click graphics optimization that’s supported on 44 titles currently, including Titanfall 2, Heroes of the Storm, Portal 2, Apex Legends, and more.

Unfortunately, Intel didn’t provide specifics as to which processors would include the basic Intel UHD graphics and which would get the Iris Plus treatment.

Using A.I. for increased battery life

In a series of demos, Intel showed how A.I. can be leveraged not only to get work done faster, but also to improve battery life. This is similar to what smartphone manufacturer Huawei has been doing with the Neural Processing Unit (NPU) on its Kirin system-on-chip (SoC) design.

“There is a new capability within Ice Lake that allows us to use machine learning to actively optimize the performance and power of our processors,” Loop said. “By using machine learning algorithms, we can actually optimize within PL2 and PL4 the thermal envelopes, the behavior of our overall system so you can have the best performance and best responsiveness based on the workload that you’re doing right now versus it being optimized in the lab beforehand. So every user is going to see the best performance of their system based on how they’re using it.”

Similar to how some Android phones can keep the screen brightly lit if they sense your face and attention on the display, Walker’s team stated that A.I. can be used to detect if you’re looking at the display. If you are, the system can give you full power. If the system senses you’re not there, it can dim the screen.

A.I. can be used to remove unwanted background noise for clearer audio in Skype calls. Though the capability is available today, the use of A.I. will result in less stress on the processor, meaning users will get to experience longer battery life. A.I. can also be used to accelerate creative workflows, like removing blur in a photo resulting from motion or unsteady hands when using a camera, surfacing and annotating large numbers of photos in an image library, and applying filters (like a Van Gogh effect) to videos by utilizing the capabilities of Gen 11 graphics in PowerDirector. In total, Intel envisions three main use cases right now for A.I.-based DL Boost.

“The CPU allows you to have low latency for things like inference tasks,” Singhal said. “This is the beginning of what you should expect to be a journey where we’re going to improve A.I. over the course of multiple generations in each of these different engines.”

The second mode is to do high-throughput A.I. workloads on the graphics engine, while the third, a low-power accelerator mode, is also referred to as the Gaussian Neural Accelerator. This allows A.I. to run on the SoC in a very low power state. In one demo, Intel showed how the graphics core can be turned off during a meeting for long-running tasks, like transcriptions of audio in the background.

“For different use cases, for different ways that people want to take advantage of A.I. on a PC, we offer different solutions instead of trying to have one solution try solve every problem,” Singhal said.
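One way to picture the three-engine approach is as a simple routing decision: latency-sensitive inference goes to the CPU, bulk work to the graphics engine, and long-running background tasks to the low-power accelerator. The sketch below is purely hypothetical. The `Engine`, `Workload`, and `pick_engine` names are invented for illustration and do not correspond to any Intel API or to how Intel’s software actually schedules A.I. workloads.

```python
from dataclasses import dataclass
from enum import Enum


class Engine(Enum):
    CPU = "CPU"
    GRAPHICS = "graphics engine"
    LOW_POWER = "low-power accelerator"


@dataclass
class Workload:
    name: str
    latency_sensitive: bool = False
    long_running_background: bool = False


def pick_engine(workload: Workload) -> Engine:
    """Hypothetical policy mirroring the three use cases in the article."""
    if workload.long_running_background:
        return Engine.LOW_POWER  # always-on tasks at very low power
    if workload.latency_sensitive:
        return Engine.CPU  # low-latency inference
    return Engine.GRAPHICS  # everything else: high-throughput work


if __name__ == "__main__":
    jobs = [
        Workload("meeting transcription", long_running_background=True),
        Workload("interactive inference", latency_sensitive=True),
        Workload("video style filter"),
    ]
    for job in jobs:
        print(f"{job.name} -> {pick_engine(job).value}")
```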

Wireless faster than wired Gigabit Ethernet

Whereas rival Qualcomm is promoting mobile broadband connectivity as a differentiating factor for its ARM-based Always Connected PC platform with Microsoft, Intel’s dead 5G modem business means that the company is instead leaning more heavily on Wi-Fi 6 for connectivity.

“We think that Wi-Fi 6 is going to be a big improvement and a big step up for the experience in the PC,” said Project Athena Chief Architect Kris Fleming. “Wi-Fi 6 is the biggest upgrade for Wi-Fi in a decade.”

By allowing devices to simultaneously communicate by leveraging orthogonal frequency division multiple access, Wi-Fi 6 allows up to a 75% reduction in latency, Fleming said, with latency going under 10ms. The standard also delivers up to five times the capacity of previous generations and is best able to take advantage of higher speed networks today — up to 40% faster on a 2×2 QAM solution.

“And the reason why Wi-Fi 6 is so important is if you have a very poor Wi-Fi device, it really affects your overall performance,” Fleming said. “Even if you have the best CPU platform, the Wi-Fi network has been really slowed down and reduces your applications, leaving you with very poor user experience.” By improving Wi-Fi, and with it the network speeds that are becoming increasingly important for connected apps and cloud storage, Intel hopes users will see better performance overall on their systems.

To achieve this gain in performance, Intel is innovating on top of the Wi-Fi 6 standard, and the solution is called Intel Wi-Fi 6 Gig Plus. “And the reason why we wanted to describe it this way is that we’re providing two additional features that are really optional features that we’re making standard as part of our Wi-Fi 6 implementations,” Fleming said.

In Ice Lake, that means Wi-Fi 6 is integrated in the PCH. This leads to a significant size reduction as well — up to 70% reduction compared to Intel’s existing M.2 Wi-Fi module today.

A key differentiation here is that while base Wi-Fi 6 only supports 80MHz channels, Intel’s Gig Plus standard doubles that to 160MHz channels. Fleming claims that this solution delivers three times the performance of previous Wi-Fi and gives you gigabit speeds, which are “almost twice as fast as wired gigabit Ethernet network today.” Another change is that Wi-Fi 6 will also reduce interference, giving you the best possible connection depending on your network, thanks to overlapping basic service set, or OBSS, which is important for office environments with multiple access points.
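The effect of doubling the channel width can be sketched with the usual formula for peak Wi-Fi 6 (802.11ax) link rate. The subcarrier counts, symbol timing, and 1024-QAM modulation at a 5/6 coding rate used below come from the 802.11ax standard rather than from Intel’s presentation, and the two spatial streams are an assumption matching the 2×2 setup mentioned earlier. The function name is illustrative.

```python
# Data subcarriers per channel width in 802.11ax (single user, full bandwidth).
DATA_SUBCARRIERS = {20: 234, 40: 468, 80: 980, 160: 1960}


def peak_phy_rate_mbps(channel_mhz: int, spatial_streams: int = 2,
                       bits_per_subcarrier: int = 10,  # 1024-QAM
                       coding_rate: float = 5 / 6,
                       guard_interval_us: float = 0.8) -> float:
    """Peak 802.11ax PHY rate in Mbit/s (link rate, not application throughput)."""
    symbol_us = 12.8 + guard_interval_us  # OFDM symbol plus guard interval
    bits_per_symbol = (DATA_SUBCARRIERS[channel_mhz] * bits_per_subcarrier
                       * coding_rate * spatial_streams)
    return bits_per_symbol / symbol_us  # bits per microsecond equals Mbit/s


if __name__ == "__main__":
    for width in (80, 160):
        print(f"{width}MHz: {peak_phy_rate_mbps(width):.0f} Mbit/s")
```

With everything else held constant, the 160MHz channel carries twice the subcarriers and therefore twice the peak link rate of an 80MHz channel. Actual throughput is lower once protocol overhead and real-world signal conditions are taken into account.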

Intel is still working on branding for its Wi-Fi 6 Gig Plus standard for commercial products, but all consumer routers that support Intel’s additional feature will come with the company’s Gig Plus moniker.

The architecture for Thunderbolt support on Ice Lake has also been redesigned to allow manufacturers to easily accommodate ports on both sides of the system, similar to Apple’s MacBook Pro implementation. In addition, simplifying the Thunderbolt design will also reduce power consumption by 300 milliwatts per port when the port is fully utilized.

“We have two PCIe controllers connected to each CIO router,” Eldis explained. “So basically, we double the bandwidth that’s going to the ports.”

Intel also contributed Thunderbolt 3 technology to the development of USB4, Eldis said. Part of the USB4 specification requires the port to address USB and Thunderbolt tunneling, and although Intel has this supported in silicon, the company did not have the sink side to test it. As a result, a few bugs could show up, and that’s the reason why Intel is hesitant to claim that 10th-gen is USB4 compliant.