Intel has just announced an interesting update to its upcoming Meteor Lake chips: The integrated graphics are about to receive an unprecedented boost that should let them rival low-end discrete GPUs. Now equipped with hardware-accelerated ray tracing, these chips have a good chance of becoming the best processors for anyone who isn’t buying a discrete graphics card. Intel is claiming large performance gains, and it’s not just gamers who stand to benefit.

The information comes from a Graphics Deep Dive presentation hosted by Intel Fellow Tom Petersen, who is well known in the GPU market for his work on Intel Arc graphics cards. This time, instead of discrete graphics, Petersen focused on the integrated GPU (iGPU) inside the upcoming Meteor Lake chip. He explained the improvements at an architectural level and introduced the new graphics architecture as Intel Xe-LPG.

For the first time, Intel is adding hardware ray tracing to its integrated graphics, and Petersen claims the results will be impressive. The chip will come with eight hardware RT units, enabling it to perform ray tracing in real time. To show off the tech, Petersen played a demo of the new UL Solar Bay benchmark, locked to 60 frames per second (fps). This was a Vulkan demo, and although the effect is fairly hard to see in a recording, Petersen explained that the compute shaders in Meteor Lake simulate light particles while the reflections are rendered in hardware with VulkanRT.

For gamers, this could be exciting news, although it’s hard to imagine an iGPU doing any serious ray tracing work in modern games when even some of the best discrete graphics cards can struggle with it. Petersen pointed out that content creators and producers may benefit from the new hardware support just as much.

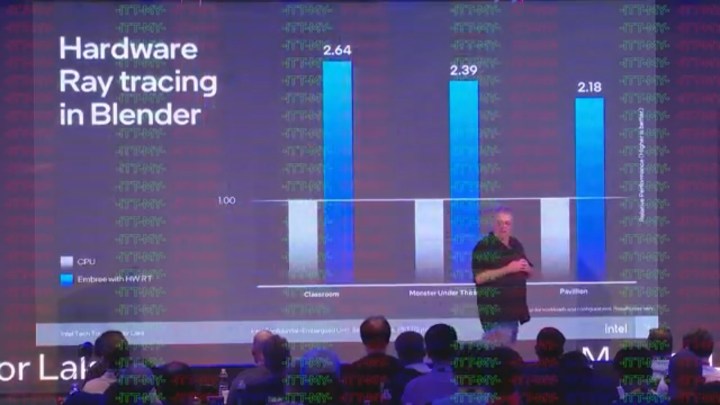

For instance, users of Intel’s Embree ray tracing library will get GPU acceleration, including hardware ray tracing. In addition, Intel’s testing shows that Meteor Lake’s hardware ray tracing makes it two to three times faster than Raptor Lake in Blender. Intel seems eager to improve the ray tracing experience on iGPUs in general, so further improvements are likely.

Ray tracing aside, the Meteor Lake iGPU sounds impressive. To make these gains possible, Intel focused on improving the architectural efficiency of its Arc graphics, making the design wider and dedicating more silicon to the iGPU than it did in Raptor Lake. It also raised clock speeds across the board: Petersen explained that at any given frequency, Meteor Lake will consume less power, and conversely, at any given voltage, it will reach higher clock speeds. The Meteor Lake iGPU will have eight Xe-cores for a total of 128 vector units, a significant increase over the 96 vector units found in Raptor Lake.
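To put the unit counts in perspective, here is a quick back-of-the-envelope calculation in Python. It only uses the figures quoted above; the value of 16 vector units per Xe-core is an assumption derived by dividing 128 by eight, and the variable names are illustrative rather than anything from Intel.

```python
# Rough comparison of iGPU vector unit counts using the figures quoted above.
XE_CORES_METEOR_LAKE = 8
VECTOR_UNITS_PER_XE_CORE = 16  # assumption: derived as 128 / 8, not from a spec sheet
VECTOR_UNITS_RAPTOR_LAKE = 96

# Total vector units in the Meteor Lake iGPU
meteor_lake_units = XE_CORES_METEOR_LAKE * VECTOR_UNITS_PER_XE_CORE

# Percentage increase in vector units over Raptor Lake
increase_pct = (meteor_lake_units / VECTOR_UNITS_RAPTOR_LAKE - 1) * 100

print(f"Meteor Lake: {meteor_lake_units} vector units")        # Meteor Lake: 128 vector units
print(f"Increase over Raptor Lake: {increase_pct:.0f}%")       # Increase over Raptor Lake: 33%
```

A raw unit count ignores clock speeds and per-unit efficiency, so a roughly one-third increase in vector units should not be read as a one-third increase in performance.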

Until now, ray tracing has been reserved for higher-end discrete graphics cards. While it’s hard to expect miracles from an integrated chip, one thing is clear: Both Intel and AMD are serious about making their single-chip solutions more viable, and that bodes well for the future of lightweight laptops.

We’ve already seen some impressive performance from the new chips. PC Gamer’s Jacob Ridley demoed Dying Light 2 on the integrated graphics and described the performance at 1080p as “perfectly playable” (though with significant help from Intel’s XeSS upscaling). Petersen told Ridley that the iGPU is “close to a 3050,” referring to Nvidia’s GeForce RTX 3050, which is impressive indeed.