- Great 1080p and 1440p gaming performance

- Competitive ray tracing performance

- Relatively inexpensive

- Resizable BAR is necessary

- XeSS needs some work

Intel’s Arc A770 and A750 seemed destined to fail. It’s been decades since Intel first conceived the idea of making a discrete gaming graphics card, but the Arc A770 and A750 are the first cards to make it out of the prototype stage. And despite rumors of cancelation, various delays, and seemingly endless bugs, the Arc A770 and A750 GPUs are here. And here’s the shocker: They’re really good.

With GPU prices on an upward trajectory, the Arc A770 and A750 could represent a massive price correction. They’ll surely be beaten out by upcoming Nvidia and AMD GPUs around the same price when the time comes, but for the next year at least, Intel is a serious competitor in the $250 to $350 range.

A note on compatibility

Before I get into the juicy performance testing, a word needs to be said about compatibility.

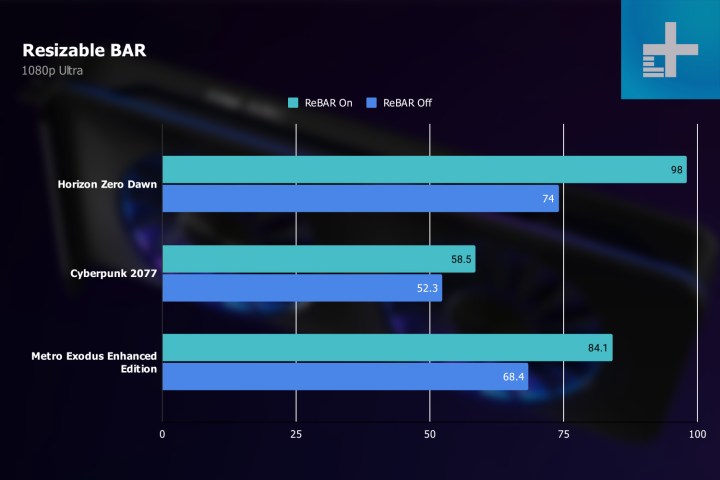

Intel says the Arc A770 and A750 require a 10th-gen Intel CPU or AMD Ryzen 3000 CPU or newer. That’s because Arc Alchemist cards benefit a lot from Resizable BAR, which is only available on the last few generations of processors. The cards will work with older CPUs, but you’ll have much lower performance if ReBAR is turned off.

I didn’t retest my entire suite because the differences became clear within a few games. With the A770 at 1080p, my frame rate dropped by 24% in Horizon Zero Dawn. In Metro Exodus, the drop was nearly 19%. Keep in mind that the only difference between the results was turning ReBAR off in the BIOS. Nothing else was changed.

That’s a large enough performance gap to call ReBAR an essential feature. Although it’s a good idea to keep ReBAR on with any GPU, recent AMD and Nvidia architectures don’t need it to perform at the level they should.

If you were planning on throwing an Arc GPU into an older system without ReBAR support, you’re going to have a much worse experience.
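Those ReBAR percentages can also be read in the other direction. As a rough sketch that uses only the two drops quoted above (the function name is mine, purely for illustration), here is how a drop from turning ReBAR off translates into the gain from turning it back on:

```python
def gain_from_drop(drop_percent: float) -> float:
    """Convert a percentage drop into the percentage gain needed to undo it."""
    remaining = 1 - drop_percent / 100  # fraction of the frame rate that is left
    return (1 / remaining - 1) * 100

# Drops seen on the A770 at 1080p with ReBAR turned off (Metro is "nearly" 19%)
drops = {"Horizon Zero Dawn": 24, "Metro Exodus": 19}

for game, drop in drops.items():
    gain = gain_from_drop(drop)
    print(f"{game}: -{drop}% with ReBAR off, about +{gain:.1f}% with it back on")
```

Because the gain is measured from the lower frame rate, a 24% drop corresponds to a gain of roughly 32% once ReBAR is enabled again.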

Arc A770 and A750 specs

There’s nothing too interesting about the Arc A770 and A750’s specs. The biggest note is that Intel is using dedicated ray tracing cores unlike AMD’s RX 6000 graphics cards. That gives Arc a big boost in ray tracing, as I’ll explore later in this review.

| | A770 | A750 | A580 | A380 |
| --- | --- | --- | --- | --- |
| Xe Cores | 32 | 28 | 24 | 8 |
| XMX Engines | 512 | 448 | 384 | 128 |
| Ray tracing cores | 32 | 28 | 24 | 8 |
| Clock speed | 2,100MHz | 2,050MHz | 1,700MHz | 2,000MHz |
| VRAM | 8/16GB GDDR6 | 8GB GDDR6 | 8GB GDDR6 | 6GB GDDR6 |
| Memory bus | 256-bit | 256-bit | 256-bit | 96-bit |
| PCIe interface | PCIe 4.0 x16 | PCIe 4.0 x16 | PCIe 4.0 x16 | PCIe 4.0 x8 |
| Power draw | 225W | 225W | 175W | 75W |
| List price | $330 (8GB), $350 (16GB) | $290 | TBA | TBA |
| Release date | October 12, 2022 | October 12, 2022 | TBA | TBA |

I included the A580 and A380 specs for reference, but we don’t have pricing or a release date for those cards yet. For the cards we have, Intel is offering its Limited Edition models at the prices listed above. These cards aren’t actually limited; they’re similar to Nvidia’s Founders Edition cards. Board partners will release their own models, which you can expect to sell for slightly more than list price.

The Arc A770 is the only oddball in the lineup, as Intel has 8GB and 16GB versions. They’re identical otherwise, and Intel says it will only sell the 16GB version as a Limited Edition. Right now, it’s tough to say how many 8GB models we’ll see or whether third-party 16GB models will sell for much more.

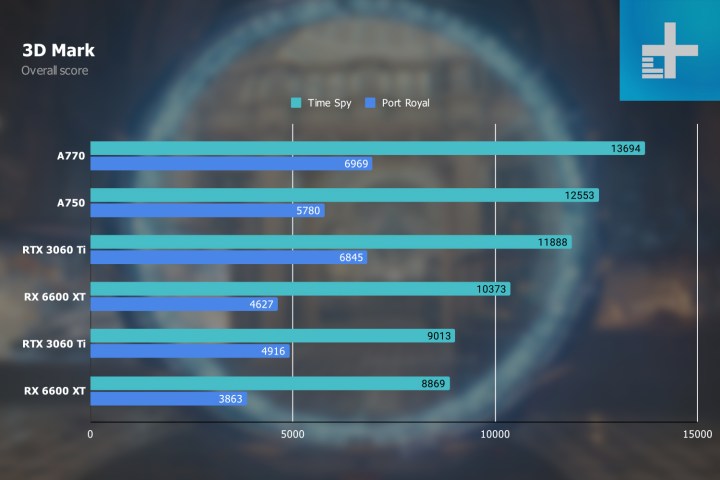

Synthetic and rendering

Before getting into actual games, let’s start with some synthetic benchmarks. Across 3DMark’s Port Royal and Time Spy, the Arc A770 and A750 lead compared to all of the competing GPUs from Nvidia and AMD. That seems cut and dried, but Arc has specific optimizations for 3DMark, allowing the Arc GPUs to achieve a higher score in Time Spy.

The Port Royal ray tracing benchmark is much more competitive, with the Arc A770 matching the RTX 3060 Ti but the Arc A750 falling short. AMD’s GPUs are leagues behind in Port Royal, which isn’t surprising considering the limited ray tracing power AMD’s graphics cards currently have.

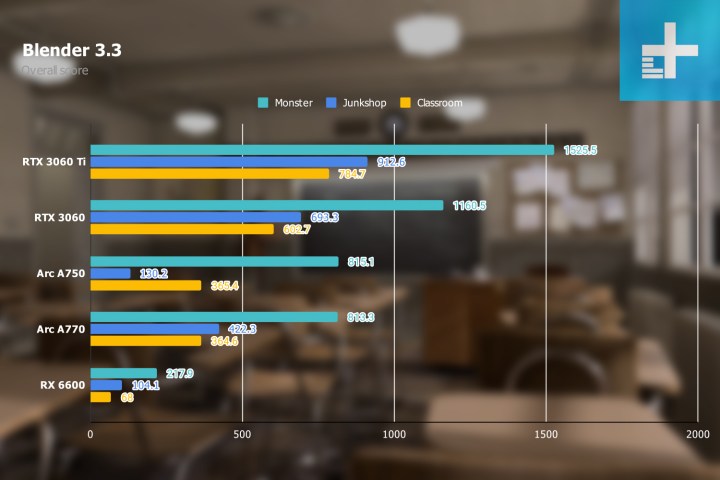

Blender is a bit more interesting. Nvidia leads the pack due to CUDA acceleration inside Blender, and AMD’s cards aren’t even close. The Arc GPUs are still far behind Nvidia, but they’re much closer than anything AMD is offering right now.

There’s a lot of inconsistency in my results, too, with the Arc A750 actually putting up slightly higher numbers than the A770. That suggests there’s some untapped power with rendering apps like Blender, but it remains to be seen if Intel will optimize this workload.

1080p gaming

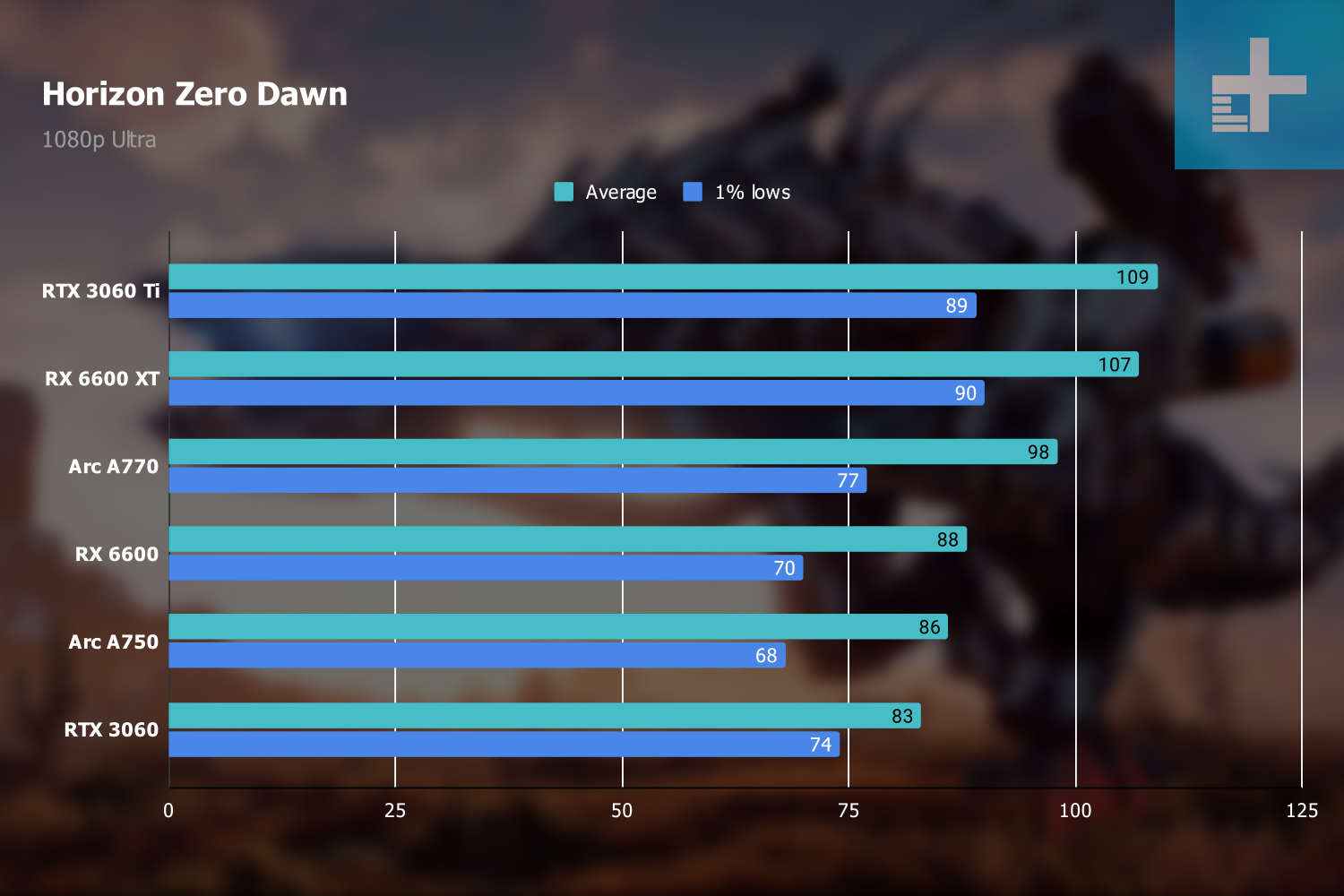

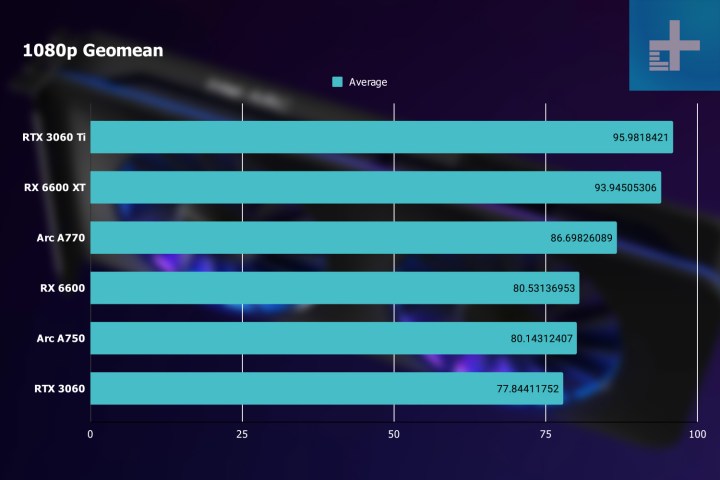

The Arc A770 and A750 are targeting 1080p, and compared to the RTX 3060, they shine. Across my suite of six games, the A750 manages a small lead of 3%, but the A770 shoots ahead with an 11% boost. Keep in mind the price here. Intel is asking $350 for the 16GB A770, while the cheapest RTX 3060 I could find at the time of writing is $380.

If anything, Intel has more competition from AMD. The RX 6600 XT has a solid 8% lead over the A770 and a 17% lead over the A750, and it’s around $350 right now. There’s a little more to the story here, though.

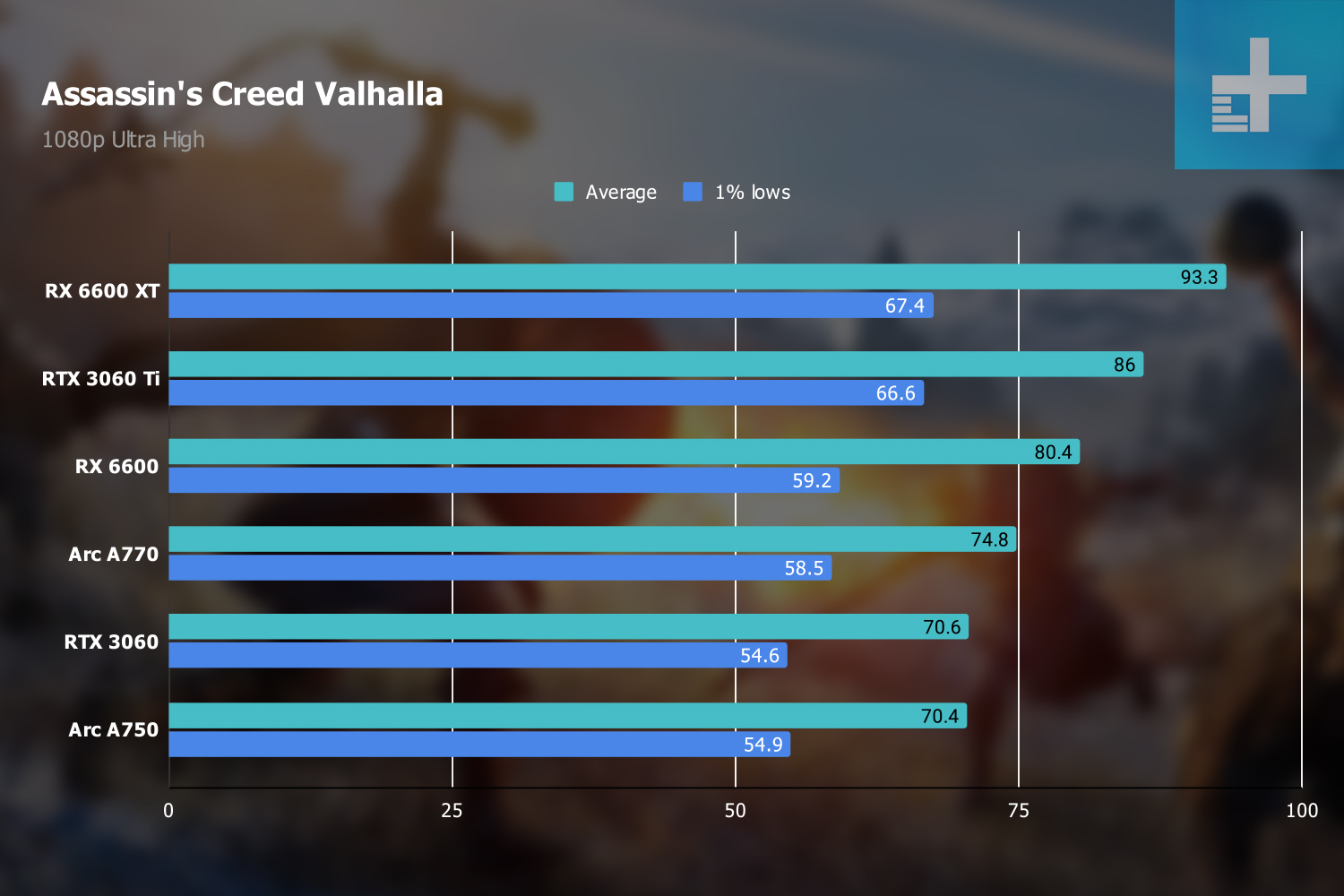

These tests were run on my Ryzen 9 7950X test bench. Intel strongly recommends using Resizable BAR for Arc GPUs, so I left it on, which means that the AMD cards saw a boost from Smart Access Memory (SAM), AMD’s name for the same feature. SAM favors 1080p, and in titles like Assassin’s Creed Valhalla, it can provide a huge boost to AMD’s GPUs. That pushes the RX 6600 XT higher in these results, an advantage that starts to disappear at 1440p.

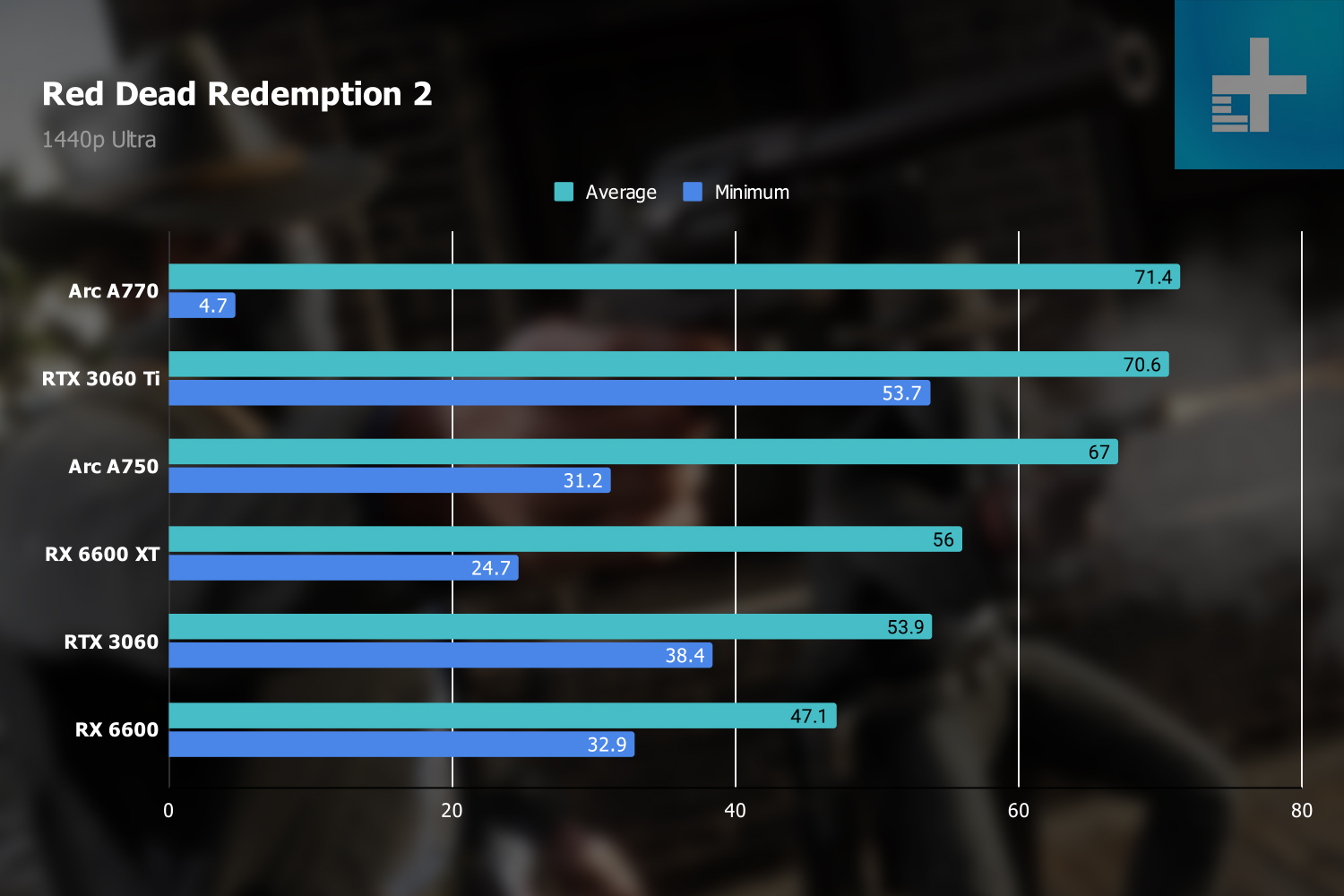

Digging into specifics, Red Dead Redemption 2 was a surprising win for Intel’s GPUs. In this test, the A750 nearly matched the RTX 3060 Ti while the A770 went even higher. This is the only game in my test suite that uses the Vulkan API, and Intel’s drivers seem particularly optimized for Vulkan titles.

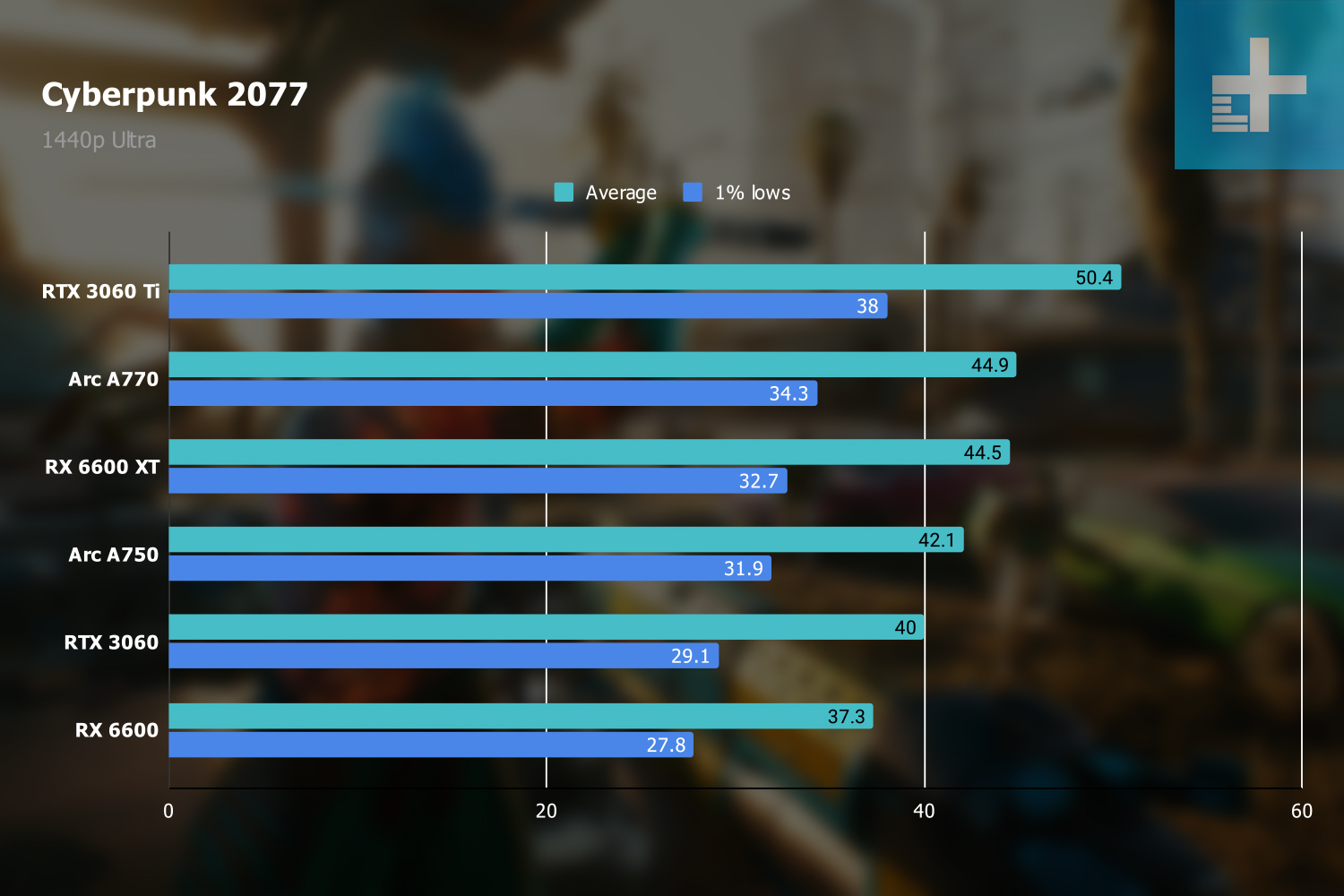

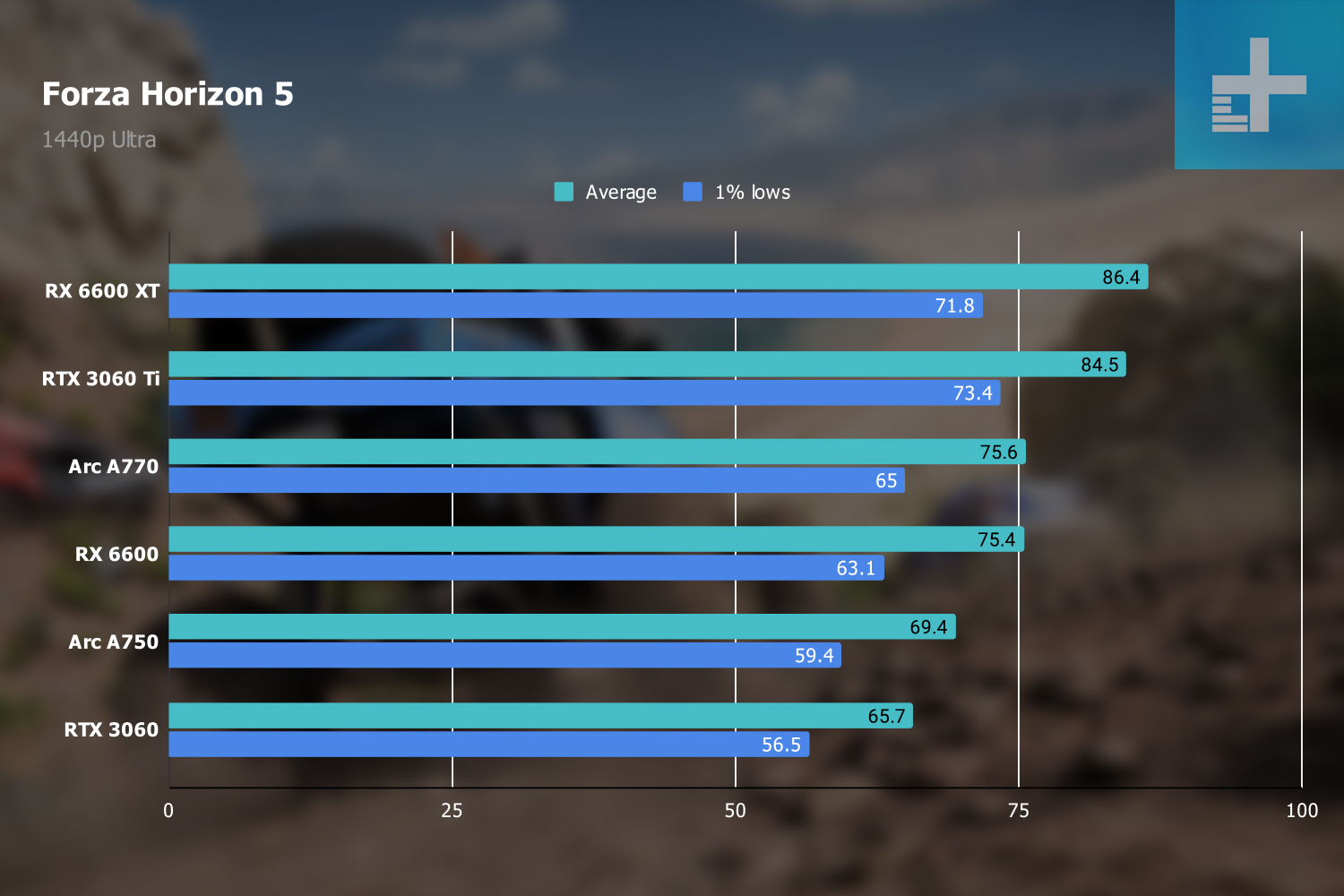

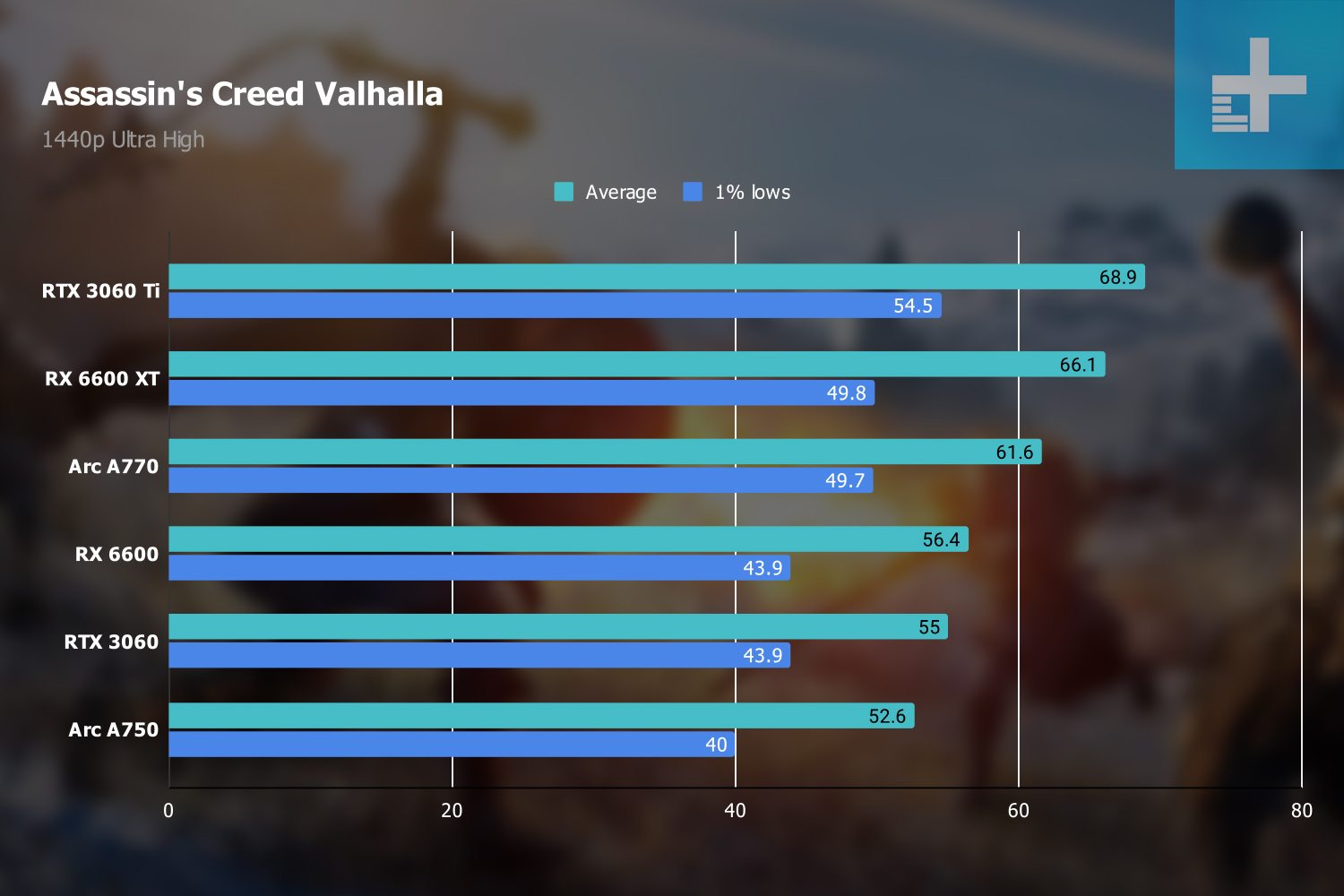

DirectX 12 titles, which comprise the rest of my test suite, don’t show such stark leads. In Cyberpunk 2077, for example, the Arc GPUs trail the entire pack. And in AMD-promoted titles like Forza Horizon 5, the Arc GPUs manage to beat the RTX 3060 but fall vastly short of AMD’s RX 6600 XT.

Intel delivered on its promise of providing better value than Nvidia’s current offerings. Short of Cyberpunk 2077, even the Arc A750 managed to beat the RTX 3060 in all my tests at 1080p. But if you don’t care about ray tracing and don’t need the AI-boosted XeSS tech available in Arc GPUs, the RX 6600 XT is a much more compelling GPU at 1080p, provided you have an AMD CPU.

1440p gaming

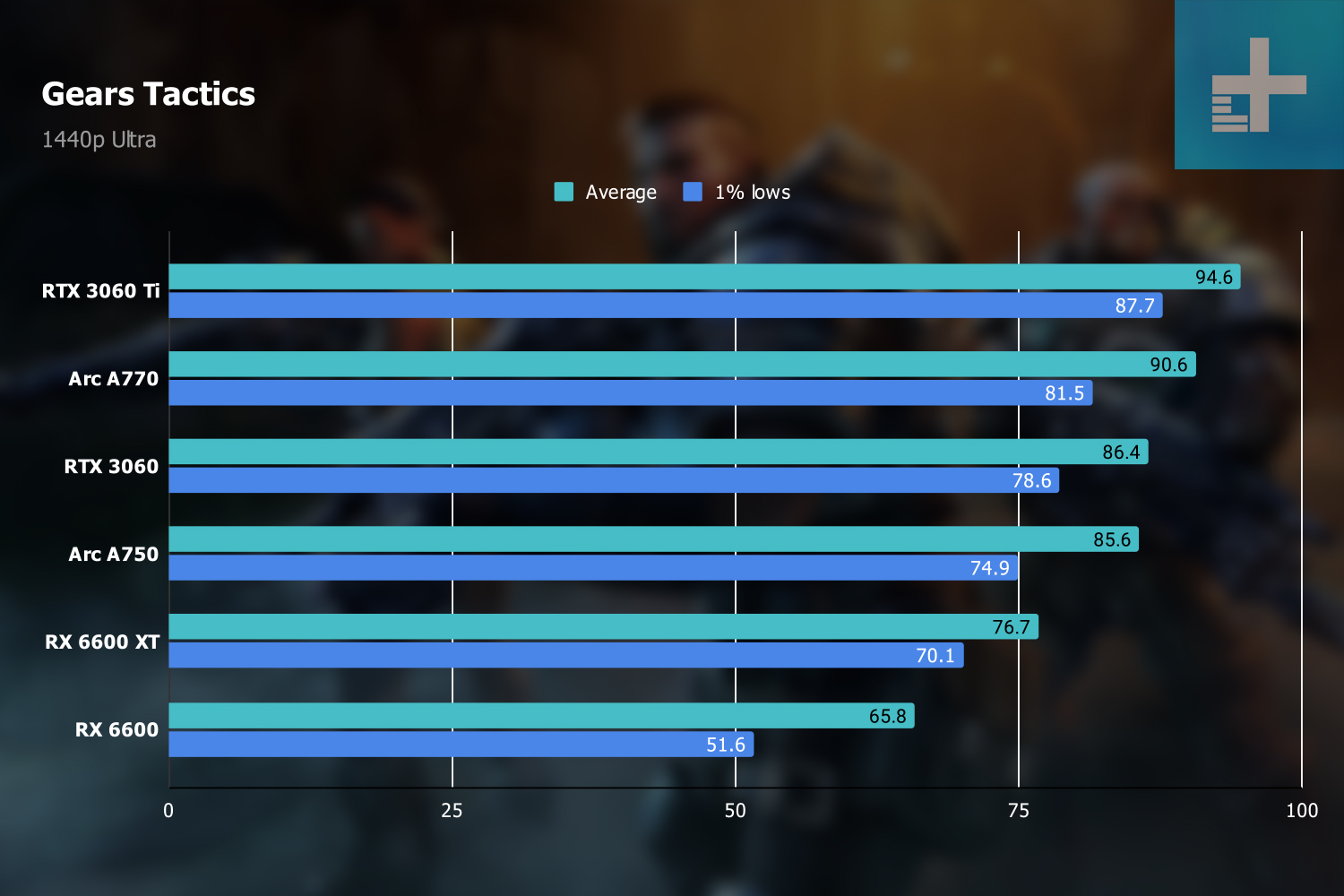

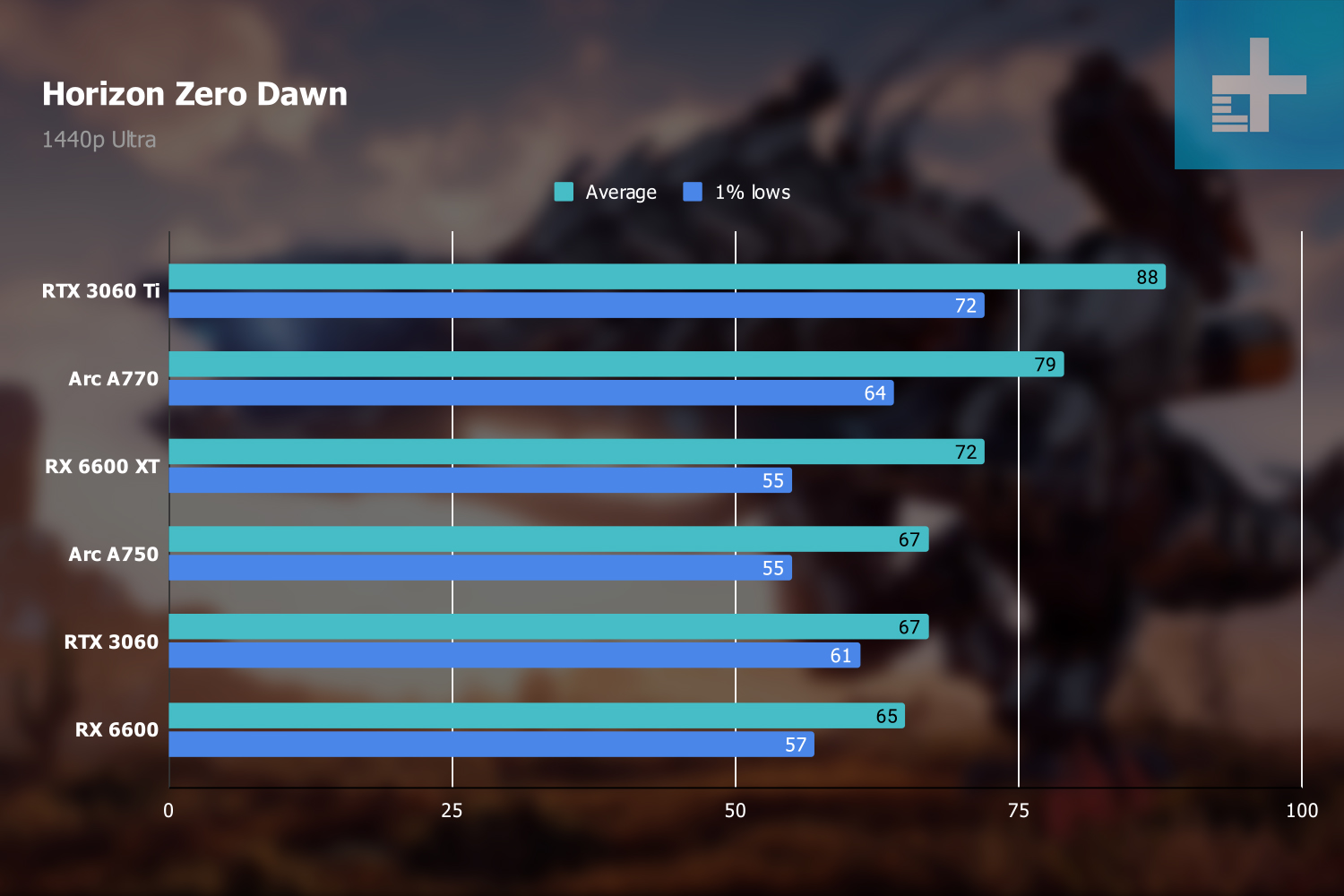

1440p is where things get interesting for the Arc A750 and A770. ReBAR and SAM still apply, but AMD’s cards aren’t seeing nearly as large a boost as titles start becoming limited by the GPU. Here, the A770 and A750 flank the RX 6600 XT while still managing a lead of 15% and 5% over the RTX 3060, respectively.

5% isn’t much of a lead for the A750, but it’s still impressive considering Intel’s pricing. Even the cheapest RTX 3060 will run you $90 more than the A750, and it comes with a performance disadvantage. The A770 trades blows with the RTX 3060 Ti, as well. Although the RTX 3060 Ti holds a solid 8% lead, it’s also at least $100 more expensive than the Arc A770 at the time of writing.

In some cases, the A770 actually leads. Once again, Intel’s GPUs favor Vulkan in Red Dead Redemption 2, which helped the A770 beat the RTX 3060 Ti in that game. Both the A770 and A750 had stronger showings in the DirectX 12 titles, as well. In Cyberpunk 2077, for example, the RTX 3060 and RX 6600 trail the pack instead of Intel’s GPUs, with the A770 nabbing the second-highest result.

It’s not all perfect, though. In AMD-promoted titles like Assassin’s Creed Valhalla and Forza Horizon 5, the RX 6600 XT still offers the best performance, even without as large a boost from SAM.

Although the A770 and A750 aren’t targeting 1440p, they put up impressive results. A 79 fps average in Horizon Zero Dawn and a 61 fps average in Assassin’s Creed Valhalla, both with maxed-out settings, are nothing to sneeze at. AMD’s GPUs may eke out a lead with SAM in specific titles, but Intel’s offerings are bringing better performance overall.

The competition from Nvidia isn’t even close. The RTX 3060 Ti leads in overall performance, sure, but the price delta is too big to ignore. Even many RTX 3060 models sell above $400 despite the fact that the cheaper A750 and A770 can outperform the RTX 3060 at 1080p and 1440p.
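To make that price delta concrete, here is a back-of-the-envelope sketch of performance per dollar at 1440p. It uses only the percentage leads and prices quoted in this review, normalized so the RTX 3060 equals 1.00. The $450 figure for the RTX 3060 Ti is an assumption taken from "at least $100 more" than the A770, so treat that row as a best case for Nvidia, and the function and variable names are just illustrative.

```python
# Relative 1440p performance, normalized to the RTX 3060 = 1.00,
# built from the percentage leads quoted in this review.
cards = {
    "RTX 3060":    {"perf": 1.00,        "price": 380},  # cheapest model found
    "Arc A750":    {"perf": 1.05,        "price": 290},  # 5% ahead of the RTX 3060
    "Arc A770":    {"perf": 1.15,        "price": 350},  # 15% ahead, 16GB model
    # 8% ahead of the A770; $450 is an assumed floor ("at least $100 more")
    "RTX 3060 Ti": {"perf": 1.15 * 1.08, "price": 450},
}

def value_vs_baseline(cards, baseline="RTX 3060"):
    """Return each card's performance per dollar relative to the baseline card."""
    base = cards[baseline]["perf"] / cards[baseline]["price"]
    return {name: (c["perf"] / c["price"]) / base for name, c in cards.items()}

value = value_vs_baseline(cards)
for name, v in sorted(value.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{name}: {v:.2f}x the performance per dollar of the RTX 3060")
```

On these numbers, the A750 comes out at roughly 1.4 times the performance per dollar of the cheapest RTX 3060, while the RTX 3060 Ti lands only slightly above it. Street prices move quickly, so the ranking is only as good as the prices plugged in.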

Competitive ray tracing

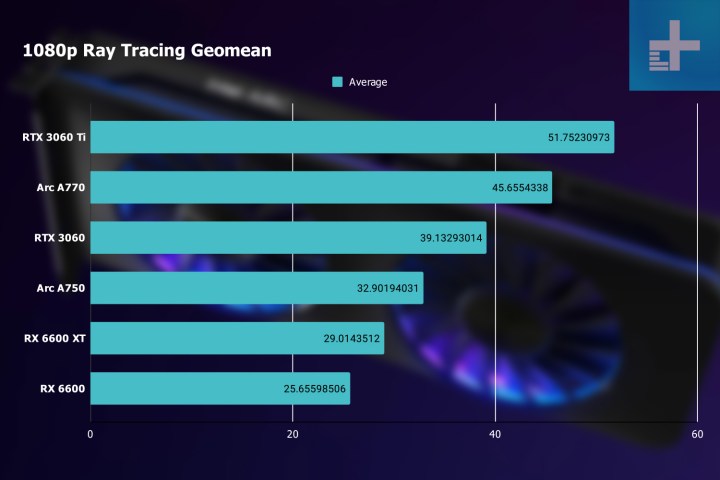

Raw performance for the Arc A750 and A770 is competitive, but Arc really gets exciting when ray tracing is brought into the mix. The RX 6600 and RX 6600 XT are solid value alternatives to Nvidia’s RTX 3060 cards, but they have terrible ray tracing performance. The Arc A750 and A770, on the other hand, are going toe-to-toe with Nvidia on ray tracing.

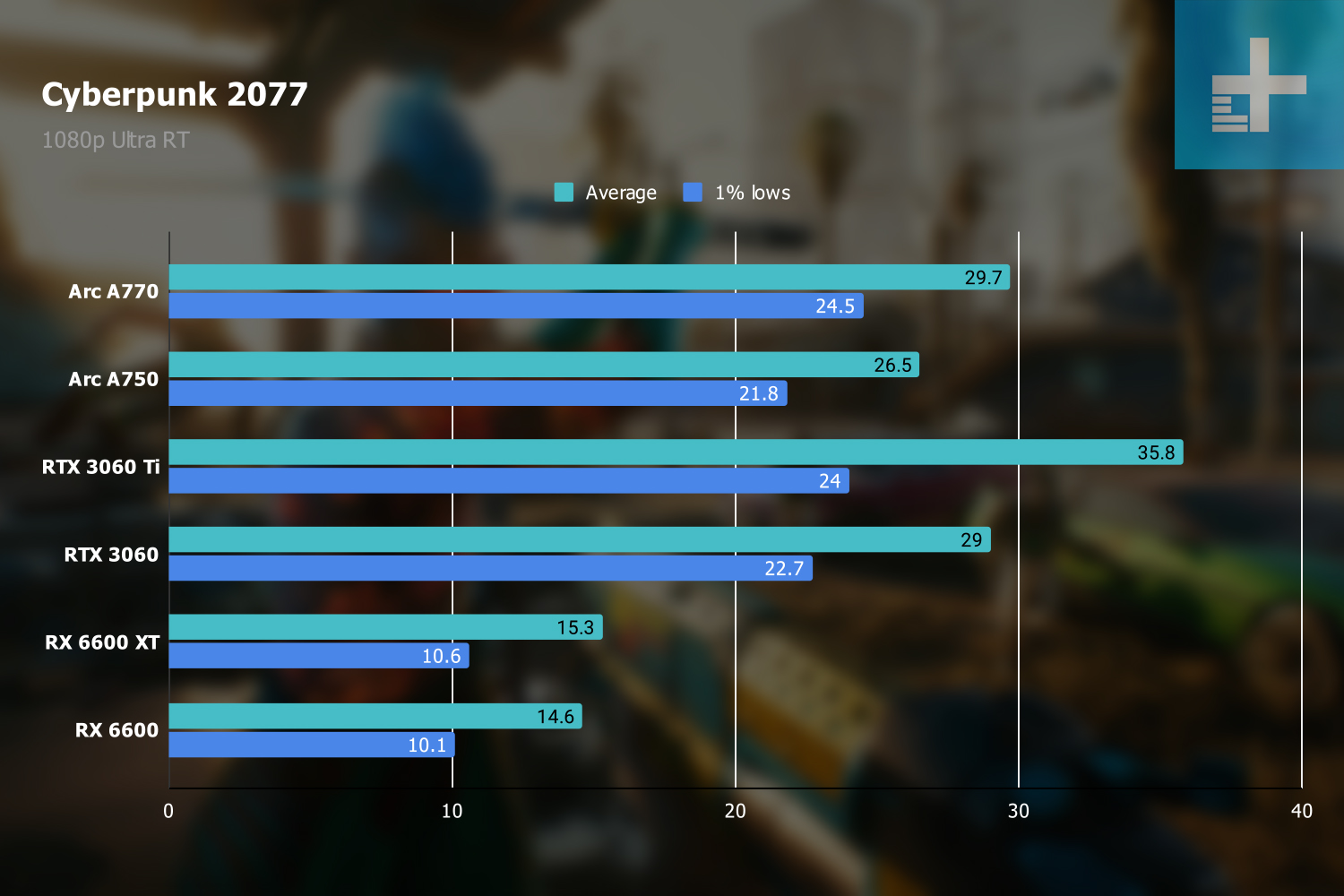

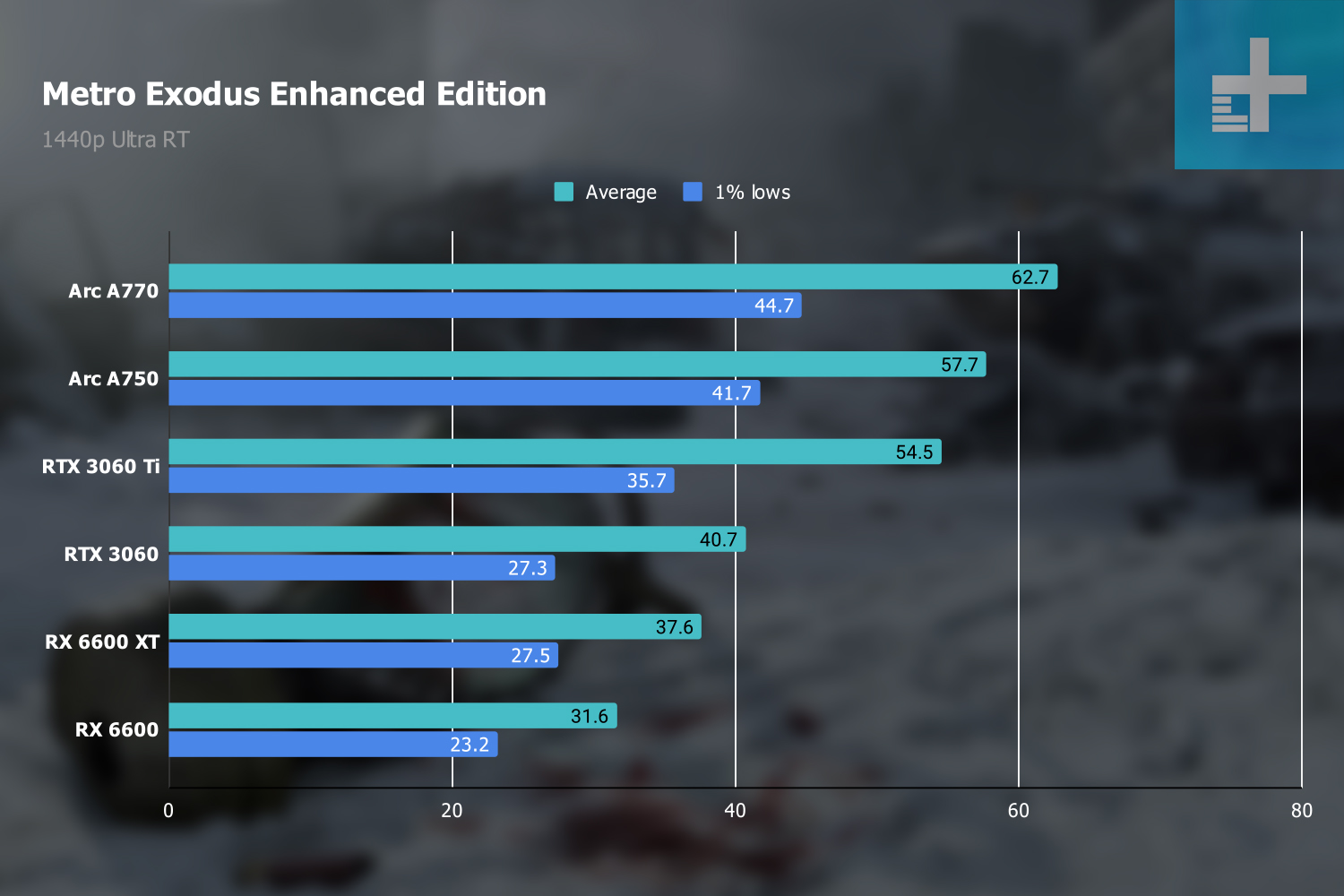

At 1080p, the RTX 3060 is about 19% ahead of the A750 in my ray tracing benchmarks, but the A770 16GB is 17% ahead of the RTX 3060. Nvidia still holds the crown when it comes to ray tracing, but it no longer wins by default. The ray tracing power inside Arc GPUs is much higher than what AMD is currently offering, and in some cases, even higher than what Nvidia has to offer.

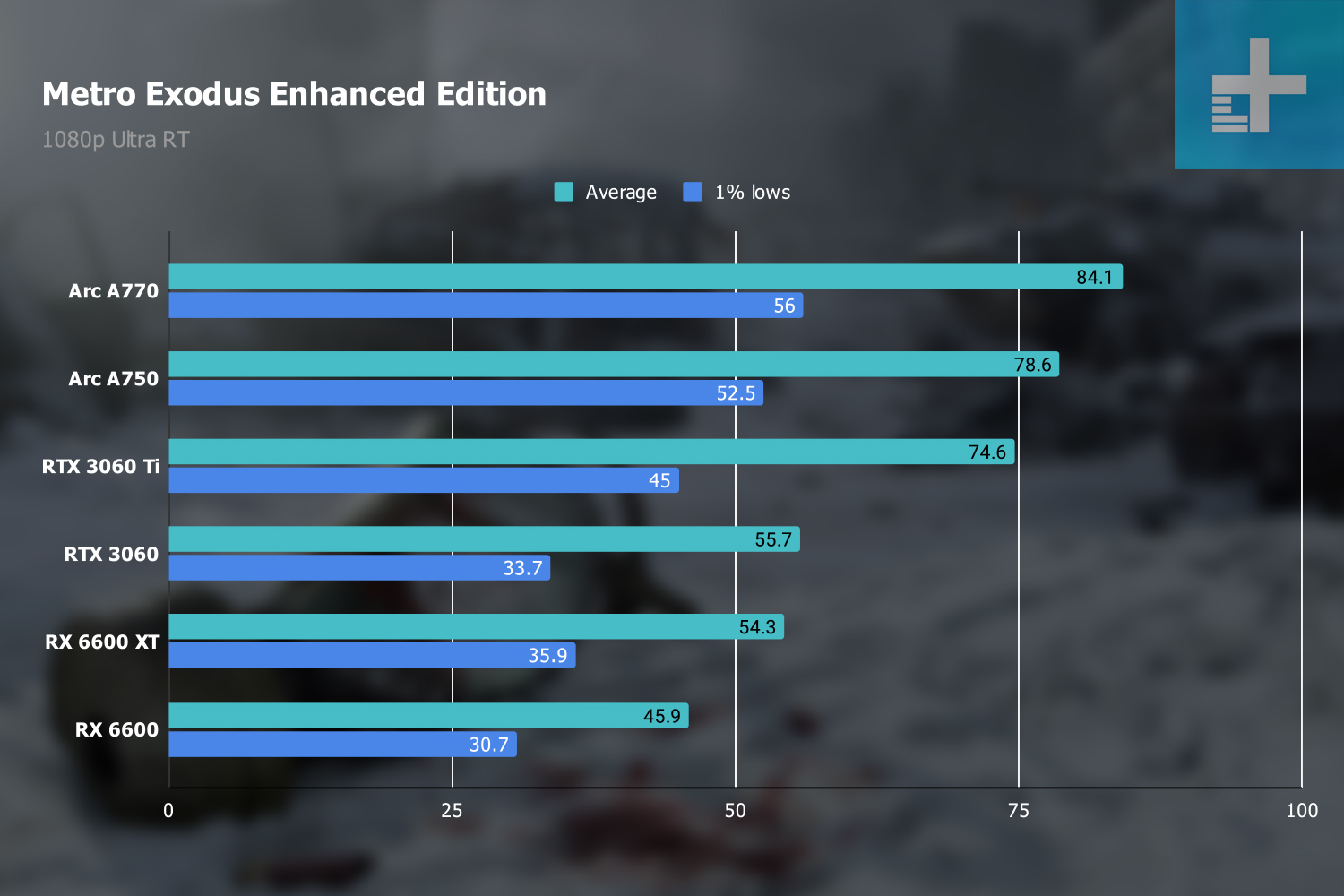

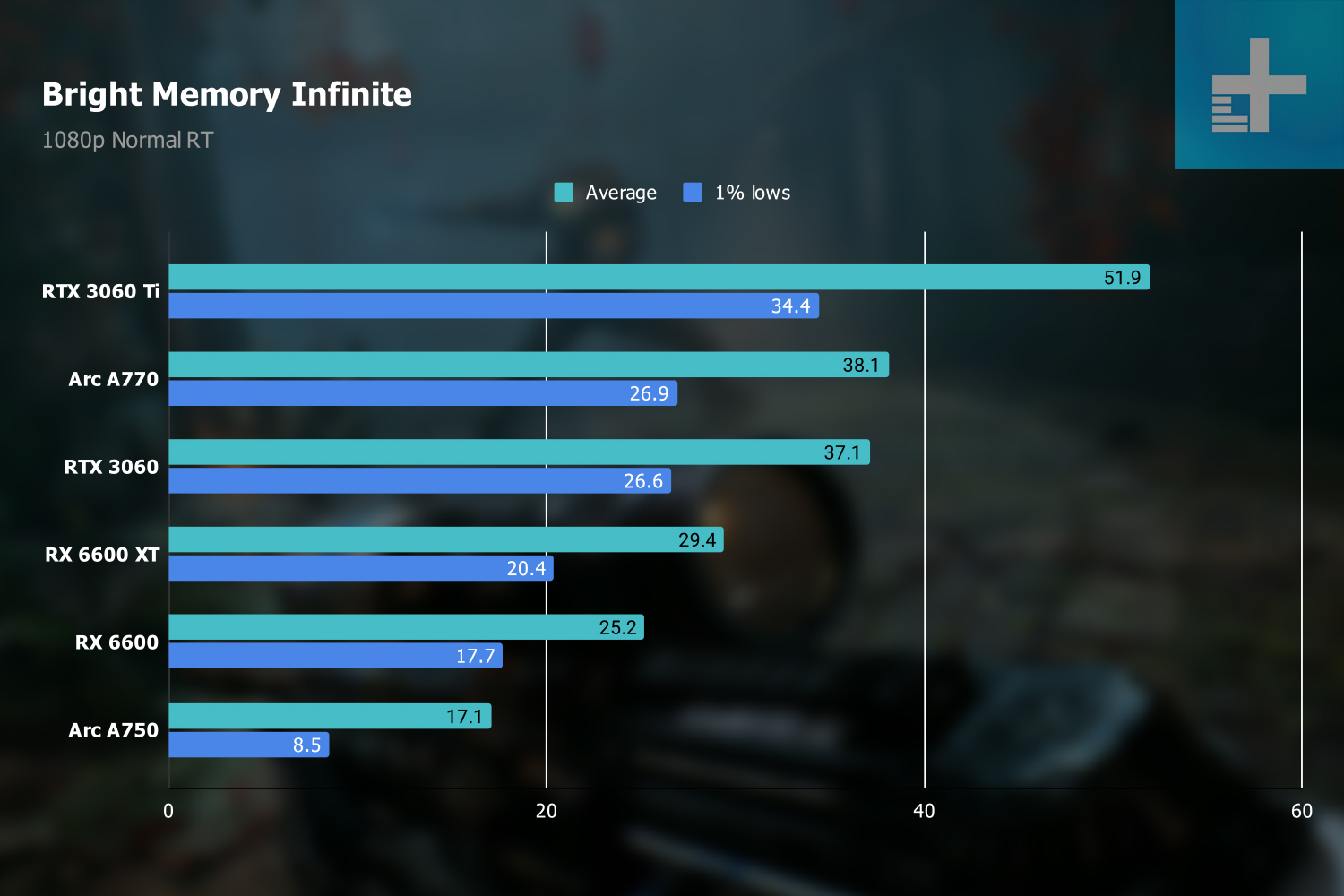

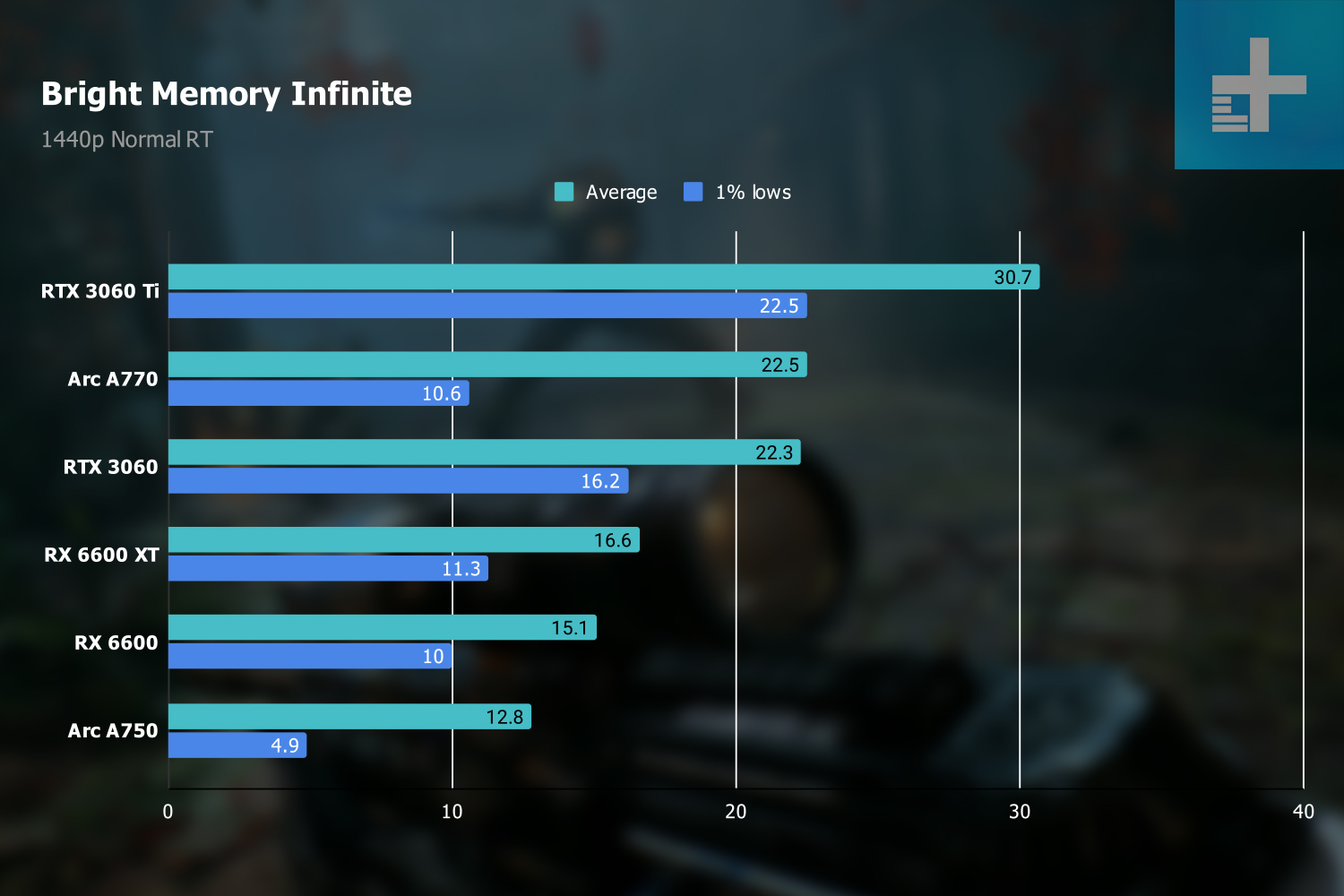

In Metro Exodus Enhanced Edition, for example, both Arc GPUs lead the pack, with the Arc A770 showing a 13% lead over even the RTX 3060 Ti. The A770 still takes a back seat in Cyberpunk 2077 and Bright Memory Infinite, but Intel’s lineup is trading blows well. This is the first time since real-time ray tracing made its way to games that Nvidia has had a serious competitor.

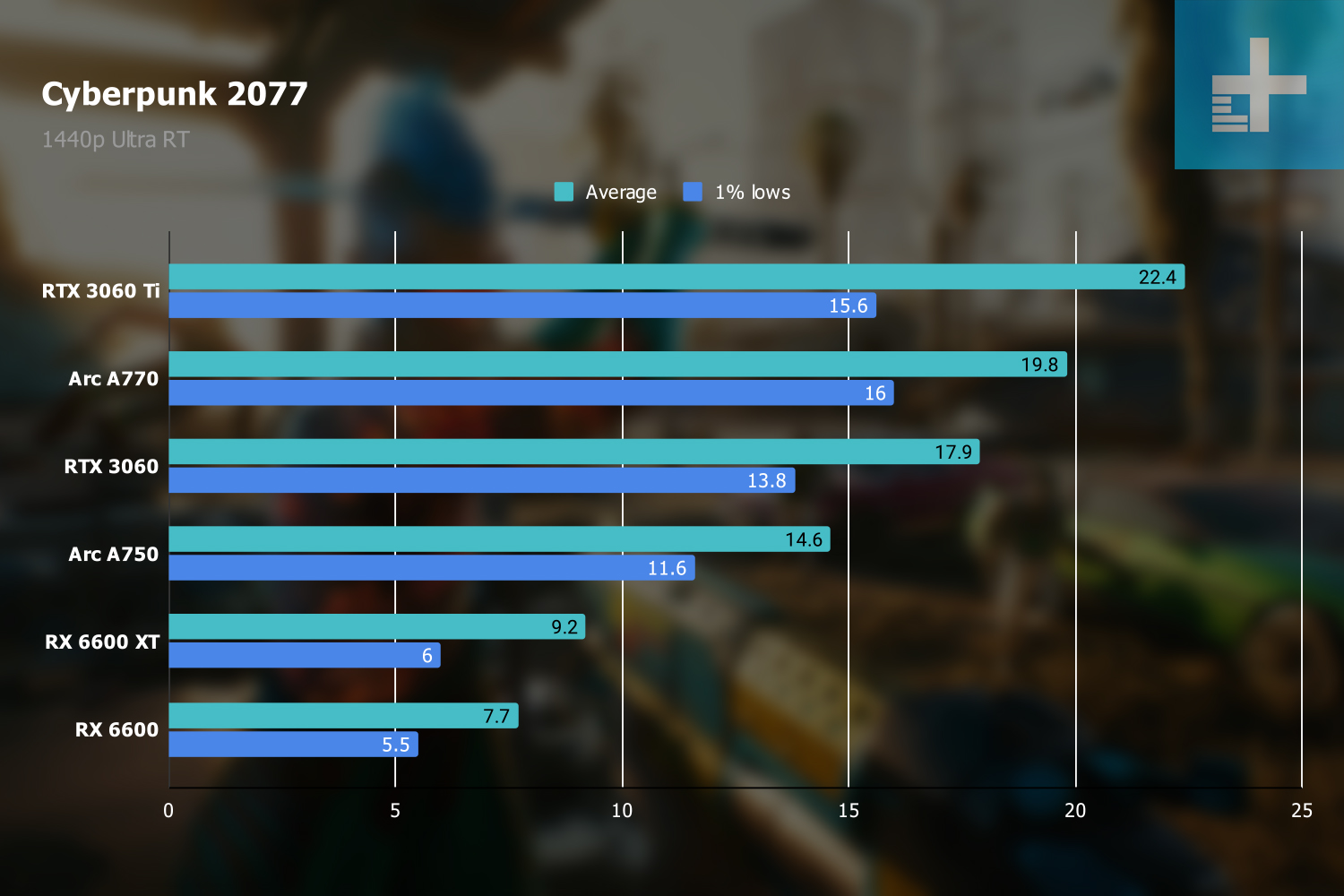

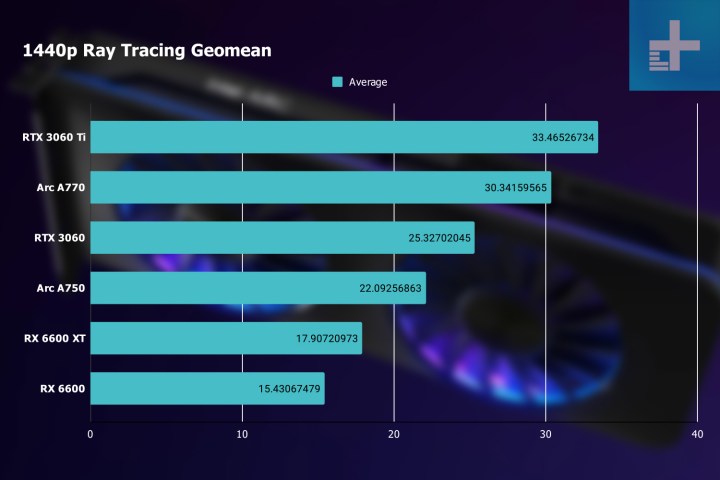

My 1440p results tell the same story, but the competition between Nvidia and Intel gets hotter as the results tighten. The RTX 3060’s lead over the A750 shrinks to 12%, while the A770 stretches its lead over the RTX 3060 slightly to 19%. Similarly, the 13% lead the RTX 3060 Ti shows over the A770 at 1080p drops to 10% at 1440p.

AMD’s GPUs hold up in rasterized performance and sometimes even look like a better value compared to what Intel is offering. Ray tracing flips that story. The Arc A750, and especially the A770, are true RTX 3060 competitors in that they not only compete on rasterized performance but also when ray tracing is factored in.

Testing Intel’s XeSS

Outside of ray tracing, Intel also has Xe Super Sampling (XeSS) to compete against Nvidia’s Deep Learning Super Sampling (DLSS). XeSS works a lot like DLSS. In supported titles, the game is rendered at a lower resolution and upscaled, utilizing dedicated AI hardware inside the GPU to reproduce an image that looks as close to native resolution as possible. That’s the idea, but Intel has a spin: XeSS doesn’t require an Intel graphics card. DLSS requires an Nvidia RTX GPU.

How is Intel doing this? There are two versions of XeSS, and you’ll get whichever one your GPU supports by default. The main version is for Arc GPUs. This uses an advanced upscaling model and is accelerated by Arc’s dedicated XMX AI cores. The other relies on a simpler upscaling model and uses DP4a instructions. In short, both versions use AI, but DP4a can’t handle the complexity that the XMX cores can, so it uses a simpler model on GPUs from AMD and Nvidia.
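For context on that second path: DP4a is a GPU instruction that multiplies four pairs of 8-bit integers and adds the results to a 32-bit running total. The Python below only emulates that arithmetic to show what a single step does. It isn’t Intel’s code, the function name and range check are my own illustration, and 32-bit overflow isn’t modeled.

```python
def dp4a(a, b, acc=0):
    """Emulate one DP4a step: a 4-wide int8 dot product added to an accumulator."""
    if len(a) != 4 or len(b) != 4:
        raise ValueError("DP4a works on exactly four values per operand")
    for x in (*a, *b):
        if not -128 <= x <= 127:
            raise ValueError("operands must fit in a signed 8-bit integer")
    return acc + sum(x * y for x, y in zip(a, b))

# A longer int8 dot product is built by chaining DP4a steps, four values at a time.
weights = [1, -2, 3, 4, 5, 6, -7, 8]
inputs = [8, 7, 6, 5, 4, 3, 2, 1]

total = 0
for i in range(0, len(weights), 4):
    total = dp4a(weights[i:i + 4], inputs[i:i + 4], total)

print(total)
```

Neural networks quantized to 8-bit integers boil down to many of these small dot products, which is why a GPU without XMX hardware can still run a lighter version of the model.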

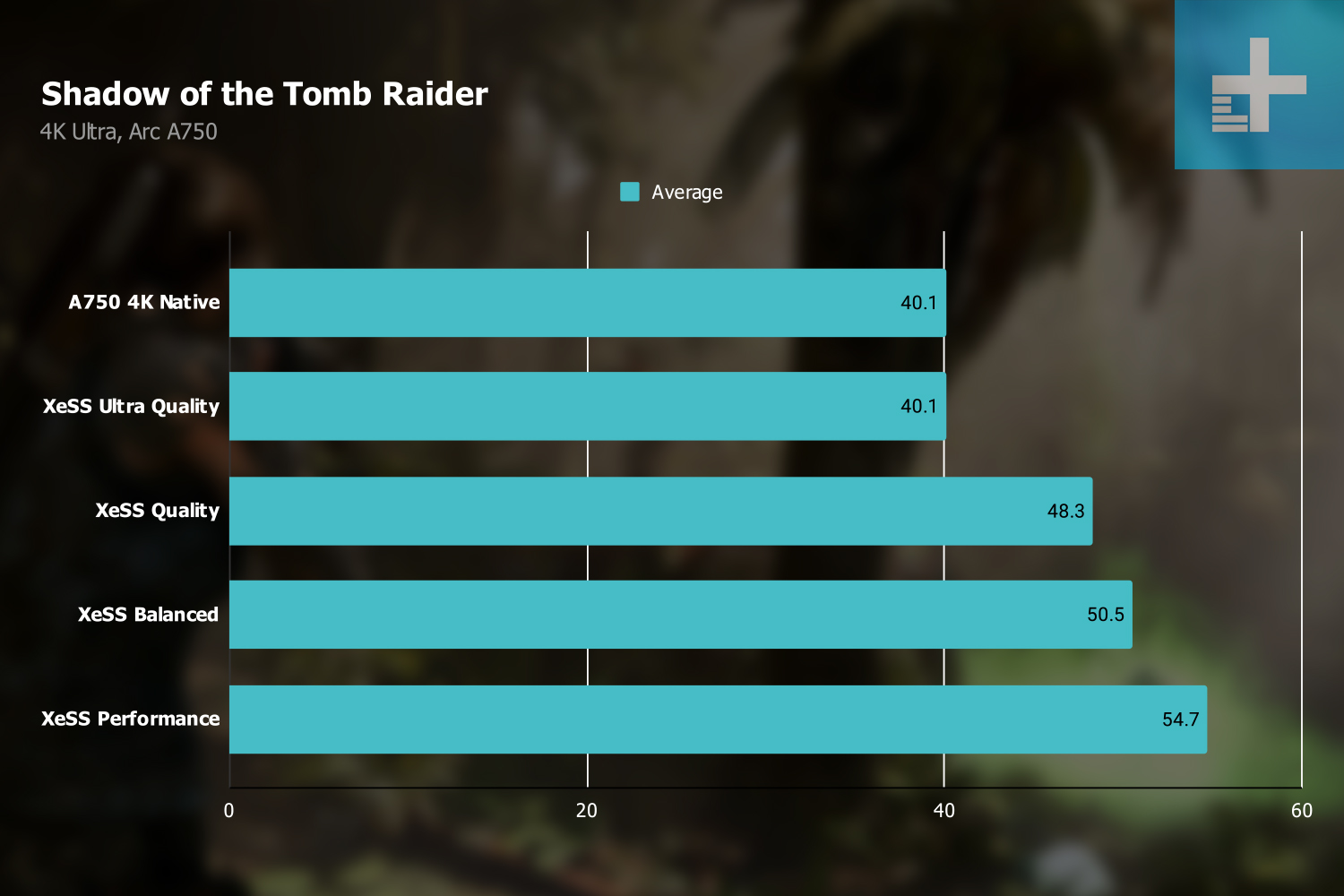

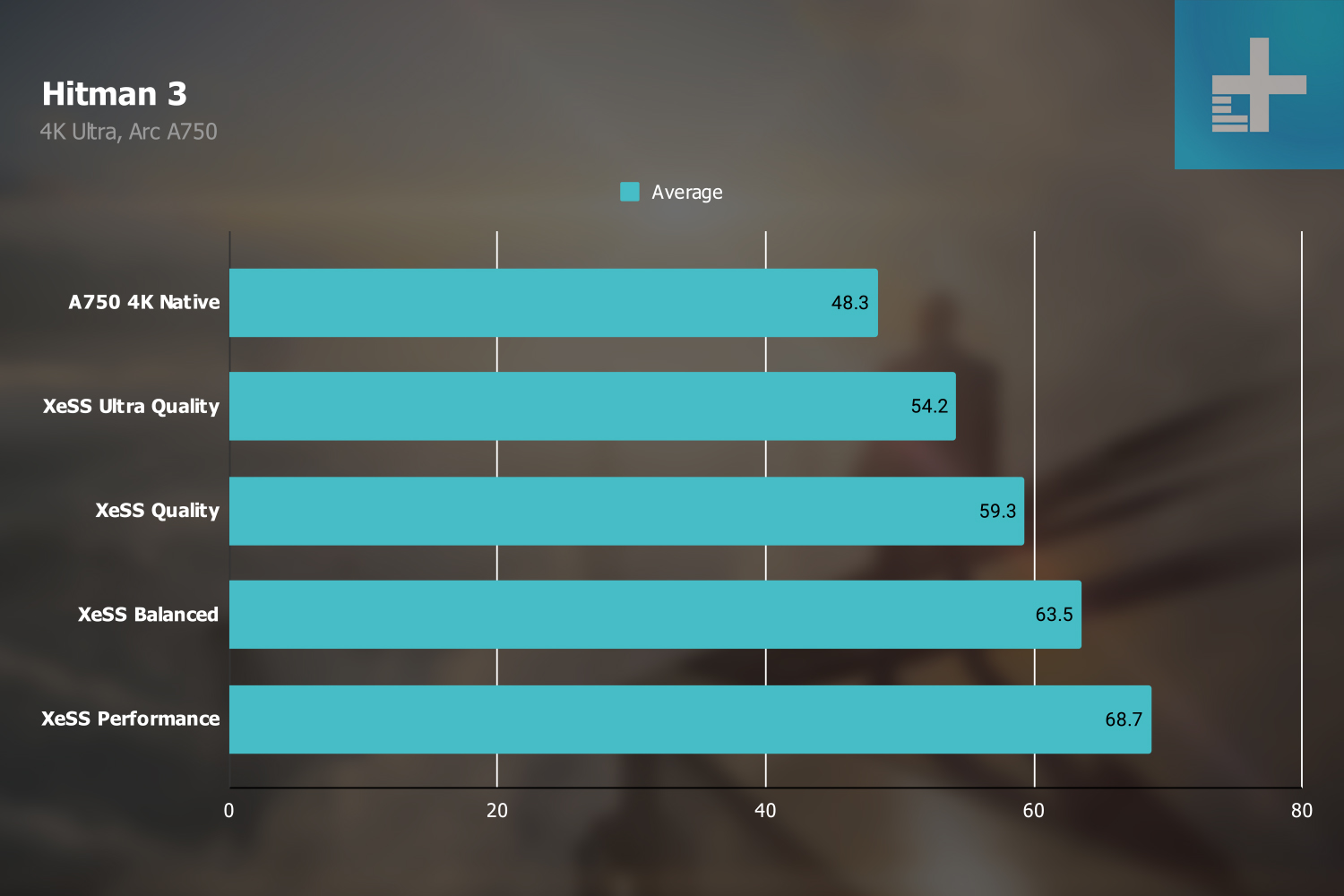

With the Arc A750, XeSS provided a 36.4% increase over native 4K with the Performance mode in Shadow of the Tomb Raider. Hitman 3 saw a larger 42% boost, but this range seems common for XeSS right now. Even in the dedicated XeSS feature test in 3DMark, which should be the best-case scenario for XeSS, the A750 and A770 both saw a 47% improvement.

That’s the advanced XMX version of XeSS. The RTX 3060 uses the DP4a version, but it still shows similar performance boosts. Hitman 3 showed a 38% increase with XeSS Performance, while Shadow of the Tomb Raider saw a 44% jump. You’re getting similar performance gains across XMX and DP4a, which is great for Intel’s upscaling tech.

DLSS puts a damper on things, though. Even the least aggressive DLSS Quality mode provided about the same uplift as XeSS Performance mode. Apples-to-apples, DLSS shows a 63.7% increase in Hitman 3 and a massive 68.9% jump in Shadow of the Tomb Raider. DLSS has its Ultra Performance mode in both of these games, too, which shows even larger improvements.
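To give a sense of what a mode like Performance means at 4K, here is a small sketch that turns an output resolution into the internal resolution a game renders at before upscaling. The per-axis scale factors are assumptions for illustration (values commonly cited for the first XeSS release), not figures from my testing, and the names are hypothetical.

```python
# Per-axis scale factors. These are assumptions for illustration (values
# commonly cited for the first XeSS release), not figures from this review.
ASSUMED_SCALE = {
    "ultra quality": 1.3,
    "quality": 1.5,
    "balanced": 1.7,
    "performance": 2.0,
}

def render_resolution(out_w, out_h, mode):
    """Return the internal (pre-upscale) resolution for an output size and mode."""
    scale = ASSUMED_SCALE[mode]
    return round(out_w / scale), round(out_h / scale)

for mode in ASSUMED_SCALE:
    w, h = render_resolution(3840, 2160, mode)
    share = (w * h) / (3840 * 2160)
    print(f"{mode}: {w}x{h} ({share:.0%} of the pixels at native 4K)")
```

With a 2x per-axis factor, Performance mode at 4K would render only a quarter of the pixels before upscaling, which is where the extra frames come from.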

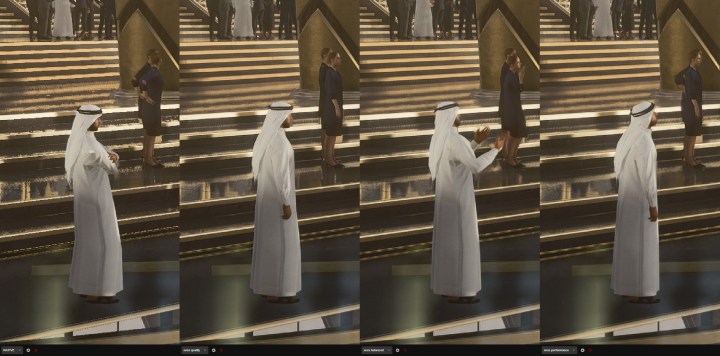

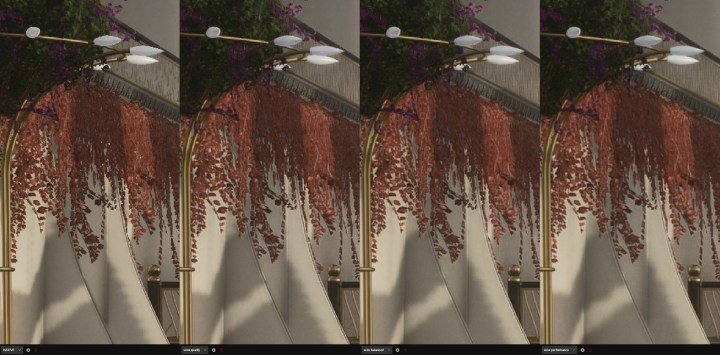

When it comes to image quality, Intel needs some work. XeSS isn’t nearly as bad as DLSS 1.0 was, but it still shows a number of artifacts, especially in the DP4a version. In Shadow of the Tomb Raider, for example, XeSS simply looks like it’s running at a lower resolution compared to DLSS at the same quality mode.

Image comparison: Shadow of the Tomb Raider, XeSS Performance mode

The native mode looks better, but it still has some problems. In Hitman 3, you can see how turning on XeSS turns the shrubs near the top of the screen into a blur regardless of the quality mode. There are some temporal artifacts as well. In the screenshot of the audience member below, you can see the eyes disappear into the stairs in XeSS’ Balanced mode.

Image comparison: Hitman 3, XeSS quality modes

XeSS isn’t a DLSS killer yet. It still needs some work when it comes to cleaning up the image after the upscaling is done on Intel’s GPUs, and the DP4a version needs an overhaul to compete with what temporal supersampling can offer right now.

I have to wonder if Intel’s approach is misguided. AMD’s FidelityFX Super Resolution (FSR) 2.0 is comparable to DLSS in performance and image quality, and it works across GPU vendors without AI. XeSS can eventually match DLSS with enough optimization, but a general-purpose temporal super-resolution feature like FSR 2.0 or the one offered in Unreal Engine 5 would’ve provided higher performance gains out of the gate.

Should you buy the Arc A770 or A750?

For the Arc A770 and A750, it all comes down to price. Performance holds up, showing a fierce competitor with the A770 and a solid value alternative with the A750, but it’s hard to say if they’re the right pick until the launch dust has settled and we have an idea where prices will end up.

With current prices, it’s hard to make an argument for the RTX 3060. You’re looking at a $50 to $100 premium over Intel’s offerings for slightly better ray tracing and DLSS. DLSS is a big plus, but XeSS looks promising, even if Intel still needs to optimize it to reach its full capabilities. The Arc A770 handily beats the RTX 3060 in performance as well, though the A750 holds only a slim lead in rasterized games and falls behind with ray tracing on.

Although the final decision is going to come down to what GPU you can find at what price, one thing’s for sure: Intel’s entry into the GPU market is sure to make a splash, and hopefully, Team Blue will be a third competitor for years to come.