For newcomers to the world of discrete graphics cards, the best hope for Intel’s Arc A770 and A750 was that they wouldn’t be terrible. Intel mostly delivered on raw power, but the two budget-focused GPUs lagged in the software department. Over the last few months, Intel has corrected course.

Through a series of driver updates, Intel has delivered close to double the performance in DirectX 9 titles compared to launch, as well as steep upgrades in certain DirectX 11 and DirectX 12 games. I caught up with Intel’s Tom Petersen and Omar Faiz to find out how Intel was able to rearchitect its drivers, and more importantly, how it’s continuing to drive software revisions in the future.

The driver of your games

Before getting into Intel’s advancements, though, we have to talk about what a driver is doing in your games in the first place. A graphics card driver sits below the Application Programming Interface (API) used by the game you’re playing, and it translates the API’s instructions into instructions the hardware can understand.

An API like DirectX takes instructions from the game and expresses them as a standardized set of commands that work across graphics cards. The driver comes next, taking those standardized commands and translating and optimizing them for a particular hardware architecture. That’s why an AMD driver won’t work with an Nvidia graphics card, and an Intel driver won’t work with an AMD one.
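To make that layering concrete, here is a toy Python model. It is a heavily simplified sketch: `ApiCommand`, the driver classes, and the opcode strings are all hypothetical, and real drivers also compile shaders, manage memory, and schedule work. The point is only that the game emits the same vendor-neutral commands no matter which driver is installed, and each driver turns them into different instructions for its own hardware.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ApiCommand:
    """A vendor-neutral command, standing in for what an API such as DirectX exposes."""
    name: str
    arg: int


class Driver:
    """Base class: a driver maps standardized commands to one architecture's instructions."""
    OPCODES: dict[str, str] = {}

    def submit(self, commands: list[ApiCommand]) -> list[str]:
        # Each vendor-neutral command becomes a vendor-specific instruction string.
        return [f"{self.OPCODES[c.name]} {c.arg}" for c in commands]


class VendorADriver(Driver):
    # Hypothetical instruction names for a made-up "Vendor A" architecture.
    OPCODES = {"bind_pipeline": "A.PIPE", "draw": "A.DRAW"}


class VendorBDriver(Driver):
    # Hypothetical instruction names for a made-up "Vendor B" architecture.
    OPCODES = {"bind_pipeline": "B.BIND", "draw": "B.EMIT"}


def game_frame(driver: Driver) -> list[str]:
    # The game only ever speaks the API's standardized commands...
    commands = [ApiCommand("bind_pipeline", 0), ApiCommand("draw", 3)]
    # ...and the installed driver decides what the hardware actually receives.
    return driver.submit(commands)


print(game_frame(VendorADriver()))  # ['A.PIPE 0', 'A.DRAW 3']
print(game_frame(VendorBDriver()))  # ['B.BIND 0', 'B.EMIT 3']
```

Swapping the driver changes every instruction the hardware sees while the game code stays identical, which is the same reason one vendor’s driver is useless on another vendor’s card.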

Intel’s problems were mainly centered on DirectX 9. It’s considered a legacy API at this point, but a large swath of games still run on DX9, including Counter-Strike: Global Offensive, Team Fortress 2, League of Legends, and Guild Wars 2.

The problem with DX9, compared to modern APIs like DX12 and Vulkan, is that it’s a high-level API. That means it’s more generalized than a modern API, placing more strain on the driver to squeeze out performance. DX12 and Vulkan are low-level APIs, giving developers more direct access to the hardware while they build a game and taking some pressure off the driver. Petersen explained that with DX12, “it’s less likely that our driver is doing anything suboptimal because there’s a more direct connection between the game developer and our platform.”

Originally, Intel used D3D9on12 for DX9, a translation layer that maps DirectX 9 calls onto DirectX 12. Petersen said he believes Intel “did the right thing at the time,” but D3D9on12 proved too inefficient. Performance was left on the table, with less powerful GPUs sometimes offering twice the performance of Intel’s graphics cards in DX9 games.

Intel essentially started from scratch, implementing native DX9 support and leveraging translation tools like DXVK, a Vulkan-based translation layer for DX9. And it worked. In Counter-Strike: Global Offensive, I measured around 190 frames per second (fps) with the launch driver and 395 fps with the latest driver, a 108% increase. Similarly, in my testing with the Arc A750, Payday 2 saw around a 45% boost going from the launch driver to the latest version.
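The 108% figure is ordinary percentage-change arithmetic: the gain divided by the starting frame rate. A small Python sketch using the approximate CS:GO numbers above (the function names are illustrative, not from any benchmarking tool) also expresses the same gain as frame time, the number of milliseconds each frame takes to render:

```python
def percent_uplift(before_fps: float, after_fps: float) -> float:
    """Percentage change going from before_fps to after_fps."""
    if before_fps <= 0:
        raise ValueError("before_fps must be positive")
    return (after_fps - before_fps) / before_fps * 100.0


def frame_time_ms(fps: float) -> float:
    """Average time per frame, in milliseconds, at a given frame rate."""
    return 1000.0 / fps


# Approximate CS:GO results: ~190 fps on the launch driver, ~395 fps on the latest.
print(round(percent_uplift(190, 395)))  # 108
print(round(frame_time_ms(190), 2))     # 5.26
print(round(frame_time_ms(395), 2))     # 2.53
```

Anything above a 100% uplift means the frame rate more than doubled, which is why a 108% gain lines up with the “close to double” DX9 improvement Intel describes.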

More on the table

DX9 was the killer for Intel’s GPUs at launch, but there are still performance optimizations on the table. Petersen made that clear: “Compared to where we are and that theoretical peak, there’s still quite a big gap.”

The new frontier isn’t DX9, though. It’s DX11. “I do think, especially for DX11 titles, there’s more headroom out there and we’re going to continue to work on it,” Petersen said. “DX12 is going to be more like a labor of love for forever because it’s a little bit more fine-grained, and it’s going to be a per-title kind of slog to make all those wonderful. But I do think there’s still an uplift ahead of us, and it’s more than you’ll typically see with a driver.”

One example is Warframe, where Intel claims upwards of a 60% boost with its latest driver compared to the launch driver. Although there isn’t a single broad fix Intel could apply to all DX11 titles, Petersen explained that DX11 is still higher-level than DX12. “While DX11 is not as thick as DX9, it’s still got quite a bit of work to be done for that optimization.”

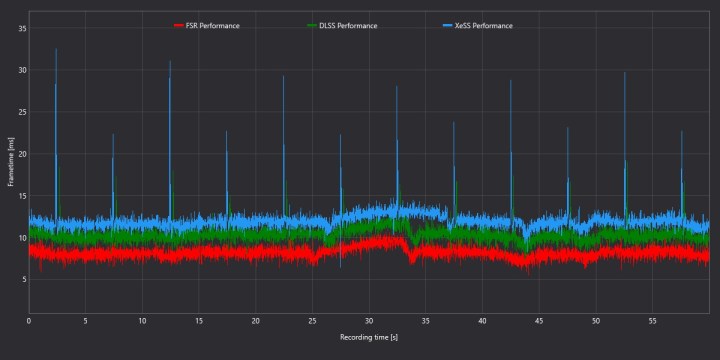

Average performance was one area of focus, but it wasn’t the only issue with Intel’s initial drivers. Petersen explained that the engineering team “fixed some of the fundamental resource allocation” issues in the driver, improving consistency by ensuring the driver doesn’t run into bottlenecks that cause big swings in frame time.

As Intel’s cards get going, the team has been releasing new drivers at a breakneck pace. I asked Petersen and Faiz if that speed would continue, and Faiz didn’t mince words: “We would like to continue that momentum.” Petersen added: “It is well understood within our organization that, you know, driver updates are what’s going to make the difference between our success and lack of success.”

Both were careful not to overpromise, which is an issue Intel has run into with its Arc GPUs in the past. But the short track record is certainly in Intel’s favor. Since launch, the cards have seen 15 new drivers (six WHQL, nine beta), including release-day optimizations for 27 new games. That beats AMD and matches Nvidia’s pace. In fact, Intel was the only one with a driver ready for Hogwarts Legacy at launch (a game that Nvidia still hasn’t released a Game Ready Driver for).

XeSS is still a work in progress

Although Intel has made big strides with its drivers, there’s still a long road ahead. One area that needs attention is XeSS, Intel’s AI-based upscaling tool that serves as an alternative to Nvidia’s Deep Learning Super Sampling (DLSS).

XeSS is a great tool, but it falls short in a couple of areas: game support and sharpness. Intel has been adding support for new games like Hogwarts Legacy and Call of Duty: Modern Warfare 2, but it’s up against the years Nvidia has spent adding DLSS to hundreds of games. Intel hopes that implementing XeSS will be easy for developers, though.

As Petersen explained, “[DLSS and XeSS] both rely on, you know, effectively certain types of data coming from the game to a separate DLL file. Same as XeSS. And we’ve kind of got the advantage of being a fast follower because, obviously, they were there first. So we can make it very easy to integrate XeSS.” This backbone is what has enabled modders to splice AMD’s FidelityFX Super Resolution into games that only support DLSS. The same is theoretically possible with XeSS.
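DLSS, XeSS, and AMD’s FSR 2 are temporal upscalers, and they take broadly similar per-frame data from the game: the rendered frame, depth, motion vectors, and camera jitter. The Python sketch below is a simplified, hypothetical model of that shared shape. `FrameInputs`, `UpscalerA`, `UpscalerB`, and `present` are made-up names rather than any vendor’s SDK, and the actual upscaling is omitted; the sketch only shows why a library expecting the same inputs can be dropped in where another was expected.

```python
from dataclasses import dataclass
from typing import Protocol


@dataclass(frozen=True)
class FrameInputs:
    """Simplified per-frame data a temporal upscaler expects from the game."""
    render_size: tuple[int, int]     # internal resolution the game renders at
    output_size: tuple[int, int]     # resolution shown on screen
    jitter: tuple[float, float]      # sub-pixel camera offset used this frame
    has_depth: bool = True           # placeholders for the real depth and
    has_motion_vectors: bool = True  # motion-vector buffers


class Upscaler(Protocol):
    """The shared shape: same inputs in, upscaled frame out."""
    name: str

    def evaluate(self, frame: FrameInputs) -> str: ...


class _TemporalUpscaler:
    """Shared input validation; the real upscaling math is omitted in this sketch."""
    name = "generic"

    def evaluate(self, frame: FrameInputs) -> str:
        if not (frame.has_depth and frame.has_motion_vectors):
            raise ValueError(f"{self.name} needs depth and motion vectors")
        (w, h), (ow, oh) = frame.render_size, frame.output_size
        return f"{self.name}: {w}x{h} -> {ow}x{oh}"


class UpscalerA(_TemporalUpscaler):
    name = "upscaler-A"  # hypothetical stand-in for one vendor's library


class UpscalerB(_TemporalUpscaler):
    name = "upscaler-B"  # hypothetical stand-in for another vendor's library


def present(upscaler: Upscaler, frame: FrameInputs) -> str:
    # The game hands identical data to whichever library is loaded, which is
    # roughly why one upscaler can be slotted in where another was expected.
    return upscaler.evaluate(frame)


frame = FrameInputs(render_size=(1280, 720), output_size=(2560, 1440), jitter=(0.25, -0.25))
print(present(UpscalerA(), frame))  # upscaler-A: 1280x720 -> 2560x1440
print(present(UpscalerB(), frame))  # upscaler-B: 1280x720 -> 2560x1440
```

In practice, the mods that swap one upscaler for another work by intercepting calls to one library and forwarding the equivalent data to another; the sketch captures only the “same inputs” idea, not that interception.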

One area I pressed on was a driver-based upscaling tool, similar to Nvidia Image Scaling or AMD’s Radeon Super Resolution. Petersen and Faiz were again careful not to promise anything, but they noted that it’s “not technically impossible.” That would fill a gap in Intel’s current lineup, but we might not see such a tool for a while (if at all).

The other area is softness. Compared to DLSS, XeSS is usually not as sharp. I assumed this was just a difference in the amount of sharpening applied, but Petersen said that’s not the case. “I think it’s a common problem, and I attribute most of the softness that you see today in certain cases as being, you know, an art style that’s not accurately reflected in the training set that we’re using for our model,” Petersen said. “And that will obviously change over time in new versions of XeSS.”

Like DLSS, XeSS uses a neural network to perform the upscaling. Nvidia has a big head start in training its model, so it could be a few years before Intel’s training data matches what Team Green has built up.

Player three in the making

Intel is the largest GPU supplier in the world through its integrated graphics, but the discrete realm is a different beast. The company has proved it has the chops to compete in the lower-end segment, especially with the new aggressive pricing of the Arc A750. But there’s still a lot more work ahead.

Leaks say Intel plans to build on this foundation with a refresh to Alchemist in late 2023 and a new generation in 2024, but that’s just a rumor for now. What’s certain is that Intel is clearly standing by its gaming GPUs, and in a time of rising GPU prices, a third player is a welcome addition to bring some much-needed competition. Let’s just hope the momentum in drivers and game support coming off the launch keeps up through several generations.

This article is part of ReSpec – an ongoing biweekly column that includes discussions, advice, and in-depth reporting on the tech behind PC gaming.