Instead, the first Optane drives are built with enterprise use cases in mind. Intel says the consumer versions will come later, but for now, the DC P4800X is bound for all work and no play. Data centers will be able to leverage the much faster storage solution to handle larger amounts of data without spending a fortune on dedicated RAM.

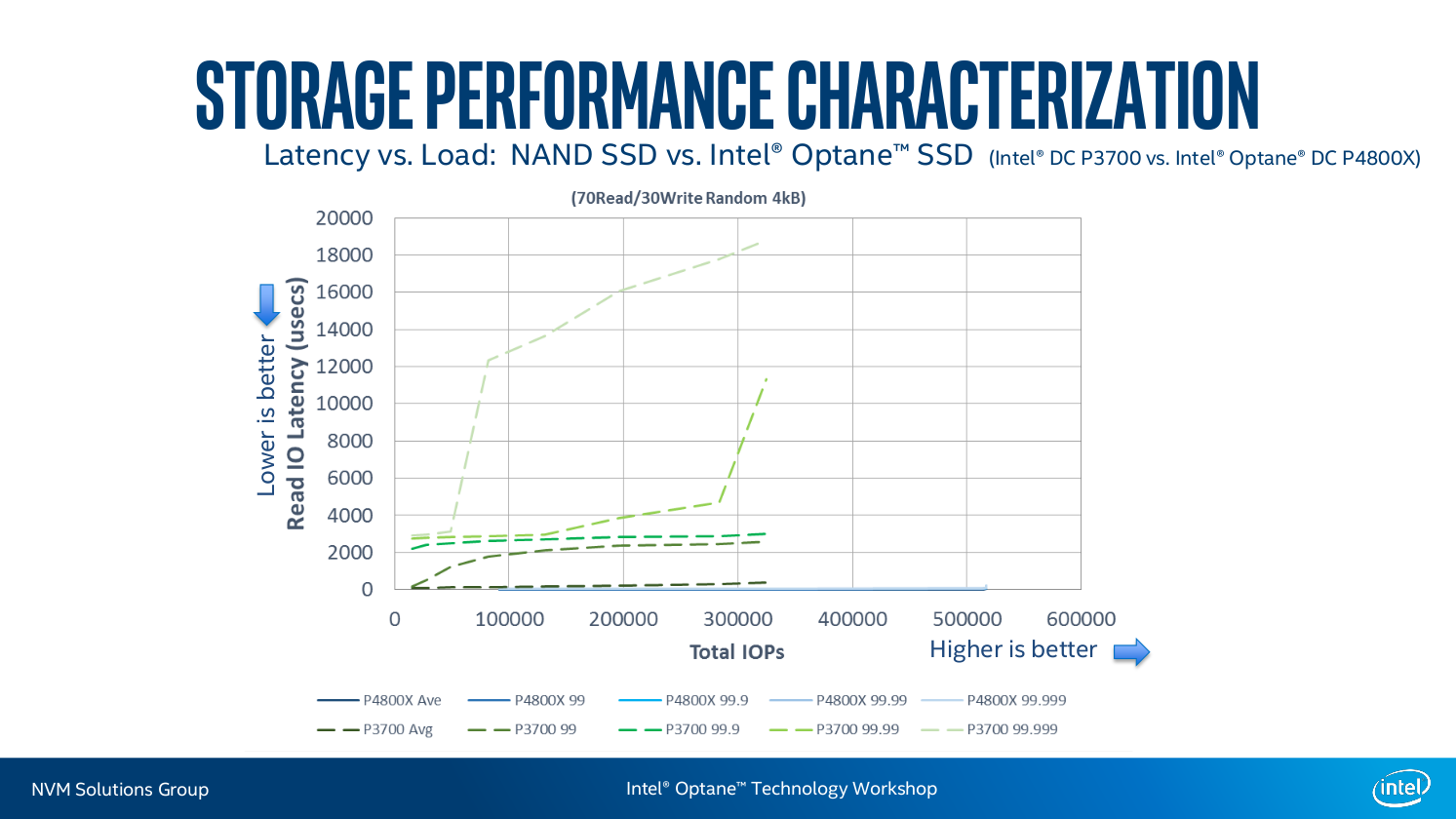

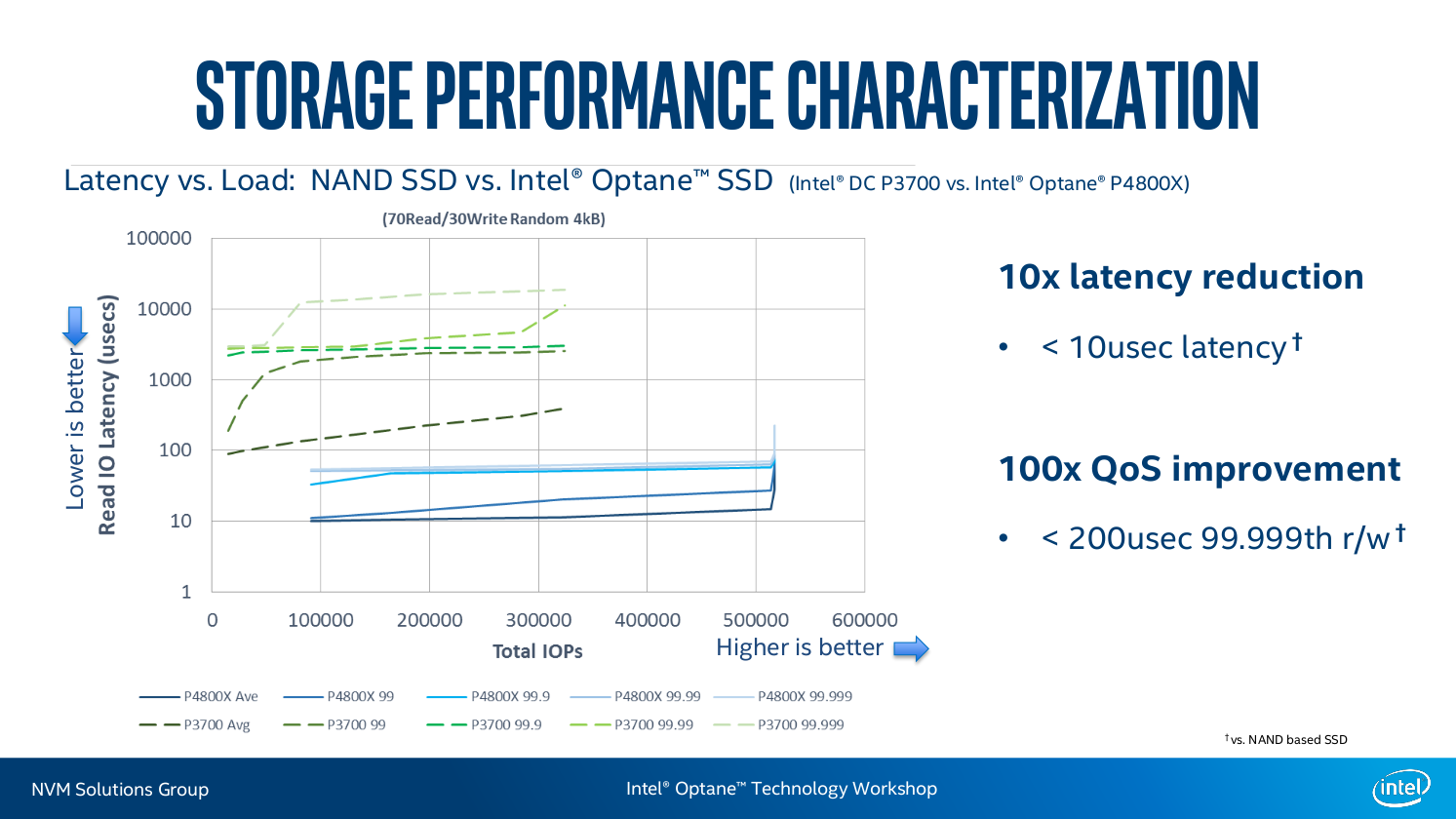

Still, the technology is worth attention. It holds the potential to dramatically outpace other SSD solutions and bring latency down to levels closer to RAM.

What is it?

In a few words, Optane sits somewhere between RAM and a high-end SSD in terms of read speed, write speed, and latency. Like SSDs, and unlike RAM, it’s non-volatile, so it retains its data when the power is cut. However, it’s capable of producing high speeds and low latency that even NVMe drives would drool over. It’s also far more durable than NAND-based SSDs, although only extensive testing can say for sure exactly how much longer it will last.

That’s thanks to a combination of Intel’s XPoint technology and a series of hardware controllers and driver optimizations that allow the drive to communicate at lightning-fast speeds over PCIe. It’s a completely different architecture and construction than 3D NAND, which powers Intel’s current enterprise SSD, the P3700.

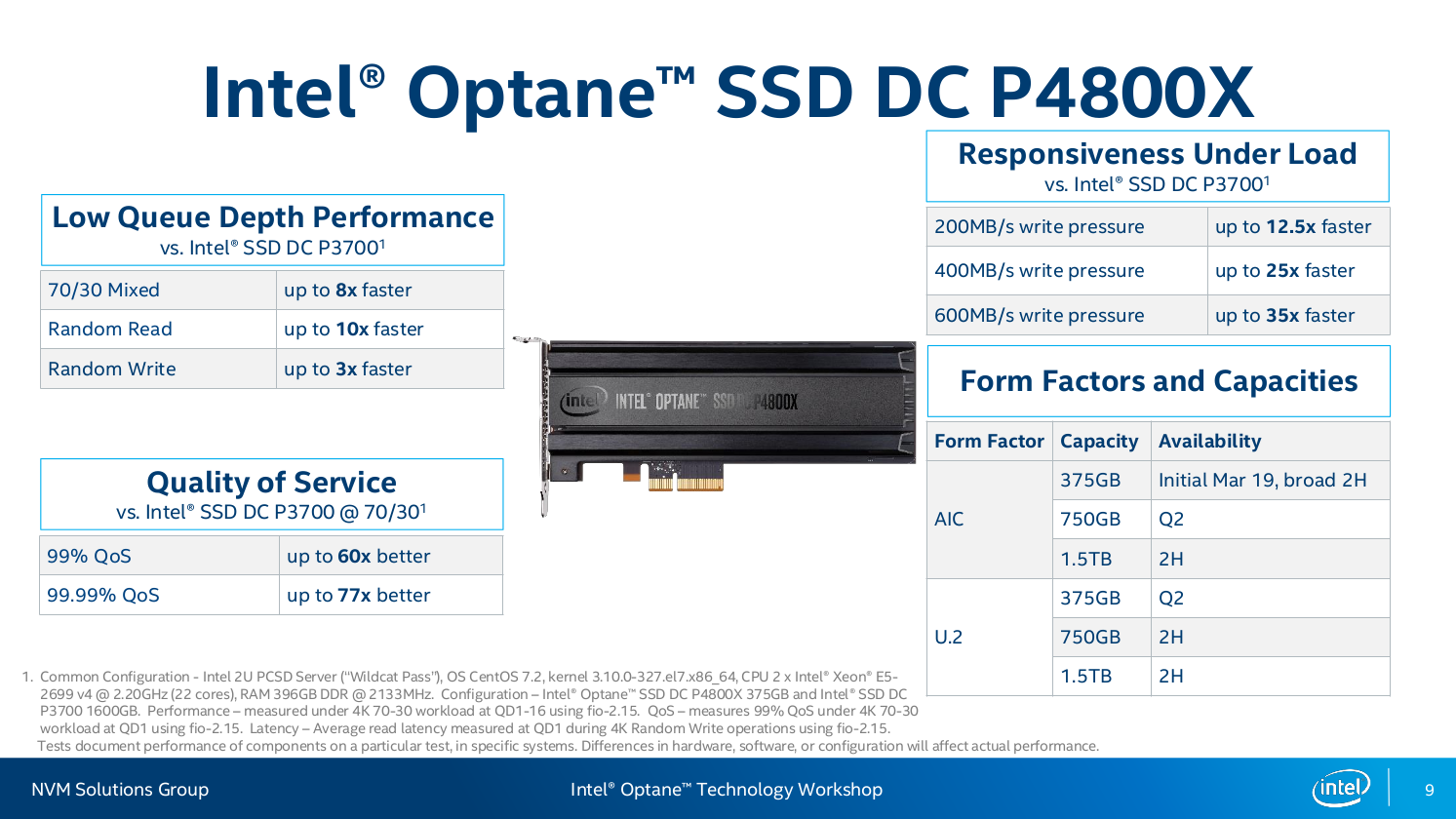

Business users and server administrators can install the DC P4800X, which is available first as a PCIe add-in card and later as a 2.5-inch U.2 drive, and then use Intel’s Rapid Storage Technology software to label the drive as memory. Intel’s data on the improvements doesn’t just show nominally increased speed. Depending on which metric you’re using, the drives often break the benchmarks entirely.

That’s a distinct change from the way data centers already leverage SSDs, and Intel is acutely aware of that fact. As such, the chip maker is asking us to change the way we think about storage and how we measure it. It all comes down to a little-discussed factor known as queue depth.

Cutting in line

Put simply, queue depth is the number of tasks currently waiting in line to read or write to the drive. On spinning disk drives, these tasks can pile up quickly, leading to a queue depth in the hundreds. During benchmarking, that means the drive is constantly running at its top speed, reading and writing as quickly as possible to push through tasks in its queue.

But according to Intel, modern SSDs aren’t actually stressed in that way. They’re fast enough to chew through their queue rather quickly, and at least in Intel’s testing, most workloads actually keep the queue depth at 32 or lower, so that’s where Optane SSDs are at their fastest. That means they fit into their role as addressable memory quite well, where the queue isn’t as stacked as it might be with a server.
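To see why low queue depths favor a low-latency drive, here is a minimal sketch based on Little's Law, which ties the number of requests in flight to throughput and per-request latency. The function name and the two latency figures are hypothetical, chosen only for illustration, and are not Intel's numbers. The model also assumes latency stays constant as the queue grows, which real drives do not guarantee.

```python
def iops_at_queue_depth(queue_depth: int, latency_us: float) -> float:
    """Little's Law: throughput = requests in flight / time per request.

    latency_us is the average time one request takes, in microseconds.
    Idealized: assumes latency does not rise as the queue gets deeper.
    """
    return queue_depth * 1_000_000 / latency_us


# Hypothetical per-request latencies in microseconds (illustrative only).
DRIVES = {"low-latency drive": 10, "NAND-style drive": 100}

for name, latency_us in DRIVES.items():
    for queue_depth in (1, 8, 32):
        iops = iops_at_queue_depth(queue_depth, latency_us)
        print(f"{name:18s} QD{queue_depth:<3d} {iops:>12,.0f} IOPS")
```

In this simplified model, the slower drive needs ten times as many requests waiting in line to match the faster drive's throughput. That is why a benchmark run at a very deep queue can hide a latency advantage that shows up clearly at the shallow queues most workloads actually produce.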

Who is it for?

But the real question a lot of you may have is why this matters. The drives are insanely expensive, and even if you managed to get Intel to ship you one (or a few dozen, as the case may be), it’s not like any personal software is built to leverage an SSD with a fraction of the latency and multiple times the speed of the fastest PCIe NVMe drives on the market.

Which means it’s a great fit for servers that need a lot of addressable memory, like RAM, without spending a lot of extra cash on 64GB and 128GB DIMMs, which are insanely expensive. It’s not quite as fast as memory, but in a lot of data center situations, the increased speed is enough to come close, depending on the workload.

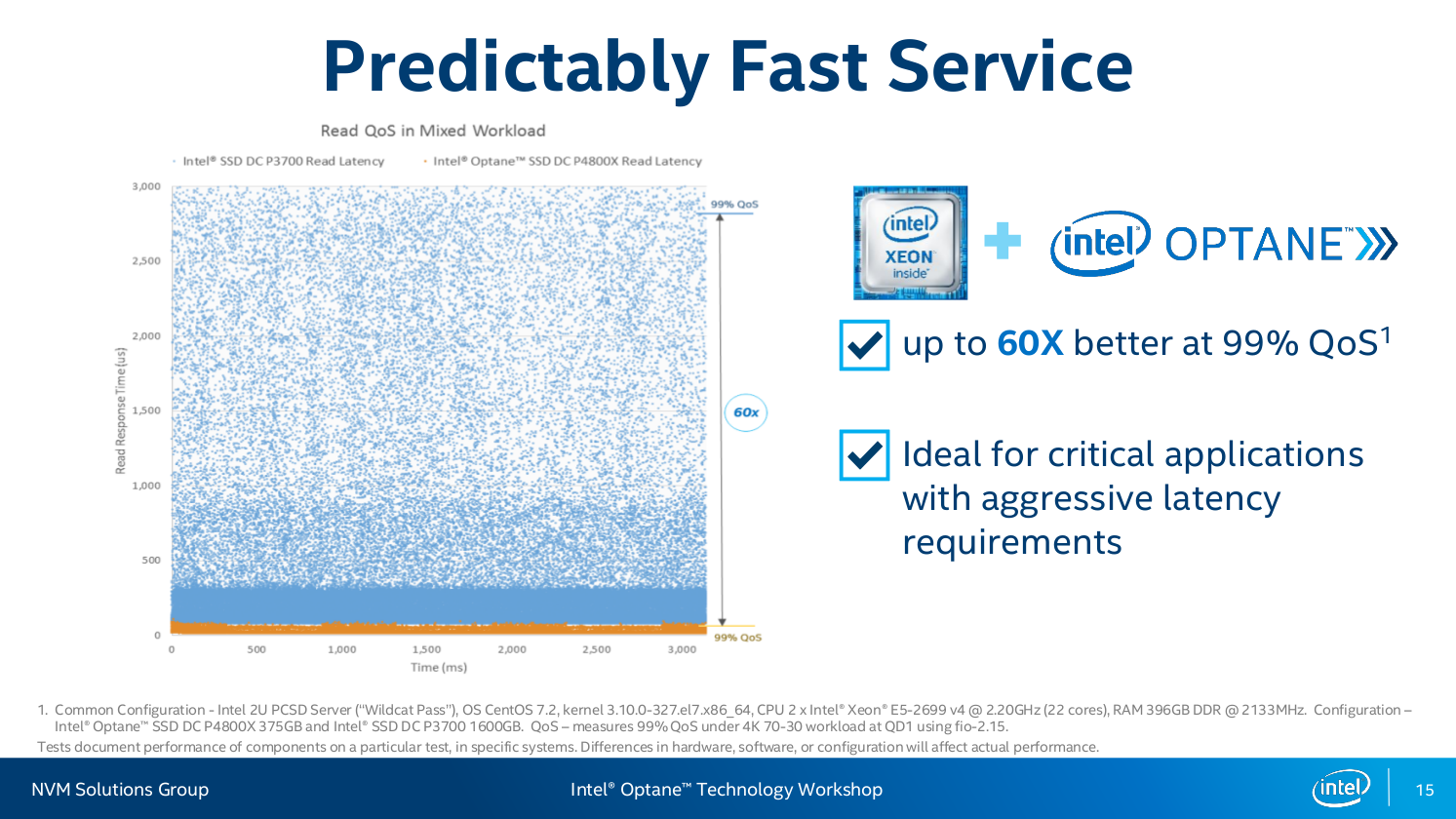

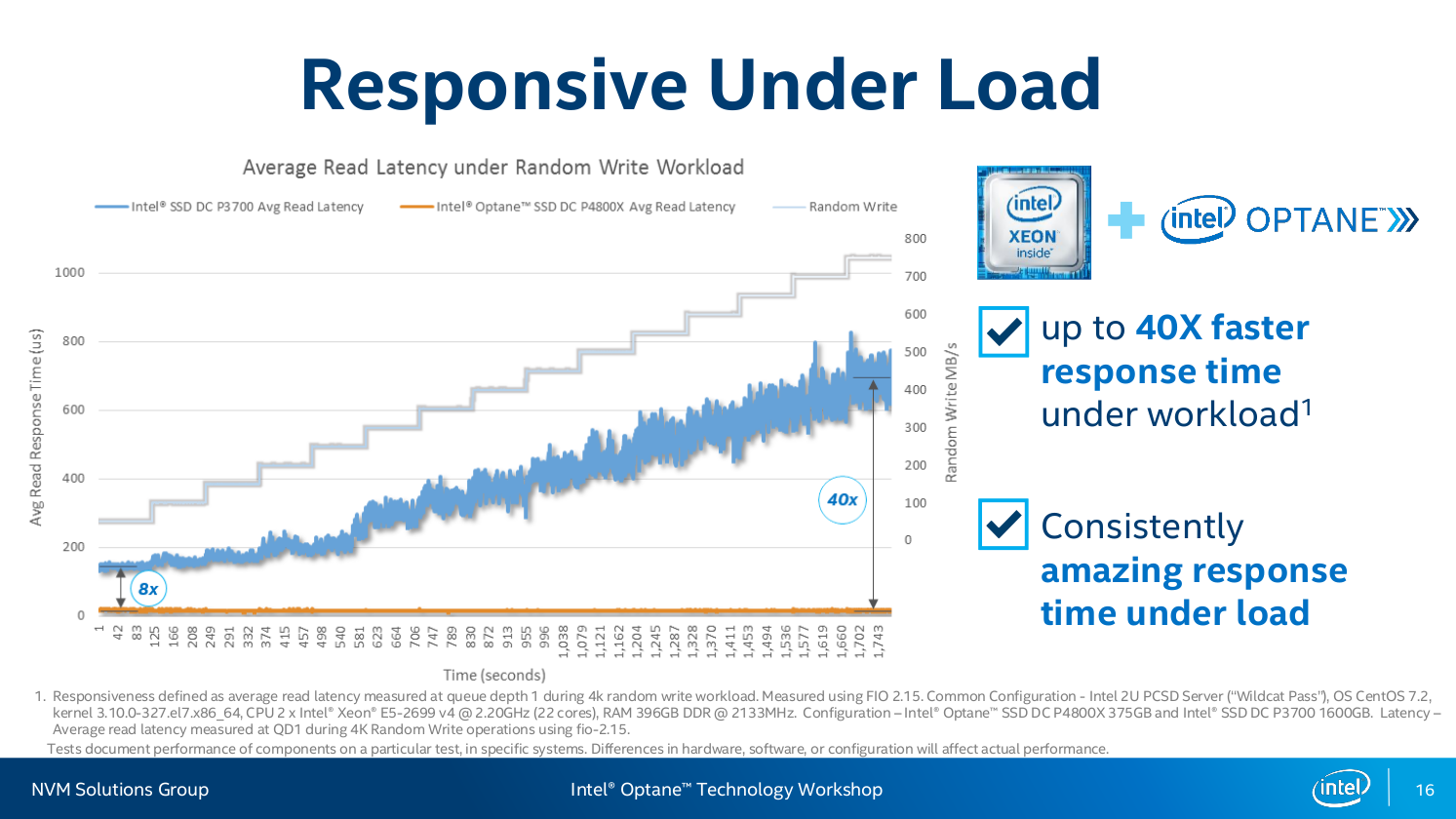

In these situations, latency becomes a major factor, and that’s another area where the DC P4800X excels. Not only is the latency much lower as stress levels rise, but it’s also more consistent, an added bonus for the people who need to keep these servers running.

It also allows server administrators to push past the typical maximum DRAM limits for Xeon processors. On a system with two Xeon chips, that works out to an effective eightfold increase in capacity, from just 3TB up to 24TB of total addressable memory.

That means enterprise users driving thousands of systems, or sending and receiving millions of calls from around the world, can reduce their dependence on both traditional SSDs and RAM, lowering costs while providing impressive performance. From universities and chat clients, to Microsoft and MySQL, major players are already gearing up for the DC P4800X release.

What does it mean for you, really?

What we’re seeing here could be the first step towards a new type of storage, and a change in the way systems are built. Major advancements in storage technology like XPoint are going to enable us to start moving away from the sort of tiered storage we’re used to seeing in systems, where lower access times demand smaller storage amounts.

Obviously, Optane isn’t going to have much impact on even PC enthusiasts right out of the gate. Its initial launch is targeting enterprise only. Even if you did decide to buy one, it would be prohibitively expensive, with the 375GB PCIe AIC model carrying a $1,520 MSRP, where a 512GB Samsung SM951 would only cost you about $300. You may also struggle to see real benefit, since the drivers will be designed with enterprise use in mind.
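For a rough sense of that price gap, the two figures quoted above can be turned into a cost per gigabyte. This is only a back-of-the-envelope sketch: the helper name is made up for illustration, and the Samsung price is the approximate one cited here, not a fixed MSRP.

```python
def price_per_gb(price_usd: float, capacity_gb: float) -> float:
    """Return the cost of one gigabyte of capacity, in US dollars."""
    return price_usd / capacity_gb


# Prices and capacities as quoted in the article.
optane = price_per_gb(1520, 375)  # 375GB DC P4800X add-in card
sm951 = price_per_gb(300, 512)    # 512GB Samsung SM951, approximate price

print(f"DC P4800X: ${optane:.2f} per GB")
print(f"SM951:     ${sm951:.2f} per GB")
print(f"Ratio:     {optane / sm951:.1f}x")
```

By that measure, the Optane drive costs roughly $4 per gigabyte against well under $1 for the Samsung, or close to seven times as much for each gigabyte of capacity.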

Still, it’s time to start getting excited for a big shift in how system builders approach memory and storage. This technology is new and, if successful, it could actually lead to a future where computers no longer have RAM at all, but instead use a single pool for both long-term and short-term memory allocation.

Before that can happen, though, Optane has to prove that it works, delivers on what it promises, and is reliable enough to last years. Enterprise should prove a good test bed for the technology. If it can pass muster there, then it should be good enough for your home desktop — once the price comes down.