Intel plans to make discrete graphics cards, and yes, that’s exciting. But so far, it’s kept a tight seal on the details. No longer.

Digital Trends has obtained parts of an internal presentation from Intel’s Data Center group that give the first real look at what Intel Xe (codenamed “Arctic Sound”) is capable of. The presentation details features that were current as of early 2019, though Intel may have changed some of its plans since then.

These details show Intel is serious about attacking Nvidia and AMD from every possible angle, and provide the best look at the GPUs so far. The company clearly intends to go big with the new line of graphics cards, most notably one with a thermal design power (TDP) of 500 watts — the most we’ve ever seen from any manufacturer.

Intel declined to comment when we reached out to a spokesperson for a response.

Intel’s Xe graphics uses “tile” chiplets, likely with 128 execution units each

Xe is Intel’s single, unifying architecture across all of its new graphics cards, and the slides provide new information on Intel’s design.

The documentation shows Intel’s Xe GPUs will use “tile” modules. Intel doesn’t call these “chiplets” in the documentation, but the company revealed in November that its Xe cards would use a multi-die system, packaged together by Foveros 3D stacking.

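The technique may be similar to the multi-die approach AMD pioneered in its Zen processors. That makes sense given whom Intel has hired: former AMD figures such as Zen architect Jim Keller and Radeon chief Raja Koduri. In graphics, this approach differs from the single, monolithic dies used in current AMD and Nvidia cards.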

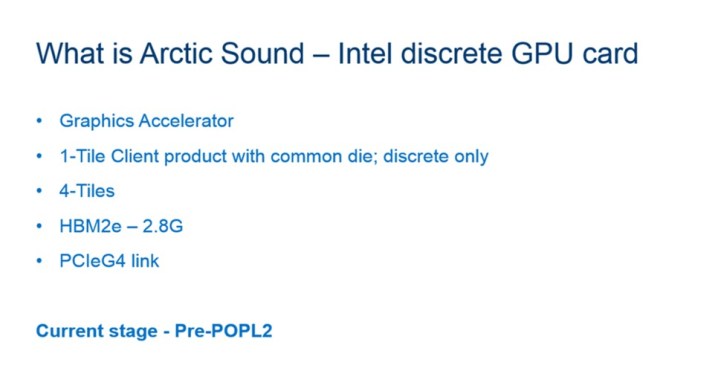

The cards listed include a one-tile GPU at the bottom of the stack, a two-tile card, and a maxed-out four-tile card.

The documentation doesn’t state how many execution units (EUs) each tile will include, but the tile counts line up with a driver leak from mid-2019 that listed three Intel GPUs and their EU counts: 128, 256, and 512. If each tile has 128 EUs, those figures correspond to one, two, and four tiles, and a 384-EU (three-tile) configuration is absent. That matches the missing three-tile configuration in the leaked slides we received.

We assume Xe will use the same basic architecture as the company’s previous Iris Plus (Gen 11) graphics, since Xe (Gen 12) is arriving just one year later. Intel’s Gen 11 GPUs contained one slice, divided into eight “sub-slices” of eight EUs each, for a total of 64.

This is the foundation for Xe, which will begin bringing multiple slices together in a single package. By the same math, a single tile would feature two of these slices (or 128 EUs). The two-tile GPU, then, would feature four slices (256 EUs), and the four-tile GPU would get eight slices (512 EUs).
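The tile arithmetic above can be sketched in a few lines of Python. This is a minimal illustration built on the article’s own assumption that each tile keeps the Gen 11 layout (eight EUs per sub-slice, eight sub-slices per slice) and holds two slices; Intel has not confirmed these figures, and the constant and function names are hypothetical.

```python
# Presumed Xe layout, carried over from Gen 11 (an assumption, not confirmed by Intel)
EUS_PER_SUBSLICE = 8
SUBSLICES_PER_SLICE = 8
SLICES_PER_TILE = 2  # hypothetical: two Gen 11-style slices per tile

# Tile configurations shown in the leaked slides
SLIDE_TILE_COUNTS = [1, 2, 4]
# EU counts listed in the mid-2019 driver leak
DRIVER_LEAK_EUS = [128, 256, 512]


def eus_for_tiles(tiles: int) -> int:
    """Return the presumed execution-unit count for a GPU with `tiles` tiles."""
    return tiles * SLICES_PER_TILE * SUBSLICES_PER_SLICE * EUS_PER_SUBSLICE


if __name__ == "__main__":
    for tiles in SLIDE_TILE_COUNTS:
        print(f"{tiles} tile(s): {eus_for_tiles(tiles)} EUs")
    # A three-tile part would land at 384 EUs, a count neither source lists
    three_tile = eus_for_tiles(3)
    print(f"3 tiles would be {three_tile} EUs; in driver leak: {three_tile in DRIVER_LEAK_EUS}")
```

Under these assumptions, the one-, two-, and four-tile configurations map exactly onto the three leaked EU counts, which is why the missing 384-EU part and the missing three-tile slide appear to be the same gap.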

Intel has also invested in a multi-die interconnect that could let these tiles work together efficiently: EMIB (Embedded Multi-Die Interconnect Bridge), perhaps in the form of the “Co-EMIB” Intel mentioned last summer. Intel debuted EMIB in its revolutionary but ill-fated Kaby Lake-G chips from 2018.

Thermal design power will range from 75 to 500 watts

Intel has at least three distinct cards in the works, with a TDP ranging from 75 watts all the way up to 500. These numbers represent the full gamut of graphics, from entry-level consumer cards all the way up to server-class data center parts.

Let’s take them one at a time, starting at the bottom. The base 75-watt to 150-watt TDP applies only to cards with a single tile (and, presumably, 128 EUs). These seem the most suitable for consumer systems, and line up with the preview we’ve seen so far of a card called the “DG1.”

At CES 2020, Intel revealed the DG1-SDV (software development vehicle), a discrete desktop graphics card. It didn’t feature an external power connector, indicating it was likely a 75-watt card, since 75 watts is the most a standard PCI-e x16 slot supplies on its own. That’s a match with the 1-tile SDV card listed first in the chart above.

It’s hard to say how much the 150-watt part might differ from the DG1-SDV. However, a 150-watt TDP would theoretically put it in league with Nvidia’s RTX 2060 (rated for 160 watts) and AMD’s RX 5600 XT (rated for 160 watts after a recent BIOS update).

Despite the flashy design of the DG1’s shroud, Intel insisted the card is for developers and software vendors only. The cards listed on the chart as “RVP” (reference validation platform) may be the products we’d expect Intel to sell. How closely the RVP and SDV cards will resemble each other is still unknown, especially if Intel is readying both consumer and server versions of these GPUs.

Beyond these options, which may correspond to consumer products, Intel appears to have two more extreme Xe graphics cards. Both draw more power than a typical home PC can provide.

First is a two-tile GPU with a 300-watt TDP. If it were sold to gamers today, it would easily exceed the power consumption of current top-tier gaming graphics cards: its TDP is 50 watts higher than that of the already power-hungry Nvidia RTX 2080 Ti.

Judging by TDP alone, this product likely fits as a competitor to either the 280-watt Titan RTX workstation GPU or the 300-watt Tesla V100, Nvidia’s older data center card. It’s most likely a workstation part fulfilling the second pillar in Intel’s strategy, labeled “high power,” which Intel defines as products made for activities such as media transcoding and analytics.


The true high-performance solution is the 4-tile, 400- to 500-watt graphics card that sits at the top of the stack. This consumes far more power than any consumer video card of the current generation, and more than current data center cards, to boot.

As a result, the 4-tile card specifies 48-volt power, which is typically provided only by server power supplies. That effectively confirms the most powerful Intel Xe won’t appear as a consumer part. The 48-volt input may be what allows Intel to reach 500 watts. While the most extreme gaming power supplies can handle a 500-watt video card, most can’t.
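One plausible reason a higher supply voltage helps, though the documents don’t say so: for a fixed power draw, current falls as voltage rises (I = P / V), and resistive loss in cables and connectors scales with the square of the current. The Python sketch below, with hypothetical helper names, simply works through that arithmetic for a 500-watt load at the common 12-volt rail versus 48 volts; it is not based on any Intel specification.

```python
def current_amps(power_watts: float, volts: float) -> float:
    """Current drawn for a given power at a given supply voltage (I = P / V)."""
    return power_watts / volts


def relative_resistive_loss(volts_from: float, volts_to: float) -> float:
    """Ratio of I^2 * R loss at volts_to versus volts_from, same power and conductor.

    Current scales as 1 / V, so I^2 * R loss scales as 1 / V^2.
    """
    return (volts_from / volts_to) ** 2


if __name__ == "__main__":
    tdp = 500.0  # top of the 4-tile card's stated 400- to 500-watt range
    for volts in (12.0, 48.0):
        print(f"{volts:.0f} V: {current_amps(tdp, volts):.1f} A")
    # Quadrupling the voltage cuts current to a quarter and resistive loss to a sixteenth
    print("Loss at 48 V relative to 12 V:", relative_resistive_loss(12.0, 48.0))
```

At 12 volts, a 500-watt card would pull roughly 42 amps, versus roughly 10 amps at 48 volts, which is one reason data center hardware favors higher-voltage power delivery.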

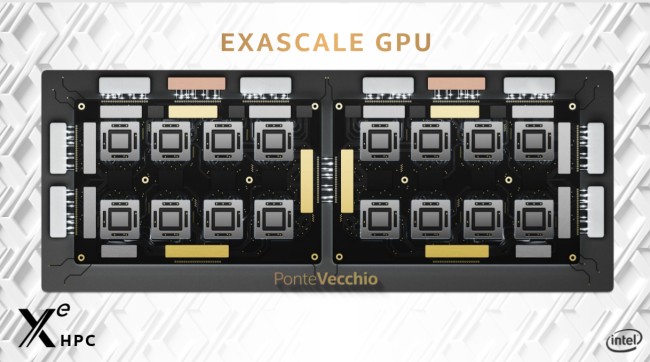

In November 2019, Intel announced “Ponte Vecchio,” which the company called its “first exascale GPU” for data centers. It is a 7nm card that uses a number of chiplet-connecting technologies to scale up to that level of performance.

It certainly seems like a fit for this 4-tile, 500-watt card, though Intel says Ponte Vecchio isn’t due out until 2021. It was also reported at the time that Ponte Vecchio would use a Compute Express Link (CXL) interface over a PCI-e 5.0 connection and an eight-chiplet Foveros package.

Xe uses HBM2e memory and supports PCI-e 4.0

Rumors have circulated that Intel would use expensive high-bandwidth memory (HBM) rather than more conventional GDDR5 or GDDR6. According to our documentation, the rumors are true. The last major graphics card to use HBM2 was AMD’s Radeon VII; subsequent Radeon cards have moved to GDDR6, as Nvidia’s GPUs have.

The documentation specifies that Xe will use HBM2e, which is the latest evolution of the technology. It lines up well with an announcement from SK Hynix and Samsung that HBM2e parts would launch in 2020. The documents also detail how the memory will be engineered, attached directly to the GPU package and using “3D RAM dies stacked up on each other.”

Though the documents don’t mention it, Xe will likely use Foveros 3D stacking both to connect multiple dies and to bring memory closer to the die.

The least surprising thing confirmed about Intel Xe is PCI-e 4.0 support. AMD’s Radeon cards from 2019 all support this latest generation of PCI-e, and we expect Nvidia to follow suit in 2020.

GPUs will target every segment

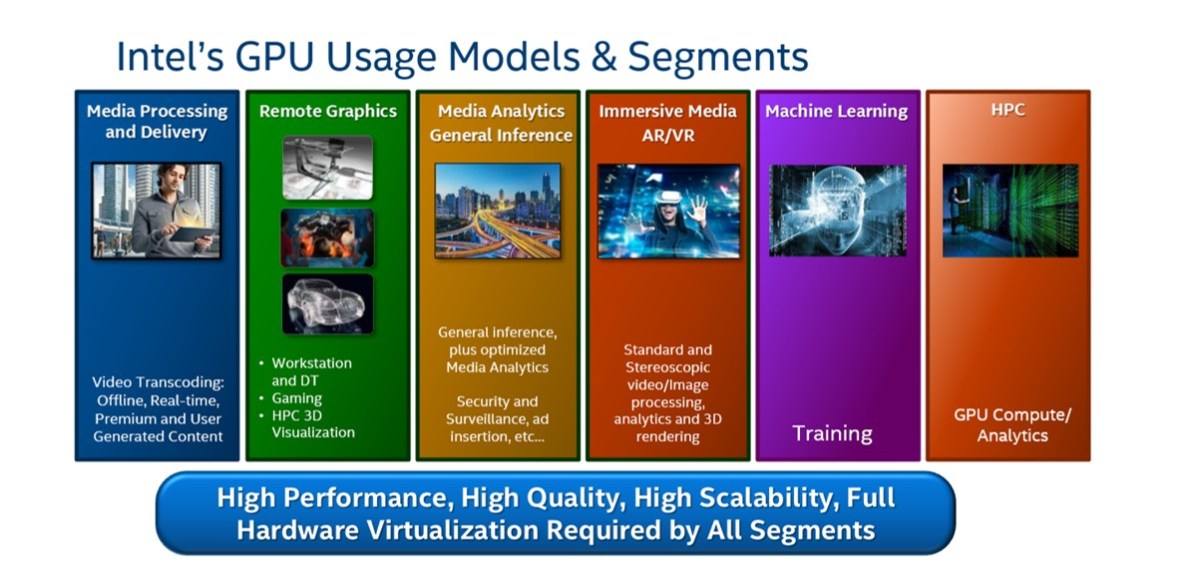

Intel has created a single architecture that scales from its integrated graphics for thin laptops up to high-performance computing made for data engineering and machine learning. That’s always been Intel’s messaging, and the documentation we’ve received confirms that.

Discrete cards for gaming are only a small part of the product stack. The slides show Intel plans for numerous uses including media processing and delivery, remote graphics (gaming), media analytics, immersive AR/VR, machine learning, and high-performance computing.

Where does that leave us?

It’s too early to say whether Intel’s ambitious dive into discrete graphics cards will disrupt AMD and Nvidia. We likely won’t hear more official details about Xe until Computex 2020.

Still, it’s clear Intel isn’t limping into the launch of its first discrete graphics cards. The company will compete with Nvidia and AMD in all key markets: entry-level, midrange, and HPC.

If it can establish a foothold in even one of these three areas, Nvidia and AMD will have a strong new competitor. A third option aside from the current duopoly should mean better choices at more aggressive prices. Who doesn’t want that?