Thanks to ChatGPT, natural language AI has taken the world by storm. But so far, it’s felt boxed in. With these chatbots, everything happens in one window, with one search bar to type into.

We’ve always known these large language models could do far more, though, and it was only a matter of time until that potential was unlocked. Microsoft has just announced Copilot, its own integration of ChatGPT into all its Microsoft 365 apps, including Word, PowerPoint, Outlook, Teams, and more. And finally, we’re seeing the way generative AI is going to be used more commonly in the future — and it’s not necessarily as a straightforward chatbot.

Bringing natural language into apps

Ever since the launch of the ChatGPT API, I’ve been excited to see how developers would take the power of a large language model and integrate it into actual apps that people use. After all, as fun and exciting as ChatGPT or Bing Chat are, they only produce text — and you’re left copying and pasting from there.

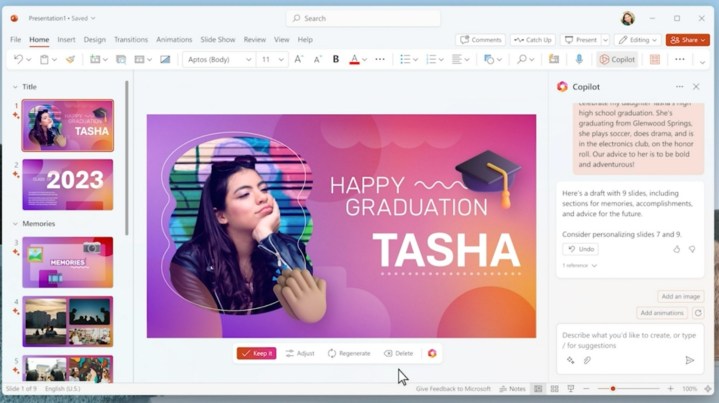

But Microsoft 365 Copilot shows how powerful and useful generative AI can be. As seen in the demos, Copilot has been merged directly into all these apps and can be used for all sorts of interesting things. In PowerPoint, it can generate entire slideshows based on just a prompt. As the Microsoft representative mentioned, Copilot is probably going to make a much fancier version of your kid’s graduation slideshow than you ever would. The same is true in a more complicated application like Excel, where Copilot can easily pull info from an unstructured data set and present it in a chart for you.

Of course, Copilot gives lots of options for tweaking things or choosing from different styles, something ChatGPT has always been good at. You can even ask Copilot to create a slideshow in the style of a different project you’ve made, or to use information from a different application like Word. It’s pretty neat, and it’s all tied into Microsoft Graph, meaning your business profile and info from across the ecosystem are automatically pulled in to give you contextual results.

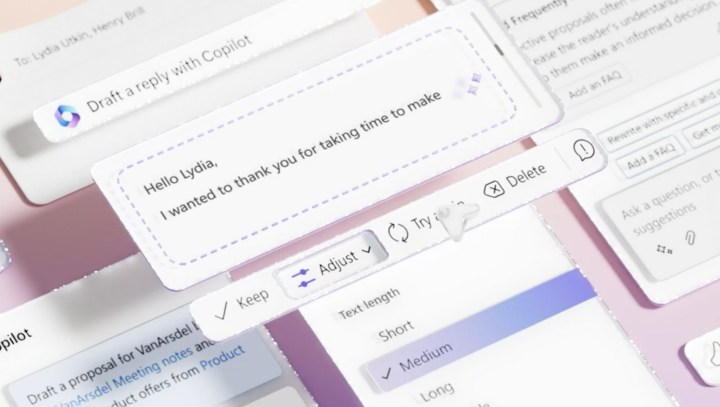

It’s a similar story with Outlook, where Copilot can draft emails for you, or in Word, where it can create a document based on some rough notes. It’s not exactly groundbreaking, but it is useful and convenient. And the examples that seem the most practical do more than just offer a blank search box, which looks like it’ll be key to making AI more accessible.

Making AI accessible

In many of these examples, Copilot isn’t just offering you a ChatGPT input in the sidebar. It’s more than that, and that means relying more on suggested prompts, simpler ways of interacting, and even presets. When Word generates some text, it’s going to ask you what you think of it, prompting you to tweak things further. When you ask PowerPoint to make a slideshow for you, it offers you different style options that will augment the output. It’s not unlike what Microsoft already added to Bing Chat.

And this is all very, very good, especially when those prompts and presets are tailor-made for the type of task you’re using AI for. I’m not saying chatbots are going to go away. I think there will always be a space for raw, unfiltered exploration of what AI can do. And the true creative power of generative AI will always hinge on the ability to say anything in whatever words you choose.

But as AI shows up in more mainstream and accessible applications, developers need to find ways to guide the conversation, and that’s exactly what Copilot has started to do. It’s really exciting to imagine how developers will take this technology and run with it. It should be enough to make even the most critical AI naysayers pay attention.