I’m sorry to say it, but I’d be lying if I said otherwise. NPUs are useless. Maybe that comes off as harsh, but it doesn’t come from a place of cynicism. After testing them myself, I’m genuinely convinced that there’s not a compelling use for NPUs right now.

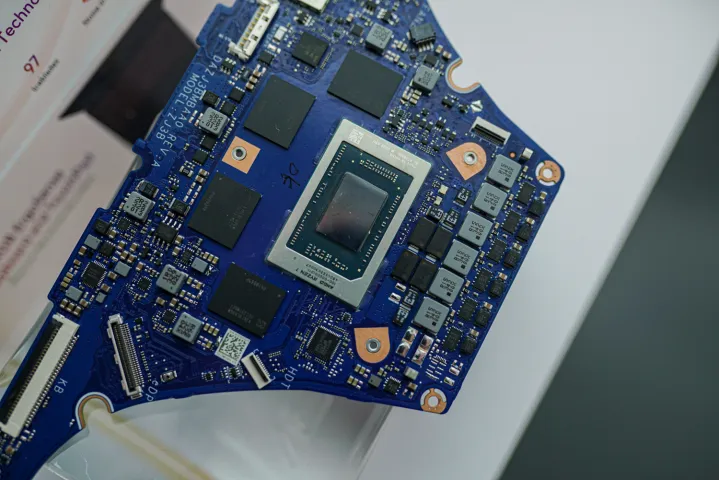

When you go to buy your next laptop, there’s a good chance it will have a Neural Processing Unit (NPU) inside it, and AMD and Intel have been making a big fuss about how these NPUs will drive the future of the AI PC. I don’t doubt that we’ll get there eventually, but in terms of what you can do with one today, an NPU really isn’t as useful as you might think.

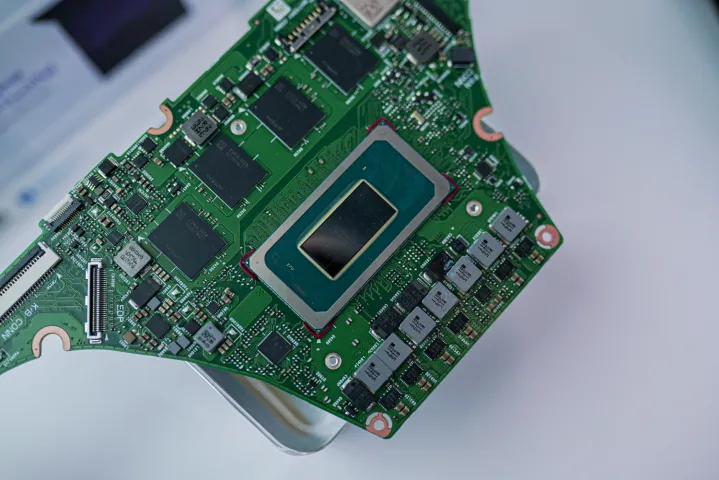

When talking about Meteor Lake at CES 2024, Intel explained that it uses the various processors on Meteor Lake chips for AI work. It doesn’t all fall on the NPU. In fact, the most demanding AI workloads are offloaded to the integrated Arc GPU. The NPU is mainly there as a low-power option for steady, low-intensity AI workloads like background blur.

Your GPU steps in for anything more intense. For instance, Intel showed off a demo in Audacity where AI could separate the audio tracks from a single stereo file, and even transcribe the lyrics. It also showed AI at work in Unreal Engine’s MetaHuman, transforming a video recording into an animation of a realistic game character. Both ran on the GPU.

These are some great use cases for AI. They just don’t need the NPU. The dedicated AI processor isn’t nearly as powerful as the GPU for these bursty AI workloads.
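To make that split concrete, here is a minimal sketch of the division of labor as Intel described it. This is not Intel's scheduler or any real API. The `Workload` fields and the `pick_device` rule are hypothetical names invented purely for illustration, and real software weighs far more than two flags.

```python
from dataclasses import dataclass
from enum import Enum


class Device(Enum):
    NPU = "npu"
    GPU = "gpu"


@dataclass(frozen=True)
class Workload:
    name: str
    sustained: bool  # runs continuously in the background
    demanding: bool  # needs a lot of compute in bursts


def pick_device(workload: Workload) -> Device:
    """Hypothetical rule of thumb: steady, light work goes to the NPU,
    everything else goes to the GPU."""
    if workload.sustained and not workload.demanding:
        return Device.NPU
    return Device.GPU


# The three examples mentioned in the article, classified by assumption.
workloads = [
    Workload("background blur", sustained=True, demanding=False),
    Workload("Audacity track separation", sustained=False, demanding=True),
    Workload("MetaHuman animation", sustained=False, demanding=True),
]

for w in workloads:
    print(f"{w.name}: {pick_device(w).value}")
```

Under those assumptions, only background blur lands on the NPU, which is the point: the headline demos all fall on the GPU side of the line.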

The main selling point, according to Intel, is efficiency: the NPU should help your battery life when you run those steady, low-intensity AI workloads like background blur on it rather than on the GPU. I tested that on an MSI Studio 16 running one of Intel’s new Meteor Lake CPUs, and the power savings aren’t as big as you might expect.

Over 30 minutes, power draw averaged 18.9W with the workload on the GPU and 17.6W with it on the NPU. Interestingly, the GPU run spiked initially, going up to somewhere around 38W of total power for the system on a chip, before slowly creeping back down. The NPU run, on the other hand, started low and slowly climbed as time went on, eventually settling between 16W and 17W.

I need to point out that this is total package power. In other words, it’s the power of the entire chip, not just the GPU or NPU. The NPU is more efficient, but I don’t know how much that efficiency matters in these low-intensity AI workloads. It’s probably not saving you much battery life in real-world use.
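For a rough sense of scale, the two averages can be turned into energy used over the 30-minute run. The sketch below uses only the 18.9W and 17.6W figures from the test. The battery capacity is a made-up placeholder, not the test laptop's spec, and the runtime estimate pretends the chip is the only thing drawing power, which ignores the display and everything else, so treat it as an upper bound on the benefit rather than a prediction.

```python
# Average package power from the 30-minute test (watts).
GPU_AVG_W = 18.9
NPU_AVG_W = 17.6
TEST_HOURS = 0.5

# Hypothetical battery capacity in watt-hours; NOT taken from the test laptop.
ASSUMED_BATTERY_WH = 80.0


def energy_wh(avg_watts: float, hours: float) -> float:
    """Energy consumed at a given average power over a period, in watt-hours."""
    return avg_watts * hours


def runtime_hours(battery_wh: float, avg_watts: float) -> float:
    """Idealized runtime if the package were the only load on the battery."""
    return battery_wh / avg_watts


saved_w = GPU_AVG_W - NPU_AVG_W
saved_pct = saved_w / GPU_AVG_W * 100
saved_wh = energy_wh(GPU_AVG_W, TEST_HOURS) - energy_wh(NPU_AVG_W, TEST_HOURS)
extra_minutes = 60 * (
    runtime_hours(ASSUMED_BATTERY_WH, NPU_AVG_W)
    - runtime_hours(ASSUMED_BATTERY_WH, GPU_AVG_W)
)

print(f"Average power saved: {saved_w:.1f} W ({saved_pct:.1f}%)")
print(f"Energy saved over the test: {saved_wh:.2f} Wh")
print(f"Idealized extra runtime on the assumed battery: {extra_minutes:.0f} min")
```

The gap works out to 1.3W, or about 7 percent of package power, and well under a single watt-hour across the half-hour test.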

I’ll have to wait to test battery life until I have more time with one of these Meteor Lake laptops, but I don’t expect it to make a huge difference. Even the best Windows laptops have pretty mediocre battery life compared to the competition from Apple, and I doubt an NPU is enough to change that dynamic.

That shouldn’t distract from the exciting AI apps we’re starting to see on PCs. From Audacity to the Adobe suite to Stable Diffusion in GIMP, there are a ton of ways you can now leverage AI. Most of those applications are either handled in the cloud or by your GPU, however, and the dedicated NPU isn’t doing much outside of background blur.

As NPUs become more powerful and these AI apps more efficient, I have no doubt that NPUs will find their place, and it makes sense that AMD and Intel are laying the groundwork for that now. As it stands, however, the big push on AI performance is largely centered on your GPU, not the efficient AI processor handling your video calls.