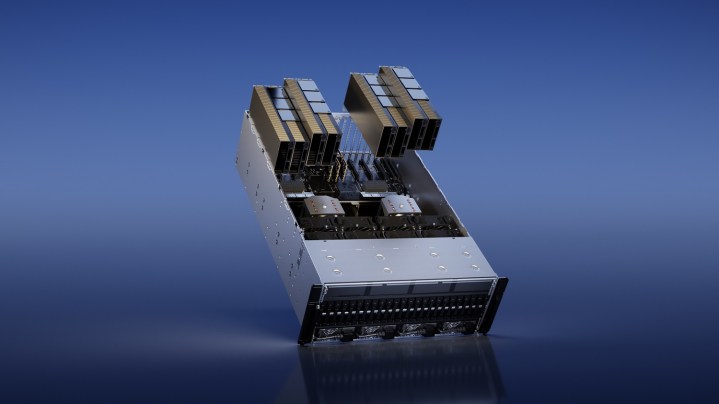

Nvidia’s semi-annual GPU Technology Conference (GTC) usually focuses on advancements in AI, but this year, Nvidia is responding to the massive rise of ChatGPT with a slate of new GPUs. Chief among them is the H100 NVL, which stitches two of Nvidia’s H100 GPUs together to deploy Large Language Models (LLM) like ChatGPT.

The H100 isn’t a new GPU. Nvidia announced it a year ago at GTC, sporting its Hopper architecture and promising to speed up AI inference in a variety of tasks. The new NVL model with its massive 94GB of memory is said to work best when deploying LLMs at scale, offering up to 12 times faster inference compared to last-gen’s A100.

These GPUs are at the heart of models like ChatGPT. Nvidia and Microsoft recently revealed that thousands of A100 GPUs were used to train ChatGPT, which is a project that’s been more than five years in the making.

The H100 NVL works by combining two H100 GPUs over Nvidia high bandwidth NVLink interconnect. This is already possible with current H100 GPUs — in fact, you can connect up to 256 H100s together through NVLink — but this dedicated unit is built for smaller deployments.

This is a product built for businesses more than anything, so don’t expect to see the H100 NVL pop up on the shelf at your local Micro Center. However, Nvidia says enterprise customers can expect to see it around the second half of the year.

In addition to the H100 NVL, Nvidia also announced the L4 GPU, which is specifically built to power AI-generated videos. Nvidia says it’s 120 times more powerful for AI-generated videos than a CPU, and offers 99% better energy efficiency. In addition to generative AI video, Nvidia says the GPU sports video decoding and transcoding capabilities and can be leveraged for augmented reality.

Nvidia says Google Cloud is among the first to integrate the L4. Google plans on offering L4 instances to customers through its Vertex AI platform later today. Nvidia said the GPU will be available from partners later, including Lenovo, Dell, Asus, HP, Gigabyte, and HP, among others.