The face of computing is changing, and a lot of it has to do with GPUs. Developers are finding new and exciting ways to leverage these chips’ immense and unique power, and it has the community spilling over with ideas, innovations, and new tech.

Deep learning

Deep learning and cognitive computing are definitely the hot topics at GTC this time around. Their presence has been looming for the past couple of years, but more as a proof of concept. This year, researchers were ready to show off the work these systems have been doing.

One of the most striking demos, from Facebook’s Artificial Intelligence Research lab, made sure everyone knows just how powerful cognitive computing has become. When fed data sets, the algorithms are able to turn them into useful insight, and even recreate the important pieces of information as they identify them.

But the crucial part of the equation comes in when we discuss how to teach these systems what to look for. Early attempts focused on telling a machine what to look for and what its goals are, but now the conversation revolves around how these machines can teach themselves.

An untrained deep learning system is simply set loose on a humongous amount of data, and then researchers watch to see what it looks for. Whether that’s a swath of Renaissance paintings, or videos of people engaged in a variety of sports and games, we’re starting to let the computer decide which factors are important, and that’s leading to greater accuracy and more versatile algorithms.

It’s also leading to systems that can complete more and more advanced tasks, and at rates we never could have imagined before we let the machines take over.

Self-driving cars

Strangely, Nvidia has positioned itself as a leading figure in the race to create self-driving cars. As you might imagine, GPUs are well suited for this kind of work, which requires juggling dozens of elements, and doing so quickly. Nvidia’s hardware is perfect for heavily parallelized workloads, and the company is taking full advantage of its strong position in the marketplace, as well as the connections it’s built over the years, to build a platform for self-driving cars that will power the fully autonomous RoboRace.

Nvidia even showed off its own self-driving car concept, lovingly known as BB8. The demonstration video showed a car that, at first, struggled to even stay on the road. It ran over cones, drove into the dirt, and didn’t stop when it should’ve. With just a few months of training and learning, the car drove perfectly, able to switch between driving surfaces smoothly, and adjust to unusual situations, like roads with no central divider.

At the heart of that car is Nvidia’s Drive PX2 chip. Specifically designed for self-driving cars, the chip supports up to 12 high-definition cameras, and leverages Nvidia’s GPU tech for instant responsiveness and sensor management.

Virtual reality for work and play

That’s not to say the GPU community is all work and no play, and virtual reality is one of the key areas where the gaming world finds its way back in. Gaming is not the sole focus of VR anymore, though. From architecture to car design, there are many ways to make virtual reality a tool instead of a toy.

IRay, in particular, is a massive step forward for VR, as it brings Nvidia’s highly detailed and accurate lighting into the virtual realm. IRay is already used for architectural projects and automotive designs, where light refraction doesn’t just have to look nice; it has to be pixel perfect.

Nvidia’s work is also leading to new hardware in other areas. During a presentation on computational displays, Nvidia vice president of graphics research David Luebke showed off a variety of new display types that might make a good fit for future VR and AR displays.

Among them were see-through displays with precision pinholes, LED projectors, and sets of micro-lenses. These new display technologies provide advanced optical features, like highly adjustable focal points, see-through capabilities, and sky-high refresh rates. One of the displays even allows developers to program eyeglass prescriptions into the lenses themselves.

Not so far away

We’ve come a long way on these advanced computing topics in just a few years, but most researchers agree that we’re going to move a lot faster in the next few. These innovations are heralding the dawn of the age of AI, and you can expect to see a lot of the software currently in development enhance everything from online shopping to social media, and yes, even gaming.

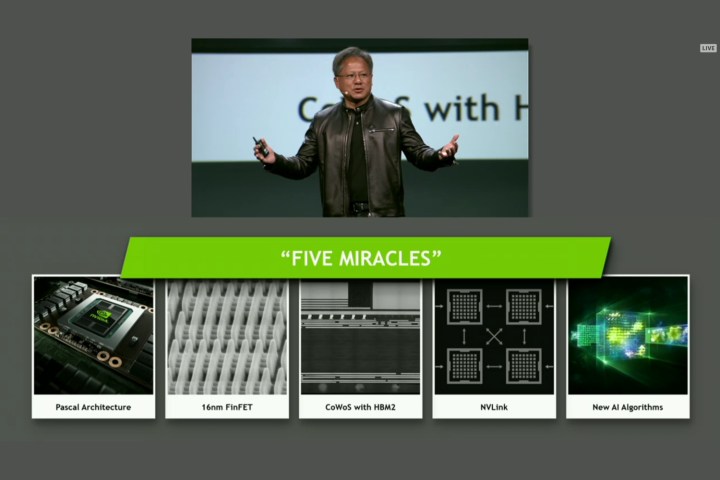

And the hardware is starting to get there, too. Nvidia showed off high-end Tesla offerings that, while insanely expensive and power hungry, have made leaps and bounds in terms of efficiency over the last generation of hardware.

With all of this new tech, we’re starting to move away from asking how to reach tech milestones, and starting to ask when.