Nvidia is taking some notes from the enthusiast PC building crowd in an effort to reduce the carbon footprint of data centers. The company announced two new liquid-cooled GPUs during its Computex 2022 keynote, but they won’t be making their way into your next gaming PC.

Instead, the H100 (announced at GTC earlier this year) and A100 GPUs will ship as part of HGX server racks toward the end of the year. Liquid cooling isn’t new for the world of supercomputers, but mainstream data center servers haven’t traditionally been able to access this efficient cooling method (not without trying to jerry-rig a gaming GPU into a server, that is).

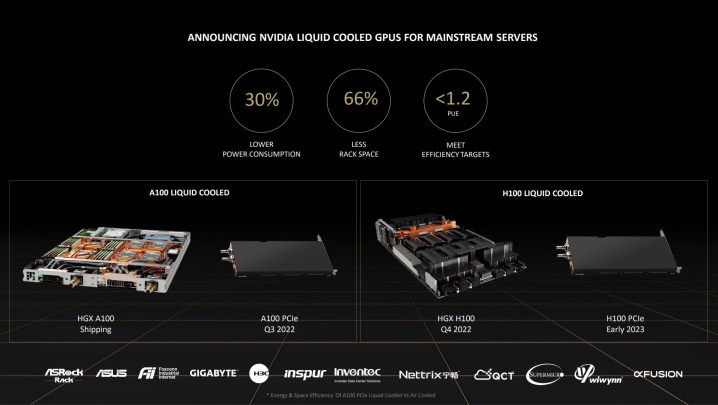

In addition to HGX server racks, Nvidia will offer the liquid-cooled versions of the H100 and A100 as slot-in PCIe cards. The A100 is coming in the second half of 2022, and the H100 is coming in early 2023. Nvidia says “at least a dozen” system builders will have these GPUs available by the end of the year, including options from Asus, ASRock, and Gigabyte.

Data centers account for around 1% of the world’s total electricity usage, and nearly half of that electricity is spent solely on cooling everything in the data center. Compared with traditional air cooling, Nvidia says its new liquid-cooled cards can reduce power consumption by around 30% while reducing rack space by 66%.

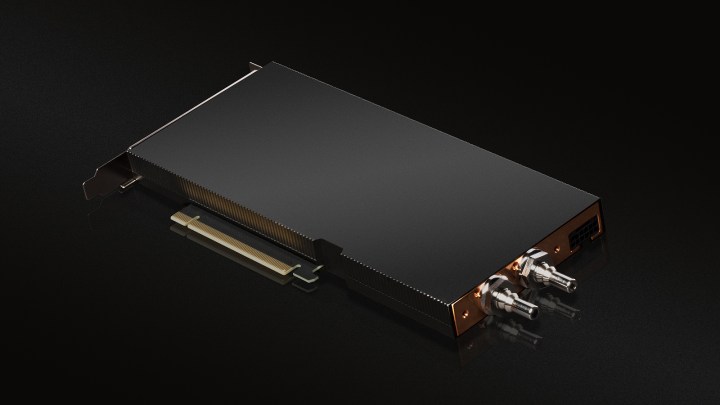

Instead of an all-in-one system like you’d find on a liquid-cooled gaming GPU, the A100 and H100 use a direct liquid connection to the processing unit itself. Everything but the feed lines is hidden in the GPU enclosure, which itself only takes up one PCIe slot (as opposed to two for the air-cooled versions).

Data centers use power usage effectiveness (PUE) to gauge energy efficiency: it is the ratio of the total power a facility draws to the power its computing equipment actually uses. With an air-cooled data center, Equinix had a PUE of about 1.6. Liquid cooling with Nvidia’s new GPUs brought that down to 1.15, which is remarkably close to the ideal PUE of 1.0 that data centers aim for.
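To make the PUE figures concrete, here is a minimal Python sketch of the ratio and what the two quoted values imply. The function names and the 1,000 kW IT load are hypothetical, chosen only for illustration; the 1.6 and 1.15 values are the ones cited above.

```python
def pue(total_facility_kw: float, it_equipment_kw: float) -> float:
    """Power usage effectiveness: total facility power over IT power."""
    if it_equipment_kw <= 0:
        raise ValueError("IT equipment power must be positive")
    return total_facility_kw / it_equipment_kw


def overhead_share(pue_value: float) -> float:
    """Fraction of total facility power that never reaches the IT equipment."""
    return 1 - 1 / pue_value


def total_power_saving(pue_before: float, pue_after: float) -> float:
    """Relative drop in total facility power for the same IT load."""
    return 1 - pue_after / pue_before


if __name__ == "__main__":
    # Hypothetical facility with 1,000 kW of IT load (assumed, not from the article).
    it_load_kw = 1000.0
    for label, value in (("air-cooled", 1.6), ("liquid-cooled", 1.15)):
        total_kw = it_load_kw * value
        print(f"{label}: PUE {pue(total_kw, it_load_kw):.2f}, "
              f"total {total_kw:.0f} kW, overhead {overhead_share(value):.1%}")
    print(f"total power saving: {total_power_saving(1.6, 1.15):.1%}")
```

Under these assumptions, a PUE of 1.6 means 37.5% of the facility’s power goes to cooling and other overhead, while 1.15 cuts that to about 13%. For the same computing load, total power draw falls by roughly 28%, which is in the same range as the roughly 30% reduction Nvidia quotes, though the article does not say how Nvidia arrived at its figure.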

In addition to better energy efficiency, Nvidia says liquid cooling helps conserve water. The company says millions of gallons of water are evaporated in data centers each year to keep air-cooled systems operating. Liquid cooling allows that water to recirculate, turning “a waste into an asset,” according to Zac Smith, head of edge infrastructure at Equinix.

Although these cards won’t show up in the massive data centers run by Google, Microsoft, and Amazon (which are likely using liquid cooling already), that doesn’t mean they won’t have an impact. Banks, medical institutions, and data center providers like Equinix make up a large portion of the data centers around today, and they could all benefit from liquid-cooled GPUs.

Nvidia also says this is just the start of a journey to carbon-neutral data centers. In a press release, Nvidia senior product marketing manager Joe Delaere wrote that the company plans “to support liquid cooling in our high-performance data center GPUs and our Nvidia HGX platforms for the foreseeable future.”