Called GDDR6, the new memory is the latest generation of GDDR, short for Graphics Double Data Rate, a type of Synchronous Dynamic Random-Access Memory (SDRAM) designed specifically for graphics processors. Because of this, you'll mainly see GDDR packed onto video cards by Nvidia, AMD, and their partners rather than installed as general-purpose system memory, which uses standard DDR. Unlike memory sticks, GDDR chips are compact and arranged around the GPU so they take up as little physical space as possible on the graphics card.

According to one of the slides presented during the show, GDDR6 will offer more than 14 gigabits (Gb) per second of bandwidth per pin. That beats both GDDR5X, which Micron launched earlier this year with up to 12Gb per second, and vanilla GDDR5 at 10Gb per second. Bandwidth describes how much data can move between the on-board memory and the graphics chip in a given amount of time.
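These figures are per-pin data rates, so the total bandwidth a card sees also depends on how many pins its memory bus has. A rough sketch of the arithmetic: multiply the per-pin rate by the bus width, then divide by 8 to convert bits to bytes. The 256-bit bus used here is a hypothetical configuration picked only for illustration, and the result is a theoretical peak rather than real-world throughput.

```python
# Peak theoretical memory bandwidth from a per-pin data rate and a bus width.
# Assumption: a hypothetical 256-bit memory bus, chosen only for illustration.


def peak_bandwidth_gbytes(per_pin_gbits: float, bus_width_bits: int) -> float:
    """Return peak bandwidth in gigabytes per second.

    Each pin moves per_pin_gbits gigabits per second; multiplying by the
    number of data pins (the bus width) gives gigabits per second for the
    whole bus, and dividing by 8 converts bits to bytes.
    """
    return per_pin_gbits * bus_width_bits / 8


# Per-pin rates as quoted in the article, in Gb/s.
PER_PIN_RATES = {"GDDR5": 10.0, "GDDR5X": 12.0, "GDDR6": 14.0}

BUS_WIDTH_BITS = 256  # hypothetical card configuration

if __name__ == "__main__":
    for name, rate in PER_PIN_RATES.items():
        # Prints 320, 384, and 448 GB/s respectively for a 256-bit bus.
        print(f"{name}: {peak_bandwidth_gbytes(rate, BUS_WIDTH_BITS):.0f} GB/s")
```

On that assumed 256-bit bus, moving from 10Gb to 14Gb per pin lifts the theoretical peak from 320GB/s to 448GB/s without adding a single pin.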

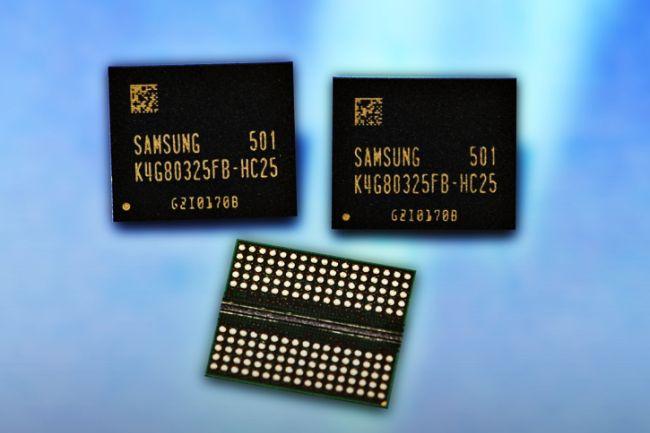

GDDR is attached to the graphics card via soldered pins. With each generation, Samsung aims not only to increase the data rate through each pin but also to reduce the power the memory chips draw. GDDR5 delivered a 40 to 60 percent improvement in power efficiency over GDDR4, and GDDR6 is expected to be even more power efficient when it launches in 2018.

Ultimately, all of this means squeezing better performance out of graphics cards. As graphics chips get faster, the on-board memory needs to keep pace. The graphics chip uses this local memory to temporarily store huge textures, computation results, and more in close proximity, rather than sending all of that data to system memory that sits, figuratively speaking, miles away elsewhere in the desktop or laptop.

However, if you ask AMD, GDDR simply can't keep up with the evolution of the graphics processor. According to the company, GDDR limits the overall device form factor because a large number of GDDR chips are required to achieve high bandwidth. The more chips you put on the graphics card, the more power the card consumes, even though each generation of GDDR is more power efficient than the last.

Enter AMD's approach to local video memory: High-Bandwidth Memory, or HBM, launched in 2015. Rather than spreading memory chips around the graphics processor as GDDR does, HBM stacks the chips on top of each other and interconnects them with columns of microscopic wires. Data travels up or down these elevator shafts to the appropriate floor, whereas GDDR data drives through the streets looking for an open memory chip to occupy.
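To see why stacking helps with the chip-count problem AMD describes, here is a rough comparison of how many GDDR chips versus HBM stacks it takes to reach the same peak bandwidth. It assumes typical interface widths (32 bits per GDDR chip, 1024 bits per HBM stack) and per-pin rates of 8Gb per second for GDDR5 and 1Gb per second for first-generation HBM. Those numbers, and the 512GB/s target, are illustrative assumptions rather than figures from the show.

```python
import math

# Illustrative assumptions (typical values, not from the article):
# - each GDDR5/GDDR6 chip exposes a 32-bit interface to the GPU
# - each HBM stack exposes a much wider 1024-bit interface
# - GDDR5 runs at 8 Gb/s per pin; first-generation HBM at 1 Gb/s per pin
GDDR_CHIP_WIDTH_BITS = 32
HBM_STACK_WIDTH_BITS = 1024


def bandwidth_per_device_gbytes(per_pin_gbits: float, width_bits: int) -> float:
    """Peak GB/s delivered by one memory chip or stack."""
    return per_pin_gbits * width_bits / 8


def devices_needed(target_gbytes: float, per_pin_gbits: float, width_bits: int) -> int:
    """Smallest number of chips/stacks whose combined peak bandwidth meets the target."""
    per_device = bandwidth_per_device_gbytes(per_pin_gbits, width_bits)
    return math.ceil(target_gbytes / per_device)


TARGET_GBYTES = 512.0  # hypothetical bandwidth target

if __name__ == "__main__":
    gddr5_chips = devices_needed(TARGET_GBYTES, 8.0, GDDR_CHIP_WIDTH_BITS)
    hbm_stacks = devices_needed(TARGET_GBYTES, 1.0, HBM_STACK_WIDTH_BITS)
    print(f"GDDR5 chips needed: {gddr5_chips}")  # 16
    print(f"HBM stacks needed:  {hbm_stacks}")   # 4
```

Under these assumptions, HBM reaches the target with a quarter as many devices despite a far lower per-pin rate, because each stack's interface is so much wider. That trade-off is what lets the memory sit in a smaller footprint next to the GPU.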

SK Hynix reportedly attended the same convention, where it showcased the second generation of HBM. The company said that HBM2 will expand into three markets: High Performance Computing and Servers, Networking and Graphics, and Client Desktops and Notebooks. Graphics cards will see HBM2 served up in "cubes" of up to 4GB, while the HPC, server, and networking markets will see capacities of up to 8GB. HBM2 is expected to be supported by AMD's new "Vega" graphics core architecture, slated for a 2017 release.

Finally, there was also talk at the show about HBM3, which will reportedly be supported by AMD's "Navi" graphics core architecture, slated for a 2019/2020 release. A slide provided by Samsung showed that HBM3 will offer double the bandwidth of the previous generation and even more stacks.