I’ve been using an ultrawide monitor for a decade. I bought in early, sometime back in 2013, picking up LG’s first ultrawide display: the 29EA93. It’s terrible by today’s standards, but I fell in love immediately.

I was using Pro Tools a lot at the time, and the extra screen real estate was a godsend for seeing more of my session at once. It also helped that I could put two browser windows side by side to multitask, and that games felt more immersive. It didn't matter that the screen was only 29 inches or that its resolution was just 2,560 x 1,080. I was sold; 21:9 was for me.

At least, it was. A decade on, I've finally gone back to a 16:9 display, the KTC G42P5, which I picked up in a Cyber Monday sale. Just a few months ago, however, I wrote about how I couldn't live without an ultrawide monitor. What changed? Let's dig in.

Immersive (until it isn’t)

My main draw to ultrawide over the years has been the immersion in games, and it's still unmatched. You see more of the game world than you would on a 16:9 display, and some monitors, like the Samsung Odyssey OLED G9, take this further with a 32:9 aspect ratio. It's great … until you stumble upon a piece of media that doesn't support 21:9.

Black bars ruin the immersion immediately. Even modern titles like Elden Ring don't support 21:9 out of the box, forcing you to either settle for black bars or resort to a third-party tool like Flawless Widescreen. And if you're not careful, these tools can get you banned in online games.

Even when a game does support 21:9, you'll still be pulled out of it the moment a cutscene plays, because cutscenes are almost universally locked to 16:9. I ran into this recently in Hi-Fi Rush, which has several moments that transition from a cutscene to gameplay, jarringly pulling me out of the immersion and back in.

Overlay elements in games often don't span the full 21:9 frame, either. In Destiny 2, for example, the speed lines from sprinting cut off where the edges of a 16:9 display would be. Menus in games like Marvel's Spider-Man shrink back to a 16:9 frame, too.

It’s not a big enough problem that ultrawide monitors become unusable — I’ve used one for 10 years — but there are still situations where the immersive experience feels like it’s held together by duct tape and glue.

The practical reasons

These immersion hiccups have become less common over the last decade, but they haven't gone away. There are also a few more practical reasons I chose to go back to a 16:9 display.

First, I use the screen for more than just my PC. I play the occasional console release on my PlayStation 5 and Nintendo Switch, which definitely don't support 21:9. There aren't any high-refresh 4K-class 21:9 gaming monitors, either, at least not yet. Most are 1440p monitors with wings, so a 16:9 console image tops out at 1440p with black bars on either side, meaning I'd sacrifice a good bit of resolution on consoles.

Now, I have a single display that does everything I need. I can use it to watch movies, play games, and hook up my consoles, all without dealing with black bars. It's been refreshing after wrestling with a 21:9 display for so many years.

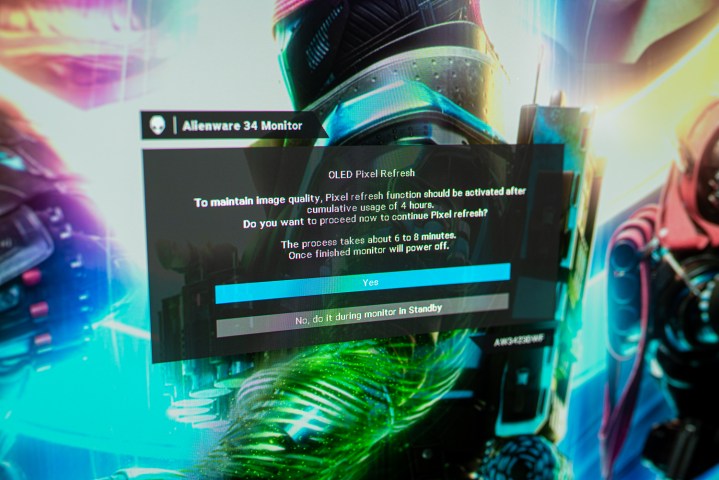

What finally sold me, however, was recent testing by Rtings. The site has been running an ongoing OLED burn-in test, and it recently added the Alienware 34 QD-OLED (the monitor I gave up for my new KTC display). The latest results showed that burn-in was more likely when viewing 16:9 content on a 21:9 display. It wasn't the burn-in results alone, but they added to the pile of gripes and finally tipped the scales away from 21:9 for me.

There are also a couple of reasons specific to me. I wanted to take 4K screenshots for articles, and I wanted the higher resolution for the image quality comparisons in my PC performance guides. These won't apply to most people, but they're worth highlighting because you might have your own reasons to prefer a more conventional aspect ratio.

You can go big enough

Two things kept me on an ultrawide monitor: immersion for games and screen real estate for work. Those were the areas I couldn't compromise on. The solution? A larger 16:9 display.

That's where the KTC G42P5 comes in. It's a 42-inch display, and that size lets it pull double duty, giving me both the immersion and the screen real estate I'd grown accustomed to on a 21:9 monitor.

For immersion, it's all about viewing distance. I put the display on a monitor arm, which lets me set it at just the right distance so I'm not craning my neck to see the whole screen while it still fills my peripheral vision. If anything, the monitor has been more immersive than a 21:9 display because I don't have to fuss with black bars.

For screen real estate, it's all about getting Windows scaling right. When you hook up a large 4K display to your PC, Windows may automatically set the scaling to 300%. For my screen size and viewing distance, I found that around 150% keeps text and UI elements large enough to read comfortably while still letting me take advantage of the extra space.
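To put rough numbers on that trade-off: Windows scaling enlarges interface elements by the chosen factor, so the desktop behaves roughly like a screen with the native resolution divided by that factor. The short Python sketch below does that arithmetic, assuming the G42P5 runs at a native 3,840 x 2,160. The `effective_resolution` helper is a hypothetical name for illustration, and the calculation is a simplification that ignores apps that handle scaling on their own.

```python
def effective_resolution(width_px: int, height_px: int, scale_percent: int) -> tuple[int, int]:
    """Approximate desktop workspace, in scaled pixels, at a given Windows scaling percentage.

    Simplified model (an assumption): every UI element is enlarged by the same
    factor, so the usable workspace shrinks by that factor in each dimension.
    """
    factor = scale_percent / 100
    return round(width_px / factor), round(height_px / factor)


# Assumes a native 4K panel (3,840 x 2,160), as on the 42-inch display described above.
for scale in (100, 150, 300):
    w, h = effective_resolution(3840, 2160, scale)
    print(f"{scale}%: {w} x {h}")
```

With those assumptions, 300% leaves a workspace comparable to a 1,280 x 720 screen, while 150% gives the room of a 2,560 x 1,440 display with text rendered using more physical pixels.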

I haven't given up what sold me on ultrawide displays 10 years ago, and I've gotten past the issues that still crop up with the 21:9 form factor. That's only been possible for the past few years, though.

For years, monitors and TVs were split into two camps. Monitors could have high refresh rates but weren't very large, while TVs were large but lacked the high refresh rates and low latency that PC gaming demands. We've now reached a middle ground where you can get a large display with a high refresh rate, one that covers both productivity and immersion. And that's been enough for me to give up on an aspect ratio I've held on to for a decade.