AMD has been one of the top graphics card manufacturers for well over a decade after picking up the even-longer-standing ATI. Overall, it’s done pretty well for itself. However, AMD (and ATI) have also made several disappointing graphics cards over the years, including GPUs that could barely justify their own existence.

If you’d like to take a trip down memory lane and wince at all AMD’s missteps, here’s a look back at the AMD and ATI GPUs that let us all down.

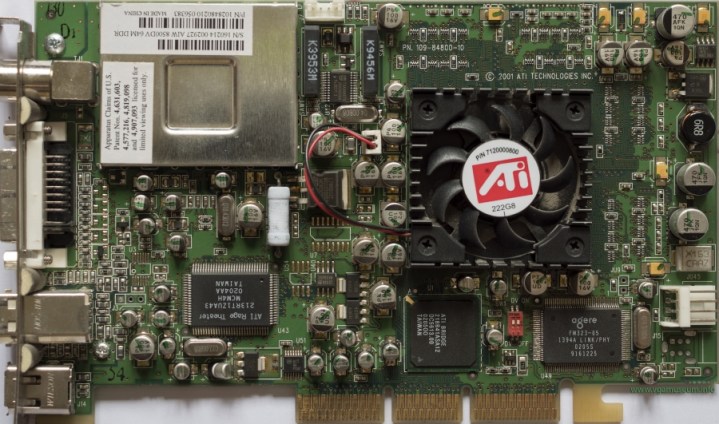

Radeon 8500

Crippled by bad drivers

ATI, the company that AMD bought in order to get Radeon graphics in its portfolio, was the only company in the late ’90s and early 2000s that could stand up to Nvidia, which quickly established itself as a leader in the graphics card market. In 2001, there was a lot of excitement about what ATI’s Radeon 8000 series GPUs could do. The hardware was good, with the Radeon 8500 expected to beat Nvidia’s flagship GeForce3 Ti 500 in several metrics, and at $299, the 8500 was $50 cheaper than the Ti 500. What could go wrong?

Well, in actual benchmarks, the 8500 was significantly behind the Ti 500, and sometimes it was just half as fast. On paper, the 8500 should have beaten the Ti 500 by at least a small margin, if not a large one. It was not quite the flagship reviewers were hoping for: AnandTech noted that even at $250, the 8500 could only match Nvidia’s GeForce3 Ti 200, which cost under $200.

Ultimately, bad drivers doomed the 8500 and ATI’s desire to beat Nvidia. The 8500 did quite well in synthetic benchmarks like 3DMark 2001, where it beat the Ti 500, but in actual games, it fell noticeably behind. Theoretically, if the 8500 had been better optimized for games at the software level, it would have been able to stand toe to toe with the Ti 500. The situation was so severe that ATI promised to release new drivers as often as every two weeks. Unfortunately, this was not enough to turn the 8500 into a true competitor against Nvidia’s flagship GPUs.

The fact that the 8500 had so much potential is what makes this situation even more depressing. There have been plenty of other AMD or ATI GPUs with terrible driver support (the RX 5000 series was particularly buggy for some), but the 8500 is easily the most heartbreaking. It could have been much more than just another midrange GPU with all that untapped horsepower. ATI was able to claim victory with its next generation Radeon 9000 series, however, so you could argue Radeon 8000 limped so Radeon 9000 could run.

Radeon R9 390X

A space heater that can also play games

We’re going to fast forward about a decade here, because, honestly, ATI and AMD (which acquired ATI in 2006) didn’t really make any particularly bad GPUs after the 8000 series. There were disappointing cards like the HD 3000 and HD 6000 series, but nothing truly bad, while AMD’s 290X scored an impressive blow against Nvidia in 2013. Unfortunately, the years that followed weren’t so kind.

With AMD stuck on TSMC’s 28nm node, the only thing it could really do was sell old GPUs as new GPUs — a tactic known as rebranding. The Radeon 300 series was not the first (nor the last) series to feature rebrands, but it has the unfortunate distinction of being a series of GPUs that was nothing but rebrands.

The R9 290X was rebranded as the R9 390X, which launched in 2015, and while the 290X was fast in 2013, things had changed by 2015. The 390X could just barely catch up to Nvidia’s GTX 980 at resolutions higher than 1080p, but Nvidia’s new flagship GTX 980 Ti was almost 30% faster. Power was also a big issue for the 390X. In TechPowerUp’s review, the 390X consumed 344 watts on average in games, more than double the GTX 980’s draw and almost 100 watts more than the 290X. Even something as simple as using multiple monitors or watching a Blu-ray caused the 390X to use about 100 watts.

The Radeon 300 series and particularly the 390X cemented the reputation of AMD GPUs as hot and loud, and although the 290X was also noted for being hot and loud, the 390X was even more so, which is not a good thing.

Radeon R9 Fury X

So close yet so far

The R9 Fury X was the high-end GPU AMD developed after the 200 series, and unlike the 300 series, it was brand new silicon. Fiji, the codename for the graphics chip inside the Fury X, used the third and newest iteration of the GCN architecture and 4GB of cutting edge High Bandwidth Memory (or HBM). It even came with a liquid cooler, prompting an AMD engineer to describe the card as an “overclocker’s dream.” AMD went through a ton of effort to try to beat Nvidia in 2015, but unfortunately, this kitchen sink approach didn’t work.

AMD was faced with trying to dethrone Nvidia’s GTX Titan X, a top-end prosumer card that retailed for $999, and even though the Fury X was slower than the Titan X by a small margin, it was also $350 cheaper. Had everything gone according to plan, AMD could have positioned the Fury X as a viable alternative for gamers who wanted high-end performance for less and without all the extra compute features they didn’t want.

But the Titan X wasn’t Nvidia’s only high-end card at the time. The GTX 980 Ti retailed for the same $649 MSRP as the Fury X and had 6GB of memory, lower power consumption, and about the same performance as the Titan X. For all the trouble AMD went through in designing a new architecture to improve power efficiency, using HBM to increase memory bandwidth, and putting a liquid cooler on this GPU, the Fury X lost anyway, and Nvidia barely had to lift a finger. It was simply disappointing, and AnandTech put it best:

“The fact that they get so close only to be outmaneuvered by NVIDIA once again makes the current situation all the more painful; it’s one thing to lose to Nvidia by feet, but to lose by inches only reminds you of just how close they got, how they almost upset Nvidia.”

That comment about the Fury X being an “overclocker’s dream” also caused some controversy, because the Fury X was locked down to an unprecedented degree. There wasn’t any way to raise the voltage for higher clocks, and the HBM’s clock speed was locked entirely. AnandTech was able to get its card to 1125MHz, a rise of just 7%. By contrast, GTX 900 series cards were well known for easily getting 20% overclocks, sometimes as much as 30% on good cards.

The Fury X wasn’t bad in the way the 390X was; it was bad because it needed to be something more, and AMD just didn’t have it.

Radeon RX 590

Stop, he’s already dead!

Fast forward three years, and things were looking better for AMD. The performance crown continued to elude it, but at least its RX Vega GPUs in 2017 got it back on equal footing with Nvidia’s then-current generation x80 class GPU, the GTX 1080. AMD had seemingly planned to launch more RX Vega GPUs for the midrange and low-end segments, but these never materialized, so instead, AMD rebranded its hit RX 400 series as the RX 500 series, which was disappointing but not terrible, as Nvidia didn’t have new GPUs in 2017 either.

By the end of 2018, Nvidia had launched a new generation of GPUs, the RTX 20 series, but it didn’t shake things up very much. These cards didn’t provide better value over the GTX 10 series, and although the RTX 2080 Ti was significantly faster than the GTX 1080 Ti (and AMD’s RX Vega 64), it was also exorbitantly expensive. AMD really didn’t need to launch a new GPU, especially not a new midrange GPU since cards like the RTX 2060 and the GTX 1660 Ti were months away. And yet, AMD decided to rebrand RX 400 for a second time with the RX 590.

The official raison d’être for the RX 590 was that AMD didn’t like there being such a large performance gap between the 580 and the Vega 56, so it launched the 590 to fill in that gap. The thing is, the 590 was just an overclocked 580, which was an overclocked 480. Just adding clock speed didn’t really do much for the RX 590 in our review. So far, not off to a good start.

To reach these ever-increasing clock speeds, power had to go up too, and the RX 590 ended up being rated for 225 watts, 75 watts above the original RX 480. The Vega 56 actually consumed less power at 210 watts, which made it look extremely efficient by comparison. Vega even had a bit of a reputation for being hot and loud, but at least it wasn’t the 590.

Radeon VII

A fittingly terrible name for a bad GPU

Although the RX 590 was basically a solution looking for a problem, AMD did have a real issue with the RTX 2080, which was faster than anything AMD had by quite some margin.

Enter the Radeon VII, a graphics card you probably forgot even existed. It wasn’t strictly new, with AMD instead taking a datacenter GPU, the Radeon Instinct MI50, and cutting it down to gaming specs. AMD halved the memory from 32GB to 16GB, reduced FP64 performance (which is useful for scientific stuff), and reduced the PCIe spec from 4.0 to 3.0.

While this card would have to go up against the fully-formed RTX 2080, that prospect didn’t seem so bad at the time. Although the 2080 had cutting-edge ray tracing and AI-powered resolution upscaling, these features were still in their infancy and not particularly important yet, so AMD felt it was sufficient to compete on performance alone.

Although AMD claimed it could go toe to toe with the 2080 (and thus priced the Radeon VII the same at $699), the reviews disagreed. TechSpot found that the VII could barely catch up to the 2080, being 4% slower on average at 1440p. It couldn’t even convincingly beat the GTX 1080 Ti, which was using three-year-old technology at that point. This was despite the VII’s massive advantage in process (7nm versus 12/16nm), memory bandwidth, and memory size. The VII was 20% or so faster than the Vega 64 for the same power consumption, but that wasn’t nearly impressive enough to justify its own existence.

To make matters worse for AMD, the VII was probably being sold at a loss, as a 7nm GPU with 16GB of HBM2 was surely not cheap to produce back in 2018 and 2019. To be a loser in performance, value, and efficiency is one thing, but to be all that and not even turn a profit is just sad. The cherry on top is that, when AMD’s RX 5000 GPUs launched just a few months later, the new RX 5700 XT had about 90% of the performance of the VII for half the price, making the VII obsolete before it had even gotten going.

In hindsight, it’s hard to see why AMD ever wanted the VII to exist. The company only had to wait a few more months to launch a GPU that could actually turn a profit while having superior value and efficiency. It also wasn’t particularly interesting to prosumers due to its reduced FP64 performance. The existence of the Radeon VII is almost as baffling as its name.

Radeon RX 6500 XT

Nobody asked for this

Budget, entry-level GPUs have become increasingly rare in recent years, with Nvidia and AMD failing to really deliver anything significantly better than old cards from 2016 and 2017. Things got even worse with the GPU shortage of 2020 to 2022, which compounded budget buyers’ feelings of being neglected. People just wanted something relatively modern that didn’t cost over $300 for once.

Finally, in early 2022, AMD launched some brand-new budget GPUs from its RX 6000 series, the RX 6500 XT for $199 and the RX 6400 for $159. The pricing was certainly reminiscent of the RX 480 and RX 470, which came in at similar prices. The performance was also reminiscent of the RX 480 and RX 470, by which I mean it was almost identical to those older cards for the same price. In the six years since RX 400 debuted, this was the best AMD could do?

TechSpot tested the 6500 XT and found that it lost to the previous generation 5500 XT (which launched at $169), the GTX 1650 Super (which launched at $159), and even the RX 590. It’s just unthinkable that a modern GPU could lose to an overclocked version of a card made six years ago, but here we are. The RX 6400, meanwhile, was just behind the RX 570.

The 6500 XT and the 6400 were never meant to be desktop GPUs, or at least they weren’t really designed for desktops. Instead, these are laptop GPUs soldered to a card so that they can be used in desktops. Consequently, these GPUs only have 4GB of GDDR6 memory and two display outputs, and they are limited to four PCIe lanes. These GPUs are pretty efficient, but that’s not that important for desktops, and the locked clock speed on the RX 6400 is extremely disappointing.

But to make matters worse, these GPUs have an identity crisis. Performance is fine in a system with PCIe 4.0, but it drops massively on PCIe 3.0. Although PCIe 4.0 has been out for three years, plenty of budget PC gamers still have older systems, which rules out these kinds of GPUs. Furthermore, mid-range and low-end AMD CPUs from Ryzen 5000 onwards have PCIe 4.0 support artificially disabled, a hilarious act of self-sabotage. You’re better off pairing one of these GPUs with a 12th Gen Intel CPU, since you’ll have guaranteed PCIe 4.0 support.
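To put rough numbers on why four lanes hurt so much more on an older platform, here is a back-of-the-envelope sketch in Python. It uses the published PCIe per-lane transfer rates (8 GT/s for 3.0, 16 GT/s for 4.0) and the 128b/130b line encoding both generations share; those figures come from the PCIe specifications rather than from the reviews cited here, and the function name is made up for illustration. It only estimates the theoretical link budget, not how any particular game behaves.

```python
# Approximate one-way PCIe link bandwidth from the published per-lane rates.
# This ignores packet and protocol overhead, so real throughput is lower.

GIGATRANSFERS_PER_SEC = {"3.0": 8.0, "4.0": 16.0}  # per lane, per direction
ENCODING_EFFICIENCY = 128 / 130  # 128b/130b line code used by PCIe 3.0 and 4.0


def pcie_bandwidth_gb_per_s(generation: str, lanes: int) -> float:
    """Return approximate one-way bandwidth in gigabytes per second.

    Hypothetical helper for illustration; not part of any library.
    """
    gigabits_per_lane = GIGATRANSFERS_PER_SEC[generation] * ENCODING_EFFICIENCY
    return gigabits_per_lane / 8 * lanes  # 8 bits per byte


if __name__ == "__main__":
    for gen in ("3.0", "4.0"):
        print(f"PCIe {gen} x4:  {pcie_bandwidth_gb_per_s(gen, 4):.2f} GB/s")
    print(f"PCIe 3.0 x16: {pcie_bandwidth_gb_per_s('3.0', 16):.2f} GB/s")
```

By this estimate, a four-lane card on PCIe 3.0 gets roughly half the link bandwidth it would on PCIe 4.0, and about a quarter of what a full x16 card gets on the same PCIe 3.0 board. With only 4GB of memory on the card, that narrow link is a plausible reason the drop is so visible.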

Between the 6500 XT and the 6400, it was hard to choose which was worse. Ultimately, I went with the 6500 XT because it does nothing that older GPUs can’t do. The RX 6400, on the other hand, actually has a good reason to exist: low profile builds. The 6400 is the most efficient, fastest, and cheapest low profile GPU you can buy. Its only real weakness is the need for a PCIe 4.0 enabled CPU and motherboard, but that’s not too difficult to overlook.

As for the 6500 XT, it’s hard not to see it as a cash grab from AMD. It’s a cheap and poor-performing GPU that doesn’t work well in budget builds. But it was available and affordable, and at a time of GPU shortages, that was almost enough to make it work. Even then, though, everyone knew it was a dud.

AMD, please stop making these awful GPUs

I’m under no illusion that this list will never be updated with a new bad AMD GPU, but hopefully, the company won’t repeat the mistakes of the past. If it can avoid bad launch drivers, stop rebranding so aggressively, and stop releasing poor cards to desperate gamers, maybe it’ll be a few generations before AMD releases another 6500 XT. Here’s hoping.

In the meantime, we can look forward to AMD’s RX 7000 cards, which look particularly impressive so far.