Measuring the performance of games can be quite complicated. It’s not just about the average frame rate; frame time matters too. This was brought into stark focus with the release of Gotham Knights, which posts a decent average frame rate but has major issues with frame time. That’s a real problem: frame time may be a bit abstract for most players, but it’s a vital metric when judging how well a game actually runs.

Don’t throw the baby out with the bathwater; frame rate is still equally important. But frame time reveals a lot about how a game can feel during play and goes deeper than just the number of frames your system can output every second. We’re going to help demystify what frame time is, how you can measure it, and why you should pay attention to it when looking at your gaming PC’s performance.

Frame time versus frames per second

Most gaming benchmarks are expressed in frames per second, or fps. Frame rate counters and benchmarking software work by capturing a second of the game, checking how many frames were rendered in that second, and then adding it to a running average. For example, if a game rendered 62, 64, 58, and 56 frames in four consecutive seconds, it would have a 60 fps average. But a second is a long time in a fast-paced game, and not all of those frames are rendered equally; some take longer than others. Frame time is the answer to the question, “How much time passes between each frame?”
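To make the running average concrete, here is a minimal Python sketch using the four per-second counts from the example. The function name `running_average` is made up for illustration; real frame rate counters may smooth or window their readings differently.

```python
def running_average(samples):
    """Yield the mean of all samples seen so far, one value per sample."""
    total = 0
    for count, sample in enumerate(samples, start=1):
        total += sample
        yield total / count


# Frames rendered in each of four consecutive seconds (from the example).
per_second_frames = [62, 64, 58, 56]

averages = list(running_average(per_second_frames))
print(averages[-1])  # 60.0, the average fps over the four seconds
```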

Using the same 60 fps, the frame time would (theoretically) be 16.6ms, or 1/60th of a second. You can do this with any other frame rate. 1/144th of a second would mean there’s a frame every 6.9ms. Just divide one by your average frame rate and then multiply by 1,000 to get the frame time in milliseconds.
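If you’d rather not do the division by hand, the conversion is a one-liner. This is a small Python sketch; `frame_time_ms` and `fps_from_frame_time` are illustrative names, not part of any benchmarking tool.

```python
def frame_time_ms(fps: float) -> float:
    """Ideal frame time in milliseconds if every frame were evenly spaced."""
    if fps <= 0:
        raise ValueError("fps must be positive")
    return 1000.0 / fps


def fps_from_frame_time(ms: float) -> float:
    """Inverse conversion: the frame rate implied by a single frame time."""
    if ms <= 0:
        raise ValueError("frame time must be positive")
    return 1000.0 / ms


for fps in (30, 60, 144):
    print(f"{fps} fps -> {frame_time_ms(fps):.2f} ms per frame")
```

For 60 fps, this prints 16.67 ms (the 16.6ms figure above is the same value cut off after one decimal place), and for 144 fps it prints 6.94 ms.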

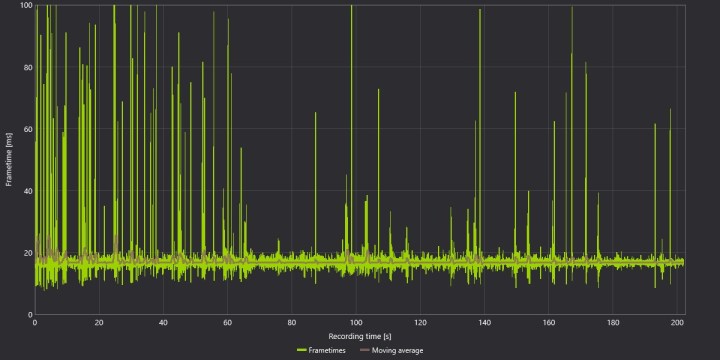

That’s a good exercise to understand what frame time is, but it’s not useful because it’s not realistic. Frame time is important because it varies. In a second where there are 60 frames, those frames usually aren’t evenly spaced at 16.6ms, and if the variance is high enough, the game won’t feel as smooth. Take Gotham Knights as an example. Although the game runs at a locked 60 fps average in the chart above, there are frame time spikes well above 100ms. That makes the game feel like it’s stuttering and choppy, despite the fact that it’s running at the targeted frame rate.
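A quick way to see why the average hides this is to compare two made-up one-second traces that both contain 60 frames. The Python sketch below uses synthetic numbers, not measurements from Gotham Knights, and `summarize` is a hypothetical helper.

```python
from statistics import pstdev


def summarize(frame_times_ms):
    """Return average fps, worst frame time, and spread for a trace."""
    total_seconds = sum(frame_times_ms) / 1000.0
    return {
        "avg_fps": len(frame_times_ms) / total_seconds,
        "worst_ms": max(frame_times_ms),
        "stdev_ms": pstdev(frame_times_ms),
    }


# 60 evenly spaced frames in one second.
even = [1000.0 / 60] * 60

# 59 quick frames plus a single 120 ms spike, still 60 frames in one second.
spiky = [(1000.0 - 120.0) / 59] * 59 + [120.0]

for name, trace in (("even", even), ("spiky", spiky)):
    stats = summarize(trace)
    print(
        f"{name}: {stats['avg_fps']:.1f} fps average, "
        f"worst frame {stats['worst_ms']:.1f} ms, "
        f"spread {stats['stdev_ms']:.1f} ms"
    )
```

Both traces average 60 fps, but only the second contains a 120 ms frame, which is the kind of hitch you feel as a stutter.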

It’s important to look at both frame rate and frame time when evaluating a game, and benchmarking tools like CapFrameX make it easy to chart frame times. One is not objectively better than the other. They’re two sides of the same coin. Frame rate tells you the overall performance level, while frame time says more about the experience of actually playing the game.

They’re tied together, too. Above, you can see the frame rate over time that corresponds to the frame time chart earlier on the page. As the frame time goes up, the frame rate falls, and vice versa.

Pulling more data out of frame time

Frame time is abstract because, frankly, performance in games has been measured in frame rate for decades. There are some additional metrics you can pull out of frame times to better understand the consistency of the game, however. The most common ones you’ll see in GPU reviews (including our own) are 1% lows.

This number, sometimes loosely referred to as the 99th percentile, takes the slowest 1% of frames from a data set and averages them. Essentially, 1% lows capture the highest frame times and, therefore, the lowest frame rates in a data set. When you see a large gap between the average frame rate and the 1% low, that indicates that frame times are inconsistent and that the game doesn’t feel smooth to play, even if it’s hitting a desired average frame rate.
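Here’s a rough Python sketch of that calculation, following the description above: sort the frame times, average the slowest 1%, and convert the result to fps. Benchmarking tools don’t all compute 1% lows the same way (some report a percentile threshold instead of an average), so treat `one_percent_low_fps` as an illustration rather than any tool’s exact method. The trace is synthetic.

```python
import math


def average_fps(frame_times_ms):
    """Average frame rate over the whole trace."""
    return 1000.0 * len(frame_times_ms) / sum(frame_times_ms)


def one_percent_low_fps(frame_times_ms):
    """Average the slowest 1% of frames and express the result as fps."""
    if not frame_times_ms:
        raise ValueError("need at least one frame time")
    slowest_first = sorted(frame_times_ms, reverse=True)
    # Always keep at least one frame, even for very short traces.
    count = max(1, math.ceil(len(slowest_first) / 100))
    worst = slowest_first[:count]
    return 1000.0 / (sum(worst) / len(worst))


# Synthetic trace: 990 frames at 10 ms and 10 slow frames at 40 ms.
trace = [10.0] * 990 + [40.0] * 10

print(f"average: {average_fps(trace):.1f} fps")          # 97.1 fps
print(f"1% low:  {one_percent_low_fps(trace):.1f} fps")  # 25.0 fps
```

The handful of 40 ms frames barely moves the average, but it drags the 1% low all the way down to 25 fps.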

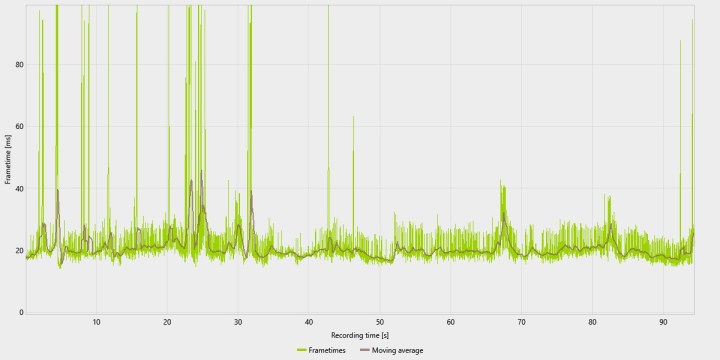

God of War’s PC port has very consistent frame times, for example. You can see that in the chart above. The average 75.7 fps and the 1% low fps of 62.6 are relatively close, about a 19% difference, indicating that the game runs smoothly. Contrast that with Gotham Knights, where there’s a 58% gap between the average fps of 49.4 and the 1% lows of 27.2.
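The percentages quoted here line up with a symmetric percent difference, meaning the gap divided by the midpoint of the two values. That’s an inference from the numbers rather than a stated method, so the Python sketch below also shows the drop measured against the average, which gives smaller figures. Both function names are illustrative.

```python
def percent_difference(a: float, b: float) -> float:
    """Gap between two values relative to their midpoint, in percent."""
    return abs(a - b) / ((a + b) / 2) * 100


def drop_from_average(average: float, low: float) -> float:
    """How far the 1% low falls below the average, in percent."""
    return (average - low) / average * 100


# (average fps, 1% low fps) as quoted in the text.
games = {
    "God of War": (75.7, 62.6),
    "Gotham Knights": (49.4, 27.2),
}

for name, (average, low) in games.items():
    print(
        f"{name}: {percent_difference(average, low):.0f}% difference, "
        f"{drop_from_average(average, low):.1f}% below average"
    )
```

Either way you slice it, the gap in Gotham Knights is roughly three times as wide as the one in God of War.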

Fps and 1% low fps aren’t perfect metrics, but they still summarize the performance of a game on certain hardware. You can look at the frame time as the raw data and the frame rate as an abstraction of that data that’s easier to digest and compare with other games or hardware. Two frame time graphs for two different GPUs say a lot less about how they perform relative to each other than the average frame rates those GPUs achieved in a game.

It’s important to remember that these metrics are only as good as the context they’re presented in. Frame time is vital for evaluating how well a game performs, but frame rate is much more useful for seeing the scaling between different hardware. It’s all a matter of perspective and purpose.

How to improve frame time in games

Frame time problems usually stem from the game, the driver, or some middle step between the two. That is to say, they’re hard to solve yourself if you encounter them. Gotham Knights is a good recent example. Its frame time issues are a result of shader compilation stuttering, which is an issue we saw with Elden Ring earlier this year.

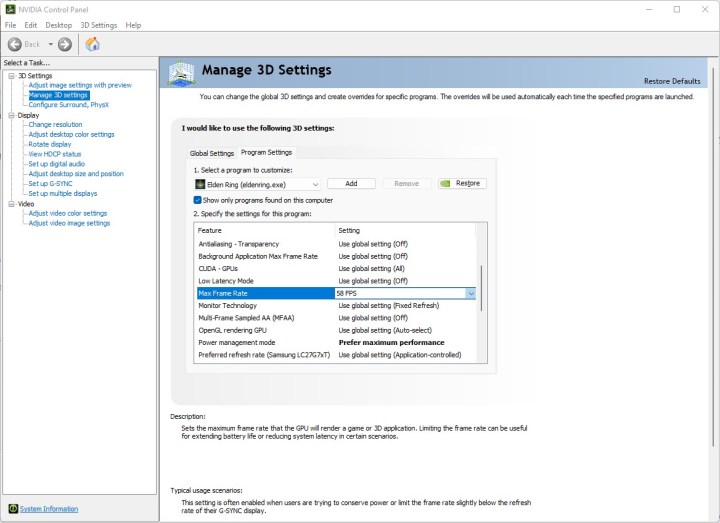

One thing you can do to help the situation is set a frame rate limit. This won’t solve frame time issues from things like shader compilation, but it can help frame pacing in poorly optimized games. Many games include a built-in frame rate limit, but you can also set one with your graphics card software. For Nvidia, go to the Nvidia control panel, select Manage 3D settings, and set the Max frame rate under the Global settings tab. AMD has a similar feature in Radeon Software called Frame Rate Target Control (FRTC).
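Conceptually, a frame rate limit gives every frame the same time budget and waits out whatever is left over. The Python sketch below illustrates that idea only; it is not how Nvidia’s or AMD’s limiters are implemented, and `run_capped` and `render_frame` are hypothetical names.

```python
import time


def run_capped(render_frame, target_fps, frame_count):
    """Call render_frame repeatedly, no faster than target_fps.

    Returns the measured frame times in milliseconds.
    """
    budget = 1.0 / target_fps  # seconds allowed per frame
    frame_times_ms = []
    for _ in range(frame_count):
        start = time.perf_counter()
        render_frame()
        elapsed = time.perf_counter() - start
        if elapsed < budget:
            # The frame finished early, so wait out the rest of the budget.
            time.sleep(budget - elapsed)
        frame_times_ms.append((time.perf_counter() - start) * 1000.0)
    return frame_times_ms


# Stand-in "game" that renders instantly, capped at 200 fps (5 ms budget).
times = run_capped(lambda: None, target_fps=200, frame_count=5)
print([f"{t:.1f} ms" for t in times])
```

Notice that the limiter can only slow down frames that finish early. A frame that blows past its budget, like one stalled on shader compilation, still shows up as a spike.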

In many cases, though, poor frame times and frame pacing result from drivers and games. Your best bet is to update your video card drivers and hope a patch comes out. Closing background apps and optimizing your PC for gaming can also help, but only in titles that don’t already have issues with frame pacing.