In the past decade, however, it has taken enormous leaps forward thanks to deep learning neural networks. These are essentially vast computational approximations of the way that the human brain works, which can learn to recognize objects and people based on training examples.

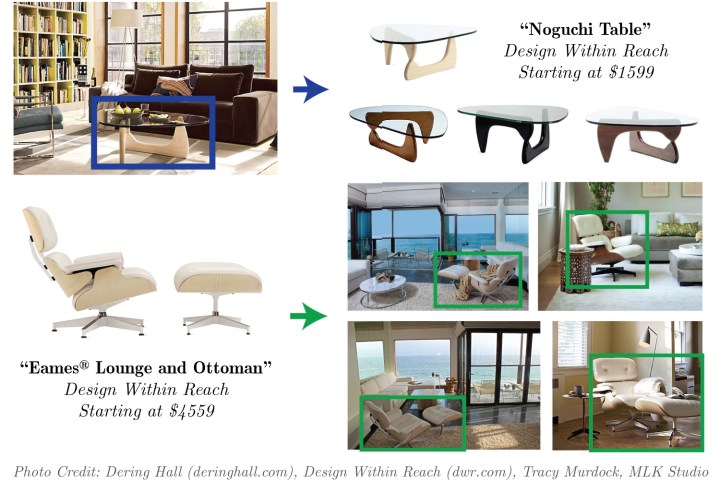

A number of companies have attempted to use this kind of technology to transform the retail space, but so far none have really succeeded. We scan QR codes when we go into a store, or type the name of a book into Amazon, but the technology that lets us snap a quick picture of, say, a chair that we like and easily search for it (or similar items) online has largely eluded us.

This is a problem a team of Cornell University researchers is trying to solve. With a new startup called GrokStyle, co-founders and computer scientists Sean Bell and Kavita Bala have developed a state-of-the-art algorithm that is more accurate than any competing system at recognizing objects within a picture and then linking them to real-world items for sale.

“What we’re focused on is the post-search experience,” Bell, a computer science Ph.D., told Digital Trends. “That’s something I don’t feel has been very good so far. What we’re doing is not just recognizing a product, but finding out who else sells it, whether there are existing variations, whether you can get it in a different type of wood, etc. We’re not just trying to answer the ‘what is this?’ question about objects, but trying to answer the related questions people have in the shopping experience.”

As you can imagine, this isn’t easy, particularly because the system aims to do more than existing apps that recognize, say, book covers and movies, and let you search for that one item and that one alone.

“You may be in a restaurant and see a chandelier you like, and want to find one that is the same and available for sale, or similar, but in a different color or price point,” Bell said. “The idea is that you take a picture of something you like, and then based on that image, you get presented with a list of similar items. You can then filter these based on location, material, and various other metrics. It’s not just simply about either finding something or not finding it; we wanted to give you a wide variety of choice.”
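To make the flow Bell describes more concrete, here is a minimal Python sketch of "rank by visual similarity, then filter." It is not GrokStyle's code. It assumes each product image has already been turned into a numeric feature vector by some trained network, and the `Product` class, the `find_similar` function, the three-number vectors, and the catalog entries are all hypothetical stand-ins for illustration.

```python
import math
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Product:
    name: str
    material: str
    price: float
    # Hypothetical feature vector; a real system would get this from a trained network.
    embedding: List[float]


def cosine_similarity(a: List[float], b: List[float]) -> float:
    """Cosine of the angle between two vectors (1.0 means same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def find_similar(query: List[float], catalog: List[Product],
                 material: Optional[str] = None,
                 max_price: Optional[float] = None,
                 top_k: int = 3) -> List[Product]:
    """Apply the optional filters, then rank what is left by similarity to the query."""
    candidates = [
        p for p in catalog
        if (material is None or p.material == material)
        and (max_price is None or p.price <= max_price)
    ]
    candidates.sort(key=lambda p: cosine_similarity(query, p.embedding), reverse=True)
    return candidates[:top_k]


# Made-up catalog and query vector, purely for illustration.
CATALOG = [
    Product("brass chandelier", "brass", 420.0, [0.9, 0.1, 0.2]),
    Product("crystal chandelier", "crystal", 950.0, [0.8, 0.3, 0.1]),
    Product("oak dining chair", "oak", 130.0, [0.1, 0.9, 0.4]),
    Product("brass floor lamp", "brass", 180.0, [0.6, 0.2, 0.7]),
]

photo_embedding = [0.85, 0.15, 0.2]  # stands in for the photo taken in the restaurant
results = find_similar(photo_embedding, CATALOG)
brass_under_500 = find_similar(photo_embedding, CATALOG, material="brass", max_price=500.0)

print([p.name for p in results])
print([p.name for p in brass_under_500])
```

The point of the sketch is that the search does not end in a yes-or-no match: the unfiltered call returns a ranked list of look-alikes, and the filtered call narrows the same ranking by material and price.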

Going forward, the idea is to even let users single out particular qualities of an object (say, its grain of wood or fabric) and then find other complementary items. “Our system lets you hold certain aspects as constants, and then change other attributes,” he said.
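The idea of holding some aspects constant while changing others can be sketched in a few lines of Python. This is only an illustration under simple assumptions, not a description of how GrokStyle does it: products are plain dictionaries with hand-labeled attributes, and the `find_variations` function and every catalog entry are hypothetical.

```python
from typing import Dict, List


def find_variations(reference: Dict[str, str], catalog: List[Dict[str, str]],
                    keep: List[str], vary: List[str]) -> List[Dict[str, str]]:
    """Return items that match the reference on `keep` and differ from it on `vary`."""
    matches = []
    for item in catalog:
        same = all(item[k] == reference[k] for k in keep)
        different = all(item[k] != reference[k] for k in vary)
        if same and different:
            matches.append(item)
    return matches


# Made-up items, purely for illustration.
reference = {"name": "walnut side table", "category": "table", "wood": "walnut", "finish": "matte"}
catalog = [
    {"name": "walnut bookshelf", "category": "shelf", "wood": "walnut", "finish": "matte"},
    {"name": "walnut coffee table", "category": "table", "wood": "walnut", "finish": "matte"},
    {"name": "oak bookshelf", "category": "shelf", "wood": "oak", "finish": "matte"},
    {"name": "walnut armchair", "category": "chair", "wood": "walnut", "finish": "gloss"},
]

# Keep the wood and finish fixed, but look in other furniture categories.
complementary = find_variations(reference, catalog, keep=["wood", "finish"], vary=["category"])
print([item["name"] for item in complementary])
```

Here the wood and finish are held constant while the category is allowed to change, so the only match is a piece that would sit alongside the original table rather than replace it.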

The system the team has developed, described in the journal ACM Transactions on Graphics under the title “Learning visual similarity for product design with convolutional neural networks,” was benchmarked as an astonishing two times as accurate as competing methods. The team hopes users will be able to try out the system over the coming months.

“This is an exciting area, and ultimately we think it’ll come down to who has the best technology,” Bell said. “Right now we’re state of the art in terms of product image recognition. We’ve demonstrated to the academic community that our system for doing this is the most accurate. We want to continue that edge going forwards.”