The good news? They are still unrecognizable to the overwhelming majority of people. The bad news? They don’t fool modern computer science.

That's according to a new project by researchers at the University of Texas at Austin and Cornell University, which used deep learning to correctly guess the identities of people hidden by blurring or pixelation. Human participants guessed the redacted identities correctly 0.19 percent of the time. The machine learning system, when allowed five attempts, identified the person correctly with 83 percent accuracy.
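The "five attempts" figure appears to correspond to what machine learning researchers call top-5 accuracy: a guess counts as correct if the true identity appears anywhere among the model's five highest-ranked candidates. Below is a minimal sketch of that metric. It assumes the model outputs one score per candidate identity; the function name and the scores are hypothetical and are not taken from the study.

```python
from typing import Sequence


def top_k_accuracy(scores: Sequence[Sequence[float]], labels: Sequence[int], k: int = 5) -> float:
    """Fraction of examples whose true label is among the k highest-scoring candidates."""
    if len(scores) != len(labels):
        raise ValueError("scores and labels must have the same length")
    hits = 0
    for row, label in zip(scores, labels):
        # Indices of the k candidates the model ranks highest (its k "attempts").
        top_k = sorted(range(len(row)), key=lambda i: row[i], reverse=True)[:k]
        if label in top_k:
            hits += 1
    return hits / len(labels)


# Hypothetical scores for 3 obfuscated faces over 6 candidate identities.
scores = [
    [0.10, 0.05, 0.40, 0.20, 0.15, 0.09],  # true identity 2 is ranked first
    [0.30, 0.25, 0.04, 0.20, 0.15, 0.06],  # true identity 5 is ranked fifth
    [0.02, 0.08, 0.10, 0.15, 0.05, 0.60],  # true identity 0 is ranked last
]
labels = [2, 5, 0]

print(top_k_accuracy(scores, labels, k=1))  # only the first face counts as a hit
print(top_k_accuracy(scores, labels, k=5))  # the second face now counts too
```

Allowing more guesses can only raise the score, which is why a top-5 figure is typically higher than the single-guess accuracy for the same model.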

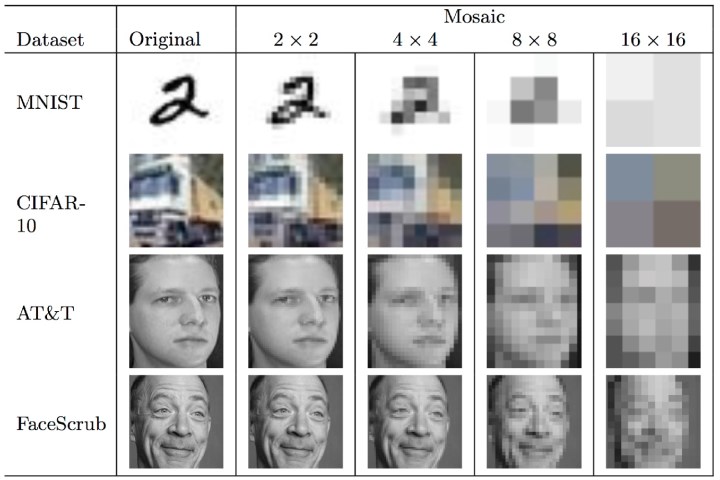

“Blurring and pixelation are often used to hide people’s identities in photos and videos,” Vitaly Shmatikov, a professor of computer science at Cornell, told Digital Trends. “In many of these scenarios, the adversary has a pretty good idea of a small set of possible people who could have appeared in the image, and he just needs to figure out which of them are in the picture.”

This, Shmatikov continued, is exactly the scenario where the team’s technology works well. “This shows that blurring, pixelation, and other image obfuscation methods may not provide much protection when exposing someone’s identity would put them at risk,” he said.

The problem, of course, is that these redaction methods are often used to protect vulnerable people, such as whistleblowers or witnesses to a crime. If image recognition algorithms based on artificial neural networks are applied to images obfuscated with off-the-shelf tools, those people could be placed in harm's way.

“The fundamental challenge is bridging the gap between privacy protection technologies and machine learning,” Shmatikov said. “Many designers of privacy technologies don’t fully appreciate the power of modern machine learning — and this leads to technologies that don’t actually protect privacy.”