Conducted by Ph.D. researcher Mriganka Biswas and Dr. John Murray of the University of Lincoln School of Computer Science, the study set out to build on prior research suggesting that people understand humanoid robots better when the robots intuitively use gestures. Using Dr. Murray's Emotional Robot with Intelligent Network (ERWIN), which can express five different emotions, and Keepon, a robot designed for studying social development, the researchers were able to analyze a wide range of human interactions.

“Our research explores how we can make a robot’s interactive behavior more familiar to humans, by introducing imperfections such as judgmental mistakes, wrong assumptions, expressing tiredness or boredom, or getting overexcited,” Biswas said while presenting the study’s findings. “By developing these cognitive biases in the robots — and in turn making them as imperfect as humans — we have shown that flaws in their ‘characters’ help humans to understand, relate to and interact with the robots more easily.”

During the study, the researchers examined human interaction with the robots in two different ways. First, the participants communicated with robots unaffected by cognitive biases, i.e., the "too perfect" version of AI. In the second part of the study, Biswas introduced the groups to ERWIN, which would repeatedly forget various facts and express this forgetfulness through verbal and expressive cues, and to Keepon, which can display extreme happiness or sadness through sounds and movement. Comparing the two sets of interactions, the researchers found that nearly every participant reported a more meaningful experience with the mistake-prone robots.

“The cognitive biases we introduced led to a more humanlike interaction process,” Biswas added. “We monitored how the participants responded to the robots and overwhelmingly found that they paid attention for longer and actually enjoyed the fact that a robot could make common mistakes, forget facts, and express more extreme emotions, just as humans can.”

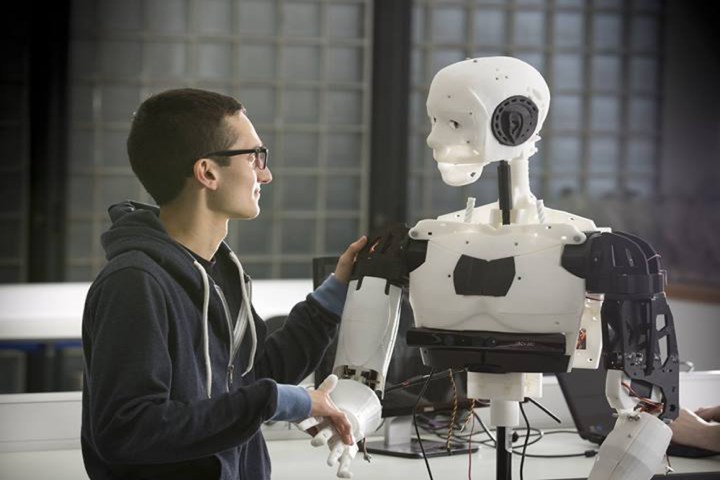

Biswas intends to use the data collected during this study to lay the groundwork for his next robotics research project. In that project, he hopes to assess how interactions with a humanlike robot that exhibits cognitive biases differ from interactions with the robots used in the current study. The working hypothesis is that a robot that more closely resembles a human has an even better chance of developing successful relationships with people. Dr. Murray will once again lend one of his robots to the study, giving Biswas access to his 3D-printed MARC (Multi-Actuated Robotic Companion) robot, pictured at the top of this article.