On that cheery note, here are six of the most likely ways we might spring the techpocalypse on ourselves.

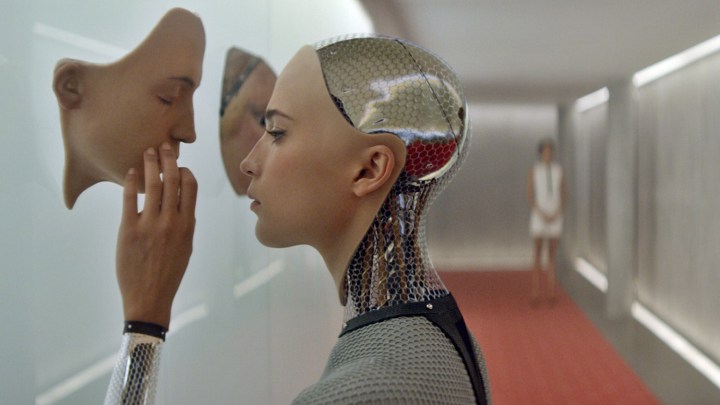

Superintelligent A.I. takes over

The idea of superintelligence and the technological singularity rests on the assumption that A.I. could one day possess abilities greater than our own. Unlike humans, who are limited by biological evolution, machines could then improve and redesign themselves at an ever-increasing pace, becoming smarter all the time. At that point, enormous changes would inevitably take place in human society, some of which could pose an existential risk to humankind.

It’s impossible to predict how an entity more intelligent than us would behave, but the results could be anything from machines wiping out humanity, Terminator-style, to machines enslaving the world’s population. Heck, combine artificial intelligence with nanotechnology and you might get a scenario like…

Transforming the world into grey goo

The words “grey goo” are rarely associated with positive life experiences. This particular hypothesis arose alongside advances in nanotechnology, and it imagines self-replicating nanomachines consuming all the matter on our planet.

It was first proposed by nanotechnology expert Kim Eric Drexler in his 1986 book Engines of Creation, in which he writes: “Imagine … a replicator floating in a bottle of chemicals, making copies of itself… The first replicator assembles a copy in one thousand seconds, the two replicators then build two more in the next thousand seconds, the four build another four, and the eight build another eight. At the end of 10 hours, there are not 36 new replicators, but over 68 billion. In less than a day, they would weigh a ton; in less than two days, they would outweigh the Earth; in another four hours, they would exceed the mass of the sun and all the planets combined – if the bottle of chemicals hadn’t run dry long before.”
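Drexler's figures come straight from repeated doubling. The short Python sketch below simply re-runs his arithmetic under the quote's own assumption that every replicator builds one copy per 1,000 seconds. The function names are made up for illustration, and the sketch ignores any limit on raw materials. It shows why 10 hours yields tens of billions of replicators rather than 36.

```python
SECONDS_PER_HOUR = 3_600
CYCLE_SECONDS = 1_000  # from the quote: each replicator builds one copy per 1,000 s


def doublings_in(hours: int) -> int:
    """Number of complete 1,000-second copy cycles that fit in `hours`."""
    return hours * SECONDS_PER_HOUR // CYCLE_SECONDS


def replicators_after(hours: int) -> int:
    """Total replicators, starting from one, if every replicator copies itself each cycle.

    Assumes unlimited raw materials, which Drexler notes would not hold in practice.
    """
    return 2 ** doublings_in(hours)


cycles = doublings_in(10)
print(cycles)                 # 36 cycles: the "36" that adding one replicator per cycle would give
print(replicators_after(10))  # 68719476736, i.e. "over 68 billion"
```

The gap between 36 and roughly 68.7 billion is the gap between adding one replicator per cycle and doubling the whole population every cycle.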

The idea was shocking enough that it prompted Prince Charles, then heir to the U.K. throne, to ask the Royal Society to investigate it. Right now, the technology for self-replicating nanobots doesn’t exist. But, hey, there’s always tomorrow!

Depleting our planet’s natural resources

A combination of growing human populations and controversial new ways of extracting our planet’s natural resources, such as fracking, could add up to disaster. There is a worldwide push toward renewable energy sources, and scientists are showing ever-increasing interest in colonizing other planets. Even so, the possibility of depleting our natural resources is certainly a risk factor to be wary of.

Massive cyber attack

This one isn’t so much about physically destroying the world as it is about toppling governments, crashing economies, and generally obliterating society as we know it. The large-scale hacks of recent years make it pretty clear just how destructive giant, coordinated hacking attacks can be.

A major cyber attack could sabotage water treatment plants, switch off our power, and bring travel to a shuddering halt. It could also leak sensitive classified information, wipe trillions of dollars off stock markets, drain personal bank accounts, or take down telecommunication networks.

Add in humanity’s tendency to loot and pillage once the veneer of polite society is gone, and you wouldn’t need a James Bond-style villain holding the world’s nuclear weapons to ransom for this to cause unimaginable chaos around the globe.

Experimental technology goes wrong

With projects such as CERN’s Large Hadron Collider (LHC), the super-powered particle accelerator on the Franco-Swiss border near Geneva, and the Relativistic Heavy Ion Collider (RHIC) at New York’s Brookhaven National Laboratory, which collides heavy ions and spin-polarized protons, scientists hope to unlock some pretty deep secrets of physics, such as the nature of matter and dark energy.

Fueled in part by techno-thriller writers, this research has raised plenty of concern about doomsday scenarios, such as the accidental (or deliberate) creation of a man-made black hole, an unstable “strangelet” particle, the decay of a false vacuum, or something else entirely.

The chance of this happening isn’t zero, but the odds are very, very, very long. Our favorite quip about this came from Frank Close, professor of physics at the U.K.’s University of Oxford, who likened one such scenario to the odds of “winning the major prize on the lottery three weeks in succession.” The problem, he said, is that people believe it is possible to win the lottery three weeks in succession.

We go to war using high-tech weapons

Sadly, this is the one entry on the list that is already possible from a technological standpoint. Mankind currently has the ability to end pretty much all life on Earth, courtesy of its enormous stockpile of nuclear weapons. The two largest nuclear powers are the U.S. and Russia, which have around 15,000 warheads between them.

More recently, another threat has arisen in warfare: fully autonomous weapons systems. Referred to as the “third revolution in warfare” in a 2017 open letter signed by Elon Musk of Tesla and Mustafa Suleyman of Google’s DeepMind, autonomous weapons threaten to let armed conflict be fought at a greater scale than ever, and at timescales faster than humans can comprehend.

Remember the 1983 movie WarGames? Picture that scenario, with less Matthew Broderick and more advanced weaponry.