Apple rolled out a bunch of features with iOS 16. These include improvements to system apps like Messages, Maps, and Safari, a customizable lock screen, and more. But one of the most entertaining features is the ability to automatically remove the background from images, giving you a Photoshop-like edit with just a couple of taps.

It’s an intriguing feature that builds on years of AI research and development. You can lift the subject from the background of an image by touching and holding it, then pasting it into Messages, Telegram, Safari, and other apps. I tried the feature on 10 different photos. Some were captured with a DSLR, others with a smartphone camera, with and without portrait mode. I tested images of objects, pets, and people, several of them taken in challenging conditions. The results speak for themselves.

Objects

The first image is of the Sony WF-1000XM4 earbuds. It was taken with a DSLR, with the earbuds and their case in focus and the packaging blurred. I tapped and held on the packaging, and the system was smart enough to recognize the subject in focus. The AI precisely removed the background, leaving clean edges where there was sufficient light, but it struggled with the shadows of the case and earbuds.

I chose this second image because I captured it in portrait mode on the iPhone, which gives the picture an artistic look. iOS 16 correctly identified the subject and lifted the phone together with my hand. While I would have liked an option to select just the phone without my hand, it did a fine job with the edges. No complaints here.

Pets

Our Section Editor, Joe Maring, sent me this image of his dog in sharp focus against a distant background. I tapped on the dog to lift him off the background, and it worked on the first try. It is worth noting that, along with the outline of the dog, the edges of his belt were also cleanly separated from the background. Overall, an impressive job.

While the AI did a good job in well-lit scenarios, Maring and I pushed the limits with this image of his cat sitting by the window. The image is challenging for two reasons. First, it’s indoors, so some areas around the cat are poorly lit. Second, sunlight falls on the cat’s head, which could have confused iOS 16. But it didn’t. Apple’s new feature separated the cat from the background with precise edges, even in dimly lit areas like his tail.

Next up is another photo of a cat indoors, but this time with a person petting it. We were worried that iOS 16 would pick up objects in the background along with the subject; instead, it picked up the human hand. It would have been much better to have an option to erase the parts of the image we didn’t want, something we hope Apple is working on. The end result certainly isn’t bad, but it shows there’s still room for improvement.

People

I noticed that it was far easier for iOS 16 to lift the subject from the background when the image was captured in portrait mode. For instance, this photo was taken in portrait mode on the iPhone 12. iOS 16 did a decent job of preserving the edges, even around the hair. But, for some reason, it created a blooming effect near the bottom of the cutout. Portrait mode may have blurred that area, but it’s a strange anomaly nonetheless.

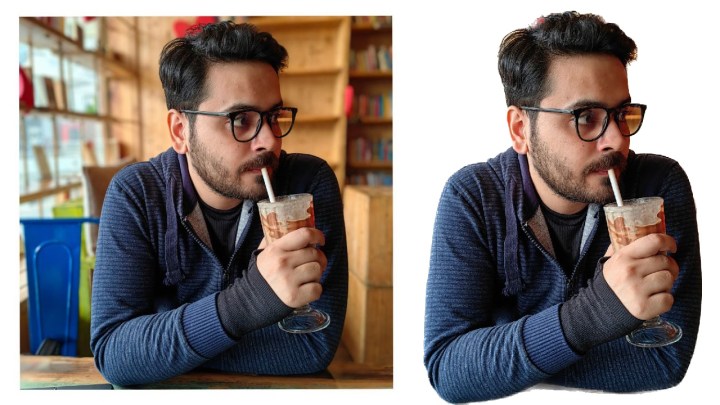

Here, iOS 16 did a decent job of removing the reflection of a person’s face in the background. But if you are sitting with someone and both of you are at the same distance from the camera, it’ll pick up both of you. For instance, I tried to lift just myself from this image, but the arm of the person sitting beside me came along even when I tapped on myself. This image wasn’t taken in portrait mode, but iOS 16 still did a decent job with the edges.

This image was captured on the Huawei P50 Pro, which has one of the best portrait modes on any smartphone. Even so, its portrait mode was tricked by the area near my glasses and hair. iOS 16 used the portrait mode data to cut out most of the edges very cleanly, but it was fooled in the same areas where the camera struggled. The edges around the hair could have been much better.

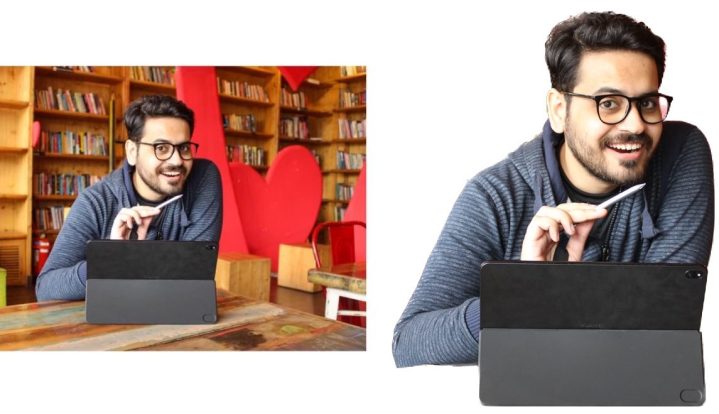

By contrast, this image was taken with a DSLR, and iOS 16 was smart enough to keep the laptop along with me. It handled the hair much better than in the previous image, cutting around the edges without picking up unwanted background.

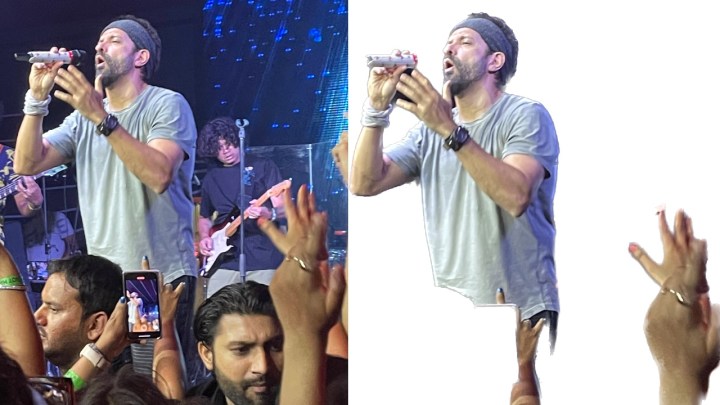

One area where iOS 16’s background remover struggles is crowds. The photo above is a great example. It was taken on the iPhone 12 during a dimly lit concert. While it did a decent job around the face, it couldn’t distinguish between the subject and the various hands in the crowd. Again, it would be nice if Apple gave us an option to erase unwanted objects and fix these small mistakes. But there’s only so much you can do with a tap and hold, which makes us miss 3D Touch even more.

Off to an incredible start

Overall, iOS 16’s background remover does a brilliant job in well-lit scenarios. It cleanly separates pets and objects from their backgrounds, but Apple seems to rely heavily on portrait mode data to lift the subject. As a result, when an image isn’t taken in portrait mode, or something is blocking the subject, iOS 16 can get confused.

While these are valid complaints, it’s important to keep them in context. This is the first version of Apple’s background remover, in the first developer beta of iOS 16. With iOS 16 not expected to launch officially until September or October, Apple has months to tweak and refine the feature before its public debut. Considering how good the feature already is at such an early stage, it’s exciting to think where it’ll be in a few months’ time.