As announced via the official Google Photos Twitter account on March 15, users of Google Photos for iOS can now use Google Lens to analyze and extract text, hyperlinks, and other information from photos.

Originally announced at Google’s I/O event in 2017, Google Lens uses machine learning to extract text and hyperlinks from images, identify landmarks from around the world, and perform a host of other promised tasks. It first launched on Google’s Pixel phones at the tail end of 2017 before rolling out to all Android phones in March 2018. As of today, March 16, iOS users can also use Google Lens through the iOS Google Photos app.

Starting today and rolling out over the next week, those of you on iOS can try the preview of Google Lens to quickly take action from a photo or discover more about the world around you. Make sure you have the latest version (3.15) of the app. https://t.co/Ni6MwEh1bu pic.twitter.com/UyIkwAP3i9

— Google Photos (@googlephotos) March 15, 2018

Anyone looking to play with Google Lens should make sure that their Google Photos app is updated to the latest version (3.15). Then open the app, open a photo, and tap the Google Lens logo. If you’re struggling to find it, Google has posted a small guide on its support website. Some Twitter users have complained that they cannot yet access the feature, which suggests the update is still rolling out worldwide. It’s also worth noting that, for the time being, Google Lens can only be used if your iOS device’s language is set to English.

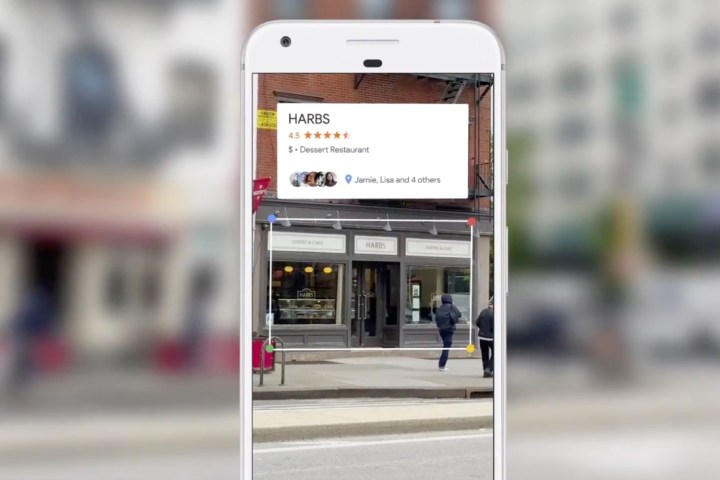

But what can you do with Google Lens? It’s capable of extracting text from your Google Photos, and while that may not sound impressive, it’s then able to use that text to find businesses, extract hyperlinks, find addresses, or identify books, movies, and games. If you take a picture of a business card, Google Lens will offer to save the information as a new contact, taking some of the fuss out of business networking. Landmarks can also be identified, and information on ratings, tours, and history will be offered as a result.

Use Google Lens to copy and take action on text you see. Visit a website, get directions, add an event to your calendar, call a number, copy and paste a recipe, and more. pic.twitter.com/E4ww2cxVUd

— Google Photos (@googlephotos) March 15, 2018

The Google Photos account has been sharing more than a few ways to make Google Lens work for you. While the fact that it’s currently restricted to the Google Photos app on iOS makes it a bit harder to use in everyday circumstances, it’s a really cool addition and a great indication of what the future has in store for us.