The scenario targets a phone that has microphone-equipped headphones plugged into its headphone jack. The attackers use a laptop running the open-source GNU Radio software, a USRP software-defined radio, an amplifier, and an antenna to generate electromagnetic waves. Those waves induce a signal in the headphone wire itself, which the phone treats as audio coming from the microphone. From there, the attacker can control the phone remotely from as far as 16 feet away and ask the digital assistant to perform any action it’s capable of. That includes making calls, navigating the Web, sending texts, and so on.

Hackers could even turn your phone into a listening device to spy on your communications, send the browser to a site with malware, or issue spam and phishing messages through your email and social media accounts. The simple brilliance of the hack shows once again how hackers can help expose problems with some of the most common and trusted technology.

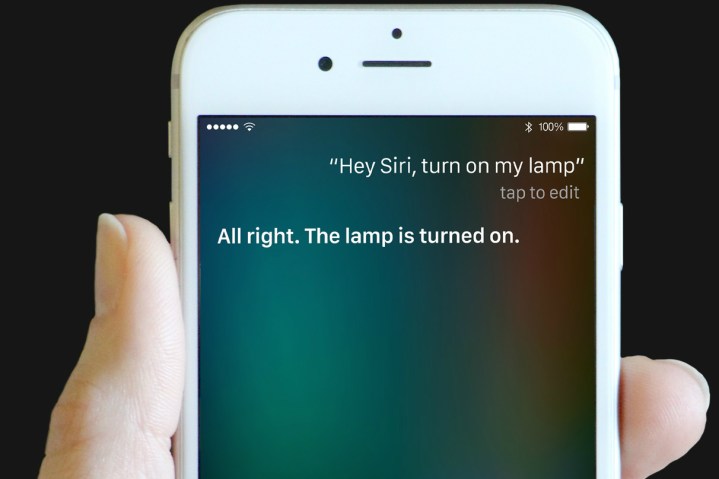

Of course, the hack does have its limits. Hackers can only target phones that have microphone-equipped headphones or earbuds plugged in. It doesn’t work if users don’t have Google Now enabled from their lock screens, or if they have Google Now programmed to respond only to their voice. Because Siri in iOS 9 on the iPhone 6S responds only to the voice of the phone’s owner, the hack won’t work on the new iPhones, either. Additionally, anyone who looks at their phone regularly would probably see unauthorized voice commands being carried out on it, so it’s not exactly a discreet hack.

Regardless, the researchers have pointed out that it’s still a vulnerability that could be exploited easily, especially in public spaces where people congregate.

To protect phones against this kind of hack, the security community frequently recommends that users disable voice-activated assistants on the lock screen, though most people aren’t willing to sacrifice the convenience of the feature. Additionally, the researchers suggest that if Apple and Google allowed users to set their own activation word, as the Moto X does, hackers wouldn’t be able to activate Siri or Google Now unless they knew your specific name or phrase. Of course, that’s something the tech giants will have to consider, not the user. With regard to this particular headphone jack hack, the researchers suggest cords for microphone-equipped headphones with heavier shielding inside.
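To illustrate why a user-chosen activation phrase raises the bar, here is a minimal Python sketch. It is not how Siri, Google Now, or the Moto X actually work: real assistants match audio rather than text, and the function names and the example phrase below are hypothetical. The sketch only shows the idea that a command is ignored unless it begins with a phrase the attacker would have to know in advance.

```python
import unicodedata


def normalize(text: str) -> list[str]:
    """Case-fold the text and split it into words, ignoring extra whitespace."""
    folded = unicodedata.normalize("NFKC", text).casefold()
    return folded.split()


def accepts_command(transcript: str, wake_phrase: str) -> bool:
    """Return True only if the transcript starts with the user's wake phrase.

    Hypothetical helper: `transcript` stands in for whatever text a speech
    recognizer produced, and `wake_phrase` is the phrase the user chose.
    """
    heard = normalize(transcript)
    expected = normalize(wake_phrase)
    if not expected or len(heard) < len(expected):
        return False
    return heard[: len(expected)] == expected


if __name__ == "__main__":
    user_phrase = "hello marmalade"  # assumed example of a custom phrase
    print(accepts_command("Hello marmalade, call mom", user_phrase))
    print(accepts_command("OK Google, call mom", user_phrase))
```

With a custom phrase, an injected command that opens with the default activation words is simply dropped. The protection is only as strong as the secrecy of the phrase, which is the caveat the researchers themselves raise.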

For some time, security advocates have been warning about how hackable our phones’ voice-activated digital assistants are. Recently, an embarrassing hack of the iOS 9 lock screen involved tricking Siri into giving up contacts and other information. That flaw has since been fixed in an update, but the question of voice assistant hackability is still a serious one.