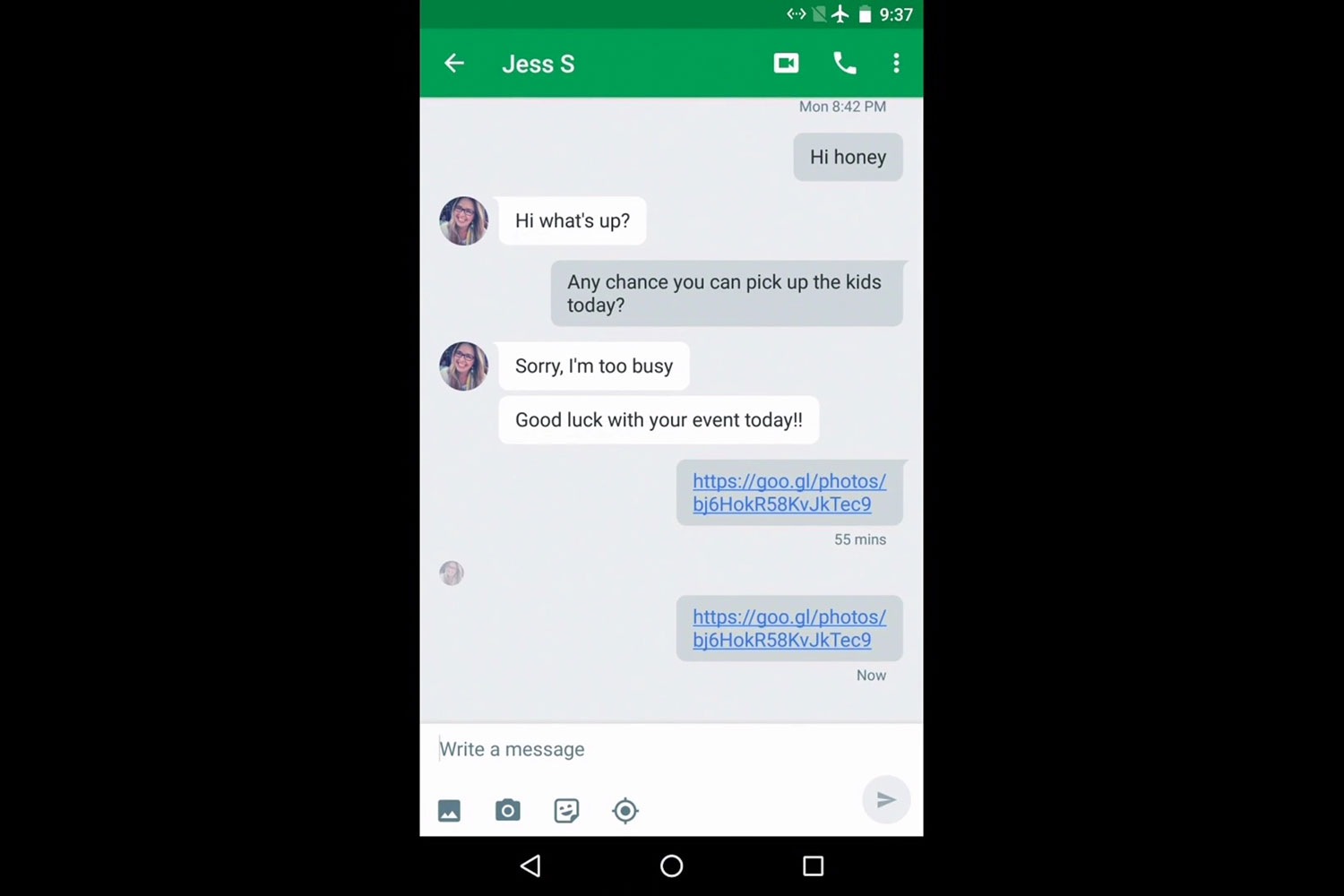

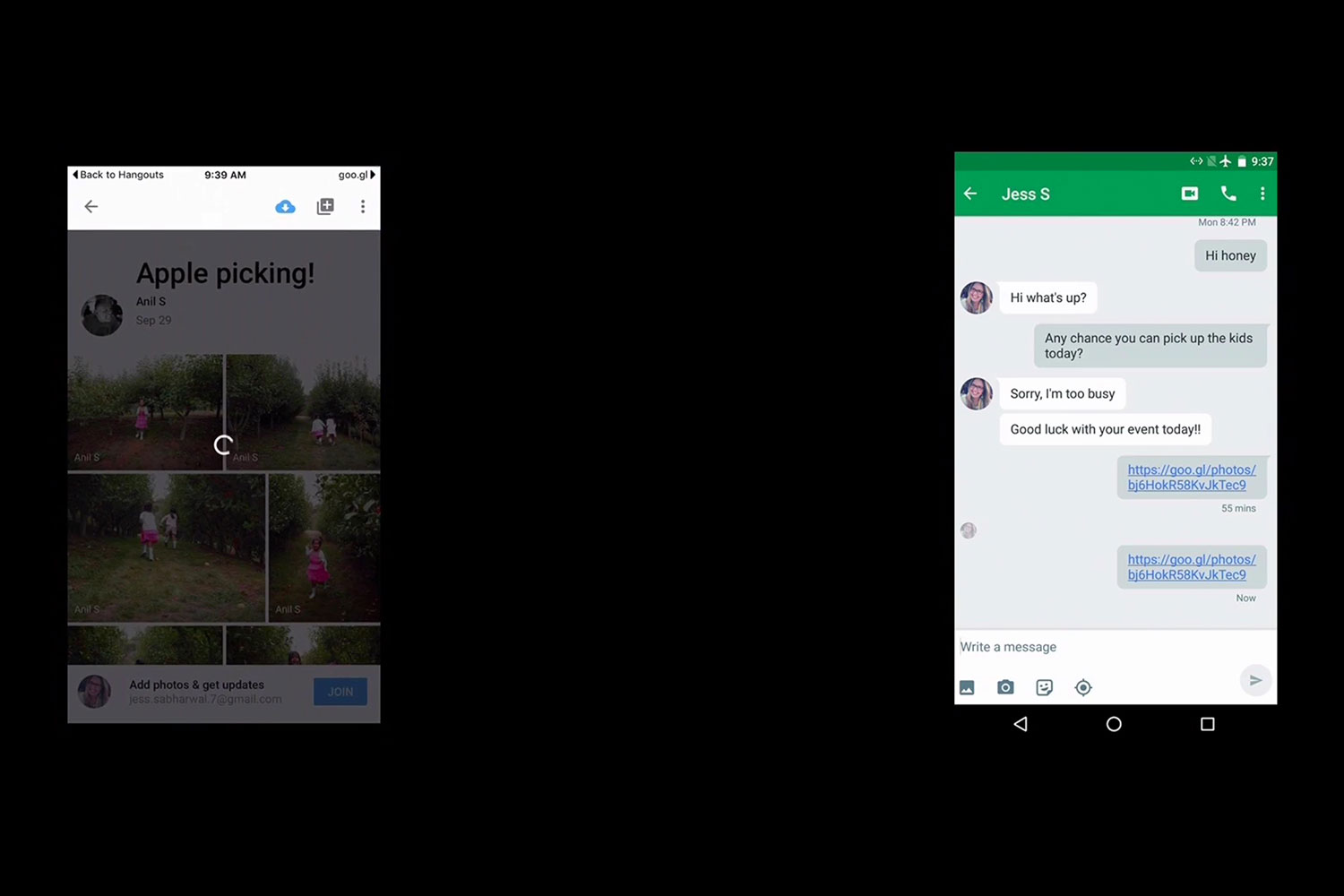

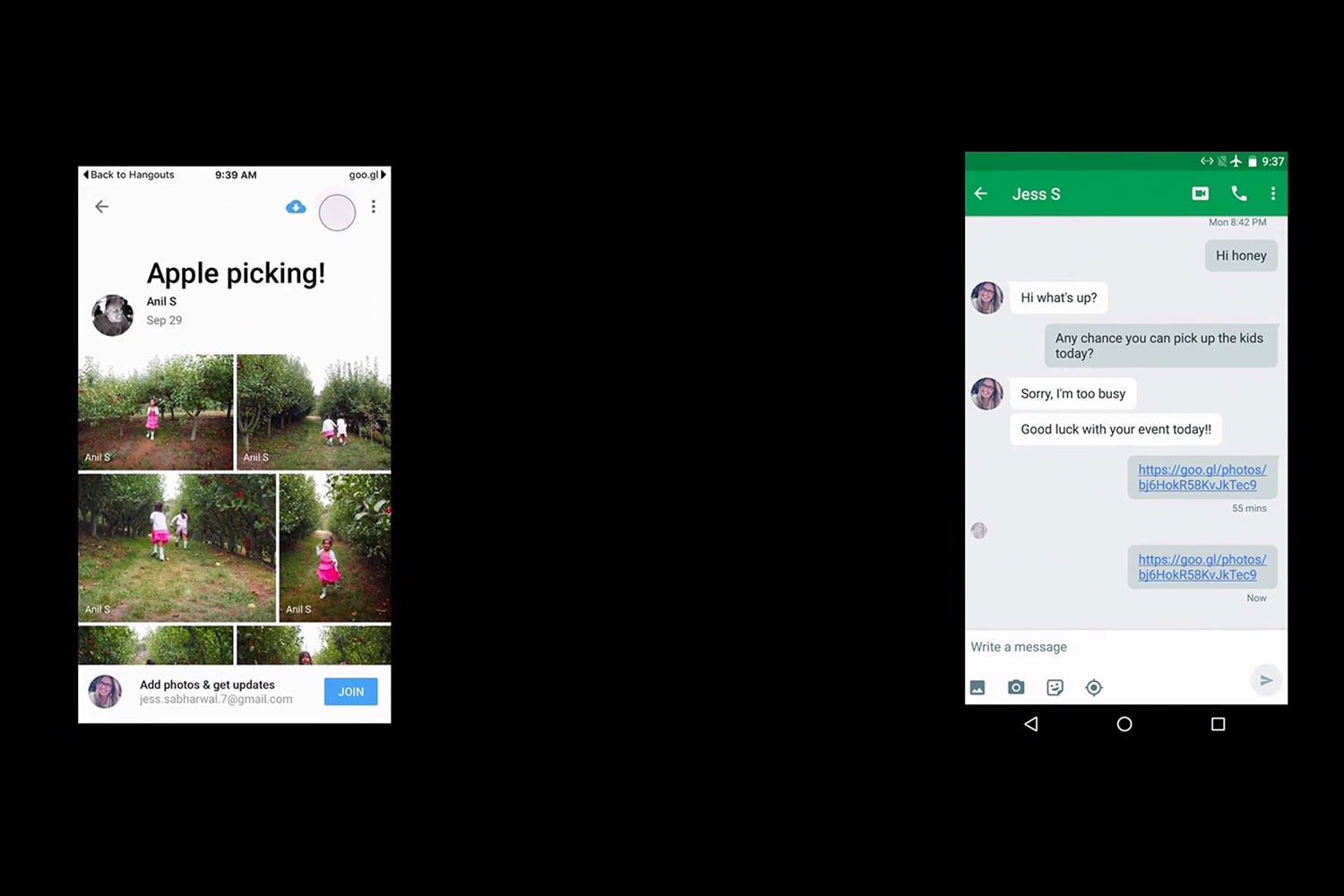

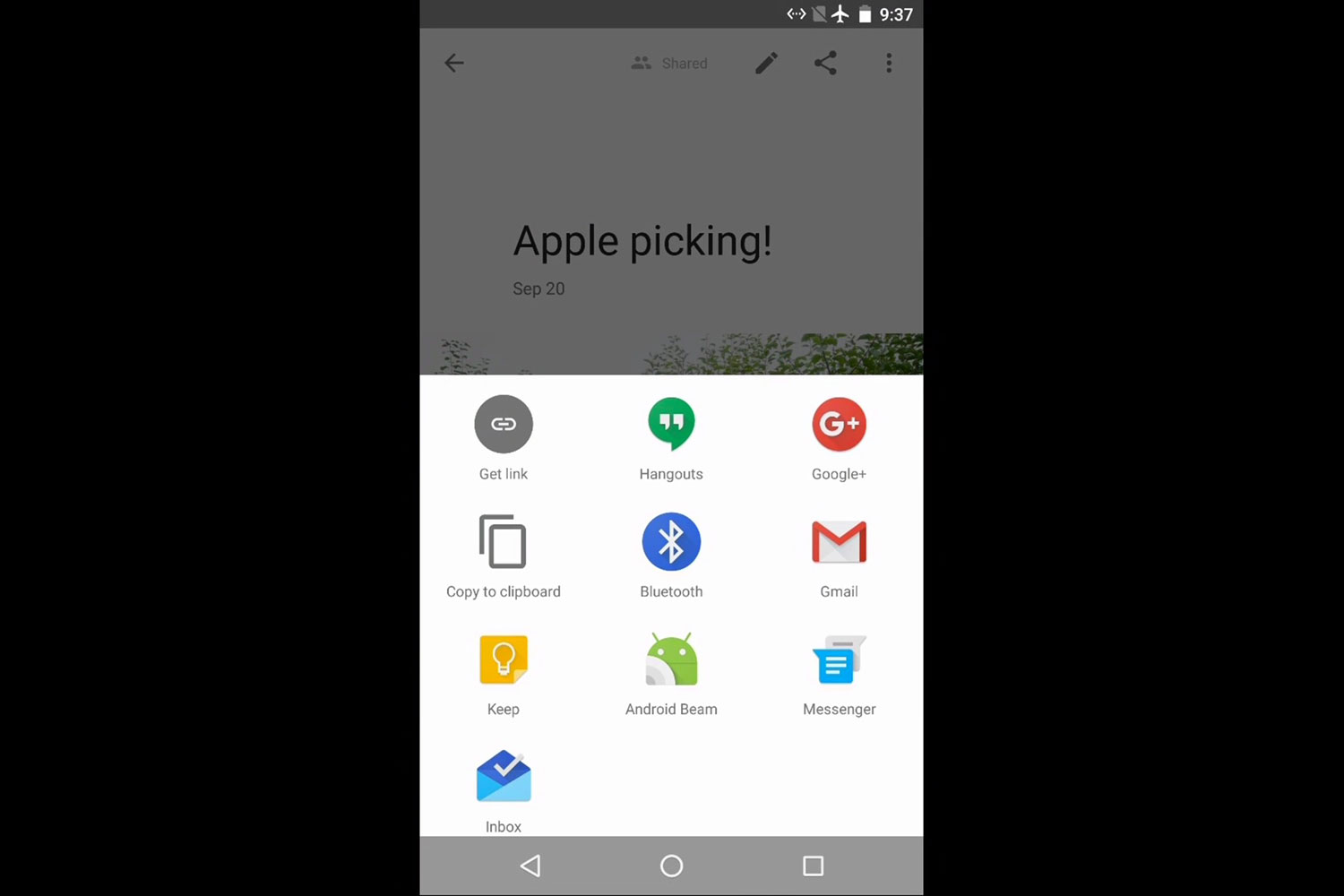

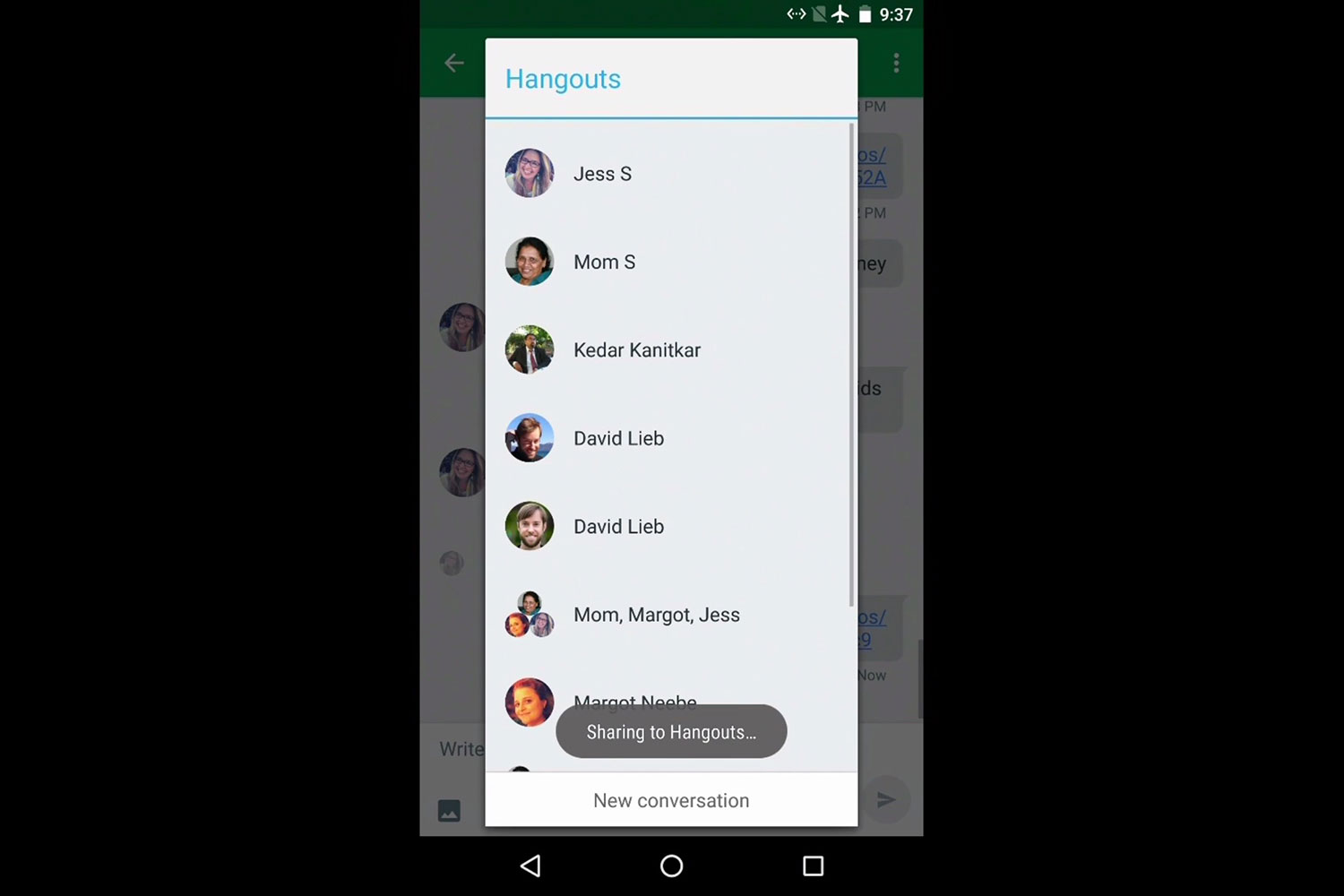

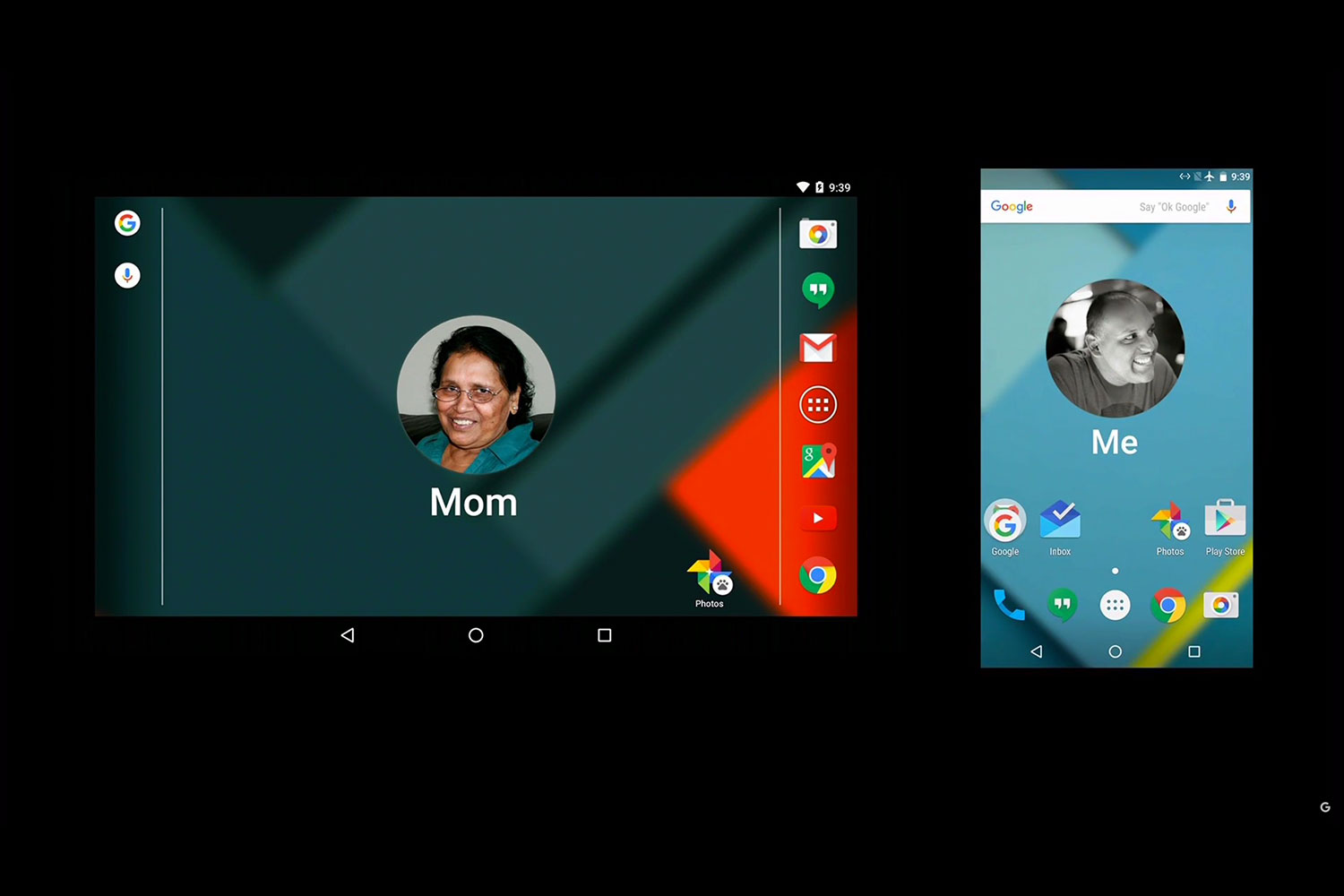

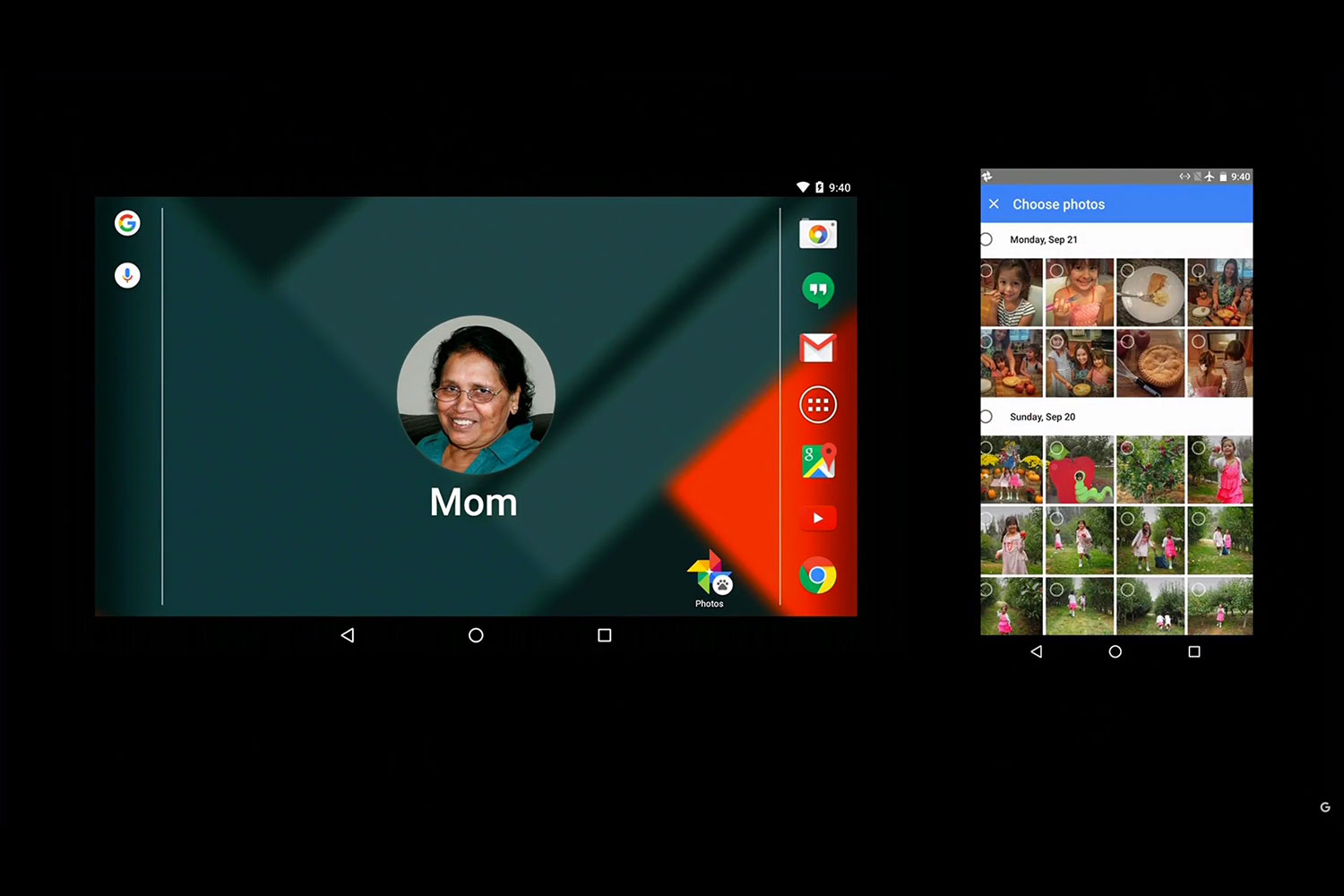

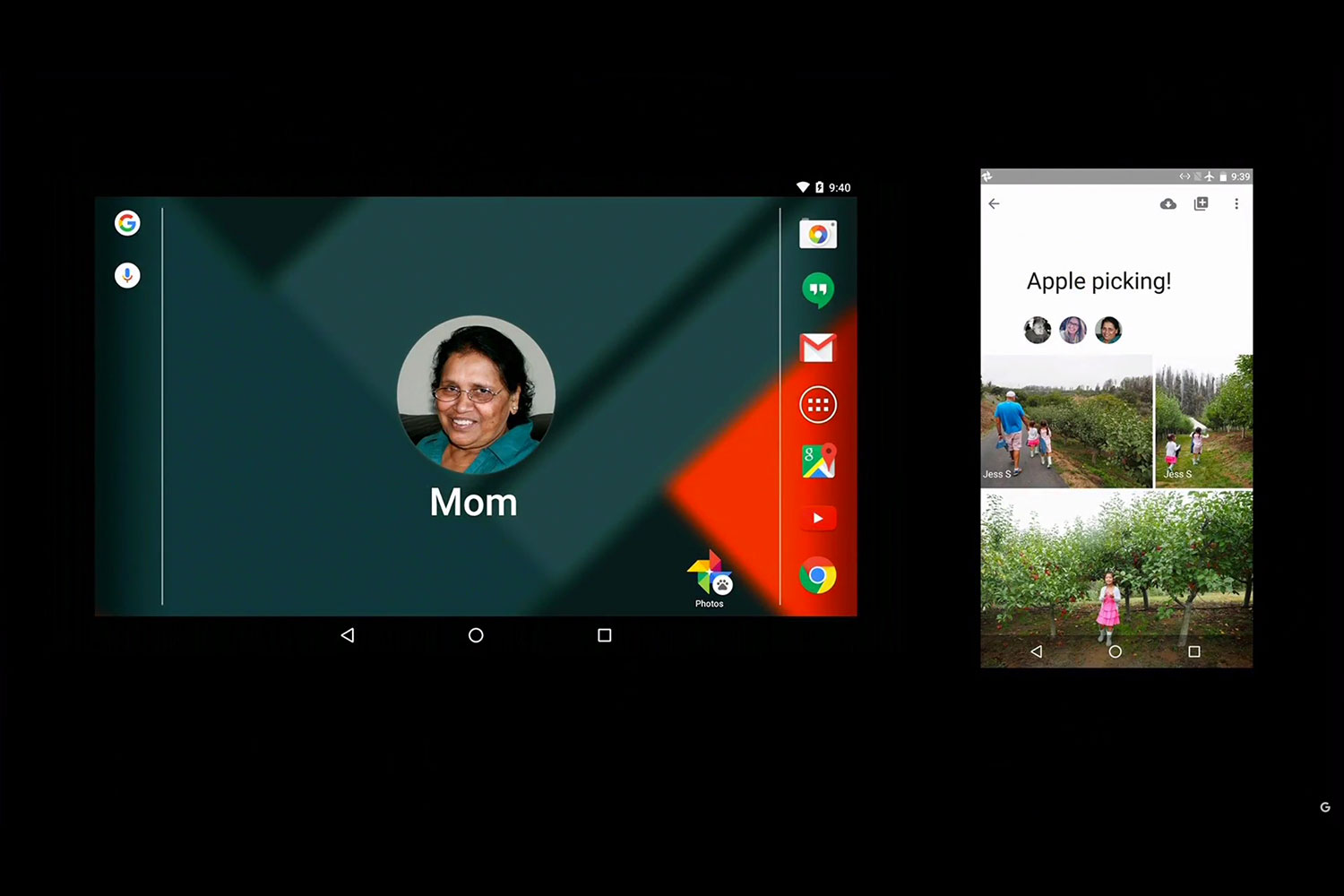

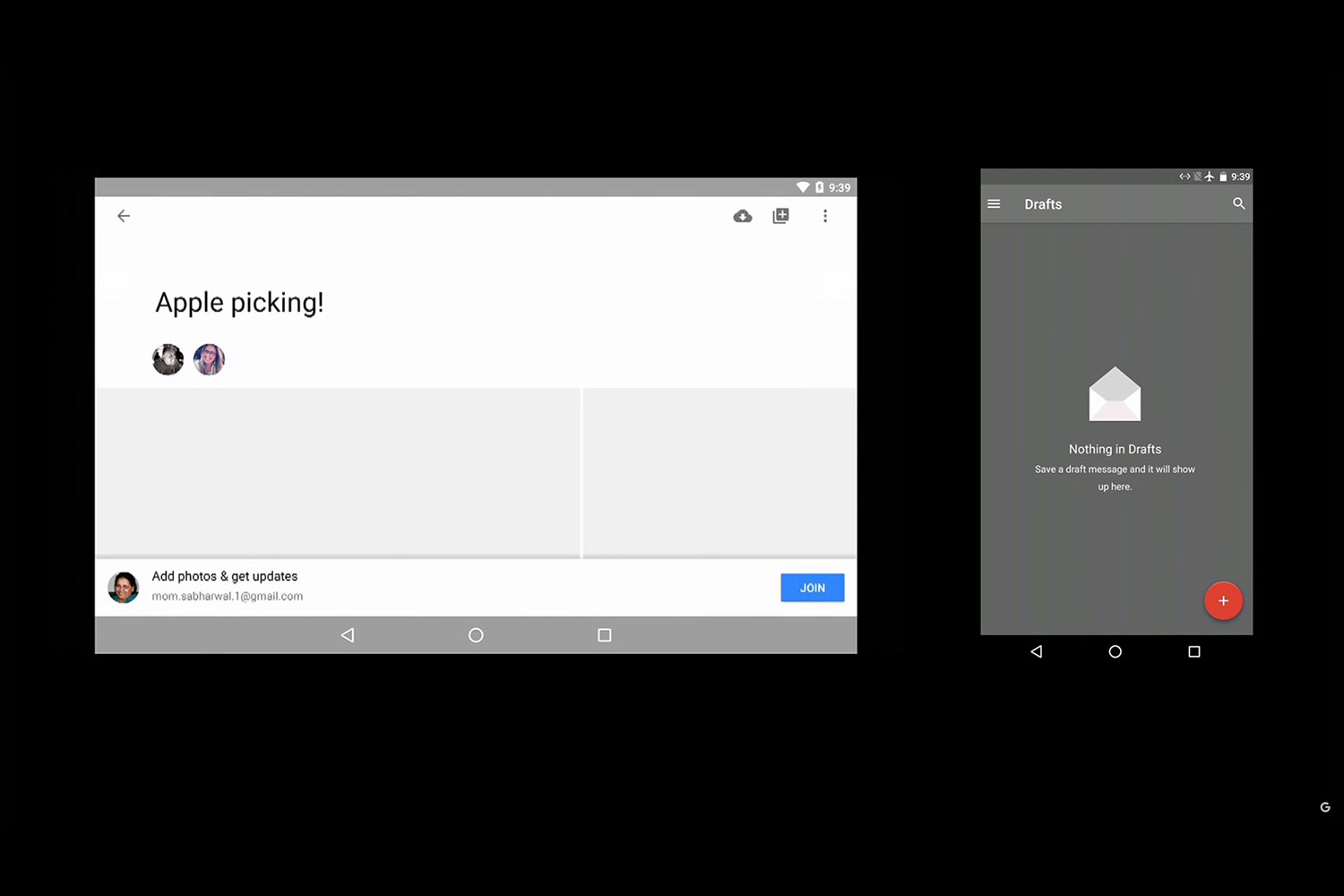

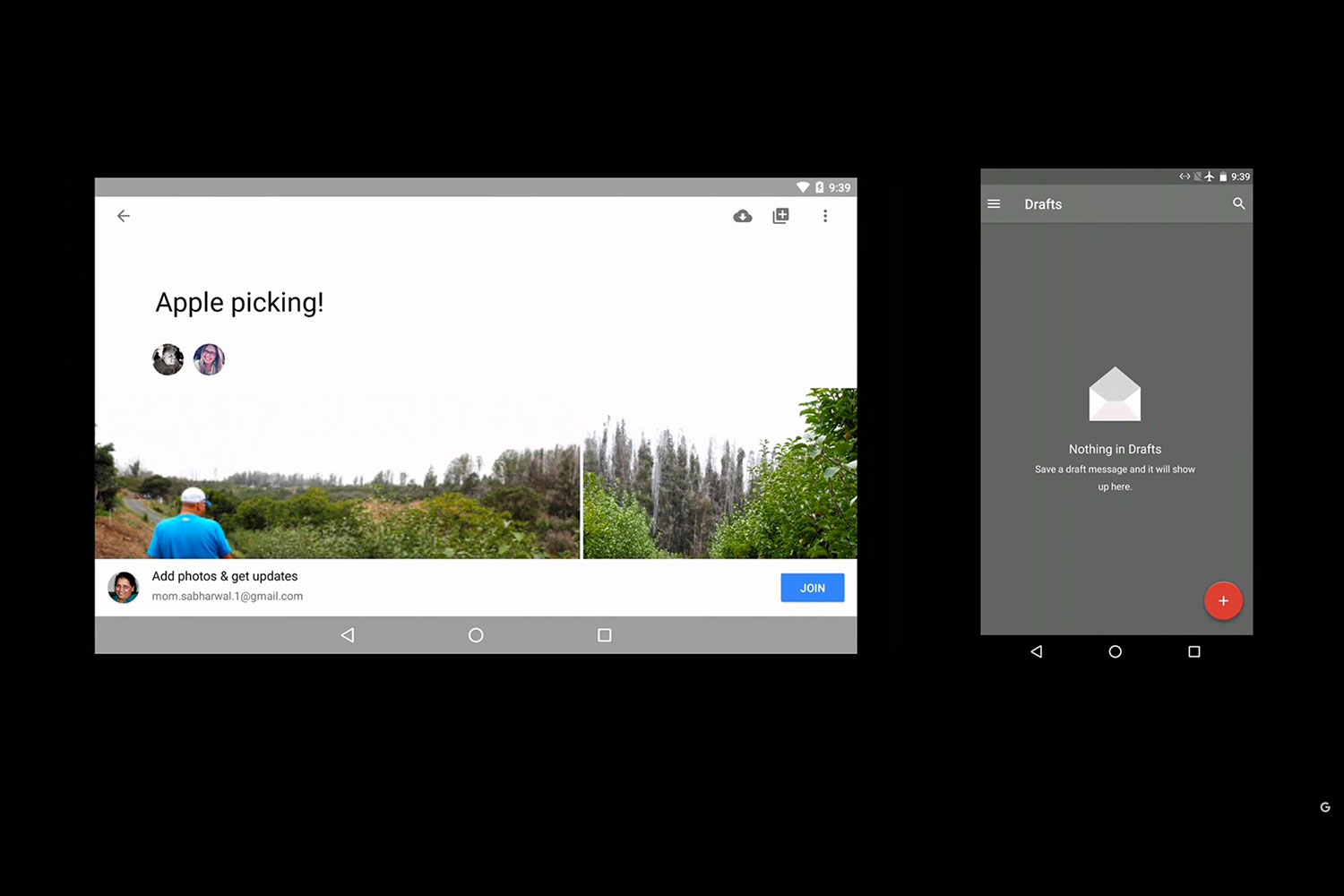

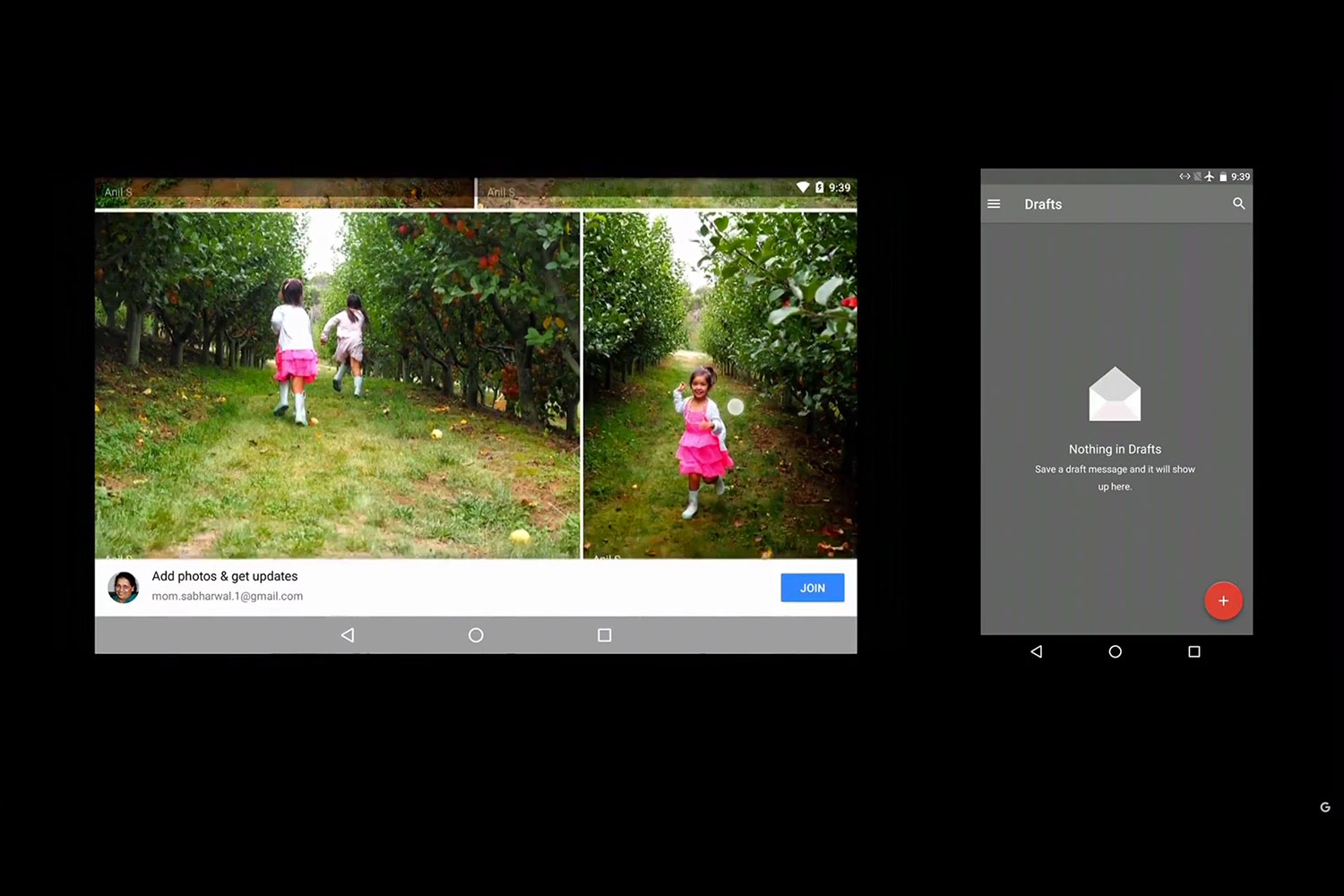

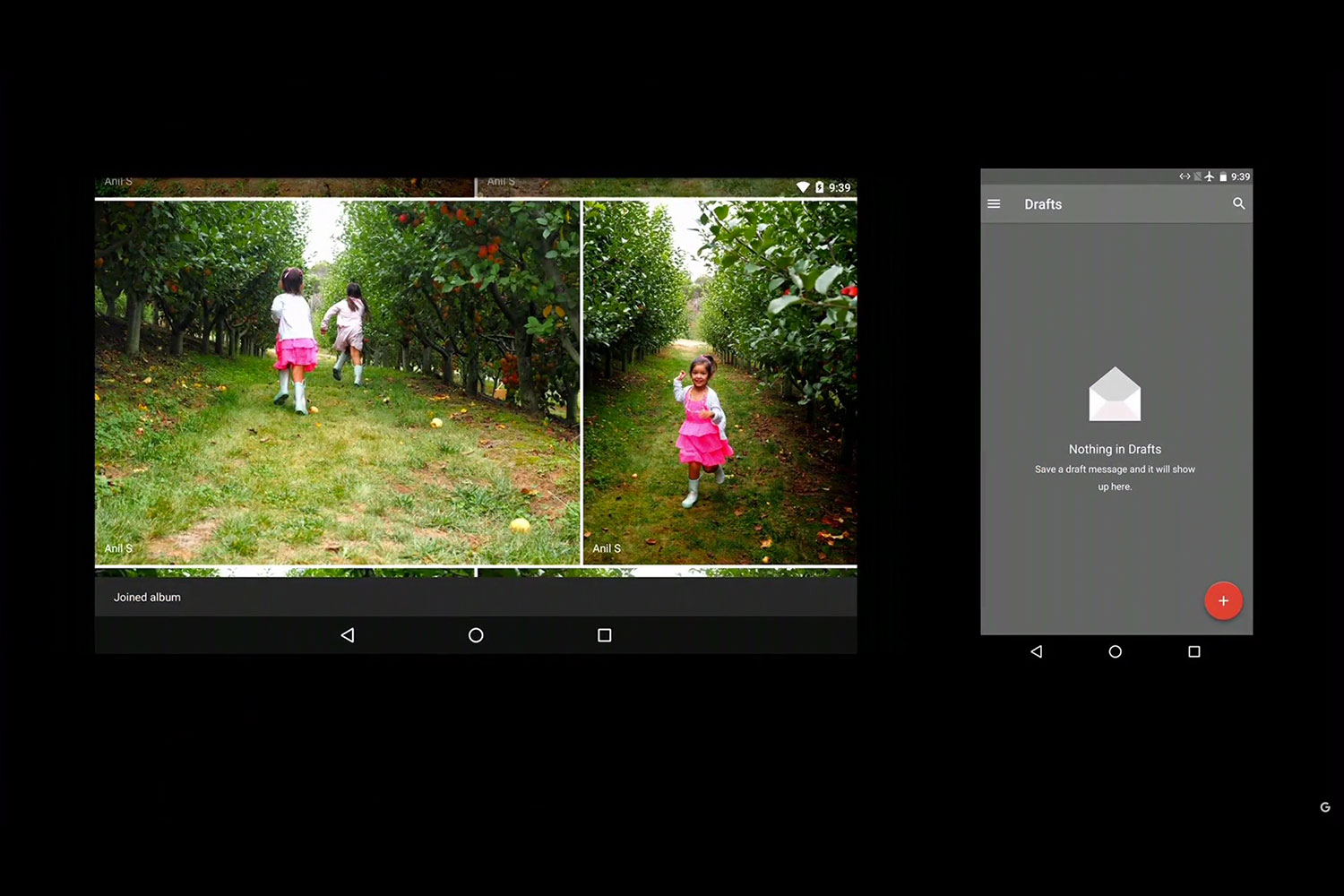

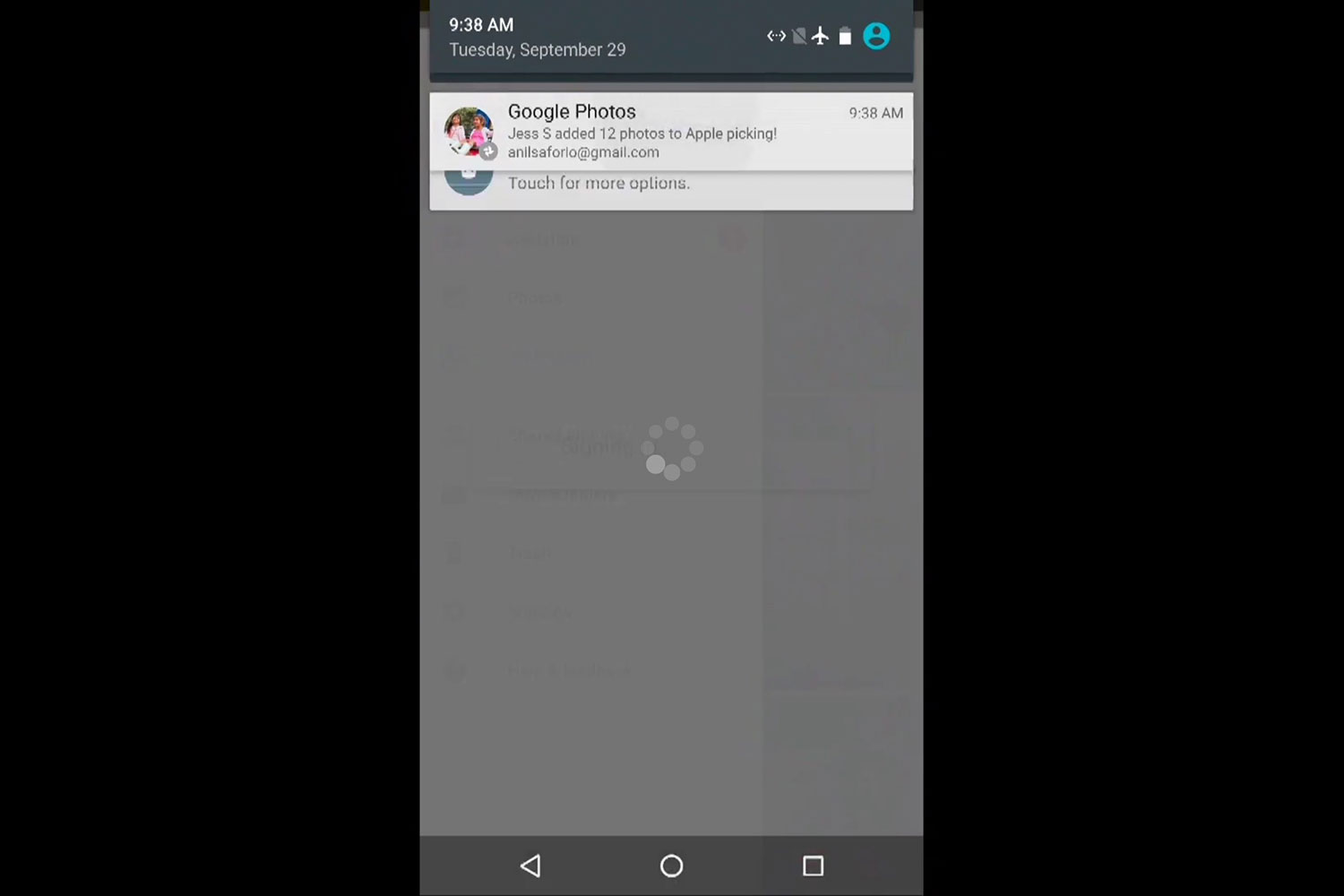

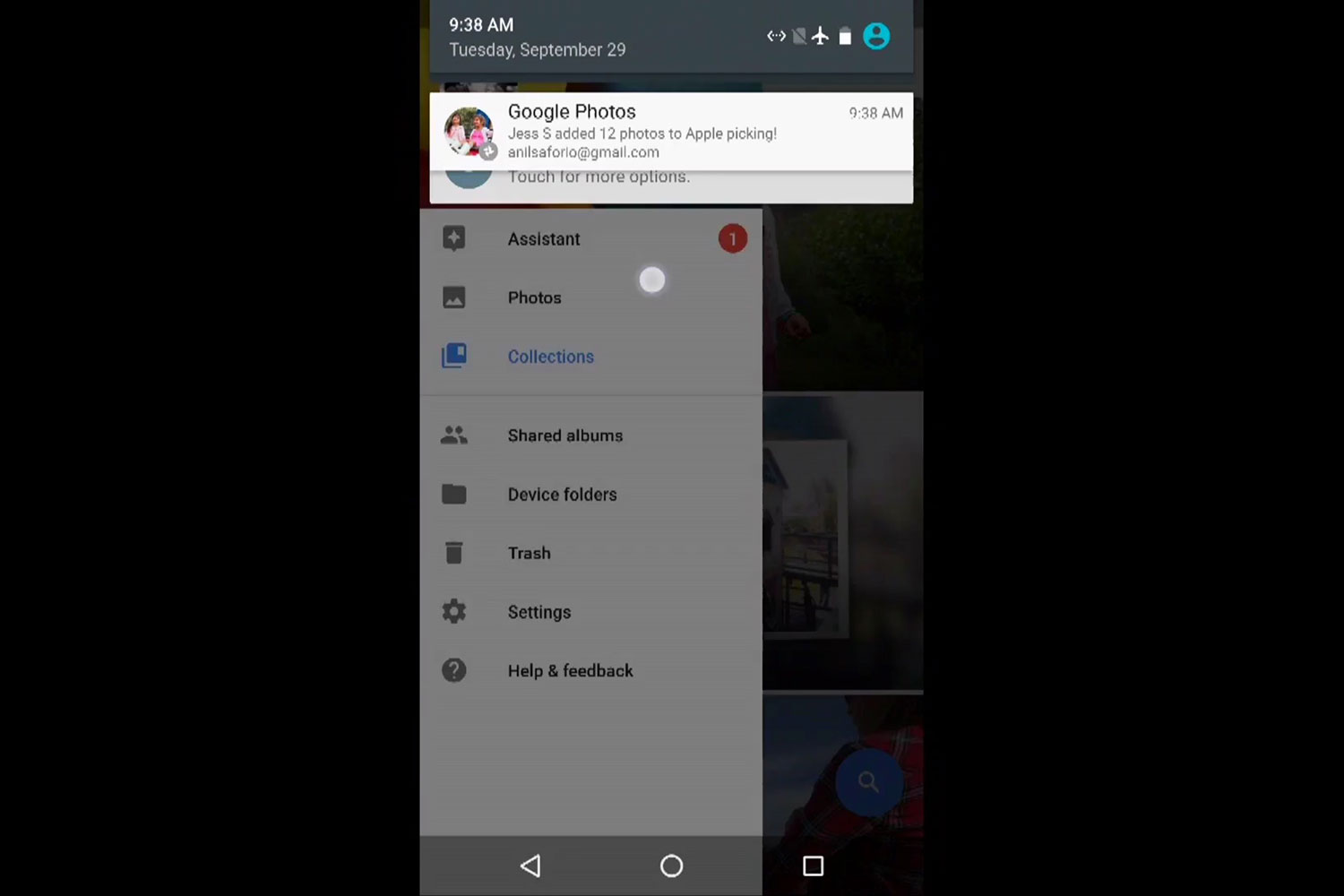

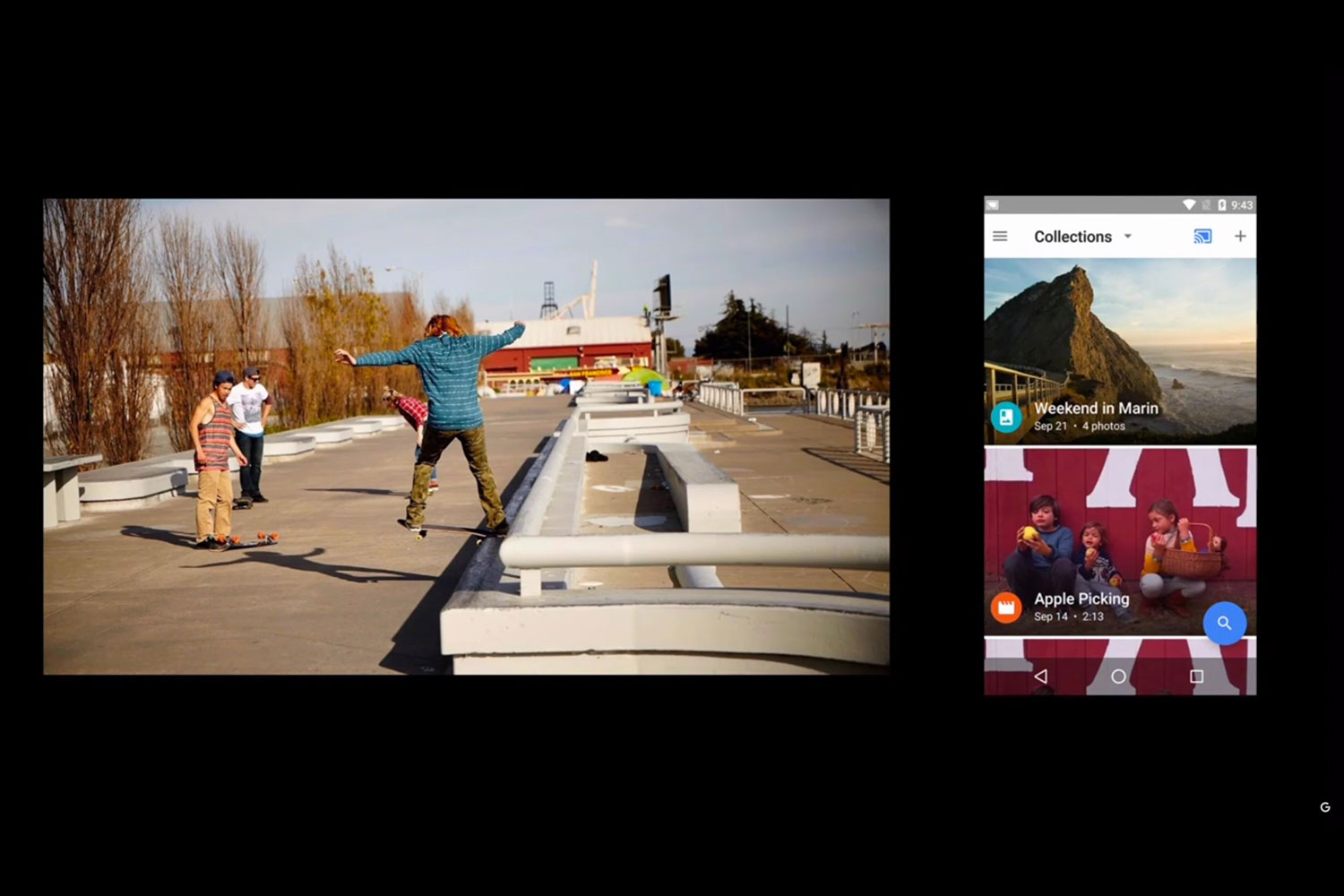

As the name suggests, shared albums let you invite friends and family to add their photos to a particular album: for example, a birthday party that everyone attended, but where different devices were used to take photos and videos. In another example, which Google Photos director Anil Sabharwal demoed on stage, he shared an album with his mother to keep her in the loop about what’s happening with her grandchildren. Whenever photos are added, everyone who’s invited to join the album gets a notification. It’s very similar to photo sharing in Apple’s iCloud, but Google Photos is available on a variety of devices. This feature, which makes Google Photos more social among the people close to you, will roll out later this year.

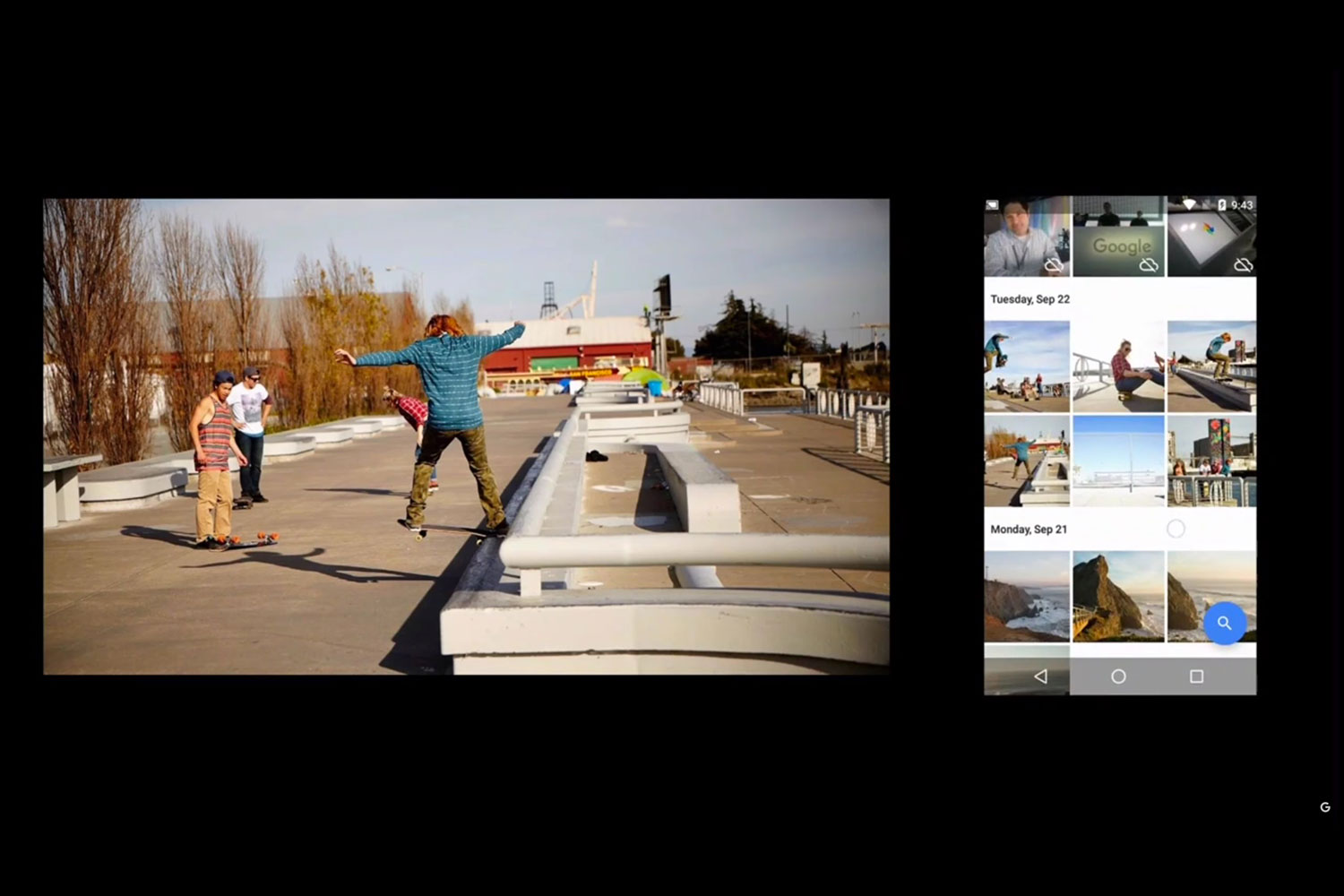

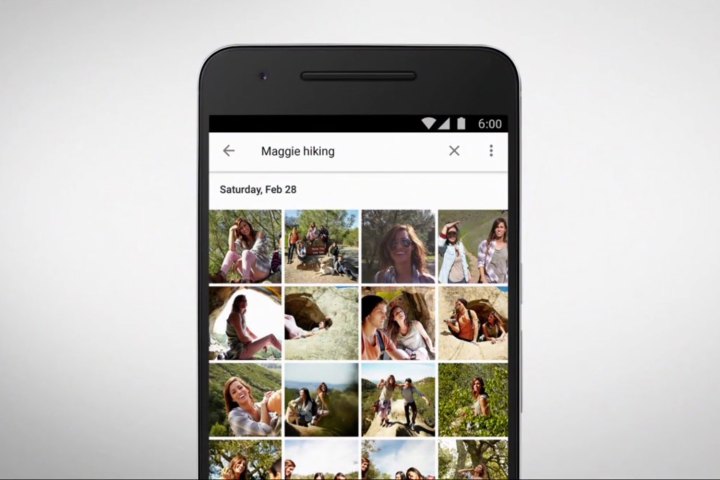

Perhaps more interesting is Google’s continued use of machine learning to better organize and search for photos. You can now label collections of similar faces, and Sabharwal says these labels remain private. The private labels help you refine your searches by combining terms. For example, instead of typing a name and pulling up hundreds of photos of that person, you can type the name along with an activity or event, and Google Photos will return only those photos, using facial recognition and scene analysis. This helps you find exactly what you’re looking for more quickly. The feature is available on Android this week, and will roll out later on the Web and iOS.

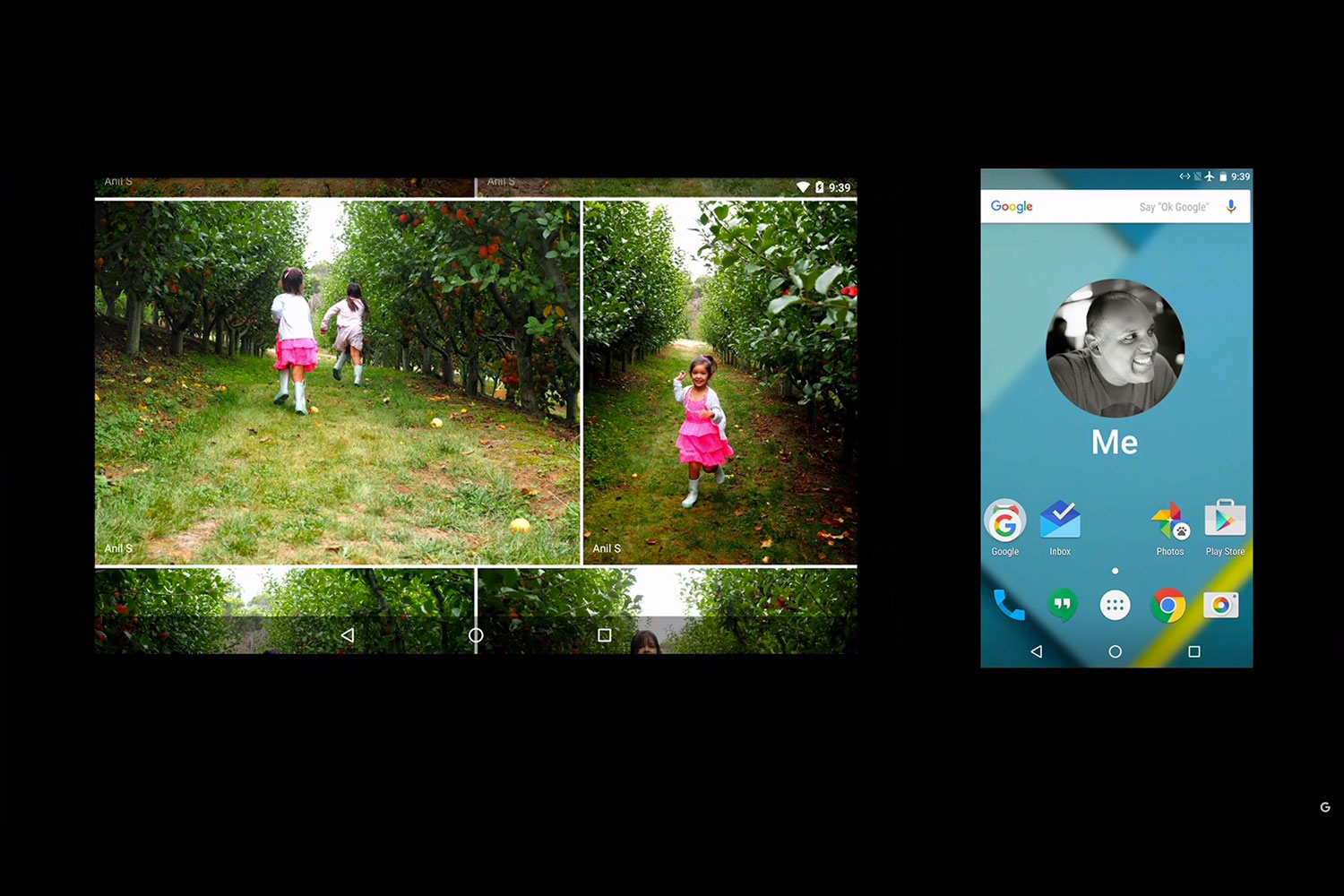

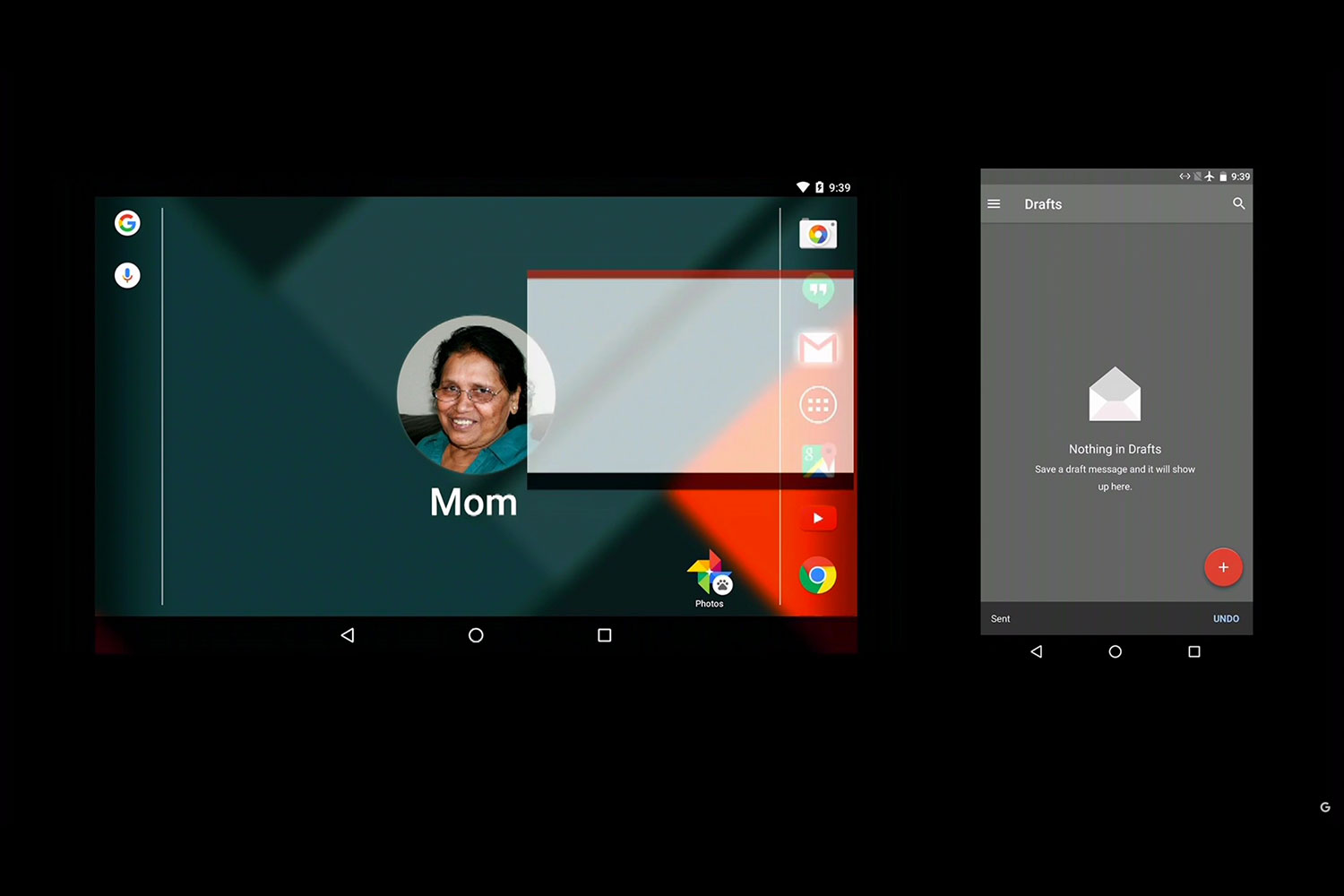

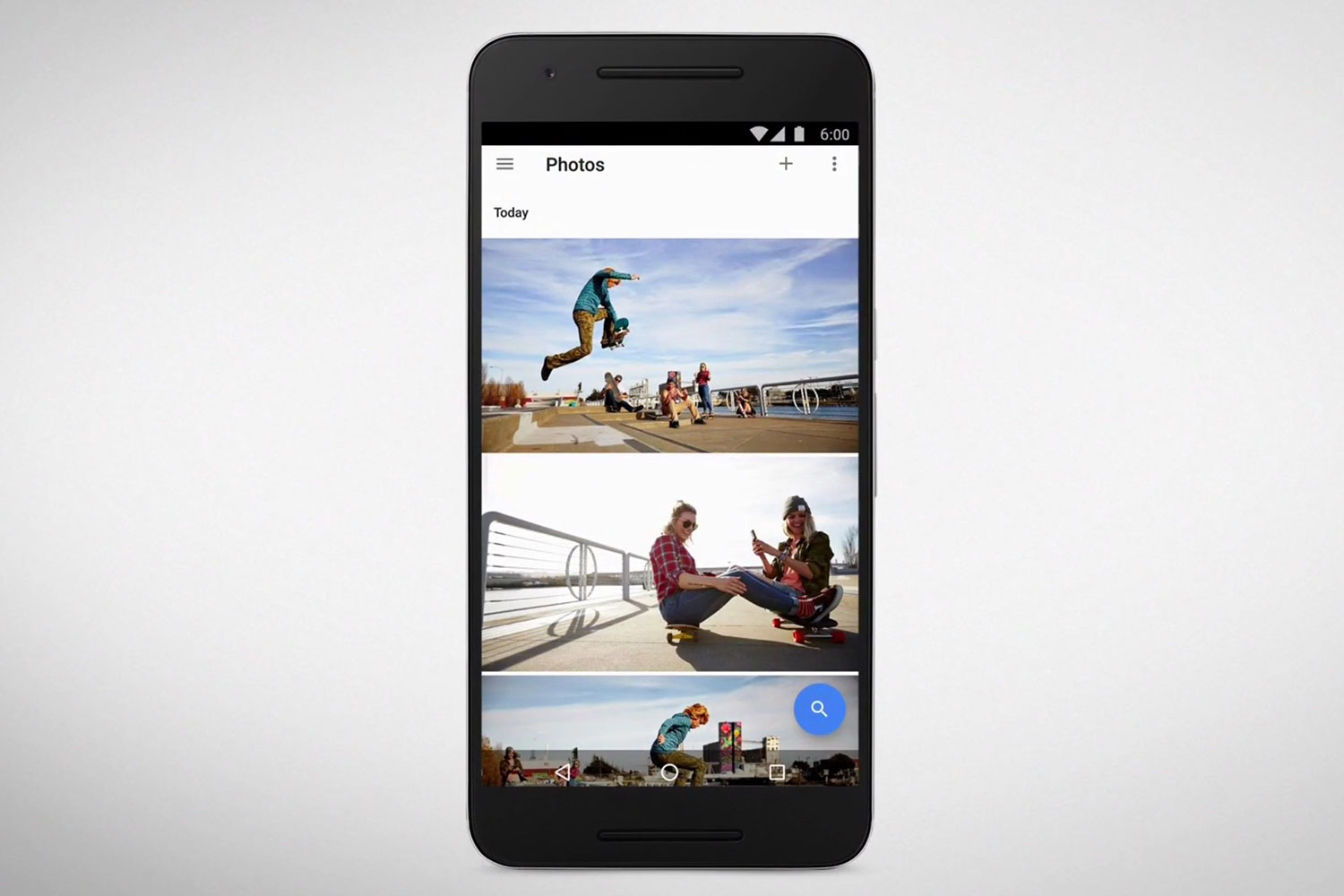

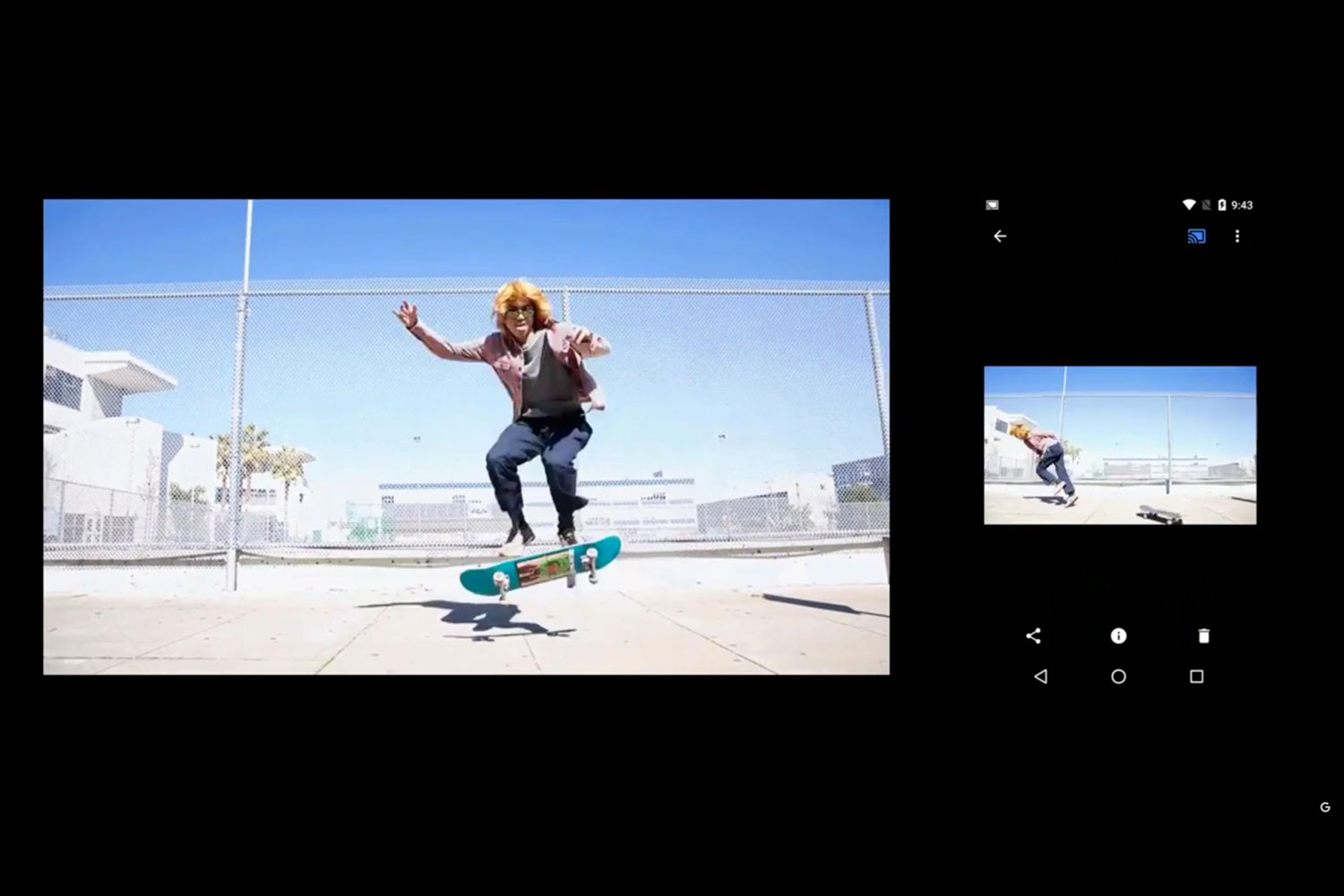

Google Photos will also support Chromecast, with the feature available this week on Android and soon on iOS. The app will automatically detect a nearby Chromecast device on the same network and let you cast photos to a television; you can even cast animated GIFs. A nice touch is that you can cast a photo and leave it on the TV while you search for other photos in the app; because you aren’t mirroring everything you see, you can show only the photos you want to cast, saving private content for your eyes only.

In related news, Google also announced new camera specs and features in the upcoming Nexus 5X and Nexus 6P smartphones. Both use 12.3-megapixel sensors from Sony with 1.55-micron pixels. Compared with the 1.2-micron pixels in the new iPhone 6S and 6S Plus, Google says, these larger pixels collect 92 percent more light. Larger pixels allow for shorter exposure times and less motion blur, says Google’s VP of Android engineering, Dave Burke, without the need for optical image stabilization. This is particularly useful in low-light situations, allowing for less graininess and better tone mapping. Autofocus is also faster, thanks to laser detection. Google didn’t reveal the actual sensor size, but the photos it displayed from an iPhone 6S and a new Nexus phone clearly show the differences. However, Apple is using some interesting technology to deal with the smaller pixels, so we’ll withhold judgment.

Other camera features include slow-motion video (120 frames per second in the Nexus 5X and 240 fps in the 6P; Google says the Google Photos app will let you edit which section to slow down); a “smart burst” mode of 30 frames per second, which can be turned into animated GIFs (this is a bit different from the iPhone 6S’ Live Photos, which records short videos at 15 fps); and a double-tap of the power button to launch the camera.