Photos taken through windows or glass usually have two nearly identical reflections, one slightly offset from the other. Double-paned windows, as well as plain old thick windows, are often the culprits, says YiChang Shih, one of the MIT researchers who developed the algorithm. “With that kind of window, there’s one reflection coming from the inner pane and another reflection from the outer pane,” Shih says. “The inner side will give a reflection, and the outer side will give a reflection as well.”

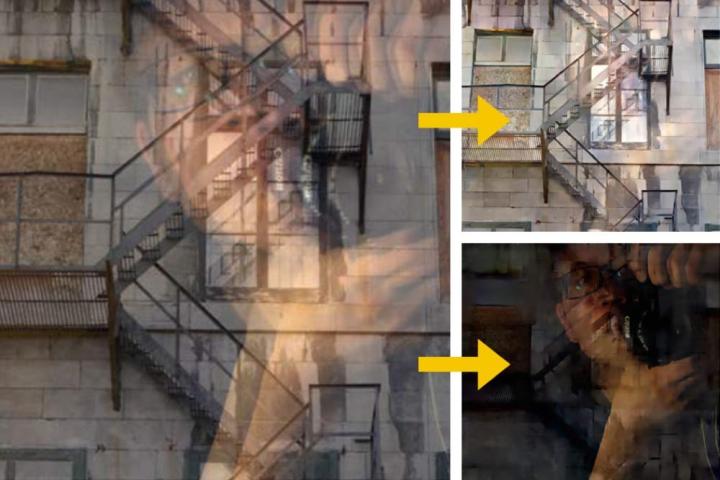

The algorithm treats the captured photo as a mixture of two images, one from outside the glass and one from inside, and tries to separate them so that the photo looks the way it was intended. “You have an image from outdoor (A) and another image from indoor (B), and what you capture is the sum of these two pictures (C),” says Shih, who frames the task as a hard mathematical problem: recovering A and B from C.

“But the value of A for one pixel has to be the same as the value of B for a pixel a fixed distance away in a prescribed direction. That constraint drastically reduces the range of solutions that the algorithm has to consider,” MIT News writes.
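To make the "sum of two pictures" idea and the fixed-offset constraint concrete, here is a minimal sketch in plain Python. It is not the researchers' code. It assumes, for illustration only, that the captured photo is the scene plus a reflection plus a dimmer copy of that reflection shifted by a fixed offset; the names `shift`, `capture`, `dy`, `dx`, and `attenuation` are hypothetical.

```python
def shift(img, dy, dx):
    """Return img moved down dy rows and right dx columns, zero-filled."""
    h, w = len(img), len(img[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            sy, sx = y - dy, x - dx
            if 0 <= sy < h and 0 <= sx < w:
                out[y][x] = img[sy][sx]
    return out


def capture(scene, reflection, dy, dx, attenuation):
    """Simulate the photo: scene + reflection + shifted, dimmer second reflection.

    Assumption (not from the article): the second reflection is an exact
    copy of the first, scaled by `attenuation` and offset by (dy, dx).
    """
    ghost = shift(reflection, dy, dx)
    h, w = len(scene), len(scene[0])
    return [
        [scene[y][x] + reflection[y][x] + attenuation * ghost[y][x]
         for x in range(w)]
        for y in range(h)
    ]


# Toy 3x4 grayscale images: a flat scene and a reflection with one bright pixel.
scene = [[0.25] * 4 for _ in range(3)]
reflection = [[0.0] * 4 for _ in range(3)]
reflection[0][0] = 1.0

photo = capture(scene, reflection, dy=1, dx=2, attenuation=0.5)
for row in photo:
    print(row)
```

The bright reflected pixel shows up twice in `photo`: once at its original position and once, at half strength, one row down and two columns right. A separation algorithm can exploit that regularity, since any candidate reflection has to explain both copies at once.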

The process is really technical, but in short, the algorithm uses a technique developed at Hebrew University of Jerusalem in Israel that analyzes the colors of the images by breaking them into 8 x 8 blocks of pixels, calculating “the correlation between each pixel and each of the others.” Of the 197 images from Google and Flickr that the researchers tested the algorithm on, it worked on 96. (Check out MIT News for a more detailed explanation.)
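As a rough illustration of what "the correlation between each pixel and each of the others" in an 8 x 8 block could mean, the sketch below cuts a grayscale image into blocks and computes a 64 x 64 table of correlations between pixel positions. It is only a sketch under stated assumptions, not the technique the researchers used: it works on a random grayscale image rather than color photos, uses non-overlapping blocks, and uses plain Pearson correlation. The helper names `patches_8x8` and `pixel_correlations` are hypothetical.

```python
import math
import random


def patches_8x8(img, size=8):
    """Cut img into non-overlapping size x size blocks, each flattened to a list."""
    h, w = len(img), len(img[0])
    out = []
    for top in range(0, h - size + 1, size):
        for left in range(0, w - size + 1, size):
            out.append([img[top + r][left + c]
                        for r in range(size) for c in range(size)])
    return out


def pixel_correlations(patches):
    """Pearson correlation between every pair of pixel positions across blocks."""
    n, d = len(patches), len(patches[0])
    means = [sum(p[i] for p in patches) / n for i in range(d)]
    centered = [[p[i] - means[i] for i in range(d)] for p in patches]
    cov = [[sum(row[i] * row[j] for row in centered) / n for j in range(d)]
           for i in range(d)]
    corr = []
    for i in range(d):
        corr_row = []
        for j in range(d):
            denom = math.sqrt(cov[i][i] * cov[j][j])
            # Positions with zero variance get correlation 0 by convention here.
            corr_row.append(cov[i][j] / denom if denom > 0 else 0.0)
        corr.append(corr_row)
    return corr


# Assumed test input: a 32x32 image of random gray values (16 blocks of 8x8).
rng = random.Random(0)
image = [[rng.random() for _ in range(32)] for _ in range(32)]

blocks = patches_8x8(image)
corr = pixel_correlations(blocks)
print(len(blocks), "blocks;", len(corr), "x", len(corr[0]), "correlation table")
```

On real photographs, nearby pixel positions tend to be strongly correlated, which is the kind of regularity a block-based model of natural images relies on. The random image here exists only to keep the example self-contained.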

“People have worked on methods for eliminating these reflections from photos, but there had been drawbacks in past approaches,” Yoav Schechner, a professor of electrical engineering at Israel’s Technion, told MIT News. “Some methods attempt using a single shot. This is very hard, so prior results had partial success, and there was no automated way of telling if the recovered scene is the one reflected by the window or the one behind the window. This work does a good job on several fronts.”

“The ideas here can progress into routine photography, if the algorithm is further ‘robustified’ and becomes part of toolboxes used in digital photography,” Schechner adds. “It may help robot vision in the presence of confusing glass reflection.”

The MIT researchers will present the algorithm at the Computer Vision and Pattern Recognition conference in Boston, in June. The research is still in its early stages, but over time, we could see it built into digital cameras, smartphones, or photo software, doing away with unwanted reflections once and for all.