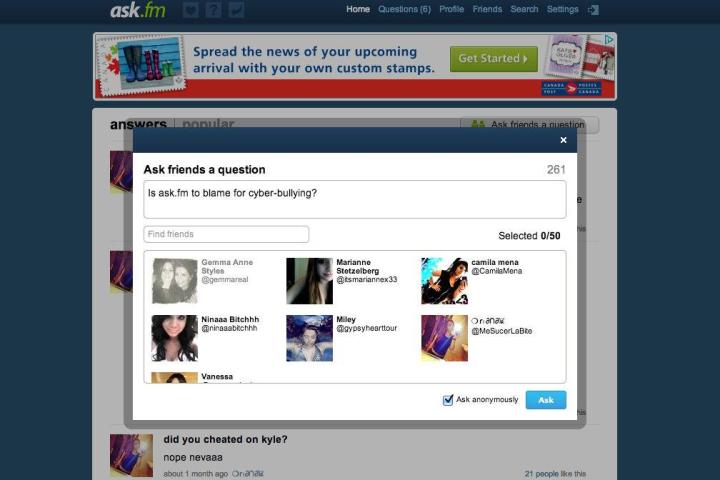

According to a new Wired report, SRI International, the research institute perhaps best known to consumers as the birthplace of Siri, is now working on computer algorithms that can identify cyberbullying as it happens and shut it down far faster than human moderators could. Norman Winarsky, president of SRI Ventures, told Wired, “Social networks are overwhelmed with these kinds of problems, and human curators can’t manage the load.” But maybe technology can.

Given SRI’s extensive background in communication, the institute seems like a natural fit for teaching computers how to flag, stop, and perhaps even prevent bullying and other digital abuse. SRI’s expertise with language has already let it build algorithms that can grade essays on standardized tests based on more than just grammar and rote checkpoints. Now, in addition to learning what good writing looks like, SRI’s machines are also being fed less pleasant material: examples of the forms cyberbullying can take.

Bill Mark, president of SRI’s information and computing sciences group, told Wired, “Bullying is a difficult problem to solve because you have to understand context.” It’s not as simple as flagging curse words and name-calling. A system also has to recognize threats and other hostile sentiments that humans can easily identify as cruel and abusive but that may not be obvious to a computer.

According to Winarsky, at SRI’s current rate of development, an initial testing model could be ready in six months to a year, but a more sophisticated and robust system may take longer to perfect. After all, this AI would need to “understand the meaning behind language, parsing grammar and sentence models, and structure, in addition to machine learning and statistical approaches” in order to identify potential bullying scenarios.

Ultimately, the goal would be to ease the burden currently borne by human content moderators, who sometimes leave work depressed, or worse. So while such a system wouldn’t address the root of the issue, namely the bullying itself, it could at least lessen its effects.